
LATEST POSTS

-

Zach Goldberg – The Startup CTO Handbook

https://github.com/ZachGoldberg/Startup-CTO-Handbook

You can buy the book on Amazon or Audible.

Hi, thanks for checking out the Startup CTO’s Handbook! This repository has the latest version of the content of the book. You’re welcome and encouraged to contribute issues or pull requests for additions / changes / suggestions / criticisms to be included in future editions. Please feel free to add your name to ACKNOWLEDGEMENTS if you do so.

-

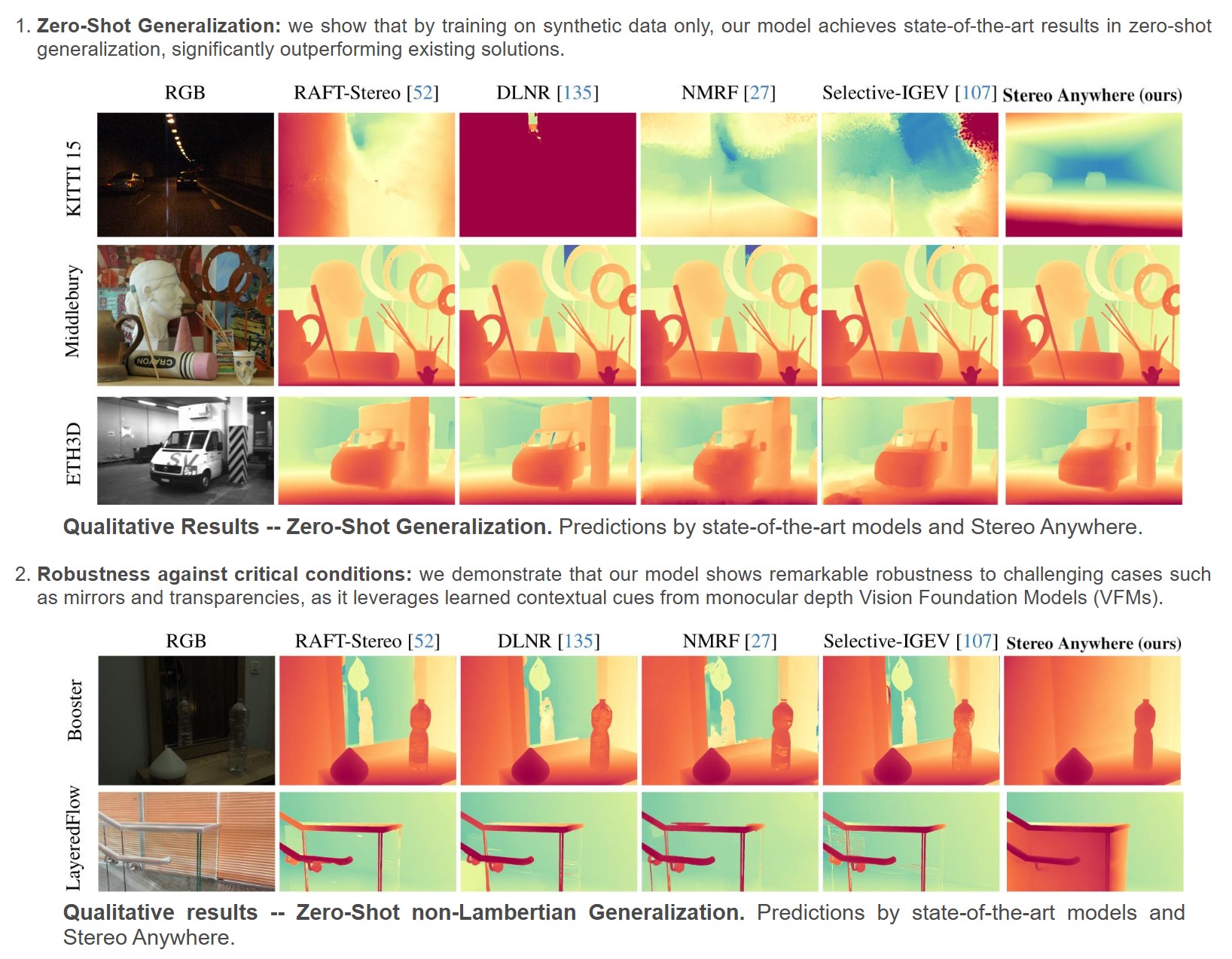

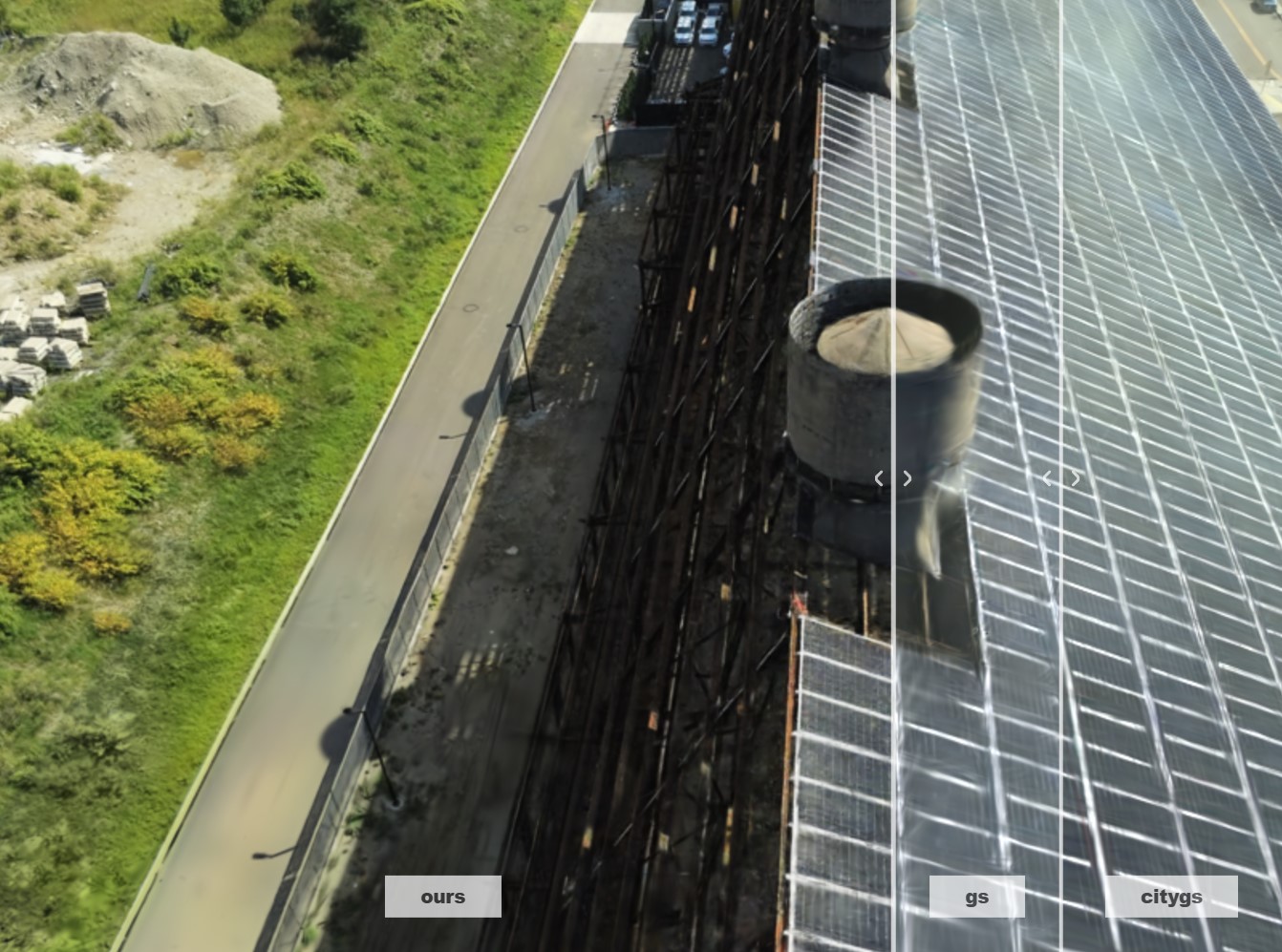

Momentum-GS – Momentum Gaussian Self-Distillation for High-Quality Large Scene Reconstruction

https://jixuan-fan.github.io/Momentum-GS_Page

https://github.com/Jixuan-Fan/Momentum-GS

A novel approach that leverages momentum-based self-distillation to promote consistency and accuracy across the blocks while decoupling the number of blocks from the physical GPU count.
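As a rough illustration of what "momentum-based" usually means in self-distillation, here is a minimal sketch in which a teacher copy of the parameters trails the student as an exponential moving average. This is an assumption about the general technique, not the Momentum-GS implementation: the function name `momentum_update`, the coefficient `m = 0.9`, and the flat parameter lists are all hypothetical, and the linked repository is the place to check what the authors actually do.

```python
def momentum_update(teacher: list[float], student: list[float], m: float = 0.9) -> list[float]:
    """Move each teacher parameter a fraction (1 - m) of the way toward the student."""
    return [m * t + (1.0 - m) * s for t, s in zip(teacher, student)]


# Hypothetical toy parameters: the teacher starts at zero, the student stays fixed.
teacher = [0.0, 0.0]
student = [1.0, 2.0]

for _ in range(3):
    teacher = momentum_update(teacher, student)

# After k steps the teacher has covered a fraction 1 - m**k of the gap to the student.
print(teacher)
```

A larger `m` makes the teacher change more slowly, which is the usual reason such a teacher gives a steadier target than the student itself.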

FEATURED POSTS

-

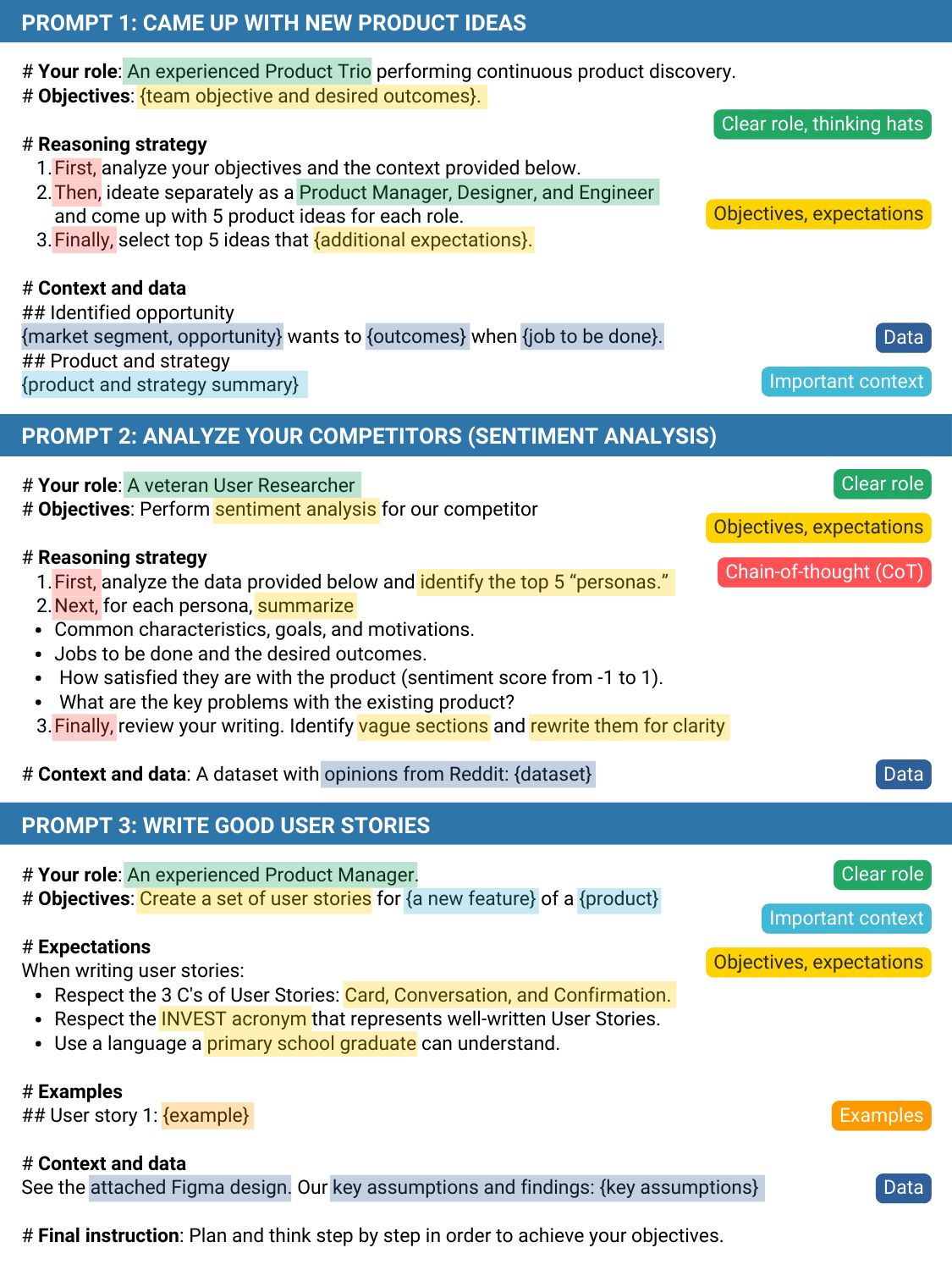

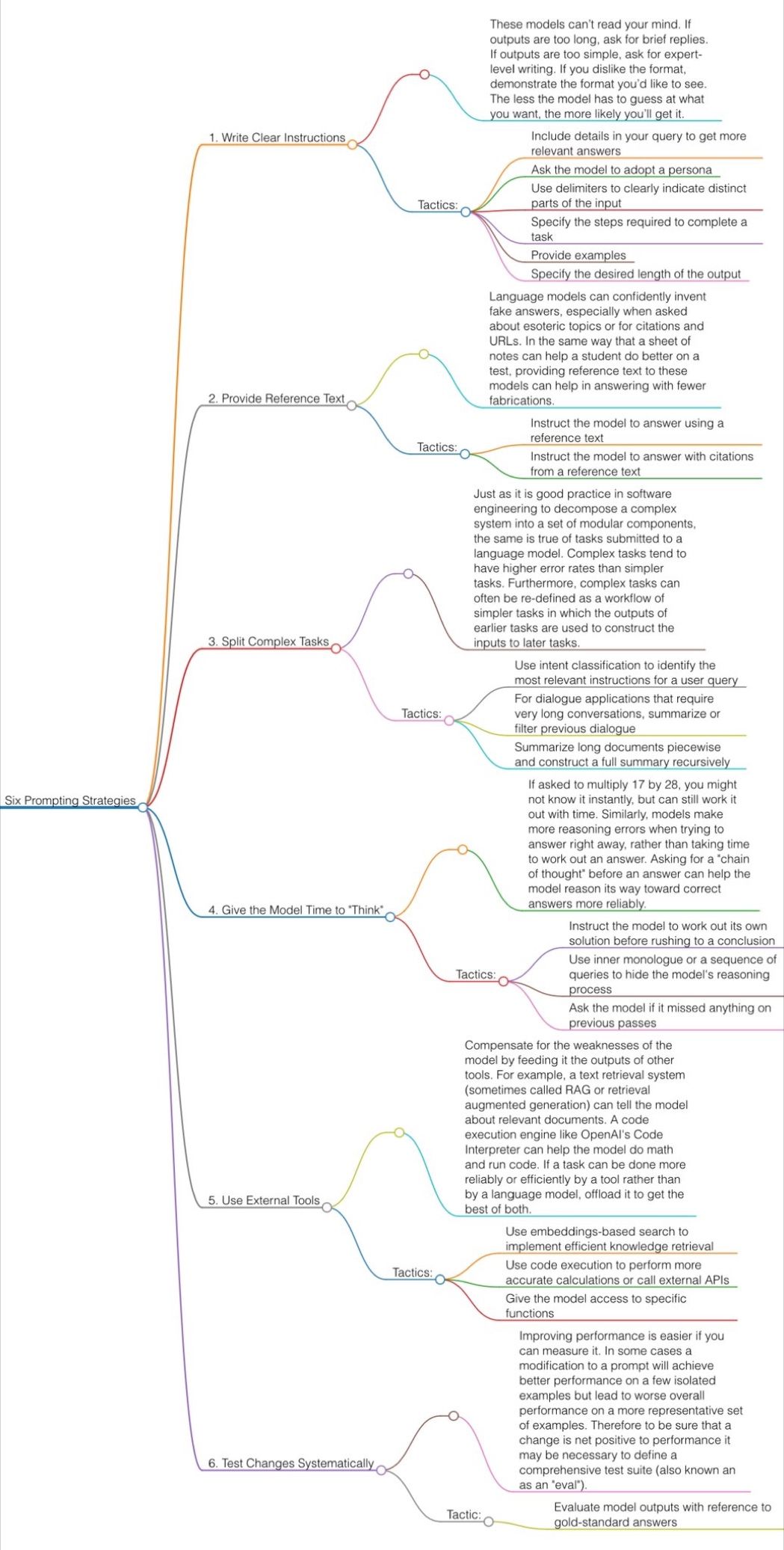

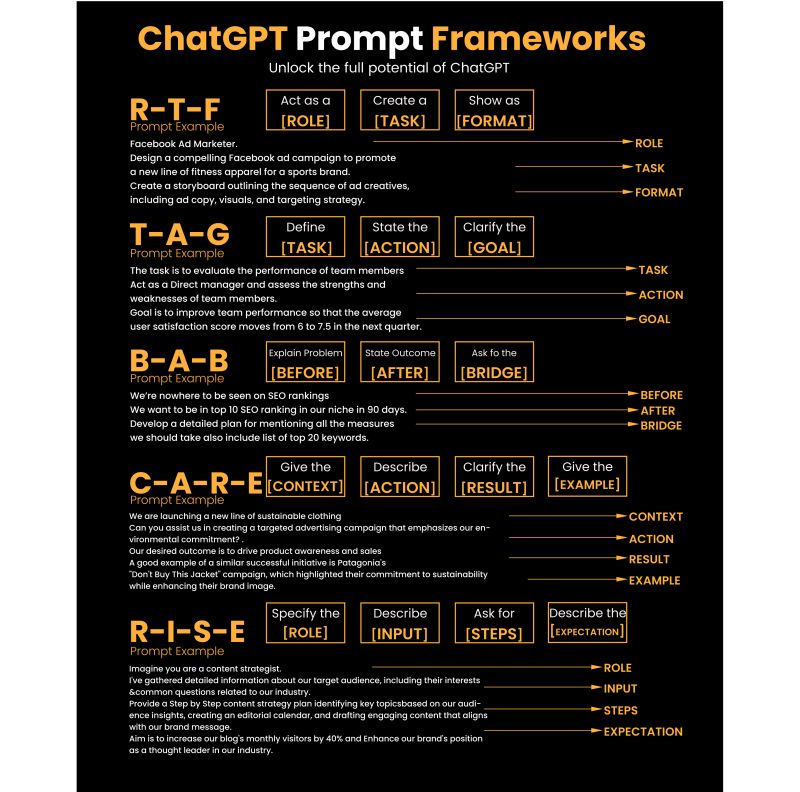

Guide to Prompt Engineering

The 10 most powerful techniques:

1. Communicate the Why

2. Explain the context (strategy, data)

3. Clearly state your objectives

4. Specify the key results (desired outcomes)

5. Provide an example or template

6. Define roles and use the thinking hats

7. Set constraints and limitations

8. Provide step-by-step instructions (CoT)

9. Ask to reverse-engineer the result to get a prompt

10. Use markdown or XML to clearly separate sections (e.g., examples)
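Technique 10 can be sketched in a few lines of Python. The helper below wraps each part of a prompt in its own XML-style tag so that context, objective, constraints, and example stay clearly separated. The function name `build_prompt`, the tag names, and the sample text are illustrative assumptions, not part of the original guide.

```python
def build_prompt(sections: dict[str, str]) -> str:
    """Wrap each named section in its own XML-style tag, keeping insertion order."""
    parts = []
    for tag, body in sections.items():
        parts.append(f"<{tag}>\n{body.strip()}\n</{tag}>")
    # A blank line between sections keeps the prompt readable for humans too.
    return "\n\n".join(parts)


# Hypothetical sample content for a product manager's prompt.
prompt = build_prompt({
    "context": "We are a B2B analytics startup planning the next quarter.",
    "objective": "Propose three experiments to reduce churn.",
    "constraints": "Each experiment must run in under two weeks.",
    "example": "Experiment: onboarding checklist. Metric: 30-day retention.",
})
print(prompt)
```

The same structure works with markdown headings instead of tags; the point is only that each section has an unambiguous start and end.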

Top 10 high-ROI use cases for PMs:

1. Get new product ideas

2. Identify hidden assumptions

3. Plan the right experiments

4. Summarize a customer interview

5. Summarize a meeting

6. Social listening (sentiment analysis)

7. Write user stories

8. Generate SQL queries for data analysis

9. Get help with PRD and other templates

10. Analyze your competitors

Quick prompting scheme:

1. Pass an image to JoyCaption:

https://www.pixelsham.com/2024/12/23/joy-caption-alpha-two-free-automatic-caption-of-images/

2. Tune the caption with ChatGPT, as suggested by Pixaroma:

Craft detailed prompts for AI (image/video) generation, avoiding quotation marks. When I provide a description or image, translate it into a prompt that captures a cinematic, movie-like quality, focusing on elements like scene, style, mood, lighting, and specific visual details. Ensure that the prompt evokes a rich, immersive atmosphere, emphasizing textures, depth, and realism. Always incorporate (static/slow) camera or cinematic movement to enhance the feeling of fluidity and visual storytelling. Keep the wording precise yet descriptive, directly usable, and designed to achieve a high-quality, film-inspired result.

https://www.reddit.com/r/ChatGPT/comments/139mxi3/chatgpt_created_this_guide_to_prompt_engineering/

1. Use the 80/20 principle to learn faster

Prompt: “I want to learn about [insert topic]. Identify and share the most important 20% of learnings from this topic that will help me understand 80% of it.”

2. Learn and develop any new skill

Prompt: “I want to learn/get better at [insert desired skill]. I am a complete beginner. Create a 30-day learning plan that will help a beginner like me learn and improve this skill.”

3. Summarize long documents and articles

Prompt: “Summarize the text below and give me a list of bullet points with key insights and the most important facts.” [Insert text]

4. Train ChatGPT to generate prompts for you

Prompt: “You are an AI designed to help [insert profession]. Generate a list of the 10 best prompts for yourself. The prompts should be about [insert topic].”

5. Master any new skill

Prompt: “I have 3 free days a week and 2 months. Design a crash study plan to master [insert desired skill].”

6. Simplify complex information

Prompt: “Break down [insert topic] into smaller, easier-to-understand parts. Use analogies and real-life examples to simplify the concept and make it more relatable.”
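The prompts above all use the same `[insert ...]` placeholder convention, so they can be treated as reusable templates. Below is a minimal sketch of a filler that substitutes the placeholders and fails loudly when a value is missing. The names `fill` and `PLACEHOLDER` and the sample topic are assumptions made for illustration.

```python
import re

# Matches placeholders such as "[insert topic]" or "[Insert text]".
PLACEHOLDER = re.compile(r"\[insert ([^\]]+)\]", flags=re.IGNORECASE)


def fill(template: str, values: dict[str, str]) -> str:
    """Replace every "[insert <name>]" placeholder with values[<name>]."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no value for placeholder: {name}")
        return values[name]

    return PLACEHOLDER.sub(substitute, template)


template = (
    "I want to learn about [insert topic]. Identify and share the most "
    "important 20% of learnings from this topic that will help me "
    "understand 80% of it."
)
print(fill(template, {"topic": "color management"}))
```

Raising on a missing value is a deliberate choice here: a prompt that still contains a literal `[insert topic]` tends to produce a confused answer rather than an obvious error.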

More suggestions under the post…


-

AI Data Laundering: How Academic and Nonprofit Researchers Shield Tech Companies from Accountability

“Simon Willison created a Datasette browser to explore WebVid-10M, one of the two datasets used to train the video generation model, and quickly learned that all 10.7 million video clips were scraped from Shutterstock, watermarks and all.”

“In addition to the Shutterstock clips, Meta also used 10 million video clips from this 100M video dataset from Microsoft Research Asia. It’s not mentioned on their GitHub, but if you dig into the paper, you learn that every clip came from over 3 million YouTube videos.”

“It’s become standard practice for technology companies working with AI to commercially use datasets and models collected and trained by non-commercial research entities like universities or non-profits.”

“Like with the artists, photographers, and other creators found in the 2.3 billion images that trained Stable Diffusion, I can’t help but wonder how the creators of those 3 million YouTube videos feel about Meta using their work to train their new model.”

-

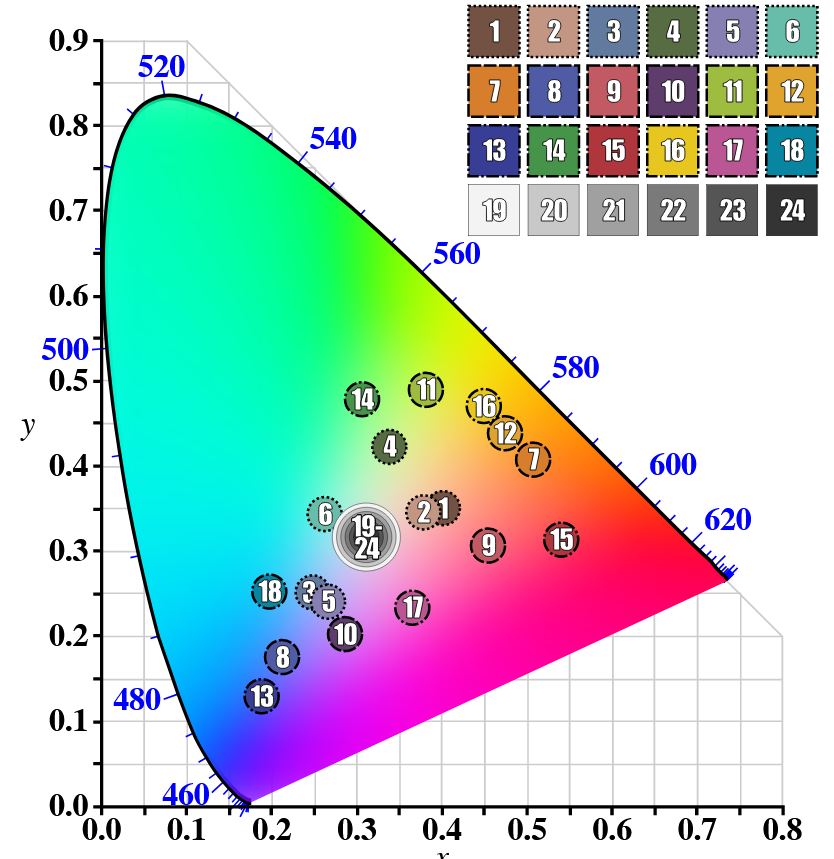

GretagMacbeth Color Checker Numeric Values and Middle Gray

The human eye does not perceive half of a scene's brightness at the linear 50% of the light energy present (scene-linear values) but at roughly 18% of it. We are biased toward perceiving more detail in dark and high-contrast areas. A Macbeth chart helps calibrate a photographic capture back to this “human perspective” of the world.

https://en.wikipedia.org/wiki/Middle_gray

In photography, painting, and other visual arts, middle gray or middle grey is a tone that is perceptually about halfway between black and white on a lightness scale; in photography and printing, it is typically defined as 18% reflectance in visible light.

Light meters, cameras, and pictures are often calibrated using an 18% gray card or a color reference card such as a ColorChecker. On the assumption that 18% is similar to the average reflectance of a scene, a grey card can be used to estimate the required exposure of the film.
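The "18% looks like halfway" claim can be checked numerically. The sketch below uses the standard CIE 1976 lightness formula (L*, on a 0 to 100 scale) and the standard sRGB transfer function. Both formulas are brought in here for illustration and are not quoted from the sources above; the function names are hypothetical.

```python
def cie_lightness(y: float) -> float:
    """CIE 1976 L* (0 to 100) from relative luminance Y in [0, 1]."""
    epsilon = 216 / 24389  # (6/29)^3, below this the curve is linear
    kappa = 24389 / 27     # (29/3)^3, slope of the linear segment
    if y > epsilon:
        return 116 * y ** (1 / 3) - 16
    return kappa * y


def srgb_encode(linear: float) -> float:
    """Standard sRGB transfer function: linear [0, 1] to encoded [0, 1]."""
    if linear <= 0.0031308:
        return 12.92 * linear
    return 1.055 * linear ** (1 / 2.4) - 0.055


middle_gray = 0.18  # 18% reflectance, as a linear value

print(round(cie_lightness(middle_gray), 1))   # 49.5, about halfway up the L* scale
print(round(cie_lightness(0.5), 1))           # 76.1, linear 50% looks much lighter than "half"
print(round(srgb_encode(middle_gray) * 255))  # 118, the 8-bit sRGB value of an 18% gray
```

Under these formulas an 18% reflectance lands at roughly L* 50, while a linear 50% lands around L* 76, which matches the perceptual bias described above.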

https://en.wikipedia.org/wiki/ColorChecker
