https://www.fxguide.com/quicktakes/diffusing-reality-how-nvidia-reimagined-relighting/

https://research.nvidia.com/labs/toronto-ai/DiffusionRenderer/

3Dprinting (178) A.I. (833) animation (348) blender (206) colour (233) commercials (52) composition (152) cool (361) design (646) Featured (79) hardware (311) IOS (109) jokes (138) lighting (288) modeling (144) music (186) photogrammetry (189) photography (754) production (1287) python (91) quotes (496) reference (314) software (1350) trailers (305) ves (549) VR (221)

https://civitai.com/models/735980/flux-equirectangular-360-panorama

https://civitai.com/models/745010?modelVersionId=833115

The trigger phrase is “equirectangular 360 degree panorama”. I would avoid saying “spherical projection” since that tends to result in non-equirectangular spherical images.

Image resolution should always be a 2:1 aspect ratio. 1024 x 512 or 1408 x 704 work quite well and were used in the training data. 2048 x 1024 also works.

I suggest using a weight of 0.5 – 1.5. If you are having issues with the image generating too flat instead of having the necessary spherical distortion, try increasing the weight above 1, though this could negatively impact small details of the image. For Flux guidance, I recommend a value of about 2.5 for realistic scenes.

8-bit output at the moment

Bella works in spectral space, allowing effects such as BSDF wavelength dependency, diffraction, or atmosphere to be modeled far more accurately than in color space.

https://superrendersfarm.com/blog/uncategorized/bella-a-new-spectral-physically-based-renderer/

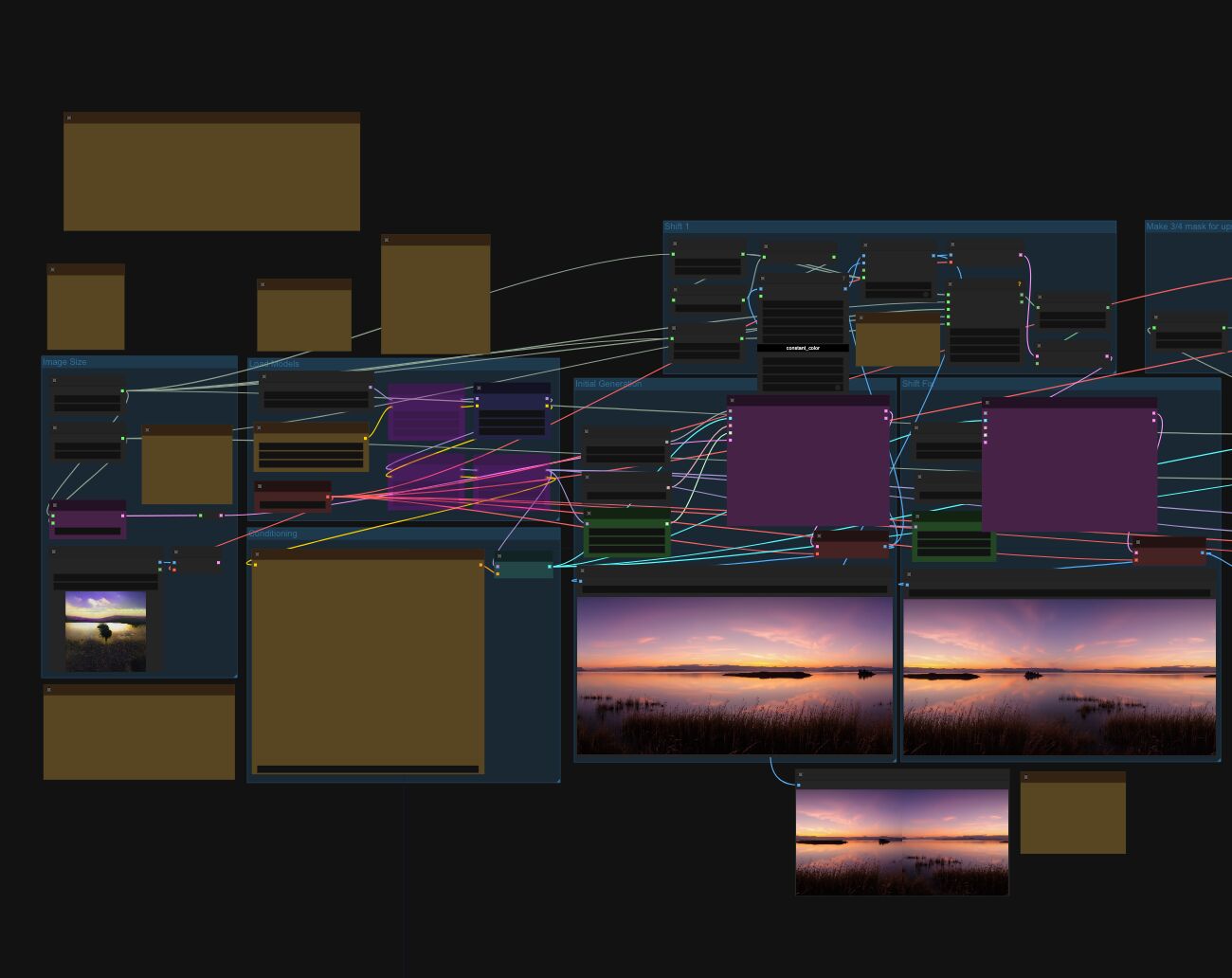

https://github.com/jedypod/debayer

The only required dependency is oiiotool. However other “debayer engines” are also supported.

Additions:

COLLECTIONS

| Featured AI

| Design And Composition

| Explore posts

POPULAR SEARCHES

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.