COMPOSITION

DESIGN

COLOR

-

OpenColorIO standard

https://www.provideocoalition.com/color-management-part-11-introducing-opencolorio/

OpenColorIO (OCIO) is a new open source project from Sony Imageworks.

Based on development started in 2003, OCIO enables color transforms and image display to be handled in a consistent manner across multiple graphics applications. Unlike other color management solutions, OCIO is geared towards motion-picture post production, with an emphasis on visual effects and animation color pipelines.

-

Paul Debevec, Chloe LeGendre, Lukas Lepicovsky – Jointly Optimizing Color Rendition and In-Camera Backgrounds in an RGB Virtual Production Stage

https://arxiv.org/pdf/2205.12403.pdf

RGB LEDs vs RGBWP (RGB + lime + phosphor-converted amber) LEDs


-

Scene Referred vs Display Referred color workflows

Display Referred is tied to the target hardware, so it bakes color requirements into every type of media output request.

Scene Referred instead uses a common, unified wide gamut and targets each audience through CDL and DI libraries.

That way the color information stays untouched and is only “transformed” as and when needed.

Sources:

– Victor Perez – Color Management Fundamentals & ACES Workflows in Nuke

– https://z-fx.nl/ColorspACES.pdf

– Wicus
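As a minimal sketch of the scene-referred idea, assuming Python and scene-linear RGB triplets: the scene values are kept untouched, a CDL-style grade is applied as a separate step, and the display encoding (here the standard sRGB transfer function) is applied only at output time. The function names and the sample grade values are illustrative assumptions, not taken from any of the sources listed.

```python
def apply_cdl(rgb, slope=(1.0, 1.0, 1.0), offset=(0.0, 0.0, 0.0), power=(1.0, 1.0, 1.0)):
    """ASC CDL style grade per channel: (in * slope + offset) ** power.

    Negative intermediate values are clamped to 0 before the power is applied.
    """
    return tuple(
        max(c * s + o, 0.0) ** p
        for c, s, o, p in zip(rgb, slope, offset, power)
    )


def srgb_encode(c):
    """sRGB transfer function for one linear channel, clipped to the 0..1 display range."""
    c = min(max(c, 0.0), 1.0)
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1.0 / 2.4) - 0.055


def to_display(rgb):
    """Display transform, applied only when an image is shown or delivered."""
    return tuple(srgb_encode(c) for c in rgb)


# Scene-referred pixel (18% middle gray); it is never overwritten.
scene_pixel = (0.18, 0.18, 0.18)

# Hypothetical creative grade, then the display transform for an sRGB monitor.
graded = apply_cdl(scene_pixel, slope=(1.2, 1.0, 0.9))
display_pixel = to_display(graded)

print(scene_pixel, graded, display_pixel)
```

A different deliverable would swap `to_display` for another output transform while `scene_pixel` and the grade stay the same.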

-

FXGuide – ACES 2.0 with ILM’s Alex Fry

https://draftdocs.acescentral.com/background/whats-new/

ACES 2.0 is the second major release of the components that make up the ACES system. The most significant change is a new suite of rendering transforms whose design was informed by collected feedback and requests from users of ACES 1. The changes aim to improve the appearance of perceived artifacts and to complete previously unfinished components of the system, resulting in a more complete, robust, and consistent product.

Highlights of the key changes in ACES 2.0 are as follows:

- New output transforms, including:

  - A less aggressive tone scale

  - More intuitive controls to create custom outputs to non-standard displays

  - Robust gamut mapping to improve perceptual uniformity

  - Improved performance of the inverse transforms

- Enhanced AMF specification

- An updated specification for ACES Transform IDs

- OpenEXR compression recommendations

- Enhanced tools for generating Input Transforms and recommended procedures for characterizing prosumer cameras

- Look Transform Library

- Expanded documentation

Rendering Transform

The most substantial change in ACES 2.0 is a complete redesign of the rendering transform.

ACES 2.0 was built as a unified system, rather than through piecemeal additions. Different deliverable outputs “match” better and making outputs to display setups other than the provided presets is intended to be user-driven. The rendering transforms are less likely to produce undesirable artifacts “out of the box”, which means less time can be spent fixing problematic images and more time making pictures look the way you want.

Key design goals

- Improve consistency of tone scale and provide an easy to use parameter to allow for outputs between preset dynamic ranges

- Minimize hue skews across exposure range in a region of same hue

- Unify for structural consistency across transform type

- Easy to use parameters to create outputs other than the presets

- Robust gamut mapping to improve harsh clipping artifacts

- Fill extents of output code value cube (where appropriate and expected)

- Invertible – not necessarily reversible, but Output > ACES > Output round-trip should be possible

- Accomplish all of the above while maintaining an acceptable “out-of-the box” rendering


-

mmColorTarget – Nuke Gizmo for color matching a MacBeth chart

https://www.marcomeyer-vfx.de/posts/2014-04-11-mmcolortarget-nuke-gizmo/

https://www.marcomeyer-vfx.de/posts/mmcolortarget-nuke-gizmo/

https://vimeo.com/91652466

https://www.nukepedia.com/gizmos/colour/mmcolortarget

-

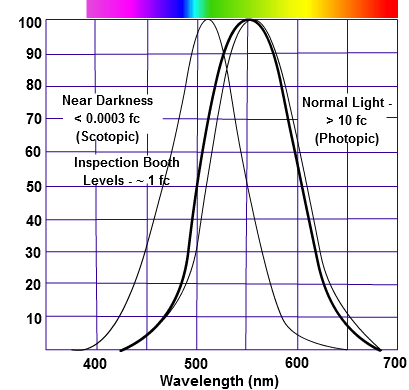

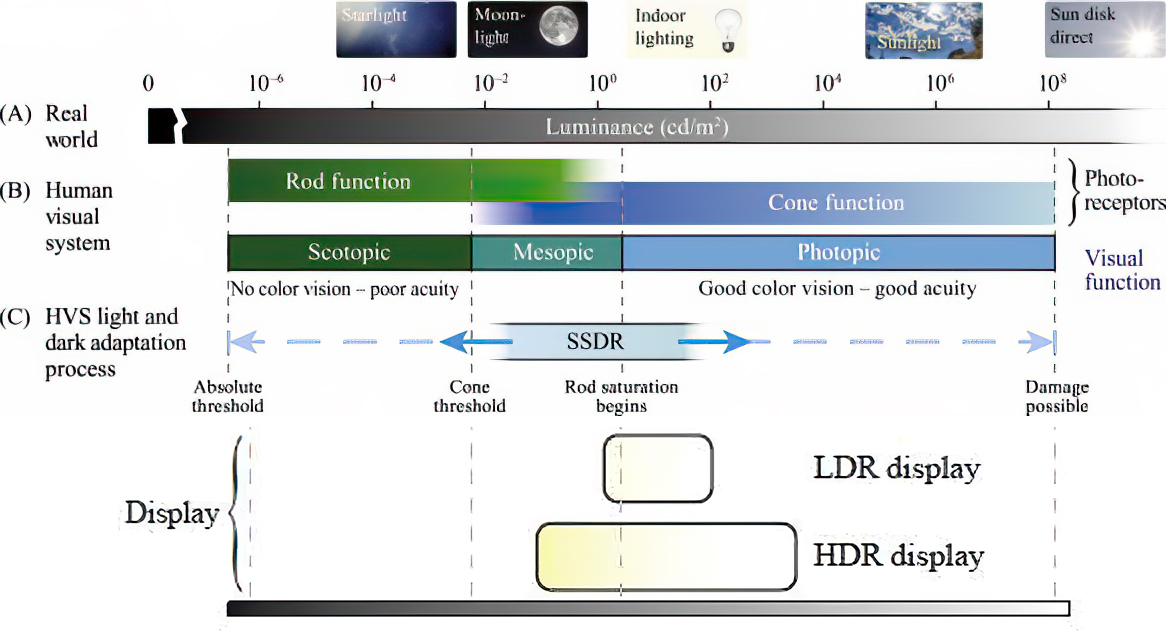

Sensitivity of human eye

http://www.wikilectures.eu/index.php/Spectral_sensitivity_of_the_human_eye

http://www.normankoren.com/Human_spectral_sensitivity_small.jpg

The spectral sensitivity of the eye is influenced by light intensity, which determines the level of activity of the cone cells and rod cells. This is the main characteristic of human vision. Sensitivity to individual colors, in other words to wavelengths of the light spectrum, is explained by the RGB (red-green-blue) theory. This theory assumes that there are three kinds of cones, selectively sensitive to red (700-630 nm), green (560-500 nm), and blue (490-450 nm) light. Their mutual interaction allows us to perceive all colors of the spectrum.

http://weeklysciencequiz.blogspot.com/2013/01/violet-skies-are-for-birds.html

The sensitivity of the human eye to light increases as light intensity decreases. In daylight conditions the cone cells respond, and the eye is most sensitive at 555 nm. In darkness the rod cells respond, and the eye is most sensitive at 507 nm.

As light intensity decreases, vision shifts from the cones to the rods. Lower light levels allow rhodopsin to accumulate, which activates the rods and lets them respond to light stimuli of much lower intensity.

The three curves in the figure above show the normalized response of an average human eye to various amounts of ambient light. The shift in sensitivity occurs because two types of photoreceptors called cones and rods are responsible for the eye’s response to light. The curve on the right shows the eye’s response under normal lighting conditions and this is called the photopic response. The cones respond to light under these conditions.

As mentioned previously, cones are composed of three different photo pigments that enable color perception. This curve peaks at 555 nanometers, which means that under normal lighting conditions, the eye is most sensitive to a yellowish-green color. When the light levels drop to near total darkness, the response of the eye changes significantly as shown by the scotopic response curve on the left. At this level of light, the rods are most active and the human eye is more sensitive to the light present, and less sensitive to the range of color. Rods are highly sensitive to light but are comprised of a single photo pigment, which accounts for the loss in ability to discriminate color. At this very low light level, sensitivity to blue, violet, and ultraviolet is increased, but sensitivity to yellow and red is reduced. The heavier curve in the middle represents the eye’s response at the ambient light level found in a typical inspection booth. This curve peaks at 550 nanometers, which means the eye is most sensitive to yellowish-green color at this light level. Fluorescent penetrant inspection materials are designed to fluoresce at around 550 nanometers to produce optimal sensitivity under dim lighting conditions.

-

Photography basics: Lumens vs Candelas (candle) vs Lux vs FootCandle vs Watts vs Irradiance vs Illuminance

https://www.translatorscafe.com/unit-converter/en-US/illumination/1-11/

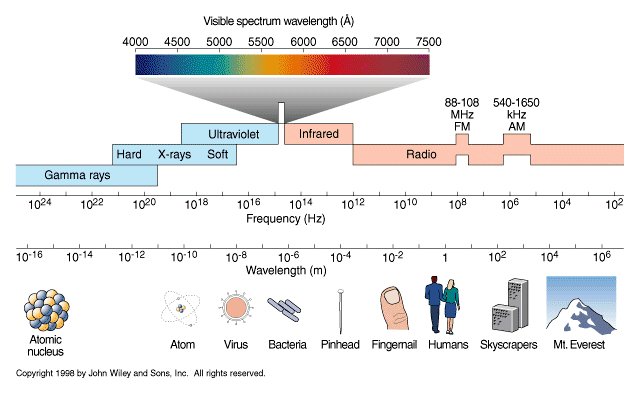

The power of a light source is measured in watts (W). This is a direct measure of how much power the light is going to drain from your socket, and it does not relate directly to the brightness of the light itself.

Radiant power is the amount of energy the source emits per second. That energy comes out in the form of photons, which we can crudely represent as rays of light coming out of the source. The higher the power, the more rays are emitted from the source per unit of time.

Not all of the energy emitted is visible to the human eye, so we often rely on photometric measurements, which take into account the sensitivity of the human eye to different wavelengths.
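The relationships between the units named in the title can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the source is treated as an ideal point light that radiates equally in all directions, and the watts-to-lumens helper applies only to radiant (not electrical) power at 555 nm, where the eye's photopic response peaks. The function names are hypothetical.

```python
import math

LUX_PER_FOOTCANDLE = 10.7639     # 1 footcandle = 1 lumen per square foot
PEAK_EFFICACY_LM_PER_W = 683.0   # lumens per radiant watt at 555 nm


def lumens_from_watts_555nm(radiant_watts):
    """Luminous flux of monochromatic 555 nm light (radiant watts, not wall-socket watts)."""
    return PEAK_EFFICACY_LM_PER_W * radiant_watts


def candelas_from_lumens_isotropic(lumens):
    """Luminous intensity of a point source spreading its flux over the full sphere (4*pi sr)."""
    return lumens / (4.0 * math.pi)


def lux_at_distance(candelas, distance_m):
    """Illuminance on a surface facing the source, using the inverse square law."""
    return candelas / distance_m ** 2


def footcandles_from_lux(lux):
    """Convert lumens per square metre to lumens per square foot."""
    return lux / LUX_PER_FOOTCANDLE


flux = 800.0  # lumens, an assumed value for a household bulb
intensity = candelas_from_lumens_isotropic(flux)
illuminance = lux_at_distance(intensity, 2.0)
print(intensity, illuminance, footcandles_from_lux(illuminance))
```

Real fixtures are not isotropic, which is why measured distributions such as IES profiles exist.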


LIGHTING

-

IES Light Profiles and editing software

http://www.derekjenson.com/3d-blog/ies-light-profiles

https://ieslibrary.com/en/browse#ies

https://leomoon.com/store/shaders/ies-lights-pack

https://docs.arnoldrenderer.com/display/a5afmug/ai+photometric+light

IES profiles are useful for creating life-like lighting, as they can represent the physical distribution of light from any light source.

The IES format was created by the Illuminating Engineering Society, and most lighting manufacturers provide IES profiles for the lights they manufacture.

-

Lighting Every Darkness with 3DGS: Fast Training and Real-Time Rendering and Denoising for HDR View Synthesis

https://srameo.github.io/projects/le3d/

LE3D is a method for real-time HDR view synthesis from RAW images. It is particularly effective for nighttime scenes.

https://github.com/Srameo/LE3D

-

Photography basics: Solid Angle measures

http://www.calculator.org/property.aspx?name=solid+angle

A solid angle is a measure of how large an object appears to an observer looking from a given point. It is therefore a natural measure for objects in the sky, useful for returning the size of the sun and moon and, in perspective, how much they contribute to lighting. A solid angle can also be expressed as an ‘angular diameter’.

http://en.wikipedia.org/wiki/Solid_angle

http://www.mathsisfun.com/geometry/steradian.html

A solid angle is expressed in a dimensionless unit called a steradian (symbol: sr). As a fraction of the total celestial sphere, and before atmospheric scattering, the Sun and the Moon subtend areas of 0.000546% (Sun) and 0.000531% (Moon).

http://en.wikipedia.org/wiki/Solid_angle#Sun_and_Moon

On Earth the sun is likely closer to 0.00011 sr after atmospheric scattering. The sun as perceived from Earth has an angular diameter of 0.53 degrees, which is about 0.000067 sr.

http://www.numericana.com/answer/angles.htm

The mean angular diameter of the full moon is 2θ = 0.52° (it varies with time around that average, by about 0.009°). This translates into a solid angle of 0.0000647 sr, which means that the whole night sky covers a solid angle roughly one hundred thousand times greater than the full moon.
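The conversion from angular diameter to solid angle quoted above can be checked with the spherical cap formula, Ω = 2π(1 − cos(θ/2)), where θ is the full angular diameter. The sketch below assumes the object is a perfect disc and treats the night sky as one hemisphere (2π sr); the function name is illustrative.

```python
import math


def solid_angle_from_angular_diameter(diameter_deg):
    """Solid angle in steradians of a disc with the given angular diameter in degrees."""
    half_angle = math.radians(diameter_deg) / 2.0
    return 2.0 * math.pi * (1.0 - math.cos(half_angle))


moon_sr = solid_angle_from_angular_diameter(0.52)

# Fraction of the full celestial sphere (4*pi sr) covered by the moon.
sphere_fraction = moon_sr / (4.0 * math.pi)

# How many full moons fit in the visible hemisphere of the sky (2*pi sr).
hemisphere_ratio = (2.0 * math.pi) / moon_sr

print(f"moon: {moon_sr:.3e} sr")
print(f"fraction of sphere: {sphere_fraction:.3e}")
print(f"hemisphere / moon: {hemisphere_ratio:,.0f}")
```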

More info

http://lcogt.net/spacebook/using-angles-describe-positions-and-apparent-sizes-objects

http://amazing-space.stsci.edu/glossary/def.php.s=topic_astronomy

Angular Size

The apparent size of an object as seen by an observer; expressed in units of degrees (of arc), arc minutes, or arc seconds. The moon, as viewed from the Earth, has an angular diameter of one-half a degree.

The angle covered by the diameter of the full moon is about 31 arcmin or 1/2°, so astronomers would say the Moon’s angular diameter is 31 arcmin, or the Moon subtends an angle of 31 arcmin.

-

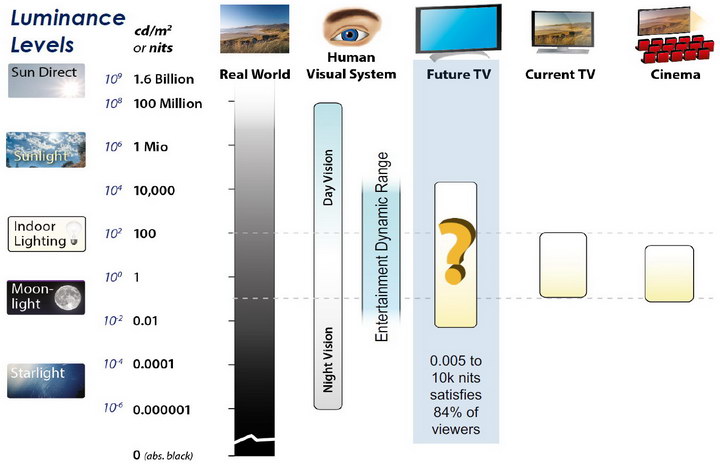

Rec-2020 – TVs new color gamut standard used by Dolby Vision?

https://www.hdrsoft.com/resources/dri.html#bit-depth

The dynamic range is a ratio between the maximum and minimum values of a physical measurement. Its definition depends on what the dynamic range refers to.

For a scene: Dynamic range is the ratio between the brightest and darkest parts of the scene.

For a camera: Dynamic range is the ratio of saturation to noise. More specifically, the ratio of the intensity that just saturates the camera to the intensity that just lifts the camera response one standard deviation above camera noise.

For a display: Dynamic range is the ratio between the maximum and minimum intensities emitted from the screen.

The Dynamic Range of real-world scenes can be quite high: ratios of 100,000:1 are common in the natural world. An HDR (High Dynamic Range) image stores pixel values that span the whole tonal range of real-world scenes. Therefore, an HDR image is encoded in a format that allows the largest range of values, e.g. floating-point values stored with 32 bits per color channel. Another characteristic of an HDR image is that it stores linear values. This means that the value of a pixel from an HDR image is proportional to the amount of light measured by the camera.

For TVs HDR is great, but it’s not the only new TV feature worth discussing.

Wide color gamut, or WCG, is often lumped in with HDR. While they’re often found together, they’re not intrinsically linked. Where HDR is an increase in the dynamic range of the picture (with contrast and brighter highlights in particular), a TV’s wide color gamut coverage refers to how much of the new, larger color gamuts a TV can display.

Wide color gamuts only really matter for HDR video sources like UHD Blu-rays and some streaming video, as only HDR sources are meant to take advantage of the ability to display more colors.

www.cnet.com/how-to/what-is-wide-color-gamut-wcg/

Color depth is only one aspect of color representation, expressing the precision with which the amount of each primary can be expressed through a pixel; the other aspect is how broad a range of colors can be expressed (the gamut)

Image rendering bit depth

Wide color gamuts include a greater number of colors than what most current TVs can display, so the greater a TV’s coverage of a wide color gamut, the more colors a TV will be able to reproduce.

When we talk about a color space or color gamut we refer to the range of color values stored in an image. Perceiving these colors also requires a display that has been tuned to resolve the color profile as well as it can. This tuning is often referred to as a ‘viewer LUT’.

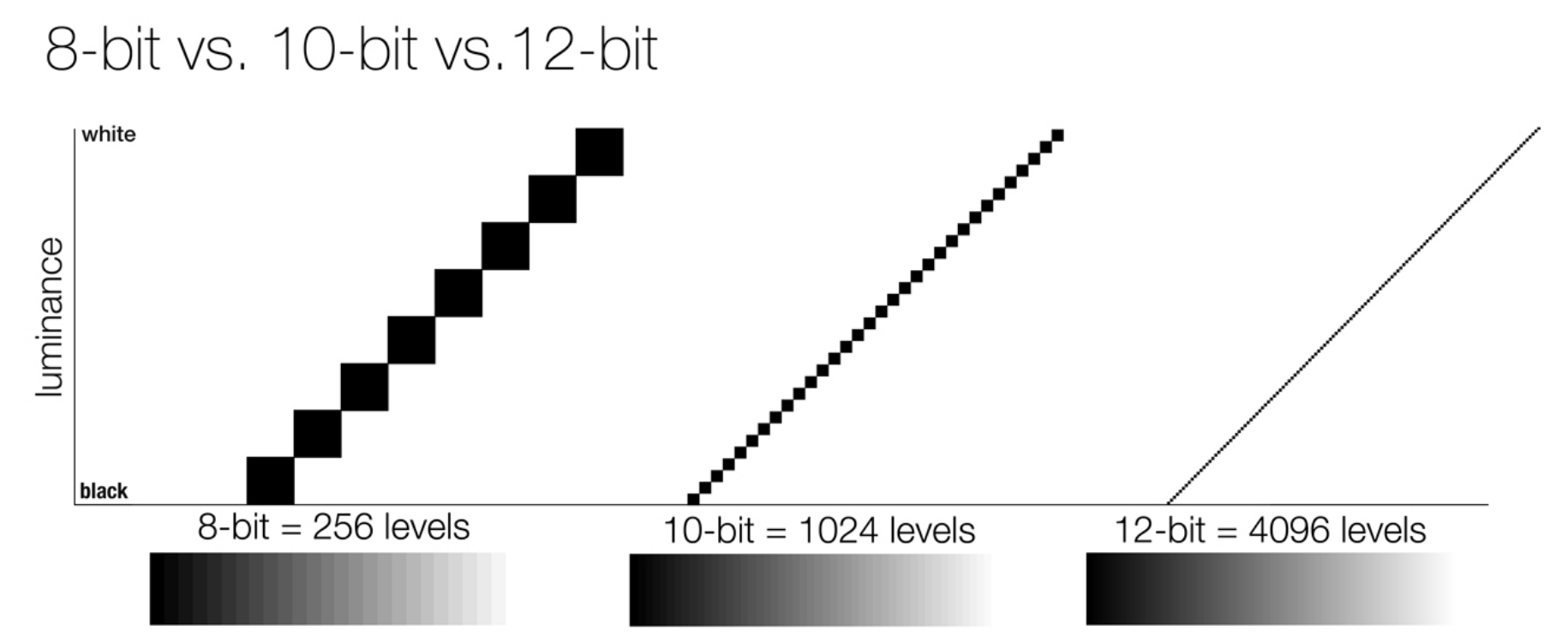

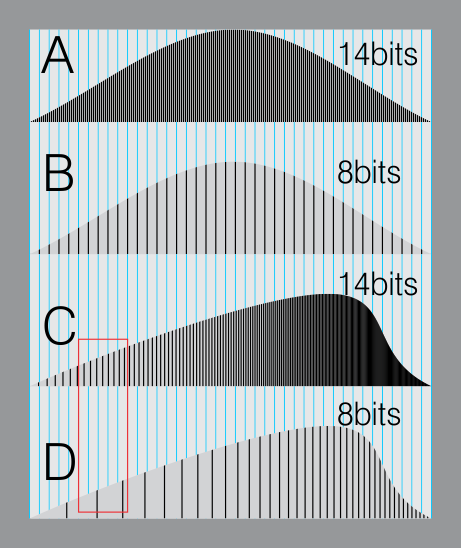

This usually also comes paired with an increase in bit depth, going from the old 8-bit system (256 shades per color, for over 16.7 million colors: 256 green x 256 blue x 256 red) to 10-bit (1,024 shades per color, for over a billion colors) or higher, like 12-bit (4,096 shades per color, for over 68 billion colors).
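The shade and color counts quoted above follow directly from powers of two: an integer channel with n bits holds 2^n levels, and three channels give (2^n)^3 combinations. A short Python sketch, with illustrative function names:

```python
def shades_per_channel(bits):
    """Number of distinct integer levels a single channel can hold."""
    return 2 ** bits


def total_colors(bits, channels=3):
    """Number of distinct RGB combinations at the given bits per channel."""
    return shades_per_channel(bits) ** channels


for bits in (8, 10, 12):
    print(f"{bits}-bit: {shades_per_channel(bits):,} shades, {total_colors(bits):,} colors")
```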

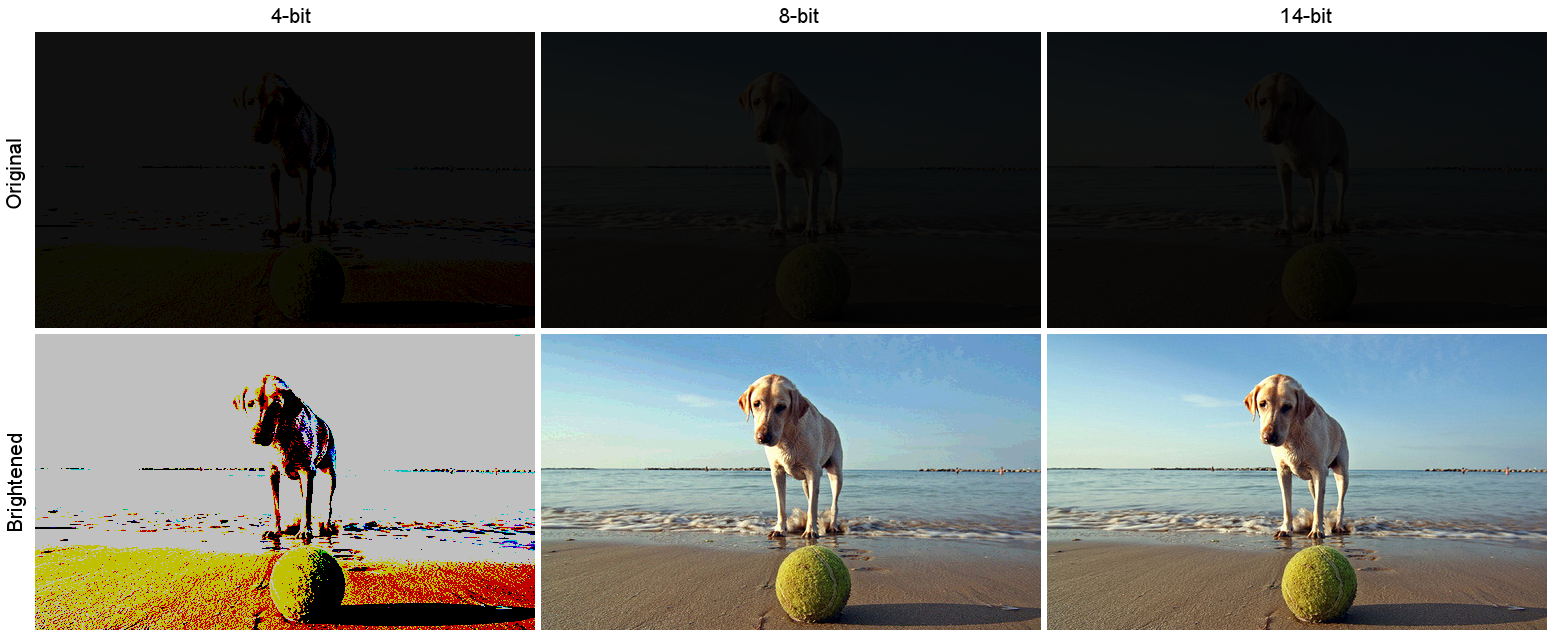

The advantage of higher bit depth is in the ability to bias color with the minimum loss.

For an extreme example, raising the brightness from a completely dark image allows for better reproduction, independently on the reproduction medium, due to the amount of data available at editing time:

For reference, 8-bit images (i.e. 24 bits per pixel for a color image) are considered Low Dynamic Range.

They can store around 5 stops of light, and each pixel carries a value from 0 (black) to 255 (white) per channel.

As a comparison, DSLR cameras can capture ~12-15 stops of light and they use RAW files to store the information.

https://www.cambridgeincolour.com/tutorials/dynamic-range.htm

https://www.hdrsoft.com/resources/dri.html#bit-depth

Note that the number of bits itself may be a misleading indication of the real dynamic range that the image reproduces — converting a Low Dynamic Range image to a higher bit depth does not change its dynamic range, of course.

- 8-bit images (i.e. 24 bits per pixel for a color image) are considered Low Dynamic Range.

- 16-bit images (i.e. 48 bits per pixel for a color image) resulting from RAW conversion are still considered Low Dynamic Range, even though the range of values they can encode is significantly higher than for 8-bit images (65536 versus 256). Note that converting a RAW file involves applying a tonal curve that compresses the dynamic range of the RAW data so that the converted image shows correctly on low dynamic range monitors. The need to adapt the output image file to the dynamic range of the display is the factor that dictates how much the dynamic range is compressed, not the output bit-depth. By using 16 instead of 8 bits, you will gain precision but you will not gain dynamic range.

- 32-bit images (i.e. 96 bits per pixel for a color image) are considered High Dynamic Range. Unlike 8- and 16-bit images, which can take a finite number of values, 32-bit images are coded using floating point numbers, which means the range of values they can take is effectively unlimited. It is important to note, though, that storing an image in a 32-bit HDR format is a necessary condition for an HDR image but not a sufficient one. When an image comes from a single capture with a standard camera, it will remain a Low Dynamic Range image.

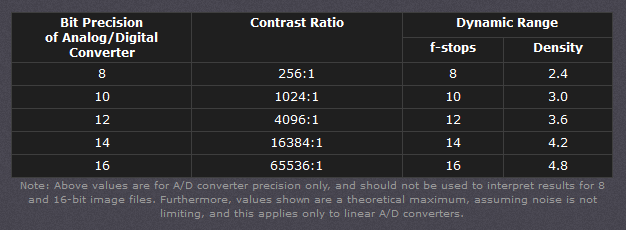

Also note that bit depth and dynamic range are often confused as one, but are indeed separate concepts and there is no direct one to one relationship between them. Bit depth is about capacity, dynamic range is about the actual ratio of data stored.

The bit depth of a capturing or displaying device gives you an indication of its dynamic range capacity. That is, the highest dynamic range that the device would be capable of reproducing if all other constraints are eliminated.

https://rawpedia.rawtherapee.com/Bit_Depth

Finally, note that there are two ways to “count” bits for an image — either the number of bits per color channel (BPC) or the number of bits per pixel (BPP). A bit (0,1) is the smallest unit of data stored in a computer.

For a grayscale image, 8-bit means that each pixel can be one of 256 levels of gray (256 is 2 to the power 8).

For an RGB color image, 8-bit means that each one of the three color channels can be one of 256 levels of color.

Since each pixel is represented by 3 colors in this case, 8-bit per color channel actually means 24-bit per pixel. Similarly, 16-bit for an RGB image means 65,536 levels per color channel and 48-bit per pixel.

To complicate matters, when an image is classified as 16-bit, it just means that it can store a maximum of 65,536 values per channel. It does not necessarily mean that it actually spans that range. If the camera sensors cannot capture more than 12 bits of tonal values, the actual bit depth of the image will be at best 12-bit and probably less because of noise.

The following table attempts to summarize the above for the case of an RGB color image.

| Type of digital support | Bit depth per color channel | Bit depth per pixel | F-stops | Theoretical maximum dynamic range | Reality |
|---|---|---|---|---|---|
| 8-bit | 8 | 24 | 8 | 256:1 | most consumer images |
| 12-bit CCD | 12 | 36 | 12 | 4,096:1 | real maximum limited by noise |
| 14-bit CCD | 14 | 42 | 14 | 16,384:1 | real maximum limited by noise |
| 16-bit TIFF (integer) | 16 | 48 | 16 | 65,536:1 | bit depth in this case is not directly related to the dynamic range captured |
| 16-bit float EXR | 16 | 48 | 30 | 65,536:1 | values are distributed more closely in the (lower) darker tones than in the (higher) lighter ones, thus allowing for a more accurate description of the tones more significant to humans |
| HDR image (e.g. Radiance format) | 32 | 96 | “infinite” | 4.3 billion:1 | real maximum limited by the captured dynamic range |

The range of normalized 16-bit floats can represent thirty stops of information with 1024 steps per stop. We have eighteen and a half stops over middle gray, and eleven and a half below. The denormalized numbers provide an additional ten stops with decreasing precision per stop.

http://download.nvidia.com/developer/GPU_Gems/CD_Image/Image_Processing/OpenEXR/OpenEXR-1.0.6/doc/#recs

32-bit floats are often called “single-precision” floats, and 64-bit floats are often called “double-precision” floats. 16-bit floats therefore are called “half-precision” floats, or just “half floats”.
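The stop counts quoted for 16-bit half floats can be reproduced from the format's limits. The sketch below uses Python's `struct` module, whose `e` format code decodes IEEE 754 half-precision values, and assumes middle gray sits at 0.18 in scene-linear units; the variable names are illustrative.

```python
import math
import struct

# Decode the limits of the half-float format from their bit patterns (little-endian).
HALF_MAX = struct.unpack("<e", b"\xff\x7b")[0]           # 0x7BFF, largest finite value
HALF_MIN_NORMAL = struct.unpack("<e", b"\x00\x04")[0]    # 0x0400, smallest normalized value
HALF_MIN_DENORMAL = struct.unpack("<e", b"\x01\x00")[0]  # 0x0001, smallest denormalized value

MIDDLE_GRAY = 0.18  # assumed scene-linear middle gray

stops_above_gray = math.log2(HALF_MAX / MIDDLE_GRAY)
stops_below_gray = math.log2(MIDDLE_GRAY / HALF_MIN_NORMAL)
denormal_stops = math.log2(HALF_MIN_NORMAL / HALF_MIN_DENORMAL)

print(f"largest half float: {HALF_MAX}")
print(f"stops above middle gray: {stops_above_gray:.2f}")
print(f"stops below middle gray: {stops_below_gray:.2f}")
print(f"extra denormalized stops: {denormal_stops:.0f}")
```

The first two figures add up to roughly thirty stops, and the 10-bit mantissa is what gives 1024 steps within each stop.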

https://petapixel.com/2018/09/19/8-12-14-vs-16-bit-depth-what-do-you-really-need

On a separate note, Photoshop’s 16-bit mode does not use the full 16-bit range. It does store 16 bits per channel, but it treats the 16th bit differently: it is simply added to the value created from the first 15 bits. This is sometimes called 15+1 bits. This means that instead of 2^16 possible values (65,536) there are only 2^15+1 possible values (32,768 + 1 = 32,769).

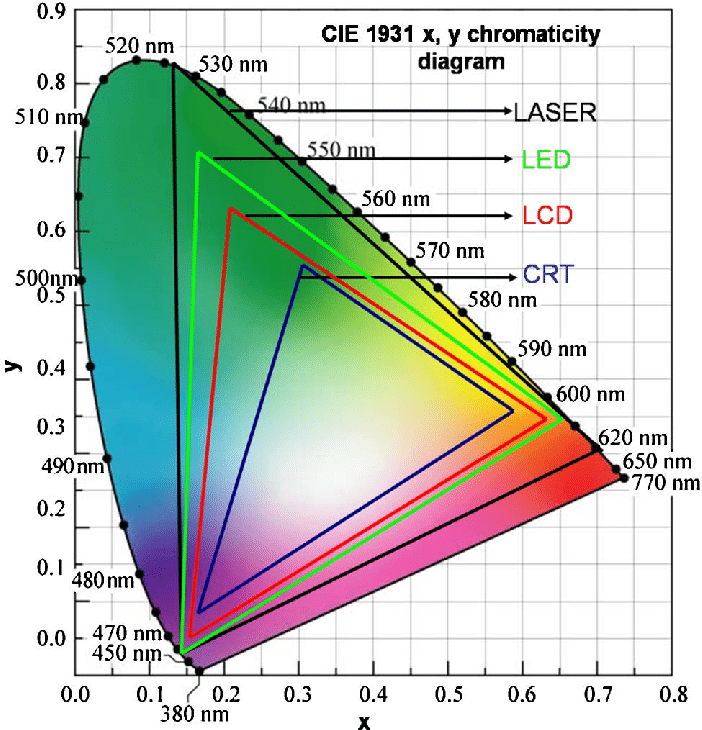

Rec-601 (for the older SDTV format, very similar to Rec-709) and Rec-709 (the recommended set of color standards for HDTV, at times also referred to as sRGB, although the two are not exactly the same) are currently the most widespread color formats and hardware configurations in the world.

Following those you can find the larger P3 gamut, more commonly used in theaters and in digital production houses (with small variations and improvements to color coverage), as well as in most of the best 4K/WCG TVs.

A newer standard, referred to as Rec-2020 or UHDTV, is now being promoted over P3.

It is still debatable if this is going to be adopted at consumer level beyond the P3, mainly due to lack of hardware supporting it. But initial tests do prove that it would be a future proof investment.

www.colour-science.org/anders-langlands/

Rec. 2020 is ultimately designed for television, and not cinema. Therefore, it is to be expected that its properties must behave according to current signal processing standards. In this respect, its foundation is based on current HD and SD video signal characteristics.

As far as color bit depth is concerned, it allows for a maximum of 12 bits, which is more than enough for humans.

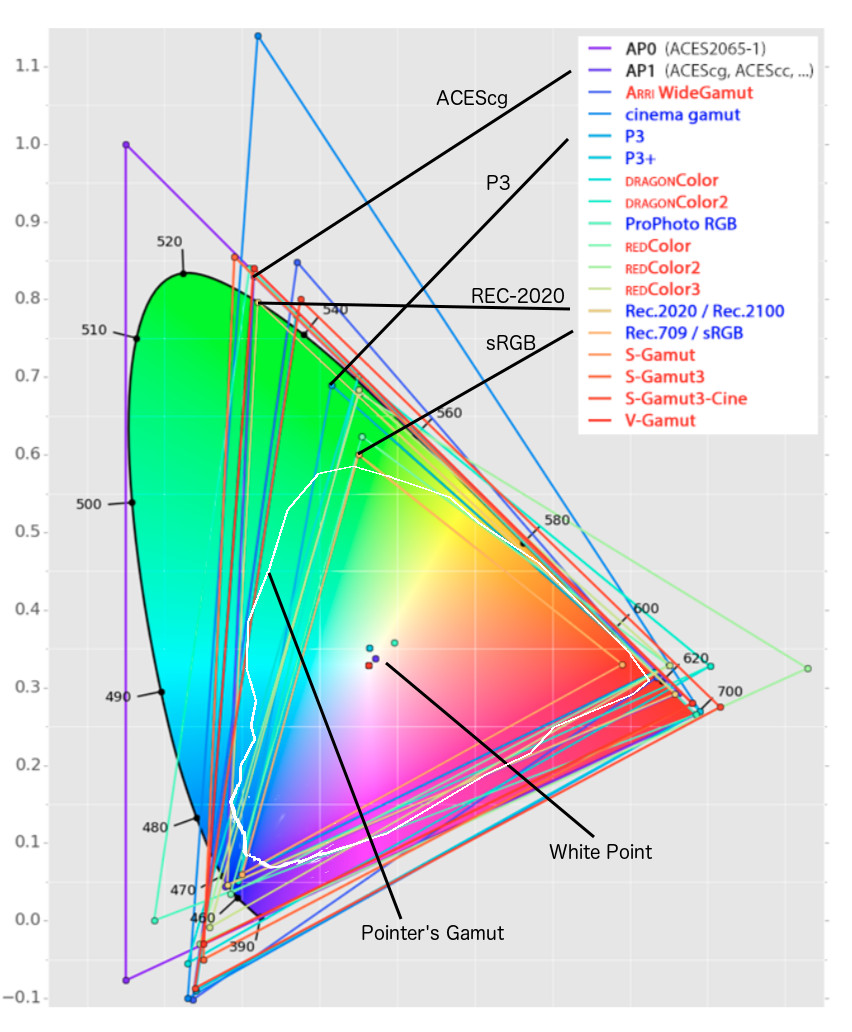

Comparing standards, REC-709 covers 35.9% of the human visible spectrum. P3 45.5%. And REC-2020 75.8%.

https://www.avsforum.com/forum/166-lcd-flat-panel-displays/2812161-what-color-volume.html

Comparing coverage to hardware devices

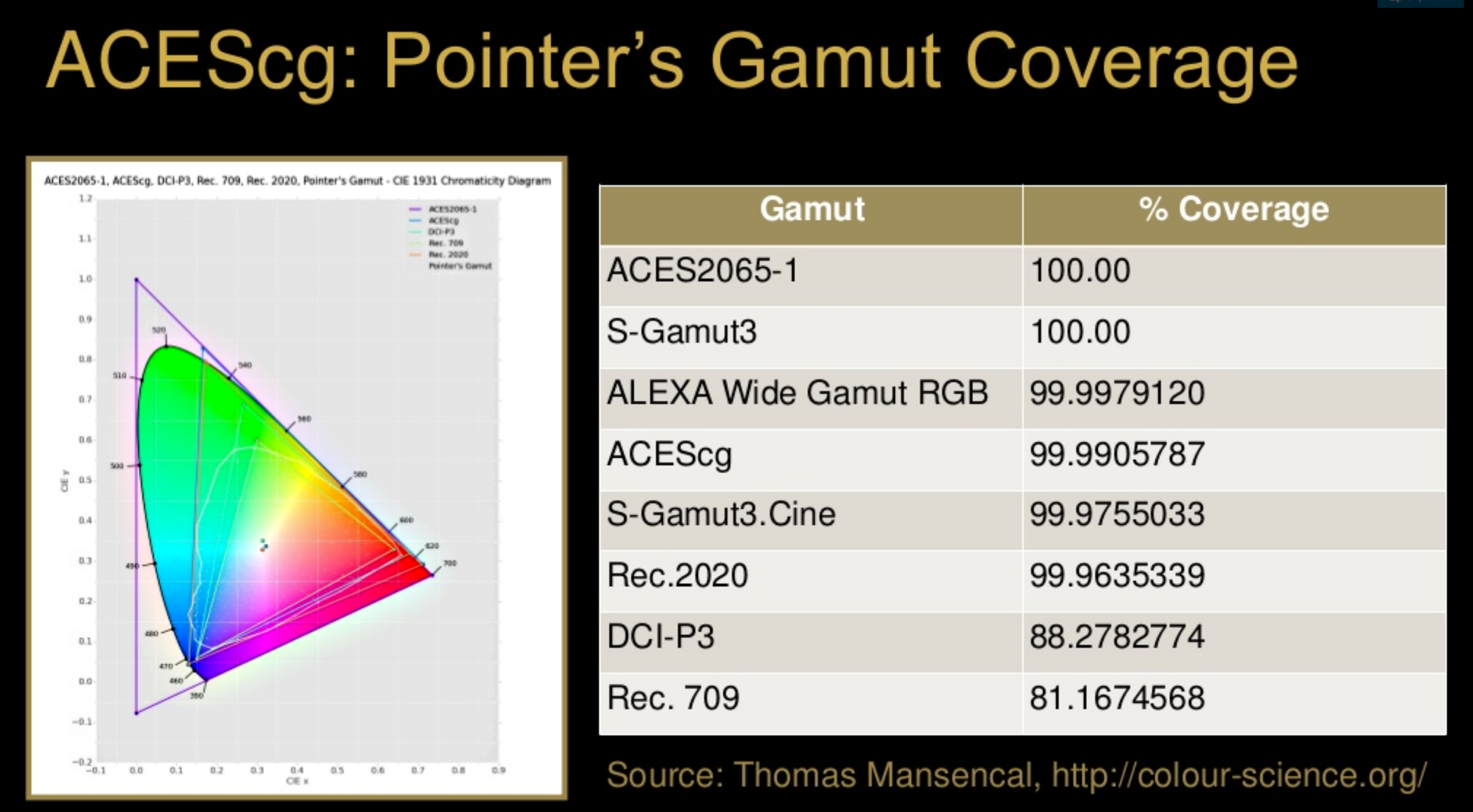

Note that all the new standards generally score very high on the Pointer’s gamut chart, with REC-2020 scoring 99.9% vs P3 at 88.2%.

www.tftcentral.co.uk/articles/pointers_gamut.htm

https://www.slideshare.net/hpduiker/acescg-a-common-color-encoding-for-visual-effects-applications

The Pointer’s gamut is (an approximation of) the gamut of real surface colors as can be seen by the human eye, based on the research by Michael R. Pointer (1980). What this means is that every color that can be reflected by the surface of an object of any material is inside the Pointer’s gamut, which makes it a widely respected target for color reproduction. Visually, the Pointer’s gamut represents the colors we see about us in the natural world. Colors outside the Pointer’s gamut include those that do not occur naturally, such as neon lights and computer-generated colors possible in animation. The new gamuts would partially account for these.

cinepedia.com/picture/color-gamut/

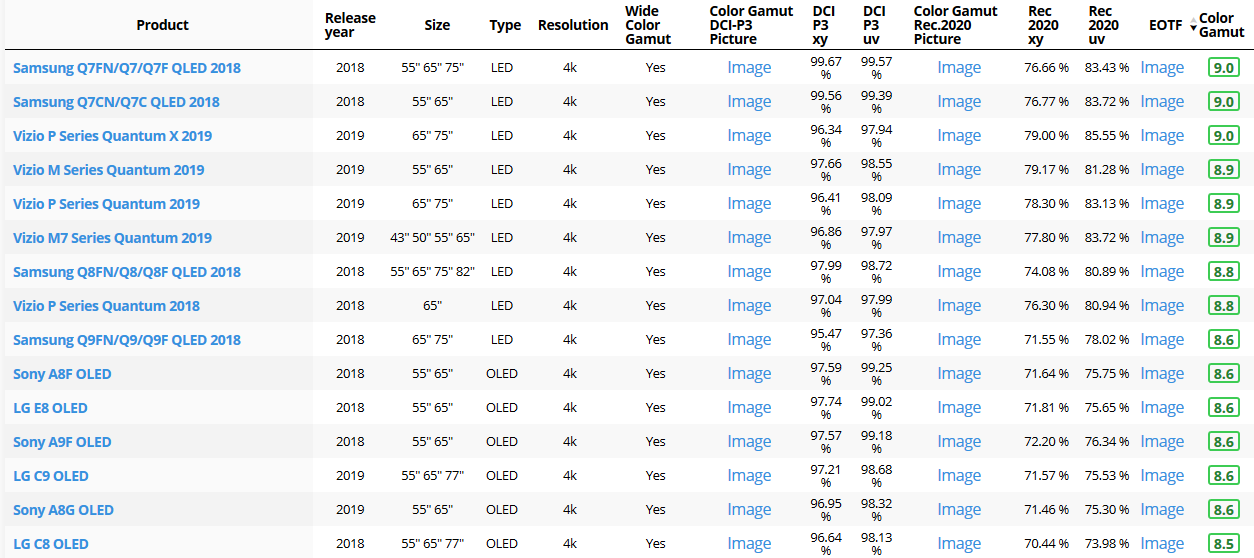

Not all current TVs can support the full spread of the new gamuts. Here is a list of modern TVs’ color coverage in percentage:

www.rtings.com/tv/tests/picture-quality/wide-color-gamut-rec-709-dci-p3-rec-2020

There are no TVs that can come close to displaying all the colors within Rec.2020, and there likely won’t be for at least a few years. However, to help future-proof the technology, Rec.2020 support is already baked into the HDR spec. That means that the same genuine HDR media that fills the DCI P3 space on a compatible TV now, will in a few years also fill Rec.2020 on a TV supporting that larger space.

Rec.2020’s main gains are in the number of new tones of green that it will display, though it also offers improvements to the number of blue and red colors as well. Altogether, Rec.2020 will cover about 75% of the visual spectrum, which is a sizeable increase in coverage even over DCI P3.

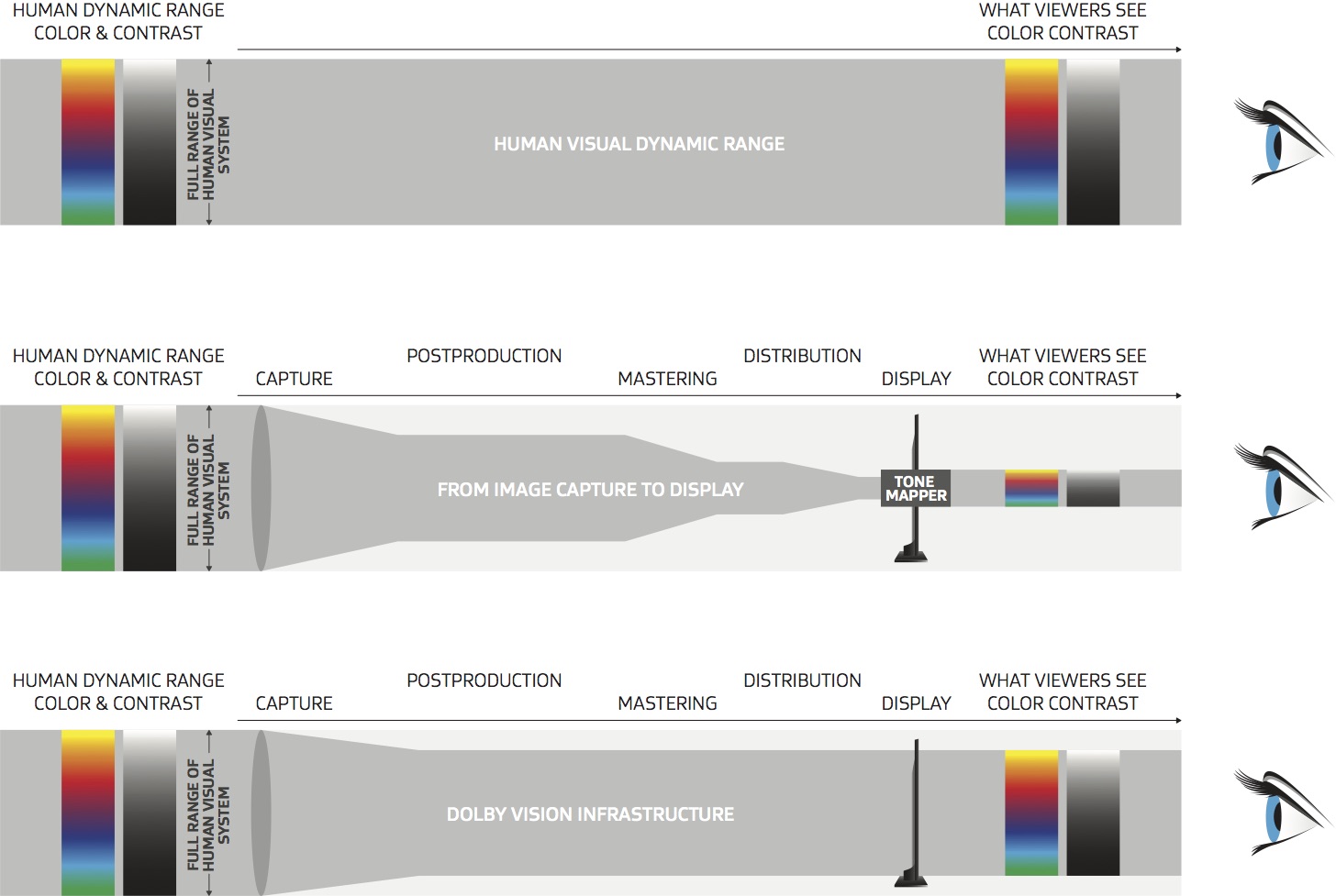

Dolby Vision

https://www.highdefdigest.com/news/show/what-is-dolby-vision/39049

https://www.techhive.com/article/3237232/dolby-vision-vs-hdr10-which-is-best.html

Dolby Vision is a proprietary end-to-end High Dynamic Range (HDR) format that covers content creation and playback through select cinemas, Ultra HD displays, and 4K titles. Like other HDR standards, the process uses expanded brightness to improve contrast between dark and light aspects of an image, bringing out deeper black levels and more realistic details in specular highlights — like the sun reflecting off of an ocean — in specially graded Dolby Vision material.

The iPhone 12 Pro gets the ability to record 4K 10-bit HDR video. According to Apple, it is the very first smartphone that is capable of capturing Dolby Vision HDR.

The iPhone 12 Pro takes two separate exposures and runs them through Apple’s custom image signal processor to create a histogram, which is a graph of the tonal values in each frame. The Dolby Vision metadata is then generated based on that histogram. In layman’s terms, it is essentially doing real-time grading while you are shooting. This is only possible due to the A14 Bionic chip.

Dolby Vision also allows for 12-bit color, as opposed to HDR10’s and HDR10+’s 10-bit color. While no retail TV we’re aware of supports 12-bit color, Dolby claims it can be down-sampled in such a way as to render 10-bit color more accurately.

Resources for more reading:

https://www.avsforum.com/forum/166-lcd-flat-panel-displays/2812161-what-color-volume.html

wolfcrow.com/say-hello-to-rec-2020-the-color-space-of-the-future/

www.cnet.com/news/ultra-hd-tv-color-part-ii-the-future/

-

domeble – Hi-Resolution CGI Backplates and 360° HDRI

When collecting HDRI, make sure the data supports basic metadata, such as:

- ISO

- Aperture

- Exposure time or shutter time

- Color temperature

- Color space

- Exposure value (what the sensor receives of the sun intensity in lux)

- 7+ brackets (with 5 or 6 being the perceived balanced exposure)
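The ISO, aperture, and exposure time in the list above are enough to compute an exposure value for each bracket. A minimal sketch, assuming the common definition EV = log2(N² / t) normalized to ISO 100, with N the f-number and t the shutter time in seconds; the bracket values below are made up for illustration.

```python
import math


def ev100(f_number, shutter_seconds, iso=100):
    """Exposure value normalized to ISO 100: log2(N^2 / t) - log2(ISO / 100)."""
    return math.log2(f_number ** 2 / shutter_seconds) - math.log2(iso / 100.0)


# Hypothetical 7-bracket set at f/8, ISO 100, two stops apart.
shutter_times = [1 / 4000, 1 / 1000, 1 / 250, 1 / 60, 1 / 15, 1 / 4, 1.0]
for t in shutter_times:
    print(f"f/8, {t:.5f} s -> EV100 {ev100(8.0, t):.2f}")
```

Keeping these values alongside each bracket makes it possible to relate the merged HDRI back to absolute scene exposure later.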

In image processing, computer graphics, and photography, high dynamic range imaging (HDRI or just HDR) is a set of techniques that allow a greater dynamic range of luminances (a Photometry measure of the luminous intensity per unit area of light travelling in a given direction. It describes the amount of light that passes through or is emitted from a particular area, and falls within a given solid angle) between the lightest and darkest areas of an image than standard digital imaging techniques or photographic methods. This wider dynamic range allows HDR images to represent more accurately the wide range of intensity levels found in real scenes ranging from direct sunlight to faint starlight and to the deepest shadows.

The two main sources of HDR imagery are computer renderings and merging of multiple photographs, which in turn are known as low dynamic range (LDR) or standard dynamic range (SDR) images. Tone Mapping (Look-up) techniques, which reduce overall contrast to facilitate display of HDR images on devices with lower dynamic range, can be applied to produce images with preserved or exaggerated local contrast for artistic effect.

Photography

In photography, dynamic range is measured in Exposure Values (in photography, exposure value denotes all combinations of camera shutter speed and relative aperture that give the same exposure. The concept was developed in Germany in the 1950s) differences or stops, between the brightest and darkest parts of the image that show detail. An increase of one EV or one stop is a doubling of the amount of light.
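Because one stop is a doubling of light, a contrast ratio converts to stops with a base-2 logarithm. A small Python sketch, with illustrative function names:

```python
import math


def stops_from_contrast_ratio(ratio):
    """Number of stops (doublings) between the darkest and brightest value."""
    return math.log2(ratio)


def contrast_ratio_from_stops(stops):
    """Contrast ratio spanned by the given number of stops."""
    return 2.0 ** stops


print(stops_from_contrast_ratio(256))      # range of an 8-bit integer channel
print(stops_from_contrast_ratio(100_000))  # a 100,000:1 real-world scene
print(contrast_ratio_from_stops(30))       # ratio covered by 30 stops
```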

The human response to brightness is well approximated by Stevens’ power law, which over a reasonable range is close to logarithmic, as described by the Weber–Fechner law. This is one reason that logarithmic measures of light intensity are often used as well.

HDR is short for High Dynamic Range. It’s a term used to describe an image which contains a greater exposure range than the “black” to “white” that 8 or 16-bit integer formats (JPEG, TIFF, PNG) can describe. Whereas these Low Dynamic Range images (LDR) can hold perhaps 8 to 10 f-stops of image information, HDR images can describe beyond 30 stops and are stored in 32-bit images.


DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.