LATEST POSTS

-

Mamba and MicroMamba – Free, open-source, general-purpose package managers for any kind of software and all operating systems

https://mamba.readthedocs.io/en/latest/user_guide/micromamba.html

https://mamba.readthedocs.io/en/latest/installation/micromamba-installation.html

https://micro.mamba.pm/api/micromamba/win-64/latest

https://prefix.dev/docs/mamba/overview

With mamba, it’s easy to set up software environments. A software environment is simply a set of libraries, applications and their dependencies. The power of environments is that they can coexist: you can easily have an environment called py27 for Python 2.7 and one called py310 for Python 3.10, so that projects with different requirements each get their own dedicated environment. This is similar to “containers” and images; however, mamba makes it easy to add, update or remove software from the environments.

Download the latest executable from https://micro.mamba.pm/api/micromamba/win-64/latest

You can install it, or just run the executable directly, to create a Python environment under Windows.
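As a rough illustration of the py27/py310 setup described above, here is a minimal Python sketch that builds (and optionally runs) micromamba commands. It assumes `micromamba` is on your PATH and that packages come from the conda-forge channel; the helper names `create_env_cmd`, `run_in_env_cmd` and `create_env` are hypothetical. On Windows, micromamba may also need a root prefix configured (for example via the `MAMBA_ROOT_PREFIX` environment variable), and whether old versions such as Python 2.7 are still available depends on your platform and channel.

```python
import shutil
import subprocess


def create_env_cmd(name: str, python_version: str, channel: str = "conda-forge") -> list[str]:
    """Build the argv for `micromamba create` (hypothetical helper)."""
    return [
        "micromamba", "create",
        "-n", name,                  # environment name, e.g. "py310"
        f"python={python_version}",  # package spec pinning the Python version
        "-c", channel,               # channel to pull packages from
        "-y",                        # skip the interactive confirmation prompt
    ]


def run_in_env_cmd(name: str, *command: str) -> list[str]:
    """Build the argv for `micromamba run`, which runs a command inside an environment."""
    return ["micromamba", "run", "-n", name, *command]


def create_env(name: str, python_version: str) -> bool:
    """Create the environment if micromamba is installed; return True on success."""
    if shutil.which("micromamba") is None:
        return False  # micromamba not found on PATH, so nothing is run
    result = subprocess.run(create_env_cmd(name, python_version))
    return result.returncode == 0


if __name__ == "__main__":
    # Two side-by-side environments, one per Python version.
    for env_name, version in [("py27", "2.7"), ("py310", "3.10")]:
        print(" ".join(create_env_cmd(env_name, version)))
    print(" ".join(run_in_env_cmd("py310", "python", "--version")))
```

Building the argument list separately from running it keeps the command easy to inspect or log before anything touches your system.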

(more…) -

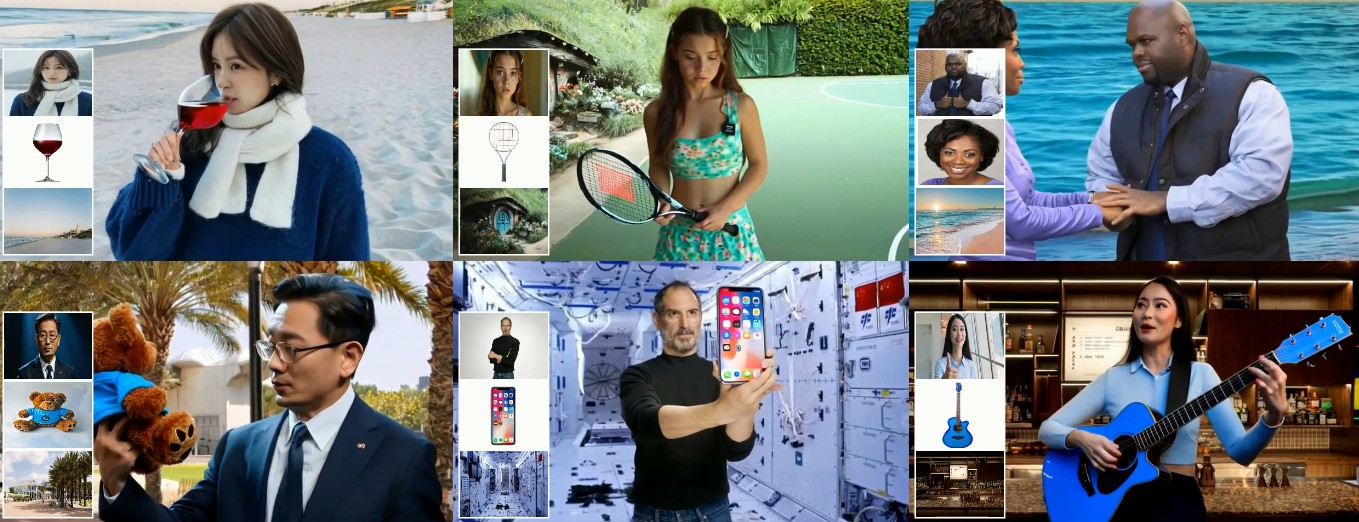

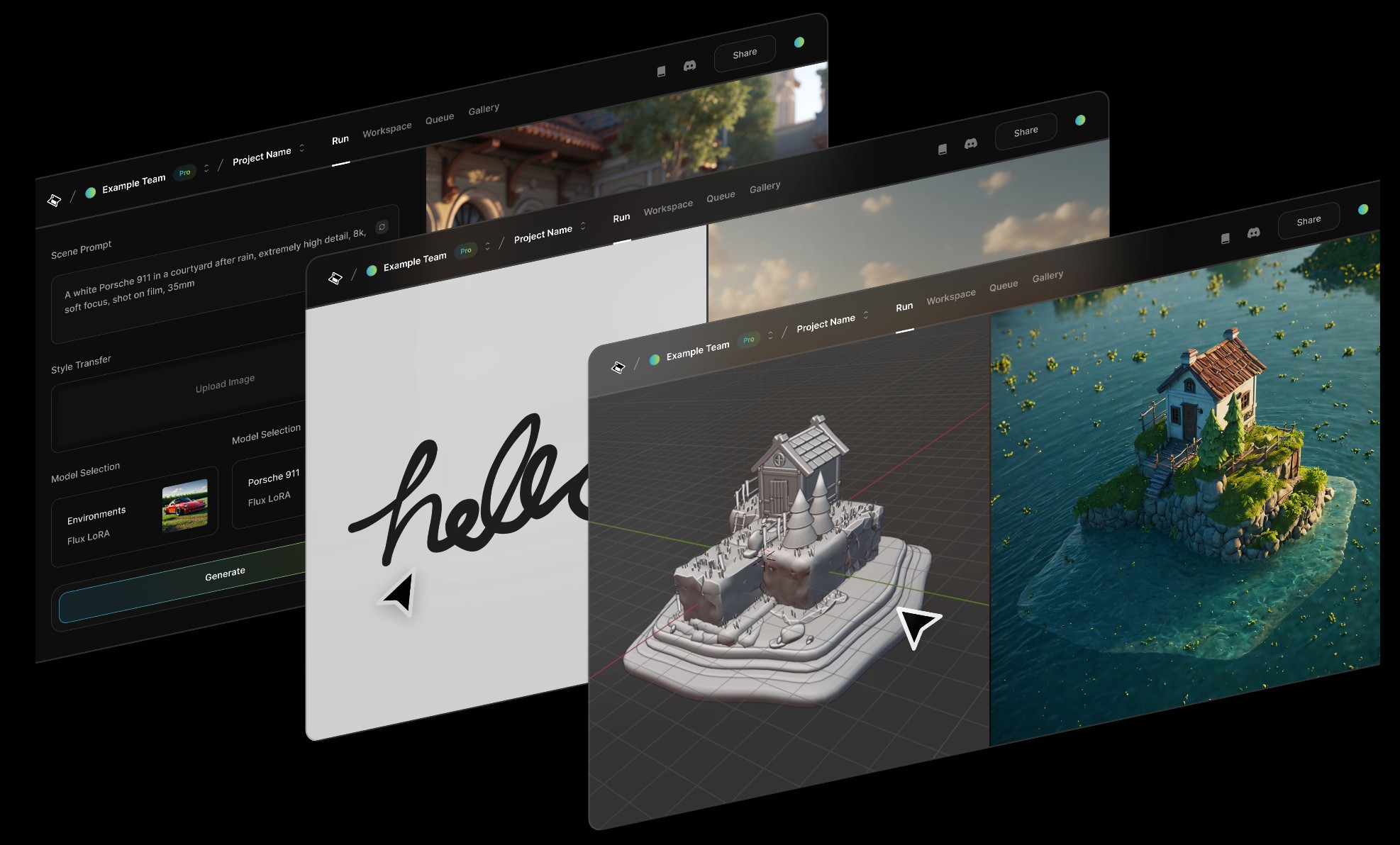

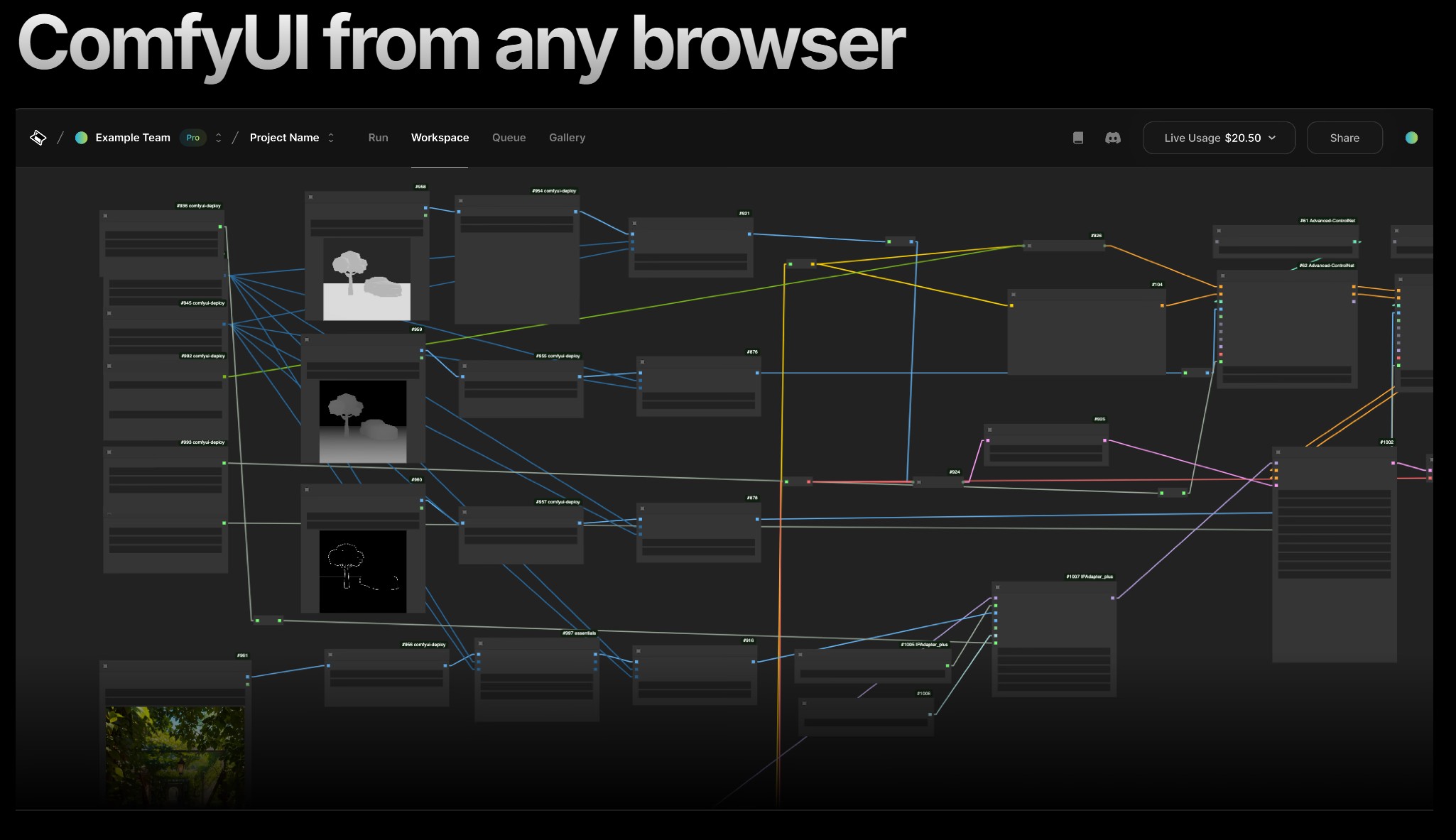

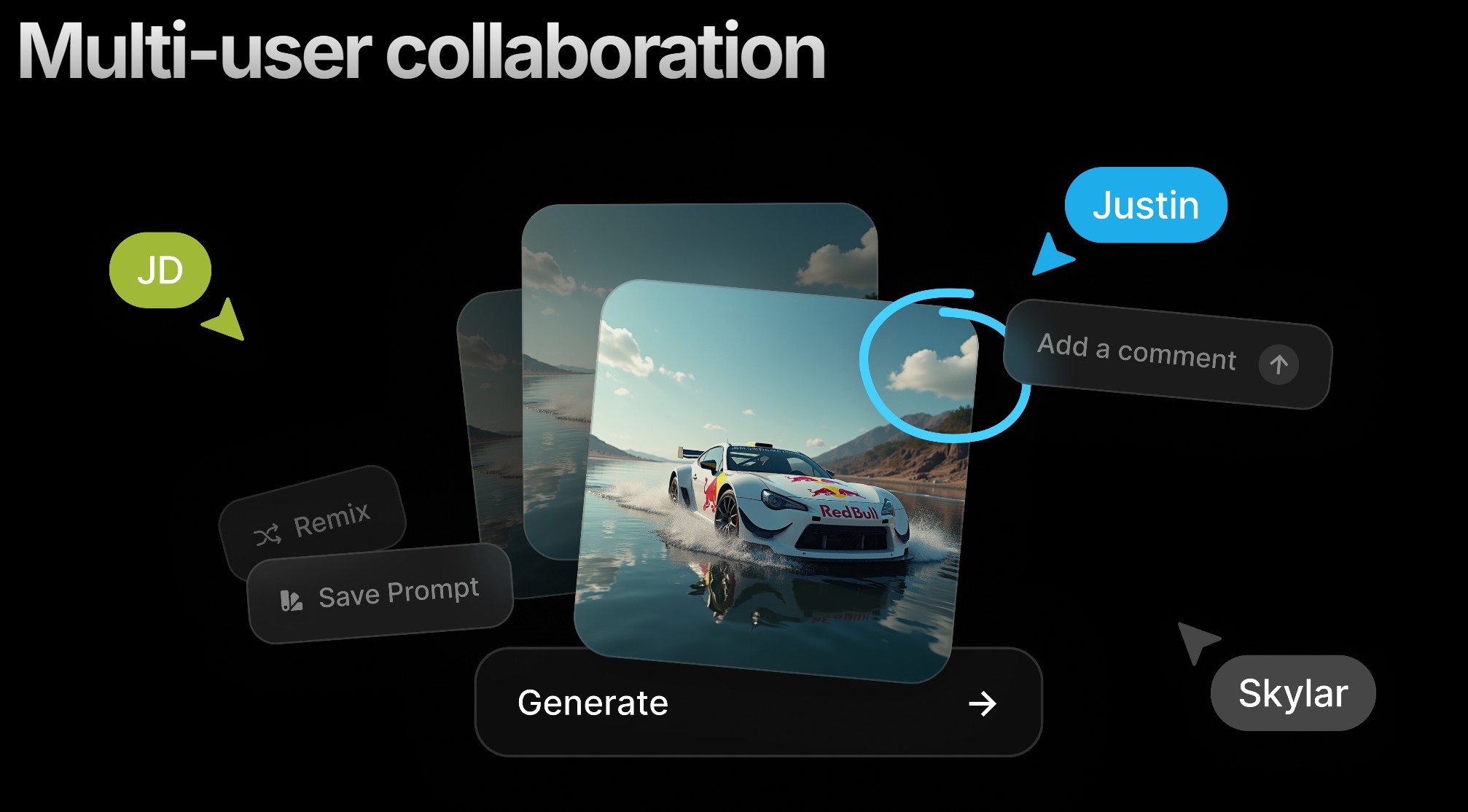

PlayBook3D – Creative controls for all media formats

Playbook3d.com is a diffusion-based render engine that uses AI to reduce the time to final image. It is accessible via a web editor and an API, with support for scene segmentation and relighting, integration with production pipelines, and frame-to-frame consistency for image, video, and real-time 3D formats.

-

AI and the Law – AI Creativity – Genius or Gimmick?

7:59-9:50 Justine Bateman:

“I mean first I want to give people, help people have a little bit of a definition of what generative AI is—

think of it as like a blender and if you have a blender at home and you turn it on, what does it do? It depends on what I put into it, so it cannot function unless it’s fed things.

Then you turn on the blender and you give it a prompt, which is your little spoon, and you get a little spoonful—little Frankenstein spoonful—out of what you asked for.

So what is going into the blender? Everything: a hundred years of film and television, or many, many years of, you know, doctor’s reports or students’ essays or whatever it is.

In the film business, in particular, that’s what we call theft; it’s the biggest violation. And the term that continues to be used is “all we did.” I think the CTO of OpenAI—believe that’s her position; I forget her name—when she was asked in an interview recently what she had to say about the fact that they didn’t ask permission to take it in, she said, “Well, it was all publicly available.”

And I will say this: if you own a car—I know we’re in New York City, so it’s not going to be as applicable—but if I see a car in the street, it’s publicly available, but somehow it’s illegal for me to take it. That’s what we have the copyright office for, and I don’t know how well staffed they are to handle something like this, but this is the biggest copyright violation in the history of that office and the US government” -

Aze Alter – What If Humans and AI Unite? | AGE OF BEYOND

https://www.patreon.com/AzeAlter

Voices & Sound Effects: https://elevenlabs.io/

Video Created mainly with Luma: https://lumalabs.ai/

LUMA LABS

KLING

RUNWAY

ELEVEN LABS

MINIMAX

MIDJOURNEY

Music By Scott Buckley -

ComfyUI-Manager Joins Comfy-Org

https://blog.comfy.org/p/comfyui-manager-joins-comfy-org

On March 28, ComfyUI-Manager will be moving to the Comfy-Org GitHub organization as Comfy-Org/ComfyUI-Manager. This represents a natural evolution as they continue working to improve the custom node experience for all ComfyUI users.

What This Means For You

This change is primarily about improving support and development velocity. There are a few practical considerations:

- Automatic GitHub redirects will ensure all existing links, git commands, and references to the repository will continue to work seamlessly without any action needed

- For developers: Any existing PRs and issues will be transferred to the new repository location

- For users: ComfyUI-Manager will continue to function exactly as before—no action needed

- For workflow authors: Resources that reference ComfyUI-Manager will continue to work without interruption

-

AccVideo – Accelerating Video Diffusion Model with Synthetic Dataset

https://aejion.github.io/accvideo

https://github.com/aejion/AccVideo

https://huggingface.co/aejion/AccVideo

AccVideo is a novel, efficient distillation method that uses a synthetic dataset to accelerate video diffusion models. The method is 8.5x faster than HunyuanVideo.

FEATURED POSTS

-

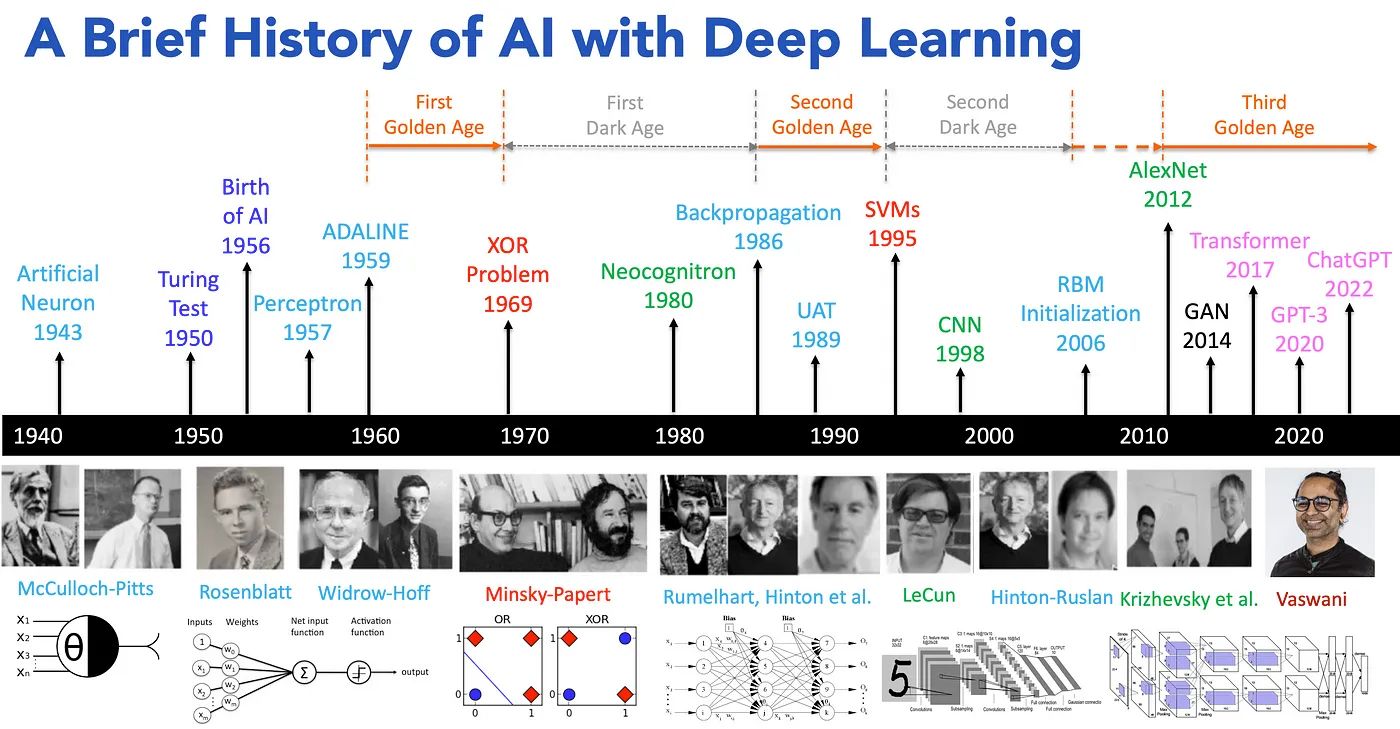

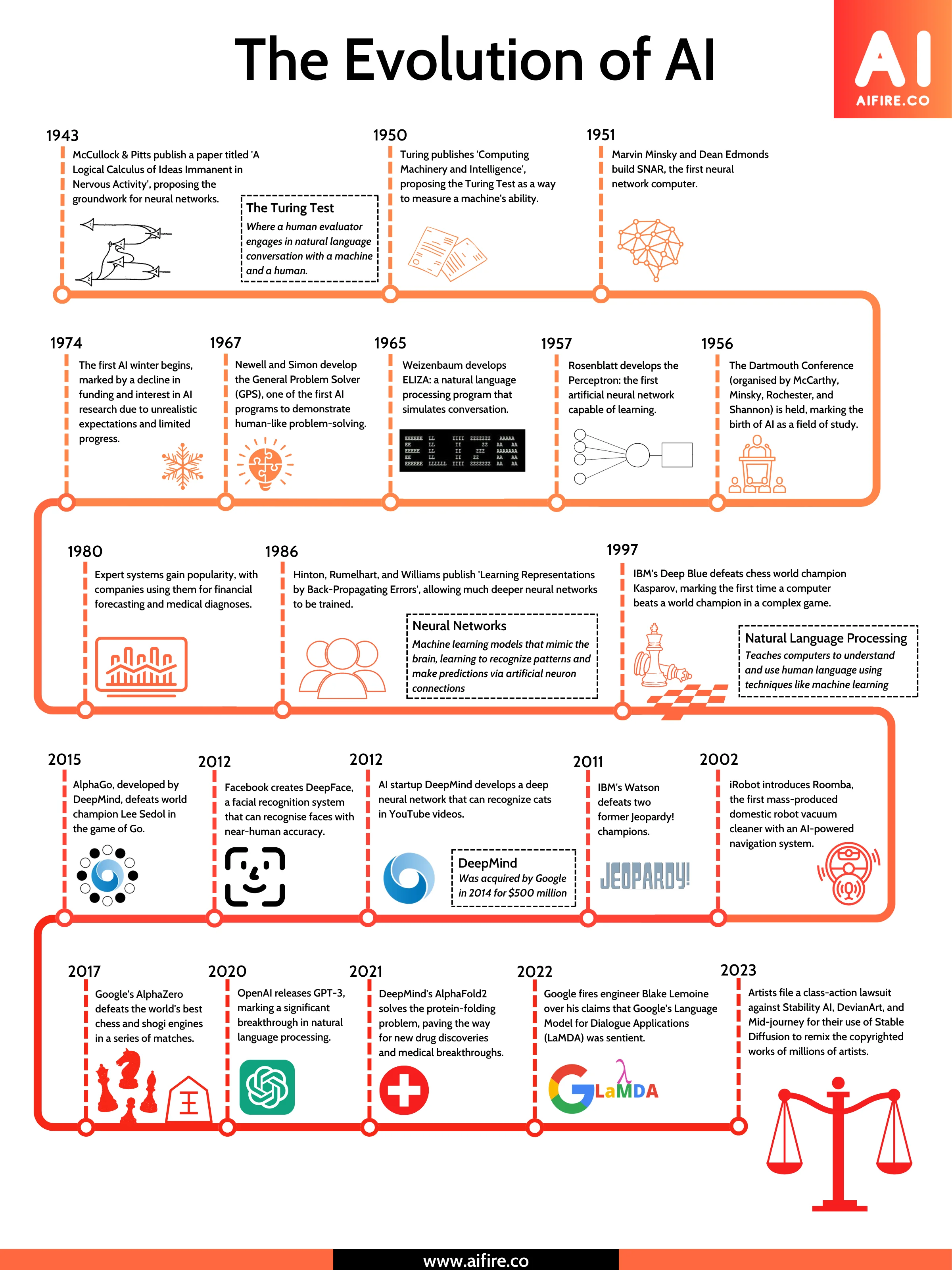

The History, Evolution and Rise of AI

https://medium.com/@lmpo/a-brief-history-of-ai-with-deep-learning-26f7948bc87b

🔹 1943: 𝗠𝗰𝗖𝘂𝗹𝗹𝗼𝗰𝗵 & 𝗣𝗶𝘁𝘁𝘀 create the first artificial neuron.

🔹 1950: 𝗔𝗹𝗮𝗻 𝗧𝘂𝗿𝗶𝗻𝗴 introduces the Turing Test, forever changing the way we view intelligence.

🔹 1956: 𝗝𝗼𝗵𝗻 𝗠𝗰𝗖𝗮𝗿𝘁𝗵𝘆 coins the term “Artificial Intelligence,” marking the official birth of the field.

🔹 1957: 𝗙𝗿𝗮𝗻𝗸 𝗥𝗼𝘀𝗲𝗻𝗯𝗹𝗮𝘁𝘁 invents the Perceptron, one of the first neural networks.

🔹 1959: 𝗕𝗲𝗿𝗻𝗮𝗿𝗱 𝗪𝗶𝗱𝗿𝗼𝘄 and 𝗧𝗲𝗱 𝗛𝗼𝗳𝗳 create ADALINE, a model that would shape neural networks.

🔹 1969: 𝗠𝗶𝗻𝘀𝗸𝘆 & 𝗣𝗮𝗽𝗲𝗿𝘁 show that single-layer perceptrons cannot solve the XOR problem, helping to usher in the “first AI winter.”

🔹 1980: 𝗞𝘂𝗻𝗶𝗵𝗶𝗸𝗼 𝗙𝘂𝗸𝘂𝘀𝗵𝗶𝗺𝗮 introduces Neocognitron, laying the groundwork for deep learning.

🔹 1986: 𝗚𝗲𝗼𝗳𝗳𝗿𝗲𝘆 𝗛𝗶𝗻𝘁𝗼𝗻 and 𝗗𝗮𝘃𝗶𝗱 𝗥𝘂𝗺𝗲𝗹𝗵𝗮𝗿𝘁 introduce backpropagation, making neural networks viable again.

🔹 1988: 𝗝𝘂𝗱𝗲𝗮 𝗣𝗲𝗮𝗿𝗹 advances probabilistic reasoning with Bayesian networks, building a foundation for AI’s reasoning under uncertainty.

🔹 1995: 𝗩𝗹𝗮𝗱𝗶𝗺𝗶𝗿 𝗩𝗮𝗽𝗻𝗶𝗸 and 𝗖𝗼𝗿𝗶𝗻𝗻𝗮 𝗖𝗼𝗿𝘁𝗲𝘀 develop Support Vector Machines (SVMs), a breakthrough in machine learning.

🔹 1998: 𝗬𝗮𝗻𝗻 𝗟𝗲𝗖𝘂𝗻 popularizes Convolutional Neural Networks (CNNs), revolutionizing image recognition.

🔹 2006: 𝗚𝗲𝗼𝗳𝗳𝗿𝗲𝘆 𝗛𝗶𝗻𝘁𝗼𝗻 and 𝗥𝘂𝘀𝗹𝗮𝗻 𝗦𝗮𝗹𝗮𝗸𝗵𝘂𝘁𝗱𝗶𝗻𝗼𝘃 introduce deep belief networks, reigniting interest in deep learning.

🔹 2012: 𝗔𝗹𝗲𝘅 𝗞𝗿𝗶𝘇𝗵𝗲𝘃𝘀𝗸𝘆 and 𝗚𝗲𝗼𝗳𝗳𝗿𝗲𝘆 𝗛𝗶𝗻𝘁𝗼𝗻 launch AlexNet, sparking the modern AI revolution in deep learning.

🔹 2014: 𝗜𝗮𝗻 𝗚𝗼𝗼𝗱𝗳𝗲𝗹𝗹𝗼𝘄 introduces Generative Adversarial Networks (GANs), opening new doors for AI creativity.

🔹 2017: 𝗔𝘀𝗵𝗶𝘀𝗵 𝗩𝗮𝘀𝘄𝗮𝗻𝗶 and team introduce Transformers, redefining natural language processing (NLP).

🔹 2020: OpenAI unveils GPT-3, setting a new standard for language models and AI’s capabilities.

🔹 2022: OpenAI releases ChatGPT, democratizing conversational AI and bringing it to the masses.

-

Zibra.AI – Real-Time Volumetric Effects in Virtual Production. Now free for Indies!

A New Era for Volumetrics

For a long time, volumetric visual effects were viable only in high-end offline VFX workflows. Large data footprints and poor real-time rendering performance limited their use: most teams simply avoided volumetrics altogether. It’s similar to the early days of online video: limited computational power and low network bandwidth made video content hard to share or stream. Today, of course, we can’t imagine the internet without it, and we believe volumetrics are on a similar path.

With advanced data compression and real-time, GPU-driven decompression, anyone can now bring CGI-class visual effects into Unreal Engine.

From now on, it’s completely free for individual creators!

What does it mean for you?

(more…)

-

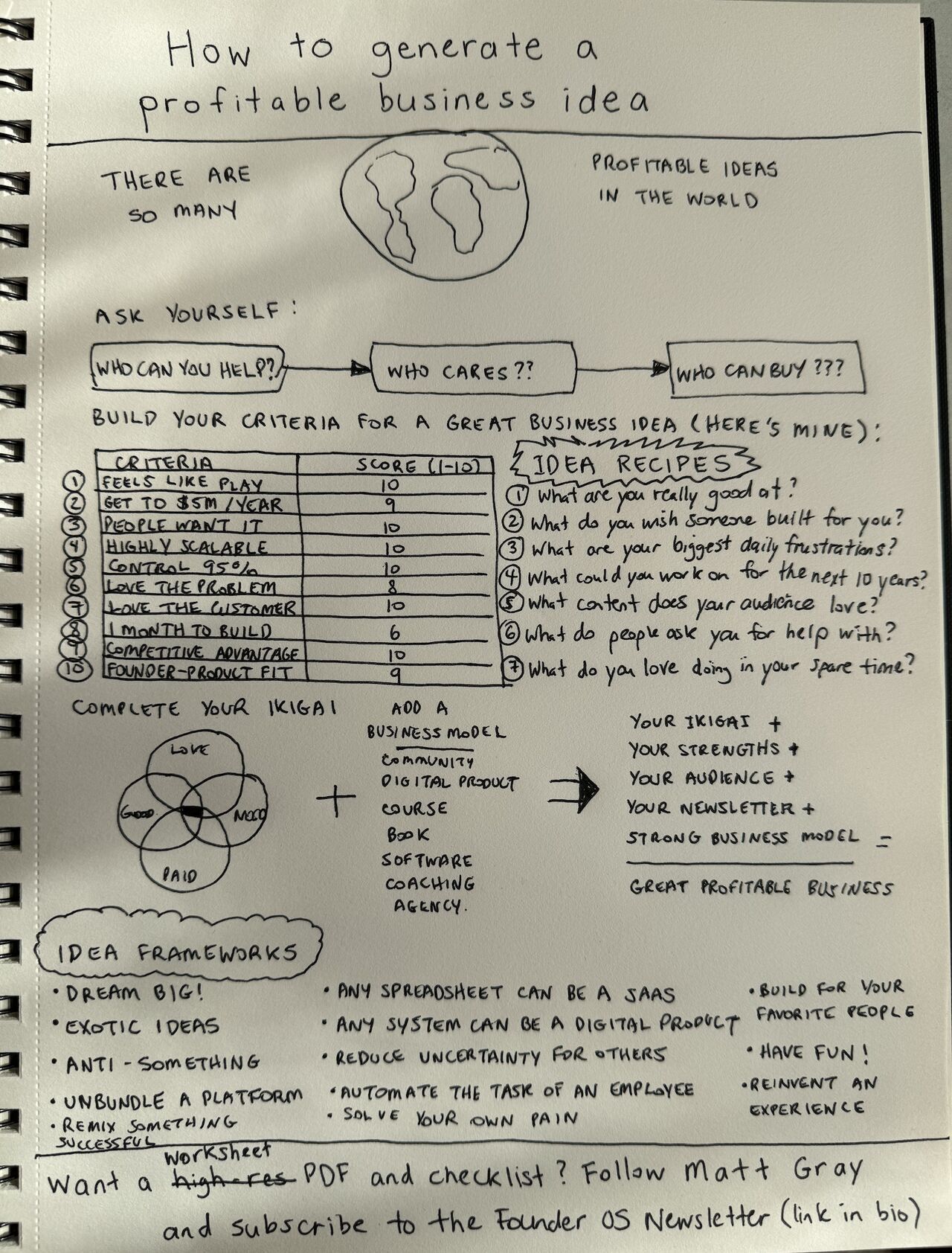

Matt Gray – How to generate a profitable business

In the last 10 years, over 1,000 people have asked me how to start a business. The truth? They’re all paralyzed by limiting beliefs. What they are and how to break them today:

Before we get into the How, let’s first unpack why people think they can’t start a business.

Here are the biggest reasons I’ve found:

-

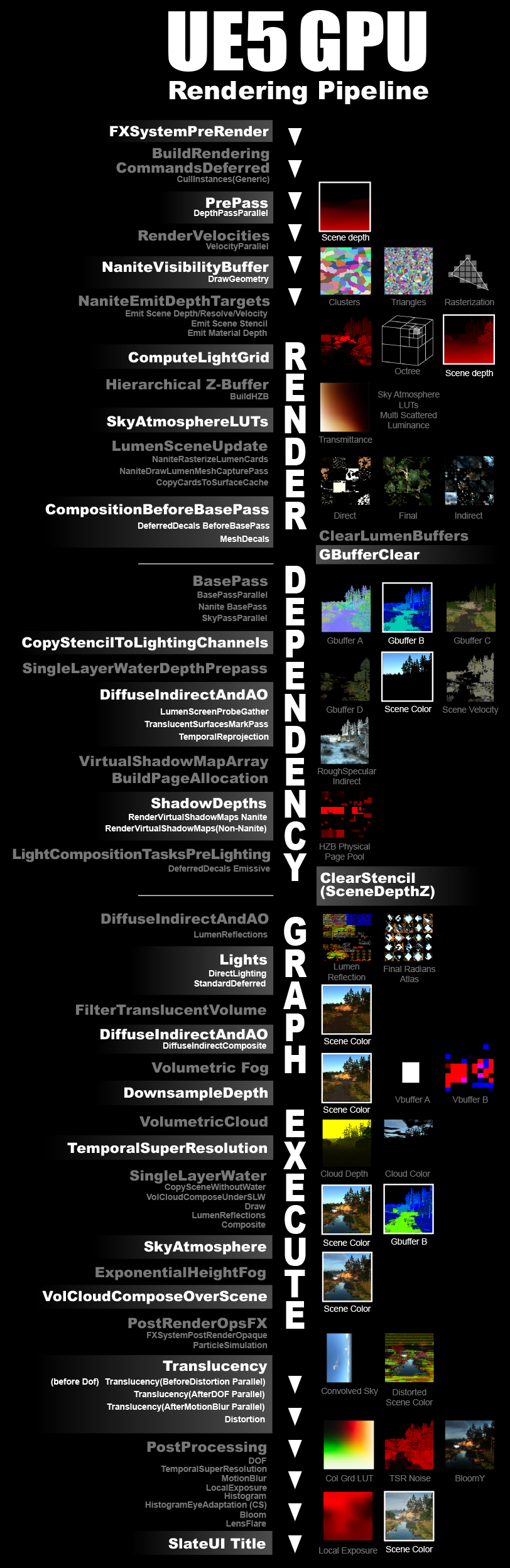

What is physically correct lighting all about?

http://gamedev.stackexchange.com/questions/60638/what-is-physically-correct-lighting-all-about

2012-08 Nathan Reed wrote:

Physically-based shading means leaving behind phenomenological models, like the Phong shading model, which are simply built to “look good” subjectively without being based on physics in any real way, and moving to lighting and shading models that are derived from the laws of physics and/or from actual measurements of the real world, and rigorously obey physical constraints such as energy conservation.

For example, in many older rendering systems, shading models included separate controls for specular highlights from point lights and reflection of the environment via a cubemap. You could create a shader with the specular and the reflection set to wildly different values, even though those are both instances of the same physical process. In addition, you could set the specular to any arbitrary brightness, even if it would cause the surface to reflect more energy than it actually received.

In a physically-based system, both the point-light specular and the environment reflection would be controlled by the same parameter, and the system would be set up to automatically adjust the brightness of both the specular and diffuse components to maintain overall energy conservation. Moreover, you would want to set the specular brightness to a realistic value for the material you’re trying to simulate, based on measurements.
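To make the contrast concrete, here is a minimal Python sketch using a single scalar channel instead of RGB. The class and field names are hypothetical, and scaling diffuse by `(1 - F0)` is just one simple way to enforce energy conservation, not the only approach used in practice. In the legacy model, independent knobs can add up to more light than the surface receives; in the physically-based model, one specular reflectance drives both the point-light highlight and the environment reflection.

```python
from dataclasses import dataclass


def clamp01(x: float) -> float:
    return min(max(x, 0.0), 1.0)


@dataclass
class LegacyMaterial:
    """Older ad-hoc model: independent knobs with no physical limits."""
    diffuse: float
    point_light_specular: float  # highlight strength for point lights
    cubemap_reflection: float    # environment reflection, set separately

    def direct_reflectance(self) -> float:
        # Nothing prevents this from exceeding 1.0 (more energy out than in).
        return self.diffuse + self.point_light_specular


@dataclass
class PhysicalMaterial:
    """Energy-conserving sketch: one specular reflectance (F0) drives both paths."""
    base_color: float
    specular_f0: float

    def __post_init__(self) -> None:
        # Keep inputs in the physically meaningful range [0, 1].
        self.base_color = clamp01(self.base_color)
        self.specular_f0 = clamp01(self.specular_f0)

    @property
    def point_light_specular(self) -> float:
        return self.specular_f0

    @property
    def environment_reflection(self) -> float:
        # Same parameter as the point-light specular: same physical process.
        return self.specular_f0

    @property
    def diffuse(self) -> float:
        # Energy reflected specularly is no longer available for diffuse.
        return self.base_color * (1.0 - self.specular_f0)

    def direct_reflectance(self) -> float:
        # base * (1 - F0) + F0 <= (1 - F0) + F0 = 1 for base, F0 in [0, 1].
        return self.diffuse + self.point_light_specular


if __name__ == "__main__":
    legacy = LegacyMaterial(diffuse=0.9, point_light_specular=0.8, cubemap_reflection=0.1)
    pbr = PhysicalMaterial(base_color=0.9, specular_f0=0.8)
    print(f"legacy:   {legacy.direct_reflectance():.2f}")  # 1.70, more than it receives
    print(f"physical: {pbr.direct_reflectance():.2f}")
```

The trade-off is the one the answer describes: the artist loses the ability to set highlight and reflection independently, but can no longer create a material that glows with energy it never received.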

Physically-based lighting or shading includes physically-based BRDFs, which are usually based on microfacet theory, and physically correct light transport, which is based on the rendering equation (although heavily approximated in the case of real-time games).
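For a feel of what a microfacet BRDF looks like in code, here is a sketch of one common real-time combination: a Cook-Torrance specular term with the GGX (Trowbridge-Reitz) distribution, Schlick’s Fresnel approximation, and a Smith-style Schlick-GGX shadowing term. The answer above does not prescribe this particular model, and the roughness remappings (`alpha = roughness²`, `k = (roughness + 1)² / 8`) are assumptions borrowed from common engine practice. Inputs are cosines between the usual unit vectors (normal n, light l, view v, half-vector h). The last function numerically checks a property a microfacet distribution must satisfy: the projected microfacet area integrates to 1 over the hemisphere.

```python
import math


def ggx_ndf(n_dot_h: float, alpha: float) -> float:
    """GGX / Trowbridge-Reitz normal distribution function D(h)."""
    a2 = alpha * alpha
    d = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * d * d)


def fresnel_schlick(cos_theta: float, f0: float) -> float:
    """Schlick's approximation: F0 at normal incidence, rising to 1 at grazing angles."""
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


def smith_schlick_ggx(n_dot_v: float, n_dot_l: float, k: float) -> float:
    """Smith shadowing-masking term built from two Schlick-GGX factors."""
    def g1(n_dot_x: float) -> float:
        return n_dot_x / (n_dot_x * (1.0 - k) + k)
    return g1(n_dot_v) * g1(n_dot_l)


def cook_torrance_specular(n_dot_l: float, n_dot_v: float, n_dot_h: float,
                           v_dot_h: float, roughness: float, f0: float) -> float:
    """Microfacet specular BRDF: D * F * G / (4 (n.l)(n.v))."""
    alpha = roughness * roughness     # assumed perceptual-roughness remap
    k = (roughness + 1.0) ** 2 / 8.0  # assumed remap for analytic lights
    d = ggx_ndf(n_dot_h, alpha)
    f = fresnel_schlick(v_dot_h, f0)
    g = smith_schlick_ggx(n_dot_v, n_dot_l, k)
    # Clamp the cosines so grazing angles do not divide by zero.
    denom = 4.0 * max(n_dot_l, 1e-4) * max(n_dot_v, 1e-4)
    return d * f * g / denom


def ndf_projected_integral(alpha: float, steps: int = 20000) -> float:
    """Midpoint-rule check that the integral of D(h) (n.h) over the hemisphere is 1."""
    dtheta = (math.pi / 2.0) / steps
    total = 0.0
    for i in range(steps):
        theta = (i + 0.5) * dtheta
        cos_t = math.cos(theta)
        total += ggx_ndf(cos_t, alpha) * cos_t * math.sin(theta) * dtheta
    return 2.0 * math.pi * total  # D is isotropic, so the phi integral is 2*pi


if __name__ == "__main__":
    print(ndf_projected_integral(0.5))  # close to 1.0
    print(cook_torrance_specular(0.8, 0.9, 0.95, 0.9, roughness=0.5, f0=0.04))
```

Because D, F and G each correspond to a physical quantity (microfacet orientation, reflectance, self-shadowing), a single roughness and F0 pair is enough to describe the highlight, which is the “fewer parameters” benefit mentioned in the answer.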

It also includes the necessary changes in the art process to make use of these features. Switching to a physically-based system can cause some upsets for artists. First of all, it requires full HDR lighting with a realistic level of brightness for light sources, the sky, etc., and this can take some getting used to for the lighting artists. It also requires texture/material artists to do some things differently (particularly for specular), and they can be frustrated by the apparent loss of control (e.g. locking together the specular highlight and environment reflection as mentioned above; artists will complain about this). They will need some time and guidance to adapt to the physically-based system.

On the plus side, once artists have adapted and gained trust in the physically-based system, they usually end up liking it better, because there are fewer parameters overall (less work for them to tweak). Also, materials created in one lighting environment generally look fine in other lighting environments too. This is unlike more ad-hoc models, where a set of material parameters might look good during daytime but come out ridiculously glowy at night, or something like that.

Here are some resources to look at for physically-based lighting in games:

SIGGRAPH 2013 Physically Based Shading Course, particularly the background talk by Naty Hoffman at the beginning. You can also check out the previous incarnations of this course for more resources.

Sébastien Lagarde, Adopting a physically-based shading model and Feeding a physically-based shading model

And of course, I would be remiss if I didn’t mention Physically-Based Rendering by Pharr and Humphreys, an amazing reference on this whole subject and well worth your time, although it focuses on offline rather than real-time rendering.