
LATEST POSTS

-

FXGuide – ACES 2.0 with ILM’s Alex Fry

https://draftdocs.acescentral.com/background/whats-new/

ACES 2.0 is the second major release of the components that make up the ACES system. The most significant change is a new suite of rendering transforms whose design was informed by collected feedback and requests from users of ACES 1. The changes aim to reduce perceived artifacts and to complete previously unfinished components of the system, resulting in a more complete, robust, and consistent product.

Highlights of the key changes in ACES 2.0 are as follows:

- New output transforms, including:

  - A less aggressive tone scale

  - More intuitive controls to create custom outputs to non-standard displays

  - Robust gamut mapping to improve perceptual uniformity

  - Improved performance of the inverse transforms

- Enhanced AMF specification

- An updated specification for ACES Transform IDs

- OpenEXR compression recommendations

- Enhanced tools for generating Input Transforms and recommended procedures for characterizing prosumer cameras

- Look Transform Library

- Expanded documentation

Rendering Transform

The most substantial change in ACES 2.0 is a complete redesign of the rendering transform.

ACES 2.0 was built as a unified system, rather than through piecemeal additions. Different deliverable outputs “match” better and making outputs to display setups other than the provided presets is intended to be user-driven. The rendering transforms are less likely to produce undesirable artifacts “out of the box”, which means less time can be spent fixing problematic images and more time making pictures look the way you want.

Key design goals

- Improve consistency of tone scale and provide an easy-to-use parameter to allow for outputs between preset dynamic ranges

- Minimize hue skews across the exposure range in a region of the same hue

- Unify for structural consistency across transform types

- Easy-to-use parameters to create outputs other than the presets

- Robust gamut mapping to reduce harsh clipping artifacts

- Fill extents of output code value cube (where appropriate and expected)

- Invertible – not necessarily reversible, but Output > ACES > Output round-trip should be possible

- Accomplish all of the above while maintaining an acceptable “out-of-the-box” rendering


FEATURED POSTS

-

PixVerse – Prompt, lipsync and extended video generation

https://app.pixverse.ai/onboard

PixVerse now has 3 main features:

– text to video ➡️ How To Generate Videos With Text Prompts

– image to video ➡️ How To Animate Your Images And Bring Them To Life

– upscale ➡️ How to Upscale Your Video

Enhanced Capabilities

– Improved Prompt Understanding: Achieve more accurate prompt interpretation and stunning video dynamics.

– Supports Various Video Ratios: Choose from 16:9, 9:16, 3:4, 4:3, and 1:1 ratios.

– Upgraded Styles: Style functionality returns with options like Anime, Realistic, Clay, and 3D. It supports both text-to-video and image-to-video stylization.

New Features

– Lipsync: The new Lipsync feature enables users to add text or upload audio, and PixVerse will automatically sync the characters’ lip movements in the generated video based on the text or audio.

– Effect: Offers 8 creative effects, including Zombie Transformation, Wizard Hat, Monster Invasion, and other Halloween-themed effects, enabling one-click creativity.

– Extend: Extend the generated video by an additional 5-8 seconds, with control over the content of the extended segment.

-

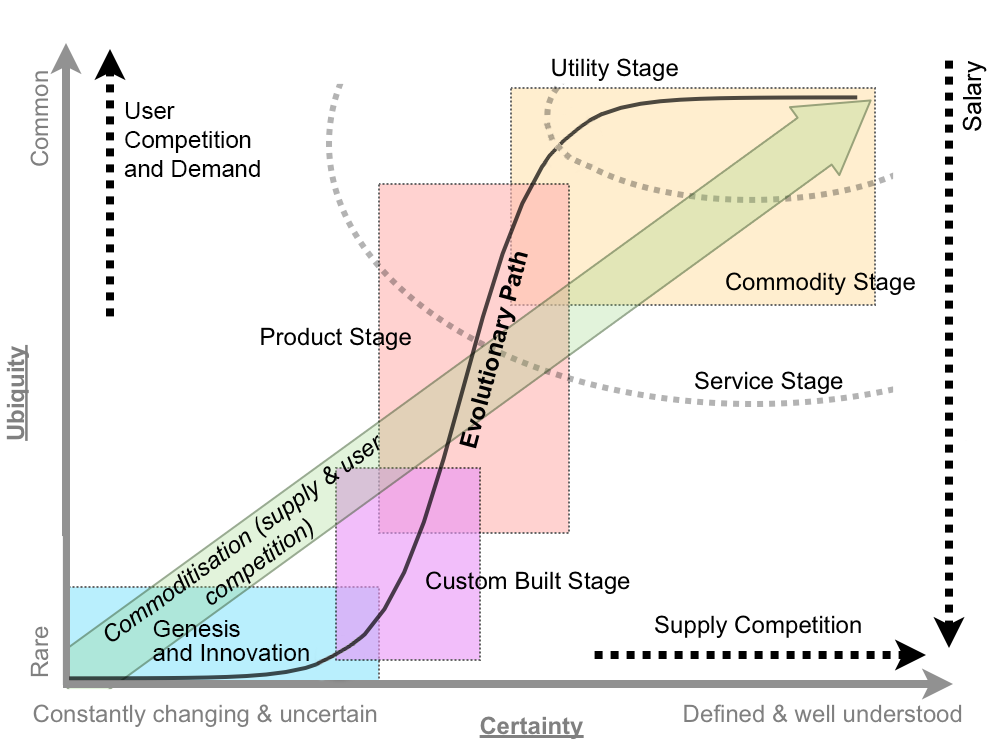

What the Boeing 737 MAX’s crashes can teach us about production business – the effects of commoditisation

Airplane manufacturing is no different from mortgage lending or insulin distribution or make-believe blood analyzing software (or VFX?) —another cash cow for the one percent, bound inexorably for the slaughterhouse.

The beginning of the end was “Boeing’s 1997 acquisition of McDonnell Douglas, a dysfunctional firm with a dilapidated aircraft plant in Long Beach and a CEO (Harry Stonecipher) who liked to use what he called the “Hollywood model” for dealing with engineers: Hire them for a few months when project deadlines are nigh, fire them when you need to make numbers.” And all that came with it. “Stonecipher’s team had driven the last nail in the coffin of McDonnell’s flailing commercial jet business by trying to outsource everything but design, final assembly, and flight testing and sales.”

It is understood, now more than ever, that capitalism does half-assed things like that, especially in concert with computer software and oblivious regulators.

There was something unsettlingly familiar when the world first learned of MCAS in November, about two weeks after the system’s unthinkable stupidity drove the two-month-old plane and all 189 people on it to a horrific death. It smacked of the sort of screwup a 23-year-old intern might have made—and indeed, much of the software on the MAX had been engineered by recent grads of Indian software-coding academies making as little as $9 an hour, part of Boeing management’s endless war on the unions that once represented more than half its employees.

Down in South Carolina, a nonunion Boeing assembly line that opened in 2011 had for years churned out scores of whistle-blower complaints and wrongful termination lawsuits packed with scenes wherein quality-control documents were regularly forged, employees who enforced standards were sabotaged, and planes were routinely delivered to airlines with loose screws, scratched windows, and random debris everywhere.

Shockingly, another piece of the quality failure is that Boeing secured investments from all the airlines, Southwest above all, which guaranteed support for Boeing’s production lines in exchange for fair market prices and favorable treatment. This essentially gave Boeing financial stability regardless of the quality of its product. “Those partnerships were but one numbers-smoothing mechanism in a diversified tool kit Boeing had assembled over the previous generation for making its complex and volatile business more palatable to Wall Street.”

-

Zibra.AI – Real-Time Volumetric Effects in Virtual Production. Now free for Indies!

A New Era for Volumetrics

For a long time, volumetric visual effects were viable only in high-end offline VFX workflows. Large data footprints and poor real-time rendering performance limited their use: most teams simply avoided volumetrics altogether. It’s similar to the early days of online video: limited computational power and low network bandwidth made video content hard to share or stream. Today, of course, we can’t imagine the internet without it, and we believe volumetrics are on a similar path.

With advanced data compression and real-time, GPU-driven decompression, anyone can now bring CGI-class visual effects into Unreal Engine.

From now on, it’s completely free for individual creators!

What does it mean for you?

(more…)

-

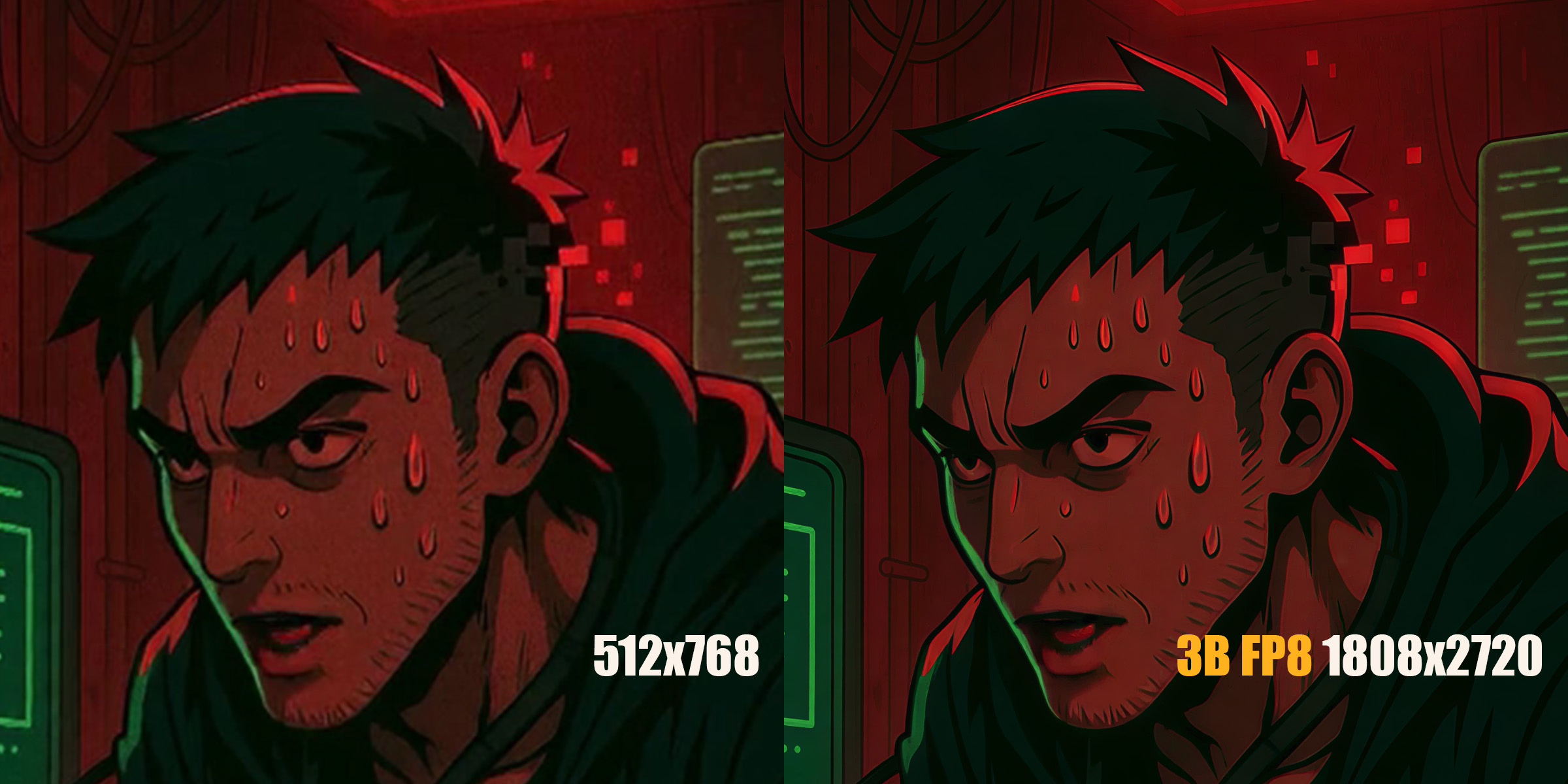

Fast, optimized ‘for’ pixel loops with OpenCV and Python to create tone mapped HDR images

https://pyimagesearch.com/2017/08/28/fast-optimized-for-pixel-loops-with-opencv-and-python/

https://learnopencv.com/exposure-fusion-using-opencv-cpp-python/

Exposure Fusion is a method for combining images taken with different exposure settings into one image that looks like a tone mapped High Dynamic Range (HDR) image.
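To make the idea concrete, here is a minimal pure-Python sketch of the weighting step only. It assumes grayscale images stored as nested lists of floats in [0, 1] and uses a single "well-exposedness" weight (a Gaussian centred on mid-grey, with an assumed sigma of 0.2). The function names and sample pixel values are hypothetical, chosen for illustration. Full exposure fusion as usually described also weighs contrast and saturation and blends across a multi-resolution pyramid, all of which this sketch leaves out.

```python
import math


def well_exposedness(value, sigma=0.2):
    """Gaussian weight that peaks when a pixel is close to mid-grey (0.5)."""
    return math.exp(-((value - 0.5) ** 2) / (2.0 * sigma ** 2))


def fuse_exposures(images, sigma=0.2, eps=1e-12):
    """Per-pixel weighted average of same-sized grayscale images.

    `images` is a list of nested lists (rows of floats in [0, 1]).
    This is a simplified, single-scale blend, not the full algorithm.
    """
    height, width = len(images[0]), len(images[0][0])
    fused = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # eps keeps the normalisation safe if every weight underflows
            weights = [well_exposedness(img[y][x], sigma) + eps for img in images]
            total = sum(weights)
            fused[y][x] = sum(
                w * img[y][x] for w, img in zip(weights, images)
            ) / total
    return fused


# Hypothetical 1x3 bracket: under-, mid- and over-exposed versions of a scene
under = [[0.02, 0.10, 0.45]]
mid = [[0.10, 0.50, 0.90]]
over = [[0.50, 0.95, 1.00]]

result = fuse_exposures([under, mid, over])
print(result)
```

Each output pixel is pulled toward whichever exposure rendered it closest to mid-grey, which is why the result resembles a tone-mapped image without ever building an HDR radiance map. For real images, OpenCV ships a complete implementation via `cv2.createMergeMertens()`, which is likely a better starting point than hand-written pixel loops like the one above.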