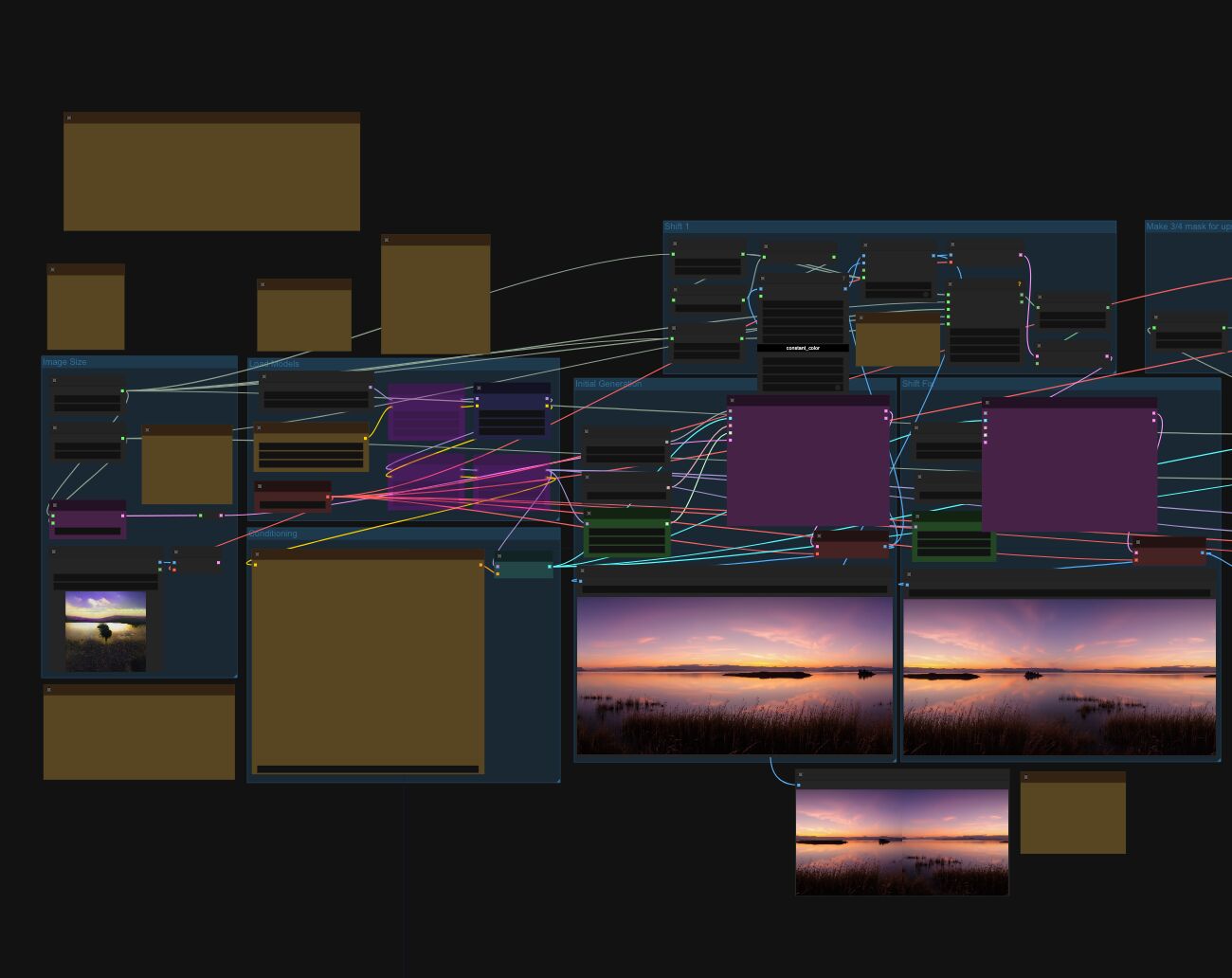

https://www.keheka.com/combining-projections-in-nuke/


Video Projection Tool Software

https://hcgilje.wordpress.com/vpt/

https://www.projectorpoint.co.uk/news/how-bright-should-my-projector-be/

http://www.adwindowscreens.com/the_calculator/

HeavyM

https://heavym.net/en/

MadMapper

https://madmapper.com/

https://www.derivative.ca/088/Applications/

TouchDesigner is a visual development platform that equips you with the tools you need to create stunning realtime projects and rich user experiences.

Whether you’re creating interactive media systems, architectural projections, live music visuals, or simply rapid-prototyping your latest creative impulse, TouchDesigner is the platform that can do it all.

In the increasingly popular technique of mapping projector outputs to real-world objects, TouchDesigner is the tool of choice. With an integrated 3D engine to accurately model and texture real-world objects, completely configurable multiprojector output options, and one of the most powerful realtime graphics engines available, TouchDesigner is ready for the unique requirements of any projection mapping project.

https://civitai.com/models/735980/flux-equirectangular-360-panorama

https://civitai.com/models/745010?modelVersionId=833115

The trigger phrase is “equirectangular 360 degree panorama”. I would avoid saying “spherical projection” since that tends to result in non-equirectangular spherical images.

Image resolution should always be a 2:1 aspect ratio. 1024 x 512 or 1408 x 704 work quite well and were used in the training data. 2048 x 1024 also works.

I suggest using a weight of 0.5 – 1.5. If you are having issues with the image generating too flat instead of having the necessary spherical distortion, try increasing the weight above 1, though this could negatively impact small details of the image. For Flux guidance, I recommend a value of about 2.5 for realistic scenes.
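The 2:1 recommendation comes from the projection itself. An equirectangular image spans 360° of longitude across its width and 180° of latitude across its height, so only a 2:1 frame gives each pixel the same angular size in both directions. Below is a small stdlib sketch for checking a resolution before generating. The helper names are hypothetical and are not part of the LoRA or of Flux.

```python
def degrees_per_pixel(width, height):
    """Return (horizontal, vertical) angular size of one pixel, in degrees,
    for an equirectangular image covering 360 x 180 degrees."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    return 360.0 / width, 180.0 / height


def is_equirectangular_ratio(width, height):
    """True when the frame is exactly 2:1, i.e. pixels are angularly square."""
    return width == 2 * height


for w, h in [(1024, 512), (1408, 704), (2048, 1024), (1024, 1024)]:
    dx, dy = degrees_per_pixel(w, h)
    print(f"{w}x{h}: 2:1={is_equirectangular_ratio(w, h)}, "
          f"{dx:.4f} deg/px horizontal, {dy:.4f} deg/px vertical")
```

A square 1024 x 1024 frame gives pixels twice as tall in angle as they are wide, so the panorama ends up vertically stretched when it is wrapped onto a sphere.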

Output is 8-bit only at the moment.

While in ZBrush, call up your image editing package and use it to modify the active ZBrush document or tool, then go straight back into ZBrush.

ZAppLink can work on different saved points of view for your model. What you paint in your image editor is then projected to the model’s PolyPaint or texture for more creative freedom.

With ZAppLink you can combine ZBrush’s powerful capabilities with all the painting power of the PSD-capable 2D editor of your choice, making it easy to create stunning textures.

At the age of 94, this is what the great Clint Eastwood looks like.

Standing, lucid, brilliant, directing his latest film. Eastwood himself says it: “I don’t let the old man in. I keep myself busy. You have to stay active, alive, happy, strong, capable. I don’t let in the old critic, hostile, envious, gossiping, full of rage and complaints, of lack of courage, which denies to itself that old age can be creative, decisive, full of light and projection. Getting older is not for sissies.”

~Clint Eastwood

https://kartaverse.github.io/Reactor-Docs/#/com.AndrewHazelden.KartaVR

Kartaverse is a free open source post-production pipeline that is optimized for the immersive media sector. If you can imagine it, Kartaverse can help you create it in XR!

“Karta” is the Swedish word for map. With KartaVR you can stitch, composite, retouch, and remap any kind of panoramic video: from any projection to any projection. This provides the essential tools for 360VR, panoramic video stitching, depthmap, lightfield, and fulldome image editing workflows.

Kartaverse makes it easy to create content accessibly and affordably for virtual reality HMDs (head-mounted displays) and fulldome theatres by providing ready-to-go scripts, templates, plugins, and command-line tools that let you work efficiently with XR media. The toolset works inside Blackmagic Design’s powerful node-based Fusion Studio and DaVinci Resolve Studio software.
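Projection-to-projection remapping is usually done as an inverse mapping. For each output pixel, you build a viewing ray, convert that ray to longitude and latitude, and then sample the source panorama at that position. The stdlib sketch below extracts a rectilinear (pinhole) view from an equirectangular image. It rests on several assumptions of our own: the axis conventions, a yaw-only camera (no pitch or roll), and nearest-neighbour sampling. The function names are hypothetical and are not part of KartaVR or Fusion.

```python
import math


def rectilinear_to_equirect(x, y, out_w, out_h, hfov_deg, eq_w, eq_h, yaw_deg=0.0):
    """Map output pixel (x, y) of a pinhole view to a source (u, v) position in an
    equirectangular image. Assumed conventions: +X right, +Y down, +Z forward;
    longitude 0 is the panorama centre; positive yaw turns the view to the right."""
    f = (out_w / 2) / math.tan(math.radians(hfov_deg) / 2)  # focal length in pixels
    # Ray through the pixel centre, in camera space.
    cx = x + 0.5 - out_w / 2
    cy = y + 0.5 - out_h / 2
    cz = f
    # Rotate the ray about the vertical axis by the yaw angle.
    yaw = math.radians(yaw_deg)
    rx = cx * math.cos(yaw) + cz * math.sin(yaw)
    rz = -cx * math.sin(yaw) + cz * math.cos(yaw)
    lon = math.atan2(rx, rz)                   # -pi..pi, 0 = straight ahead
    lat = -math.atan2(cy, math.hypot(rx, rz))  # +pi/2 = straight up
    u = (lon / (2 * math.pi) + 0.5) * eq_w
    v = (0.5 - lat / math.pi) * eq_h
    return u, v


def extract_view(equi, out_w, out_h, hfov_deg, yaw_deg=0.0):
    """Nearest-neighbour resample of an equirectangular image stored as a list of rows."""
    eq_h, eq_w = len(equi), len(equi[0])
    view = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            u, v = rectilinear_to_equirect(x, y, out_w, out_h, hfov_deg, eq_w, eq_h, yaw_deg)
            sx = int(u) % eq_w                   # longitude wraps around
            sy = min(max(int(v), 0), eq_h - 1)   # latitude clamps at the poles
            row.append(equi[sy][sx])
        view.append(row)
    return view
```

Other targets, such as cube faces or fisheye, differ only in how each output pixel is turned into a ray. Production tools also handle pitch and roll and use bilinear or better filtering.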

https://www.statsignificant.com/p/the-broken-economics-of-streaming

This report examines the financial instability in the streaming industry, focusing on the unsustainable economic models of platforms such as Paramount Plus.


Hollywood’s Top TV Execs Are Happy About The Death Of Peak TV – Here’s Why

https://www.slashfilm.com/1593571/peak-tv-dead-hollywood-top-tv-execs-happy/


https://www.nature.com/articles/d41586-024-01518-2

The prevalence of myopia is increasing rapidly, with projections indicating that by 2050 around half of the global population could be affected. This surge is largely attributed to lifestyle changes, such as more time spent indoors and on screens and less time outdoors, a trend worsened by the Covid lockdowns.

To combat this epidemic, researchers are advocating for more outdoor exposure for children, as natural light is beneficial in slowing the progression of myopia. They also emphasize the importance of regular eye check-ups and early interventions. Additionally, innovative treatments such as specially designed contact lenses and low-dose atropine eye drops are being explored to manage and reduce the progression of myopia.

“These companies chose to blame thousands of people for a problem that was specifically created by their execs. The same people writing crocodile-tear-stained layoff letters are the same ones responsible for unrealistic projections, unrealistic spending and unrealistic hiring.”

“With corporate leaders having incentives not to benefit stakeholders at shareholder expense, delegating the guardianship of stakeholder interests to corporate leaders would prove futile. The promise of pluralistic stakeholderism is illusory.”

The Big Tech and big fintech companies aren’t worried about letting people go because when the next up-cycle hits they’ll pay top dollar (of course). Just as importantly, these people can (nearly) seamlessly “plug and play” into the tech environment at any of these companies.

“They use the same communication tools, the same programming tools, the same everything. There’s no (or hardly any) onboarding and training time required to get someone up to speed.”

“Without the need to spend months (weeks at the least) and untold dollars on training new employees, technology firms are emboldened to just let people go when it’s expedient for them.”

https://www.marcelpichert.com/post/12-toolsets-for-a-smarter-and-faster-comp-workflow

http://www.nukepedia.com/miscellaneous/m_toolsets

Efficient-Workflow Toolsets:

– degrain

– prerender

– concatenation

Keying Toolsets:

– IBK stacker

– Keying Setup Basic

– Keying Setup Plus

Projection Toolsets:

– uv project

– project warp

– project shadow

Mini Toolsets:

– rotate normals

– clamp saturation

– check comp

https://www.freecodecamp.org/news/advanced-computer-vision-with-python

https://www.freecodecamp.org/news/how-to-use-opencv-and-python-for-computer-vision-and-ai

Working for a VFX (Visual Effects) studio provides numerous opportunities to leverage the power of Python and OpenCV for various tasks. OpenCV is a versatile computer vision library that can be applied to many aspects of the VFX pipeline. Here’s a detailed list of opportunities to take advantage of Python and OpenCV in a VFX studio:

Interpolating frames from an EXR sequence using OpenCV can be useful when you have only every second frame of a final render and you want to create smoother motion by generating intermediate frames. However, keep in mind that interpolating frames might not always yield perfect results, especially if there are complex changes between frames. Here’s a basic example of how you might use OpenCV to achieve this:

```python
import os

# Recent OpenCV builds disable the EXR codec unless this variable is set
# before cv2 is imported.
os.environ["OPENCV_IO_ENABLE_OPENEXR"] = "1"

import cv2
import numpy as np

# Replace with the path to your EXR frames
exr_folder = "path_to_exr_frames"

# Replace with the appropriate frame extension and naming convention
frame_template = "frame_{:04d}.exr"

# Range of rendered frame numbers (only every second frame was rendered)
start_frame = 1
end_frame = 100
step = 2

# Output folder for interpolated frames
output_folder = "output_interpolated_frames"
os.makedirs(output_folder, exist_ok=True)

# Loop over rendered frames and build the missing frame between each pair
for frame_num in range(start_frame, end_frame + 1, step):
    frame_path = os.path.join(exr_folder, frame_template.format(frame_num))
    next_frame_path = os.path.join(exr_folder, frame_template.format(frame_num + step))

    if os.path.exists(frame_path) and os.path.exists(next_frame_path):
        # ANYDEPTH keeps the float data; COLOR loads 3 channels
        frame = cv2.imread(frame_path, cv2.IMREAD_ANYDEPTH | cv2.IMREAD_COLOR)
        next_frame = cv2.imread(next_frame_path, cv2.IMREAD_ANYDEPTH | cv2.IMREAD_COLOR)
        if frame is None or next_frame is None:
            print(f"Could not read frame {frame_num} or {frame_num + step}")
            continue

        # Interpolate using simple averaging (float32 avoids integer overflow)
        interpolated_frame = (frame.astype(np.float32) + next_frame.astype(np.float32)) / 2

        # Name the result after the missing in-between frame, not the source frame
        mid_frame = frame_num + step // 2
        output_path = os.path.join(output_folder, frame_template.format(mid_frame))
        cv2.imwrite(output_path, interpolated_frame)

        print(f"Interpolated frame {mid_frame}")
```
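Plain averaging assumes exactly one missing frame between each pair of rendered frames. If the gap is larger, each missing frame m between rendered frames a and b needs its own weight, t = (m - a) / (b - a), in a linear blend. The sketch below builds that plan using only the standard library. The helper name is hypothetical.

```python
def interpolation_plan(rendered):
    """For each missing frame between consecutive rendered frames, return
    (missing, prev, next, t), where the blend is (1 - t) * prev + t * next."""
    rendered = sorted(rendered)
    plan = []
    for a, b in zip(rendered, rendered[1:]):
        for m in range(a + 1, b):
            t = (m - a) / (b - a)
            plan.append((m, a, b, t))
    return plan


print(interpolation_plan([1, 3, 5]))  # [(2, 1, 3, 0.5), (4, 3, 5, 0.5)]
print(interpolation_plan([1, 4]))     # two frames, weights 1/3 and 2/3
```

With OpenCV, each tuple corresponds to `cv2.addWeighted(prev_img, 1 - t, next_img, t, 0)`. A linear blend only cross-fades between frames, so moving objects will ghost. Optical-flow-based interpolation, for example `cv2.calcOpticalFlowFarneback` followed by `cv2.remap`, usually handles motion better.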

Please note the following points:

https://helpx.adobe.com/after-effects/using/preparing-importing-3d-image-files.html

Using Video Copilot’s Element 3D

https://www.youtube.com/watch?v=K--8k4zpW54

www.videocopilot.net/docs/element/

Projection mapping in Element 3D

https://www.videocopilot.net/forum/viewtopic.php?f=42&t=129682

Using Blender

Using Cinema 4D


https://helpx.adobe.com/ca/after-effects/using/c4d.html

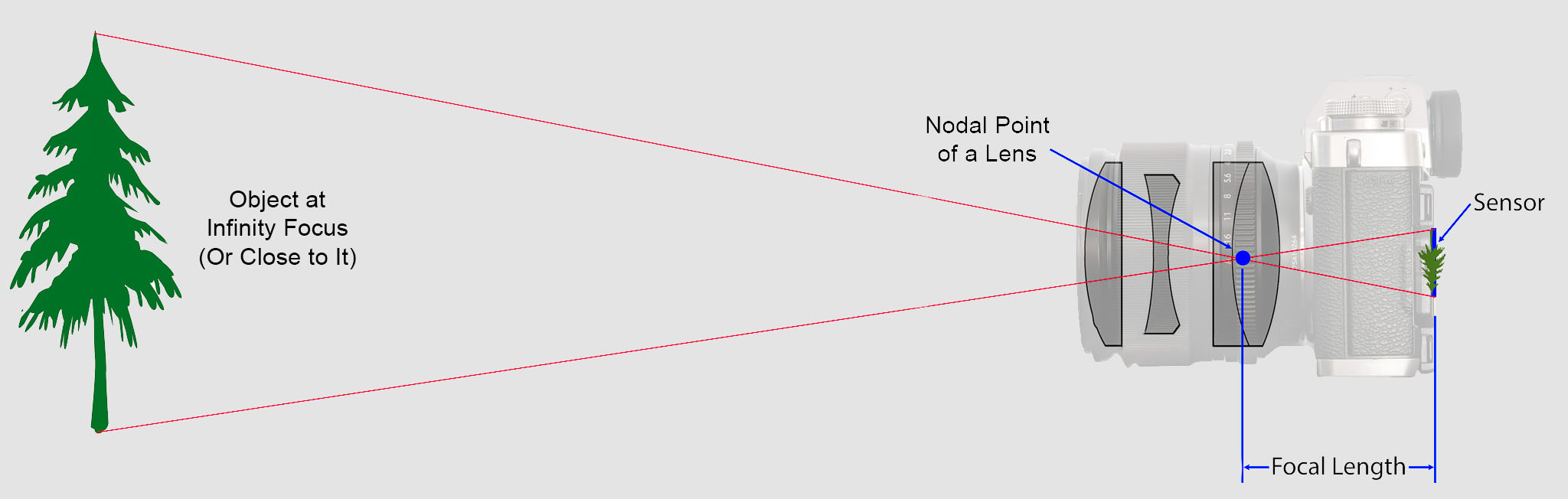

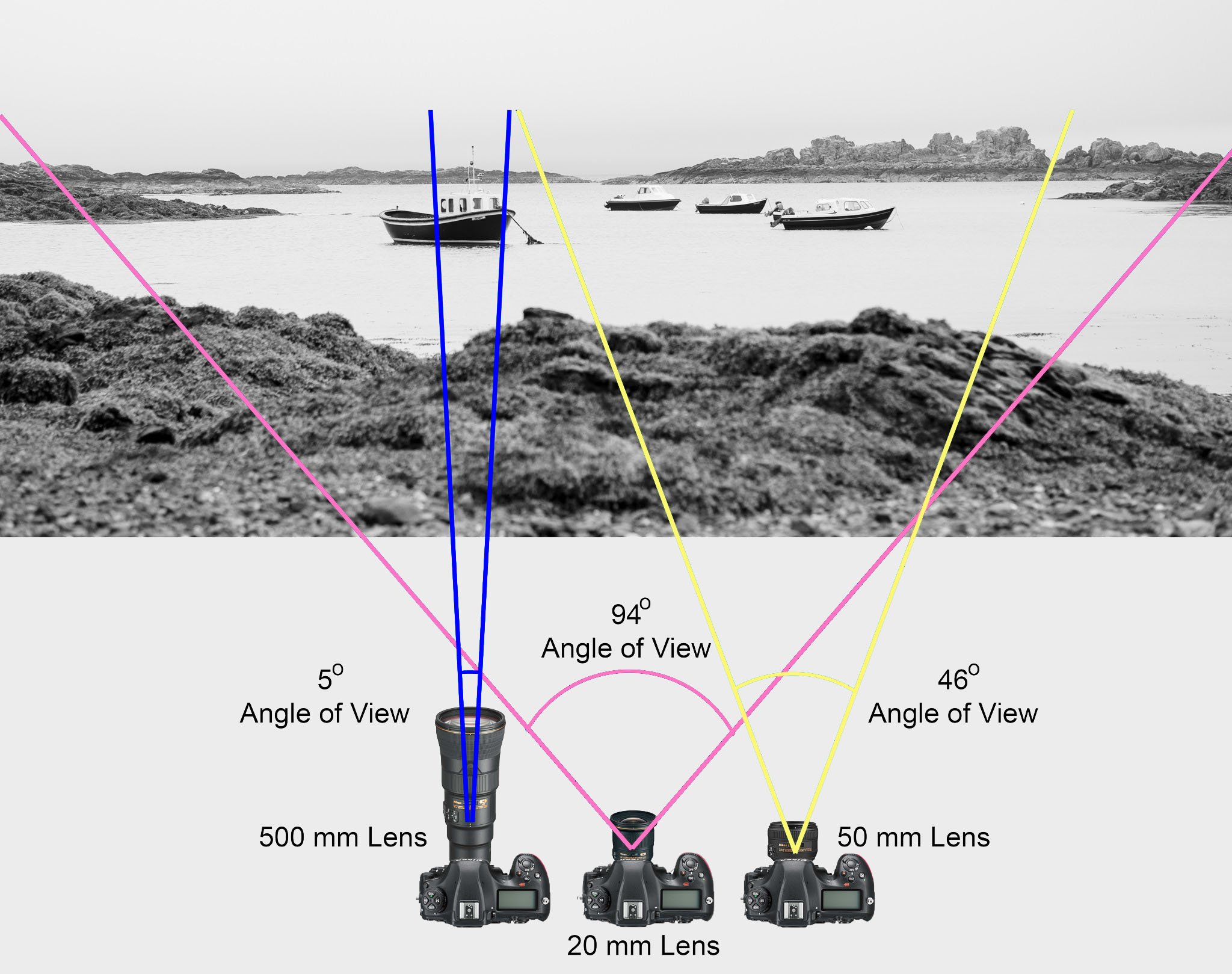

https://en.wikipedia.org/wiki/Focal_length

The focal length of an optical system is a measure of how strongly the system converges or diverges light.

Without getting into an in-depth physics discussion, the focal length of a lens is an optical property of the lens.

More precisely, focal length is the distance, usually given in millimeters, between the lens’s rear principal point (often called the “nodal point”) and the camera’s sensor when the lens is focused at infinity.

Lenses are named by their focal length. You can find this information on the barrel of the lens, and almost every camera lens ever made will prominently display the focal length. For example, a 50mm lens has a focal length of 50 millimeters.

In most photography and all telescopy, where the subject is essentially infinitely far away, a longer focal length (lower optical power) leads to higher magnification and a narrower angle of view.

Conversely, shorter focal length or higher optical power is associated with lower magnification and a wider angle of view.

On the other hand, in applications such as microscopy in which magnification is achieved by bringing the object close to the lens, a shorter focal length (higher optical power) leads to higher magnification because the subject can be brought closer to the center of projection.

Focal length is important because it determines the field of view of a lens, that is, how much of the scene you’ll capture. It also determines how large or small a subject at a given distance will appear in your photo.
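The link between focal length and angle of view can be made concrete. For a rectilinear lens focused at infinity, the angle of view across a sensor dimension d is 2·atan(d / 2f). The short sketch below assumes a 36 mm full-frame sensor width by default. The helper name is ours, not from any library.

```python
import math


def angle_of_view(focal_mm, sensor_mm=36.0):
    """Angle of view in degrees across one sensor dimension (default: full-frame
    width) for a rectilinear lens focused at infinity: 2 * atan(d / (2 * f))."""
    return math.degrees(2 * math.atan(sensor_mm / (2 * focal_mm)))


for f in (24, 50, 200):
    print(f"{f}mm: {angle_of_view(f):.1f} deg horizontal on a 36 mm-wide sensor")
```

On a full-frame sensor, a 50mm lens covers roughly 40° horizontally. A 24mm lens covers about 74°, and a 200mm lens about 10°, which illustrates how a shorter focal length widens the view.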


DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.