
Category: quotes

-

Jeffrey Ian Wilson – The Hidden Risks of Using ChatGPT and Anonymous AI Tools in non-secured Confidential Workflows Outside Proper Production Pipelines

https://www.linkedin.com/pulse/hidden-risks-using-chatgpt-anonymous-ai-tools-workflows-wilson-govcc

What You Can Do Today

If you’re serious about protecting your IP, client relationships, and professional credibility, you need to stop treating generative AI tools like consumer-grade apps. This isn’t about fear; it’s about operational discipline. Below are immediate steps you can take to reduce your exposure and stay in control of your creative pipeline.

- Use ChatGPT via the API, not the public app, for any sensitive data.

- Isolate ComfyUI to a sandboxed VM, Docker container, or offline machine.

- Audit every custom node; don’t blindly trust GitHub links or ComfyUI workflows.

- Educate your team: a single mistake can leak an unreleased game asset, a feature film script, or trade secrets.

- Open source does not mean secure.
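
As one way to start on "audit every custom node", here is a minimal Python sketch, not an official ComfyUI tool: it statically scans a folder of node source files and flags calls and imports that deserve a manual read. The `RISKY_CALLS` and `RISKY_IMPORTS` sets are illustrative assumptions, and a clean report does not prove a node is safe, since obfuscated code can slip past a simple scan like this.

```python
import ast
from pathlib import Path

# Illustrative assumption: calls and modules worth a manual look in
# third-party node code. Not an exhaustive or official blocklist.
RISKY_CALLS = {"eval", "exec", "compile", "__import__", "os.system", "os.popen",
               "subprocess.run", "subprocess.Popen", "subprocess.call",
               "subprocess.check_output"}
RISKY_IMPORTS = {"socket", "requests", "urllib", "http", "ftplib", "subprocess"}


def dotted_name(node: ast.AST) -> str:
    """Return 'a.b.c' for Name/Attribute chains, '' for anything else."""
    if isinstance(node, ast.Name):
        return node.id
    if isinstance(node, ast.Attribute):
        base = dotted_name(node.value)
        return f"{base}.{node.attr}" if base else ""
    return ""


def audit_source(source: str, filename: str = "<string>") -> list[str]:
    """List risky calls and imports found in one Python source string."""
    findings = []
    for node in ast.walk(ast.parse(source, filename=filename)):
        if isinstance(node, ast.Call):
            name = dotted_name(node.func)
            if name in RISKY_CALLS:
                findings.append(f"{filename}:{node.lineno}: call to {name}")
        elif isinstance(node, ast.Import):
            for alias in node.names:
                if alias.name.split(".")[0] in RISKY_IMPORTS:
                    findings.append(f"{filename}:{node.lineno}: import {alias.name}")
        elif isinstance(node, ast.ImportFrom) and node.module:
            if node.module.split(".")[0] in RISKY_IMPORTS:
                findings.append(f"{filename}:{node.lineno}: from {node.module} import ...")
    return findings


def audit_directory(root: str) -> list[str]:
    """Scan every .py file under root; unparseable files are reported too."""
    findings = []
    for path in sorted(Path(root).rglob("*.py")):
        try:
            findings += audit_source(path.read_text(encoding="utf-8"), str(path))
        except (SyntaxError, UnicodeDecodeError) as exc:
            findings.append(f"{path}: could not parse ({exc.__class__.__name__})")
    return findings
```

For example, `audit_directory("ComfyUI/custom_nodes")` (the path is an assumption about your install) run before enabling a new node pack; treat each hit as a prompt to read that code, not as a verdict.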

-

TED 2025 Rob Bredow – Artist-Driven Innovation in the Age of AI

https://robbredow.com/2025/05/ted-artist-driven-innovation/

https://www.ted.com/talks/rob_bredow_star_wars_changed_visual_effects_ai_is_doing_it_again

Rob Bredow speaks at SESSION 3 at TED 2025: Humanity Reimagined. April 7-11, 2025, Vancouver, BC. Photo: Gilberto Tadday / TED

-

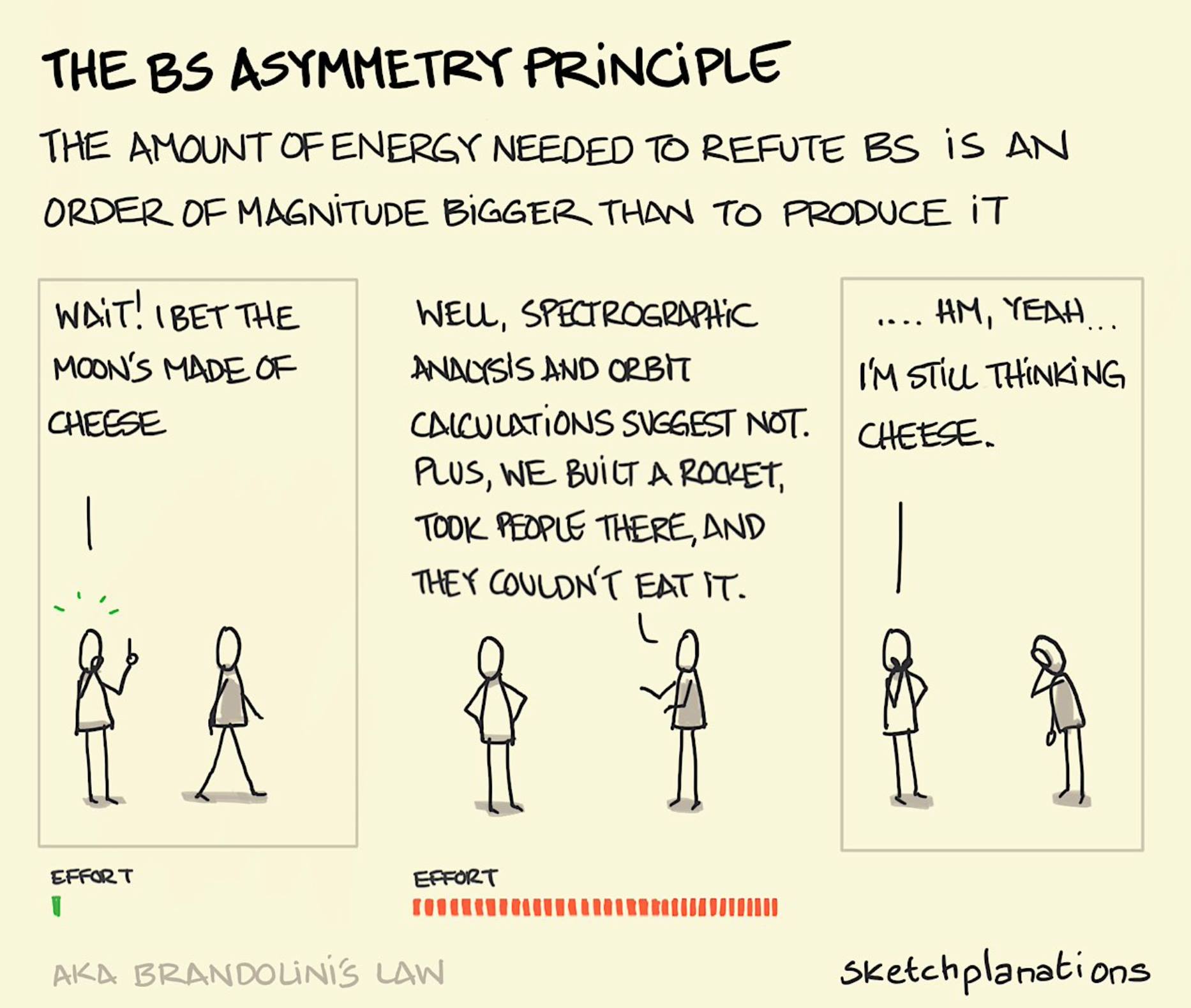

Mars Lewis on Brandolini’s Law

Brandolini’s law (or the bullshit asymmetry principle) is an internet adage coined in 2013 by Italian programmer Alberto Brandolini. It compares the considerable effort of debunking misinformation to the relative ease of creating it in the first place.

The law states: “The amount of energy needed to refute bullshit is an order of magnitude bigger than to produce it.”

https://en.wikipedia.org/wiki/Brandolini%27s_law

This is why every time you kill a lie, it feels like nothing changed. It’s why no matter how many facts you post, how many sources you cite, how many receipts you show—the swarm just keeps coming. Because while you’re out in the open doing surgery, the machine is behind the curtain spraying aerosol deceit into every vent.

The lie takes ten seconds. The truth takes ten paragraphs. And by the time you’ve written the tenth, the people you’re trying to reach have already scrolled past.

Every viral deception—the fake quote, the rigged video, the synthetic outrage—takes almost nothing to create. And once it’s out there, you’re not just correcting a fact—you’re prying it out of someone’s identity. Because people don’t adopt lies just for information. They adopt them for belonging. The lie becomes part of who they are, and your correction becomes an attack.

And still—you must correct it. Still, you must fight.

Because even if truth doesn’t spread as fast, it roots deeper. Even if it doesn’t go viral, it endures. And eventually, it makes people bulletproof to the next wave of narrative sewage.

You’re not here to win a one-day war. You’re here to outlast a never-ending invasion.

The lies are roaches. You kill one, and a hundred more scramble behind the drywall. The lies are Hydra heads. You cut one off, and two grow back. But you keep swinging anyway.

Because this isn’t about instant wins. It’s about making the cost of lying higher. It’s about being the resistance that doesn’t fold. You don’t fight because it’s easy. You fight because it’s right.

-

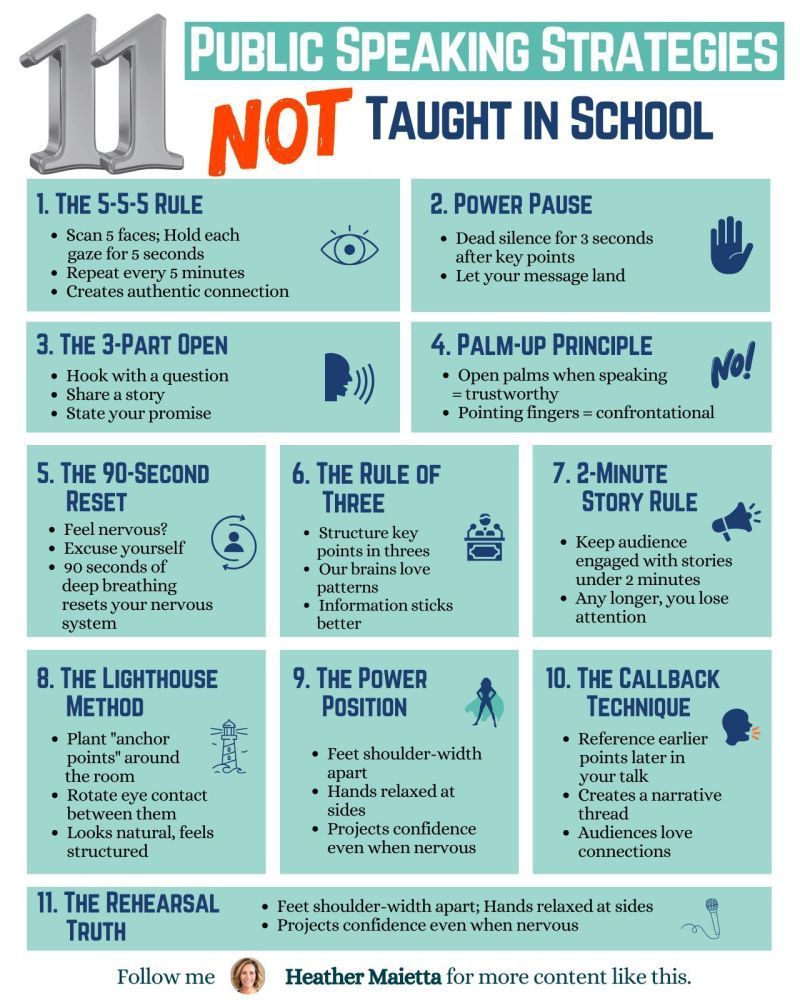

11 Public Speaking Strategies

What do people report as their #1 greatest fear?

It’s not death…

It’s public speaking.

Glossophobia, the fear of public speaking, has been a daunting obstacle for me for years.

11 confidence-boosting tips

1/ The 5-5-5 Rule

→ Scan 5 faces; Hold each gaze for 5 seconds.

→ Repeat every 5 minutes.

→ Creates an authentic connection.

2/ Power Pause

→ Dead silence for 3 seconds after key points.

→ Let your message land.

3/ The 3-Part Open

→ Hook with a question.

→ Share a story.

→ State your promise.

4/ Palm-Up Principle

→ Open palms when speaking = trustworthy.

→ Pointing fingers = confrontational.

5/ The 90-Second Reset

→ Feel nervous? Excuse yourself.

→ 90 seconds of deep breathing resets your nervous system.

6/ Rule of Three

→ Structure key points in threes.

→ Our brains love patterns.

7/ 2-Minute Story Rule

→ Keep stories under 2 minutes.

→ Any longer, you lose them.

8/ The Lighthouse Method

→ Plant “anchor points” around the room.

→ Rotate eye contact between them.

→ Looks natural, feels structured.

9/ The Power Position

→ Feet shoulder-width apart.

→ Hands relaxed at sides.

→ Projects confidence even when nervous.

10/ The Callback Technique

→ Reference earlier points later in your talk.

→ Creates a narrative thread.

→ Audiences love connections.

11/ The Rehearsal Truth

→ Practice the opening 3x more than the rest.

→ Nail the first 30 seconds; you’ll nail the talk.

-

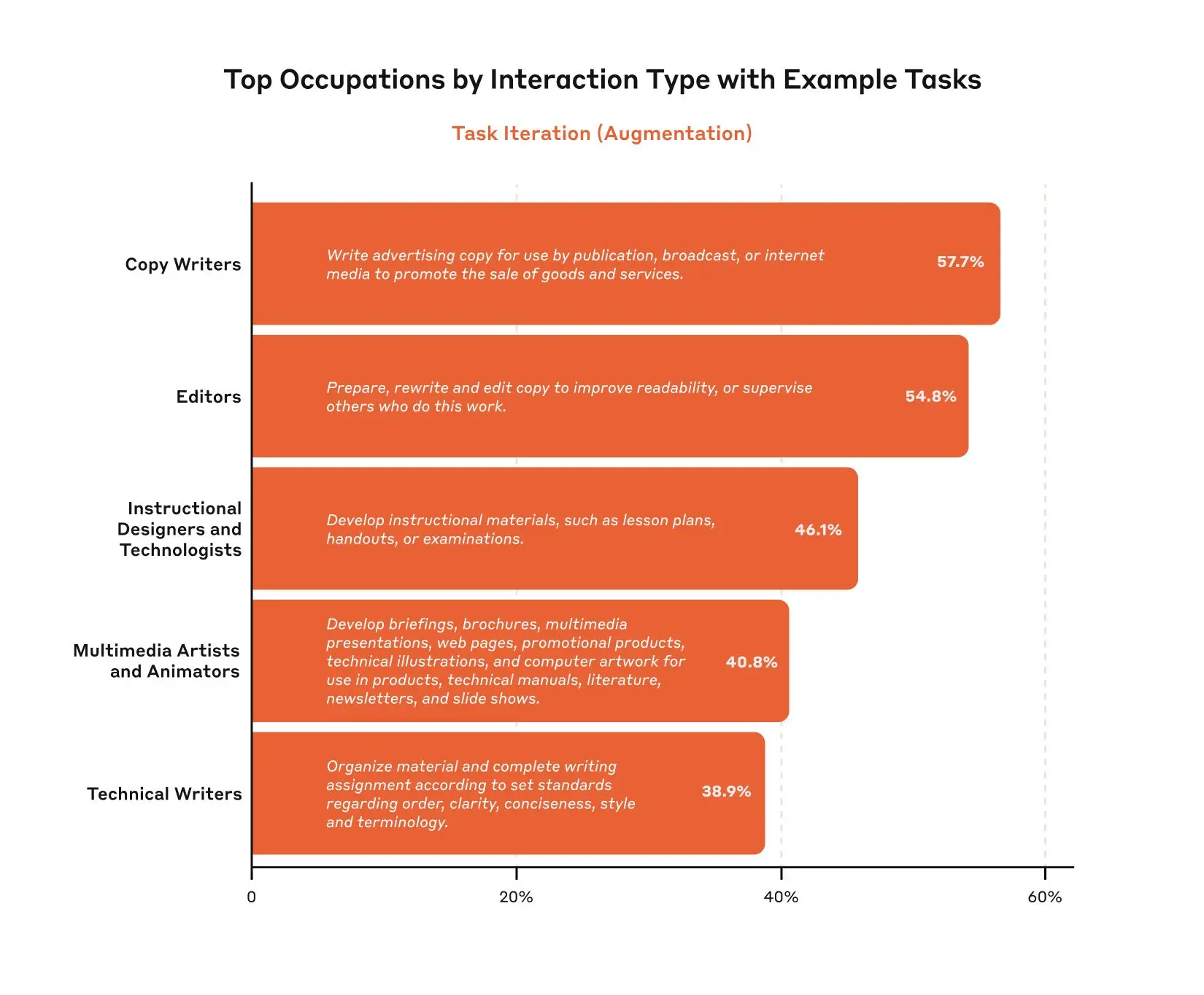

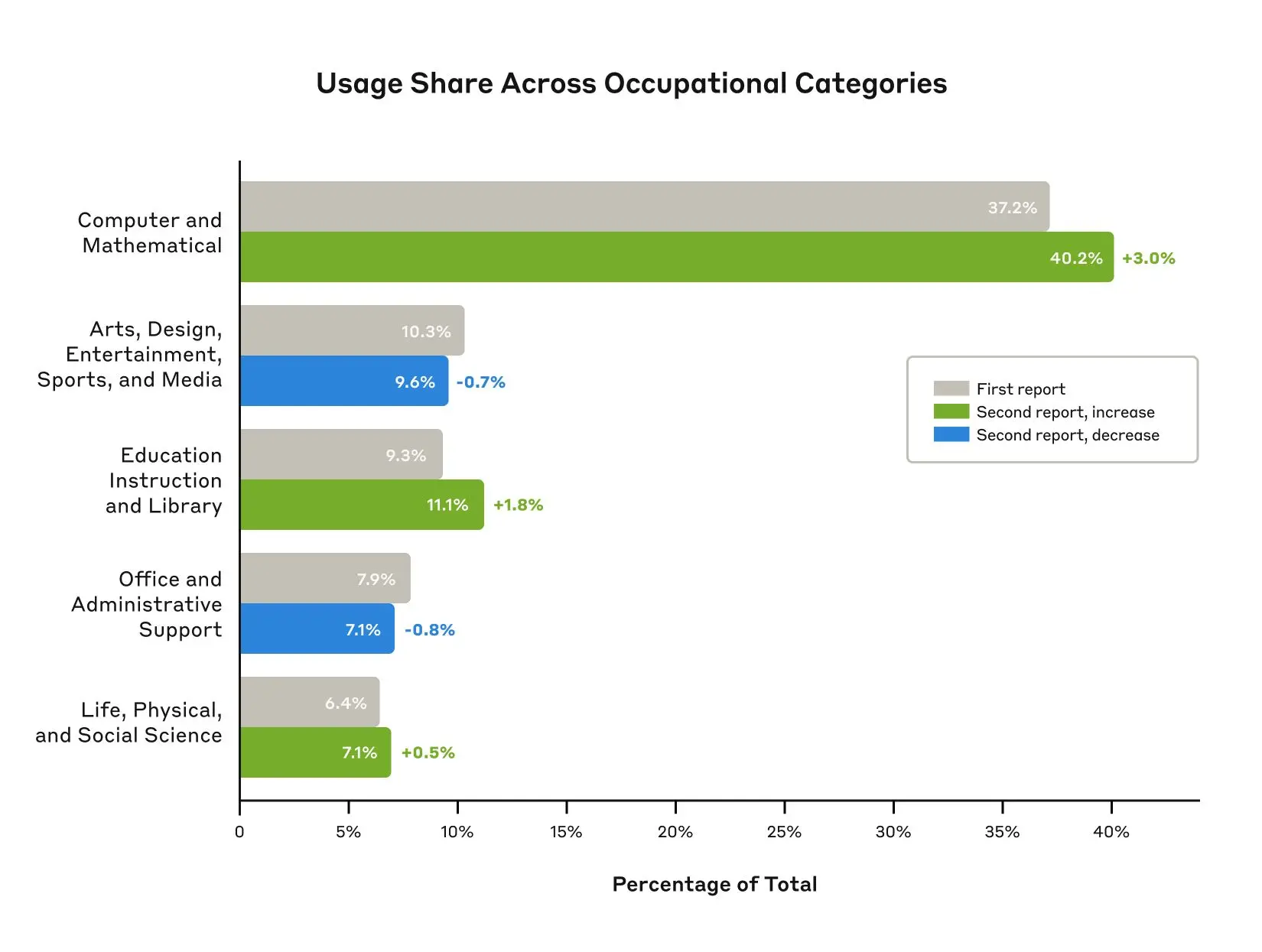

Anthropic Economic Index – Insights from Claude 3.7 Sonnet on AI future prediction

https://www.anthropic.com/news/anthropic-economic-index-insights-from-claude-sonnet-3-7

As models continue to advance, so too must our measurement of their economic impacts. In our second report, covering data since the launch of Claude 3.7 Sonnet, we find relatively modest increases in coding, education, and scientific use cases, and no change in the balance of augmentation and automation. We find that Claude’s new extended thinking mode is used with the highest frequency in technical domains and tasks, and identify automation / augmentation patterns across tasks and occupations. We release datasets for both of these analyses.

-

The Diary Of A CEO – A talk with Dr. Bessel van der Kolk, described as “perhaps the most influential psychiatrist of the 21st century”

– Why are traumatic memories not like normal memories?

– What it was like working in a mental asylum.

– Does childhood trauma impact us permanently?

– Can yoga reverse deep past trauma?

-

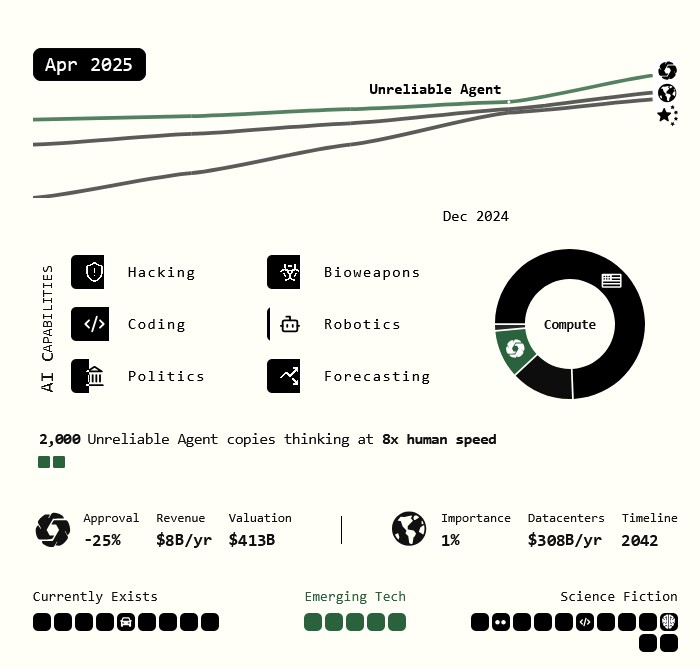

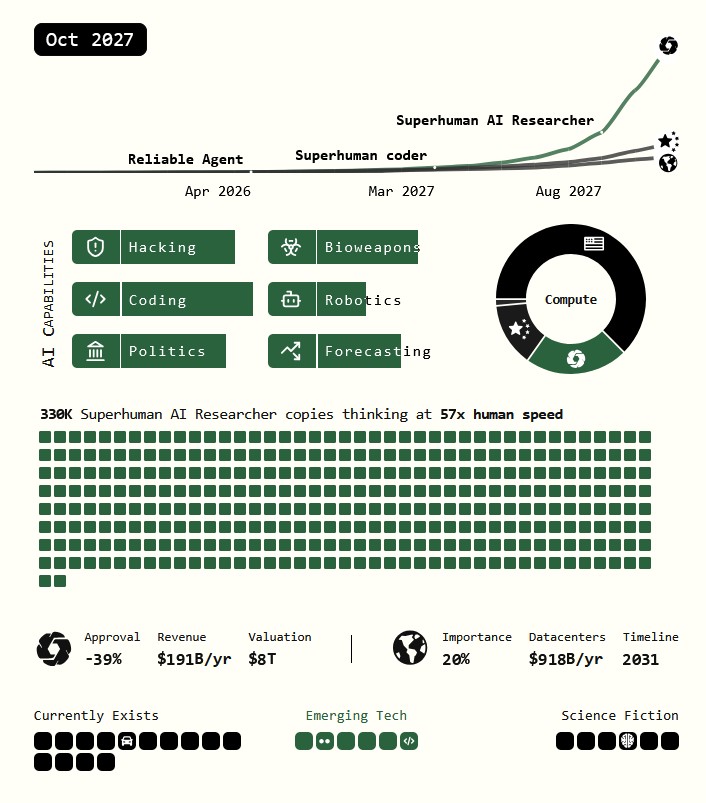

AI 2027 – Predicting the impact of superhuman AI over the next decade

We predict that the impact of superhuman AI over the next decade will be enormous, exceeding that of the Industrial Revolution.

We wrote a scenario that represents our best guess about what that might look like. It’s informed by trend extrapolations, wargames, expert feedback, experience at OpenAI, and previous forecasting successes.

-

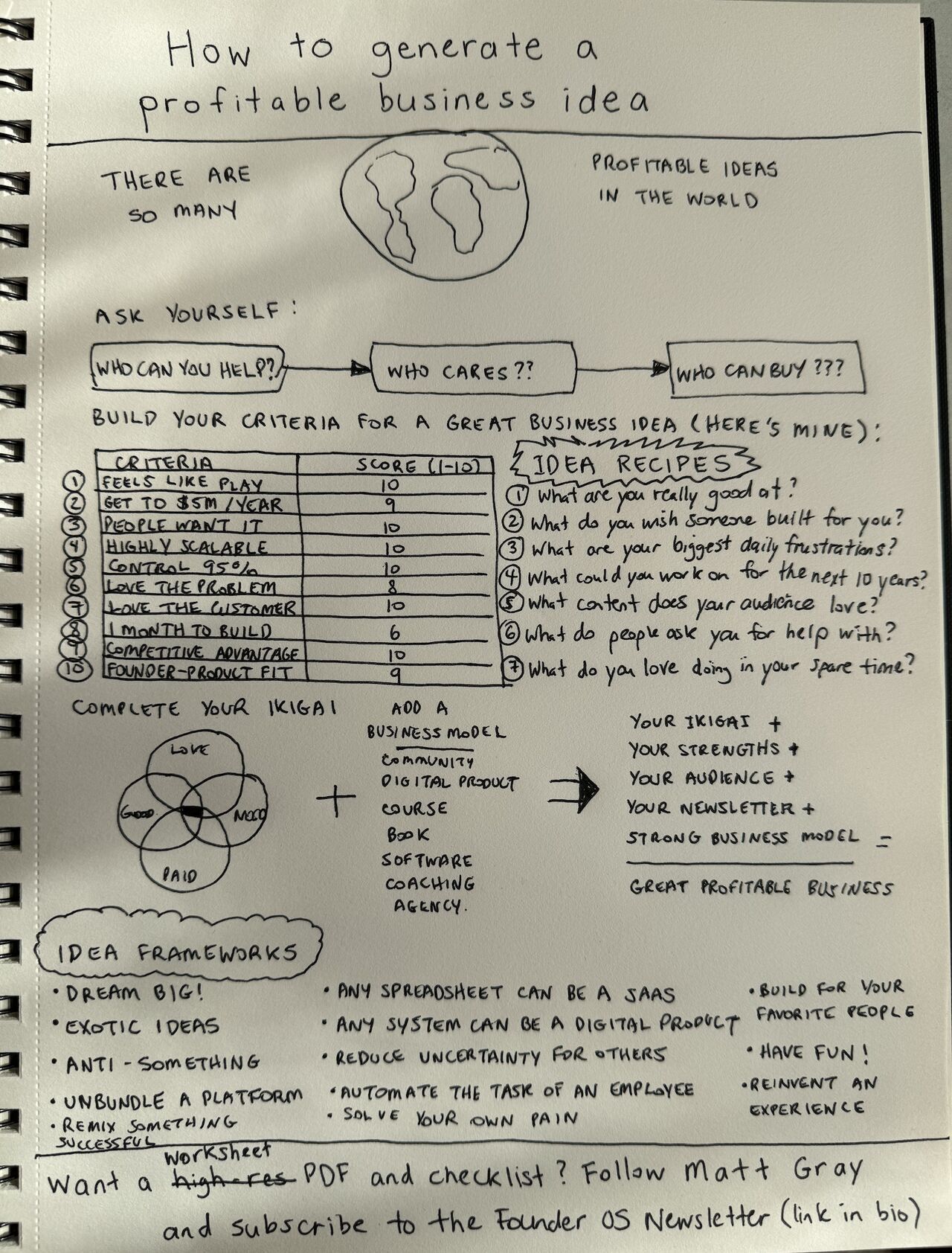

Matt Gray – How to generate a profitable business

In the last 10 years, over 1,000 people have asked me how to start a business. The truth? They’re all paralyzed by limiting beliefs. What they are and how to break them today:

Before we get into the How, let’s first unpack why people think they can’t start a business.

Here are the biggest reasons I’ve found:

-

QNTM – Developer Philosophy

- Avoid, at all costs, arriving at a scenario where the ground-up rewrite starts to look attractive

- Aim to be 90% done in 50% of the available time

- Automate good practice

- Think about pathological data

- There is usually a simpler way to write it

- Write code to be testable

- It is insufficient for code to be provably correct; it should be obviously, visibly, trivially correct
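
To make two of these points concrete, "think about pathological data" and "write code to be testable", here is a small Python illustration of our own (not from QNTM's essay): a pure `median` function that decides explicitly what happens on empty input, NaN values and even-length input, so each case can be pinned down by a one-line check.

```python
import math
from typing import Sequence


def median(values: Sequence[float]) -> float:
    """Median of a sequence, with pathological inputs handled explicitly."""
    if len(values) == 0:
        # Empty input has no median: fail loudly rather than invent a 0.
        raise ValueError("median() of empty sequence")
    if any(math.isnan(v) for v in values):
        # NaN breaks ordering, so sorting would give a meaningless answer.
        raise ValueError("median() input contains NaN")
    ordered = sorted(values)  # sorted() copies, so the caller's data is untouched
    mid = len(ordered) // 2
    if len(ordered) % 2 == 1:
        return float(ordered[mid])
    # Even length: average the two middle values.
    return (ordered[mid - 1] + ordered[mid]) / 2
```

Because the function neither mutates its argument nor depends on global state, checks such as `median([4, 1, 3, 2]) == 2.5` stay trivial to write, which is one reading of "obviously, visibly, trivially correct".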

-

The AI-Copyright Trap document by Carys Craig

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4905118

“There are many good reasons to be concerned about the rise of generative AI(…). Unfortunately, there are also many good reasons to be concerned about copyright’s growing prevalence in the policy discourse around AI’s regulation. Insisting that copyright protects an exclusive right to use materials for text and data mining practices (whether for informational analysis or machine learning to train generative AI models) is likely to do more harm than good. As many others have explained, imposing copyright constraints will certainly limit competition in the AI industry, creating cost-prohibitive barriers to quality data and ensuring that only the most powerful players have the means to build the best AI tools (provoking all of the usual monopoly concerns that accompany this kind of market reality but arguably on a greater scale than ever before). It will not, however, prevent the continued development and widespread use of generative AI.”

…

“(…) As Michal Shur-Ofry has explained, the technical traits of generative AI already mean that its outputs will tend towards the dominant, likely reflecting ‘a relatively narrow, mainstream view, prioritizing the popular and conventional over diverse contents and narratives.’ Perhaps, then, if the political goal is to push for equality, participation, and representation in the AI age, critics’ demands should focus not on exclusivity but inclusivity. If we want to encourage the development of ethical and responsible AI, maybe we should be asking what kind of material and training data must be included in the inputs and outputs of AI to advance that goal. Certainly, relying on copyright and the market to dictate what is in and what is out is unlikely to advance a public interest or equality-oriented agenda.”

…

“If copyright is not the solution, however, it might reasonably be asked: what is? The first step to answering that question—to producing a purposively sound prescription and evidence-based prognosis, is to correctly diagnose the problem. If, as I have argued, the problem is not that AI models are being trained on copyright works without their owners’ consent, then requiring copyright owners’ consent and/or compensation for the use of their work in AI-training datasets is not the appropriate solution. (…) If the only real copyright problem is that the outputs of generative AI may be substantially similar to specific human-authored and copyright-protected works, then copyright law as we know it already provides the solution.”

-

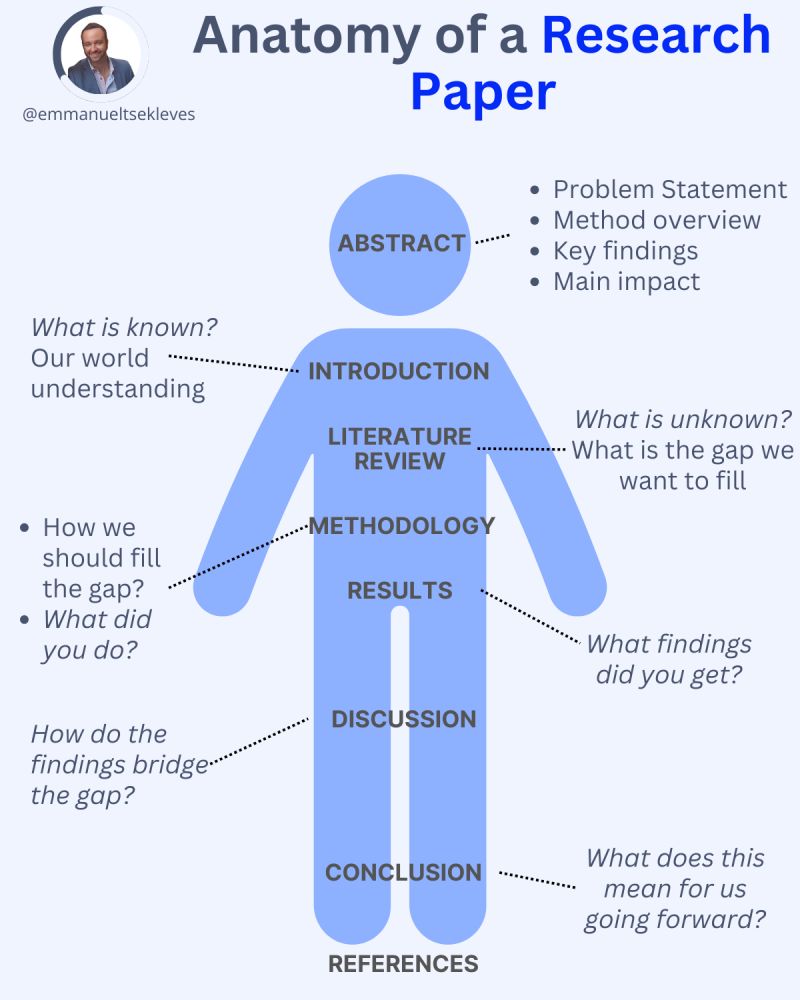

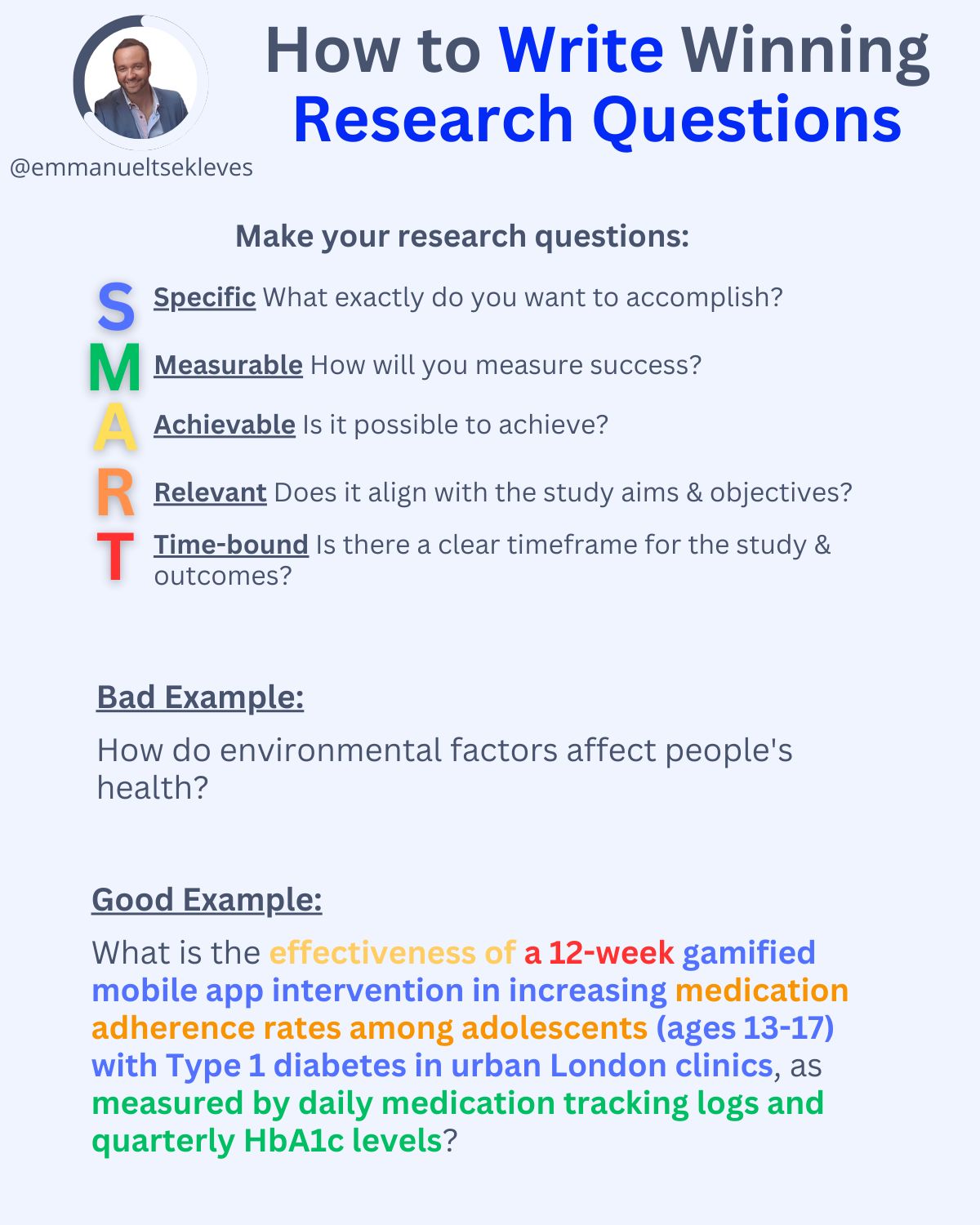

Emmanuel Tsekleves – Writing Research Papers

Here’s the journey of crafting a compelling paper:

1. ABSTRACT

This is your elevator pitch.

Give a methodology overview.

Paint the problem you’re solving.

Highlight key findings and their impact.

2. INTRODUCTION

Start with what we know.

Set the stage for our current understanding.

Hook your reader with the relevance of your work.

3. LITERATURE REVIEW

Identify what’s unknown.

Spot the gaps in current knowledge.

Your job in the next sections is to fill this gap.

4. METHODOLOGY

What did you do?

Outline how you’ll fill that gap.

Be transparent about your approach.

Make it reproducible so others can follow.

5. RESULTS

Let the data speak for itself.

Present your findings clearly.

Keep it concise and focused.

6. DISCUSSION

Now, connect the dots.

Discuss implications and significance.

How do your findings bridge the knowledge gap?

7. CONCLUSION

Wrap it up with future directions.

What does this mean for us moving forward?

Leave the reader with a call to action or reflection.

8. REFERENCES

Acknowledge the giants whose shoulders you stand on.

A robust reference list shows the depth of your research.

-

Zach Goldberg – The Startup CTO Handbook

https://github.com/ZachGoldberg/Startup-CTO-Handbook

You can buy the book on Amazon or Audible.

Hi, thanks for checking out the Startup CTO’s Handbook! This repository has the latest version of the content of the book. You’re welcome and encouraged to contribute issues or pull requests for additions / changes / suggestions / criticisms to be included in future editions. Please feel free to add your name to ACKNOWLEDGEMENTS if you do so.

-

Elon Musk finally admits Tesla’s HW3 might not support full self-driving

When asked whether Tesla will achieve its promised unsupervised self-driving on HW3 vehicles, the CEO said:

We are not 100% sure. HW4 has several times the capability of HW3. It’s easier to get things to work on HW4 and it takes a lot of efforts to squeeze that into HW3. There is some chance that HW3 does not achieve the safety level that allows for unsupervised FSD.

-

Linus Torvalds on GenAI

Linus Torvalds, the creator and maintainer of the Linux kernel, talks about recent developments in generative AI.

-

Managers’ Guide to Effective Annual Feedback

https://peterszasz.com/engineering-managers-guide-to-effective-annual-feedback

The main goals of a regular, written feedback cycle are:

- Recognition, support for self-reflection and personal growth

- Alignment with team- and company needs

- Documentation

These promote:

- Recognize Achievements: Use the feedback process to boost morale and support self-reflection.

- Align Goals: Ensure individual contributions match company objectives.

- Document Progress: Keep a clear record of performance for future decisions.

- Prepare Feedback: Gather 360-degree feedback, focus on examples, and anticipate reactions.

- Strength-Based Approach: Focus on enhancing strengths over fixing weaknesses.

- Deliver Feedback Live: Engage in discussion before providing written feedback.

- Follow-Up: Use feedback to guide future goals and performance improvement.

-

Ben Gunsberger – AI-generated podcast about AI using Google NotebookLM

Listen to the podcast in the post.

“I just created an AI-generated podcast by feeding an article I wrote into Google’s NotebookLM. If I hadn’t made it myself, I would have been 100% fooled into thinking it was real people talking.”

-

Sam Altman – The Intelligence Age

In the next couple of decades, we will be able to do things that would have seemed like magic to our grandparents.

This phenomenon is not new, but it will be newly accelerated. People have become dramatically more capable over time; we can already accomplish things now that our predecessors would have believed to be impossible.

We are more capable not because of genetic change, but because we benefit from the infrastructure of society being way smarter and more capable than any one of us; in an important sense, society itself is a form of advanced intelligence. Our grandparents – and the generations that came before them – built and achieved great things. They contributed to the scaffolding of human progress that we all benefit from. AI will give people tools to solve hard problems and help us add new struts to that scaffolding that we couldn’t have figured out on our own. The story of progress will continue, and our children will be able to do things we can’t.

-

The Perils of Technical Debt – Understanding Its Impact on Security, Usability, and Stability

In software development, “technical debt” is a term used to describe the accumulation of shortcuts, suboptimal solutions, and outdated code that occur as developers rush to meet deadlines or prioritize immediate goals over long-term maintainability. While this concept initially seems abstract, its consequences are concrete and can significantly affect the security, usability, and stability of software systems.

The Nature of Technical Debt

Technical debt arises when software engineers choose a less-than-ideal implementation in the interest of saving time or reducing upfront effort. Much like financial debt, these decisions come with an interest rate: over time, the cost of maintaining and updating the system increases, and more effort is required to fix problems that stem from earlier choices. In extreme cases, technical debt can slow development to a crawl, causing future updates or improvements to become far more difficult than they would have been with cleaner, more scalable code.
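
The interest-rate analogy can be made concrete with a toy model. The Python sketch below assumes, purely for illustration, that the effort spent working around a shortcut grows by a fixed percentage each period; real debt rarely compounds this neatly, and the figures in the usage lines are invented. `break_even_period` reports when the accumulated carrying cost first reaches the price of a one-off refactor.

```python
from typing import Optional


def carrying_costs(base_cost: float, interest_rate: float, periods: int) -> list[float]:
    """Per-period cost of living with a shortcut that compounds like interest.

    Toy assumption: each period the workaround effort grows by interest_rate
    (0.10 means 10% more effort than the previous period).
    """
    costs = []
    cost = base_cost
    for _ in range(periods):
        costs.append(cost)
        cost *= 1 + interest_rate
    return costs


def break_even_period(base_cost: float, interest_rate: float,
                      refactor_cost: float, max_periods: int = 1000) -> Optional[int]:
    """First period (1-based) at which cumulative carrying cost >= refactor_cost."""
    total, cost = 0.0, base_cost
    for period in range(1, max_periods + 1):
        total += cost
        if total >= refactor_cost:
            return period
        cost *= 1 + interest_rate
    return None  # under this model the refactor never pays off within max_periods


# Invented numbers: 10 hours of workaround per sprint, growing 10% per sprint,
# compared with a 40-hour refactor.
print(carrying_costs(10, 0.10, 4))
print(break_even_period(10, 0.10, 40))  # 4: the refactor pays for itself by sprint 4
```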

Impact on Security

One of the most significant threats posed by technical debt is the vulnerability it creates in terms of software security. Outdated code often lacks the latest security patches or is built on legacy systems that are no longer supported. Attackers can exploit these weaknesses, leading to data breaches, ransomware, or other forms of cybercrime. Furthermore, as systems grow more complex and the debt compounds, identifying and fixing vulnerabilities becomes increasingly challenging. Failing to address technical debt leaves an organization exposed to security risks that may only become apparent after a costly incident.
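
A first, mechanical defence against outdated code that is missing security patches is to compare what is installed against the minimum patched versions named in the advisories you track. The Python sketch below assumes plain dotted-integer versions (no `rc` or `post` suffixes; a library such as `packaging` handles the full version grammar) and uses made-up package names. In a real pipeline the minimum versions would come from a vulnerability feed or dependency scanner rather than a hand-written dict.

```python
def parse_version(text: str) -> tuple[int, ...]:
    """'1.2.10' -> (1, 2, 10). Assumes dotted integers only."""
    return tuple(int(part) for part in text.strip().split("."))


def is_older(installed: str, required: str) -> bool:
    """True if installed < required, padding missing parts with 0 (1.2 == 1.2.0)."""
    a, b = parse_version(installed), parse_version(required)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a < b


def outdated(installed: dict[str, str], minimum_patched: dict[str, str]) -> list[str]:
    """List installed packages that sit below their minimum patched version."""
    report = []
    for name, floor in sorted(minimum_patched.items()):
        have = installed.get(name)
        if have is not None and is_older(have, floor):
            report.append(f"{name} {have} < {floor}")
    return report


# Hypothetical packages and versions, for illustration only.
installed_versions = {"imagelib": "2.3.9", "netclient": "4.1"}
advisories = {"imagelib": "2.3.10", "netclient": "4.0.2", "zipkit": "1.0"}
print(outdated(installed_versions, advisories))  # ['imagelib 2.3.9 < 2.3.10']
```

Padding before comparing avoids a subtle trap: compared as raw tuples, `(1, 2)` sorts below `(1, 2, 0)`, which would flag an up-to-date package as vulnerable.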

Impact on Usability

Technical debt also affects the user experience. Systems burdened by outdated code often become clunky and slow, leading to poor usability. Engineers may find themselves continuously patching minor issues rather than implementing larger, user-centric improvements. Over time, this results in a product that feels antiquated, is difficult to use, or lacks modern functionality. In a competitive market, poor usability can alienate users, causing a loss of confidence and driving them to alternative products or services.

Impact on Stability

Stability is another critical area impacted by technical debt. As developers add features or make updates to systems weighed down by previous quick fixes, they run the risk of introducing bugs or causing system crashes. The tangled, fragile nature of code laden with technical debt makes troubleshooting difficult and increases the likelihood of cascading failures. Over time, instability in the software can erode both the trust of users and the efficiency of the development team, as more resources are dedicated to resolving recurring issues rather than innovating or expanding the system’s capabilities.

The Long-Term Costs of Ignoring Technical Debt

While technical debt can provide short-term gains by speeding up initial development, the long-term costs are much higher. Unaddressed technical debt can lead to project delays, escalating maintenance costs, and an ever-widening gap between current code and modern best practices. The more technical debt accumulates, the harder and more expensive it becomes to address. For many companies, failing to pay down this debt eventually results in a critical juncture: either invest heavily in refactoring the codebase or face an expensive overhaul to rebuild from the ground up.

Conclusion

Technical debt is an unavoidable aspect of software development, but understanding its perils is essential for minimizing its impact on security, usability, and stability. By actively managing technical debt—whether through regular refactoring, code audits, or simply prioritizing long-term quality over short-term expedience—organizations can avoid the most dangerous consequences and ensure their software remains robust and reliable in an ever-changing technological landscape.
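
One lightweight way to make code audits routine is to count the debt markers developers already leave behind and watch the trend between releases. The Python sketch below assumes your team tags shortcuts with `# TODO`, `# FIXME`, `# HACK` or `# XXX` comments (a common convention, not a standard) and only recognises `#`-style comments; it measures acknowledged debt, not all debt.

```python
import re
from collections import Counter
from pathlib import Path

# Matches '# TODO', '#FIXME:', '# HACK ...'; case-sensitive by design.
MARKER = re.compile(r"#\s*(TODO|FIXME|HACK|XXX)\b")


def count_markers(source: str) -> Counter:
    """Count debt markers in one file's text."""
    return Counter(match.group(1) for match in MARKER.finditer(source))


def debt_report(root: str, pattern: str = "*.py") -> Counter:
    """Sum marker counts over every file under root that matches pattern."""
    total: Counter = Counter()
    for path in Path(root).rglob(pattern):
        total.update(count_markers(path.read_text(encoding="utf-8", errors="replace")))
    return total
```

Recording `debt_report("src")` (a hypothetical source folder) on each merge gives a rough trend line; a count that keeps climbing is a cue to schedule refactoring before the codebase reaches the "rebuild from the ground up" juncture.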

-

The riddles humans can solve but AI computers cannot

https://www.bbc.com/future/article/20240912-what-riddles-teach-us-about-the-human-mind

“As human beings, it’s very easy for us to have common sense, and apply it at the right time and adapt it to new problems,” says Ilievski, who describes his branch of computer science as “common sense AI”. But right now, AI has a “general lack of grounding in the world”, which makes that kind of basic, flexible reasoning a struggle.

AI excels at pattern recognition, “but it tends to be worse than humans at questions that require more abstract thinking”, says Xaq Pitkow, an associate professor at Carnegie Mellon University in the US, who studies the intersection of AI and neuroscience. In many cases, though, it depends on the problem.

A bizarre truth about AI is that we have no idea how it works. The same is true about the brain.

That’s why the best systems may come from a combination of AI and human work; we can play to the machine’s strengths, Ilievski says.

-

Clint Eastwood on the set of his latest movie

At the age of 94, this is what the great Clint Eastwood looks like.

Standing, lucid, brilliant, directing his latest film. Eastwood himself says it: “I don’t let the old man in. I keep myself busy. You have to stay active, alive, happy, strong, capable. I don’t let in the old critic, hostile, envious, gossiping, full of rage and complaints, of lack of courage, which denies to itself that old age can be creative, decisive, full of light and projection. Getting older is not for sissies.”

~Clint Eastwood

-

How to Lead Your Team when the House Is on Fire

https://peterszasz.com/how-to-lead-your-team-when-the-house-is-on-fire/

The three focus areas of an Engineering Manager

- Ensuring delivery that’s aligned with company goals;

- Building and sustaining a high-performing engineering team;

- Supporting the success and personal growth of the individuals on the team.

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.