LATEST POSTS

-

Lumotive Light Control Metasurface – This Tiny Chip Replaces Bulky Optics & Mechanical Mirrors

Programmable Optics for LiDAR and 3D Sensing: How Lumotive’s LCM is Changing the Game

For decades, LiDAR and 3D sensing systems have relied on mechanical mirrors and bulky optics to direct light and measure distance. But at CES 2025, Lumotive unveiled a breakthrough—a semiconductor-based programmable optic that removes the need for moving parts altogether.

The Problem with Traditional LiDAR and Optical Systems

LiDAR and 3D sensing systems work by sending out light and measuring when it returns, creating a precise depth map of the environment. However, traditional systems have relied on physically moving mirrors and lenses, which introduce several limitations:

- Size and weight – Bulky components make integration difficult.

- Complexity – Mechanical parts are prone to failure and expensive to produce.

- Speed limitations – Physical movement slows down scanning and responsiveness.

To bring high-resolution depth sensing to wearables, smart devices, and autonomous systems, a new approach is needed.
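The time-of-flight principle described above (send out light, measure when it returns) reduces to a single formula: distance is the speed of light times the round-trip time, divided by two. The sketch below is only an illustration of that arithmetic; the function names are hypothetical and it is not based on any Lumotive software.

```python
# Time-of-flight arithmetic: light travels to the target and back,
# so the one-way distance is half of (speed of light * round-trip time).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by definition of the metre


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance in metres for a measured round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_for_distance(distance_m: float) -> float:
    """Return the round-trip time in seconds for a target at distance_m."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    t = round_trip_for_distance(10.0)
    print(f"10 m target: round trip of {t * 1e9:.1f} ns")
    print(f"1 ns round trip: {distance_from_round_trip(1e-9) * 100:.1f} cm")
```

A target 10 m away returns its echo in roughly 66.7 ns, which is why depth resolution in such systems depends on very precise timing.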

Enter the Light Control Metasurface (LCM)

Lumotive’s Light Control Metasurface (LCM) replaces mechanical mirrors with a semiconductor-based optical chip. This allows LiDAR and 3D sensing systems to steer light electronically, just like a processor manages data. The advantages are game-changing:

- No moving parts – Increased durability and reliability

- Ultra-compact form factor – Fits into small devices and wearables

- Real-time reconfigurability – Optics can adapt instantly to changing environments

- Energy-efficient scanning – Focuses on relevant areas, saving power

How Does it Work?

LCM technology works by controlling how light is directed using programmable metasurfaces. Unlike traditional optics that require physical movement, Lumotive’s approach enables light to be redirected with software-controlled precision.

This means:

- No mechanical delays – Everything happens at electronic speeds.

- AI-enhanced tracking – The sensor can focus only on relevant objects.

- Scalability – The same technology can be adapted for industrial, automotive, AR/VR, and smart city applications.

Live Demo: Real-Time 3D Sensing

At CES 2025, Lumotive showcased how their LCM-enabled sensor can scan a room in real time, creating an instant 3D point cloud. Unlike traditional LiDAR, which has a fixed scan pattern, this system can dynamically adjust to track people, objects, and even gestures on the fly.

This is a huge leap forward for AI-powered perception systems, allowing cameras and sensors to interpret their environment more intelligently than ever before.

Who Needs This Technology?

Lumotive’s programmable optics have the potential to disrupt multiple industries, including:

- Automotive – Advanced LiDAR for autonomous vehicles

- Industrial automation – Precision 3D scanning for robotics and smart factories

- Smart cities – Real-time monitoring of public spaces

- AR/VR/XR – Depth-aware tracking for immersive experiences

The Future of 3D Sensing Starts Here

Lumotive’s Light Control Metasurface represents a fundamental shift in how we think about optics and 3D sensing. By bringing programmability to light steering, it opens up new possibilities for faster, smarter, and more efficient depth-sensing technologies.

With traditional LiDAR now facing a serious challenge, the question is: Who will be the first to integrate programmable optics into their designs?

-

ComfyDock – The Easiest (Free) Way to Safely Run ComfyUI Sessions in a Boxed Container

https://www.reddit.com/r/comfyui/comments/1j2x4qv/comfydock_the_easiest_free_way_to_run_comfyui_in/

ComfyDock is a tool that allows you to easily manage your ComfyUI environments via Docker.

Common Challenges with ComfyUI

- Custom Node Installation Issues: Installing new custom nodes can inadvertently change settings across the whole installation, potentially breaking the environment.

- Workflow Compatibility: Workflows are often tested with specific custom nodes and ComfyUI versions. Running these workflows on different setups can lead to errors and frustration.

- Security Risks: Installing custom nodes directly on your host machine increases the risk of malicious code execution.

How ComfyDock Helps

- Environment Duplication: Easily duplicate your current environment before installing custom nodes. If something breaks, revert to the original environment effortlessly.

- Deployment and Sharing: Workflow developers can commit their environments to a Docker image, which can be shared with others and run on cloud GPUs to ensure compatibility.

- Enhanced Security: Containers help to isolate the environment, reducing the risk of malicious code impacting your host machine.
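The duplicate-then-experiment idea above can be sketched with the plain Docker CLI. This is not ComfyDock's own interface, which is not shown here; the container and image names are hypothetical, and port 8188 is assumed to be the port ComfyUI listens on inside the container. The helpers only build the commands so they can be inspected before anything is run.

```python
import shlex


def snapshot_command(container: str, image_tag: str) -> list[str]:
    """Build a `docker commit` command that saves a container's state as an image."""
    return ["docker", "commit", container, image_tag]


def run_command(image_tag: str, host_port: int = 8188) -> list[str]:
    """Build a `docker run` command that starts a throwaway container from an image.

    Assumes the service inside the container listens on port 8188.
    """
    return ["docker", "run", "--rm", "-it", "-p", f"{host_port}:8188", image_tag]


if __name__ == "__main__":
    # Hypothetical names: snapshot the working environment before adding nodes.
    print(shlex.join(snapshot_command("comfy-env", "comfy-env:before-nodes")))
    # If the new custom nodes break things, start again from the snapshot.
    print(shlex.join(run_command("comfy-env:before-nodes")))
```

Passing the returned lists to `subprocess.run` would execute them; printing first keeps the sketch safe to try on a machine without Docker.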

-

Why the Solar Maximum means peak Northern Lights in 2025

https://northernlightscanada.com/explore/solar-maximum

Roughly every 11 years the Sun’s magnetic poles flip. Leading up to this event, there is a period of increased solar activity, from sunspots and solar flares to spectacular northern and southern lights. The current solar cycle began in 2019 and scientists predict it will peak sometime in 2024 or 2025 before the Sun returns to a lower level of activity in the early 2030s.

The most dramatic events produced by the solar photosphere (the “surface” of the Sun) are coronal mass ejections. When these occur and solar particles get spewed out into space, they can wash over the Earth and interact with our magnetic field. This interaction funnels the charged particles towards Earth’s own North and South magnetic poles — where the particles interact with molecules in Earth’s ionosphere and cause them to fluoresce — phenomena known as aurora borealis (northern lights) and aurora australis (southern lights).

In 2019, it was predicted that the solar maximum would likely occur sometime around July 2025. However, Nature does not have to conform with our predictions, and seems to be giving us the maximum earlier than expected.

Very strong solar activity — especially the coronal mass ejections — can indeed wreak some havoc on our satellite and communication electronics. Most often, it is fairly minor — we get what is known as a “radio blackout” that interferes with some of our radio communications. Once in a while, though, a major solar event occurs. The last of these was in 1859 in what is now known as the Carrington Event, which knocked out telegraph communications across Europe and North America. Should a similar solar storm happen today it would be fairly devastating, affecting major aspects of our infrastructure including our power grid and, (gasp), the internet itself.

-

Mike Seymour – Amid Industry Collapses, with guest panelist Scott Ross (ex ILM and DD)

Beyond Technicolor’s specific challenges, the broader VFX industry continues to grapple with systemic issues, including cost-cutting pressures, exploitative working conditions, and an unsustainable business model. VFX houses often operate on razor-thin margins, competing in a race to the bottom due to studios’ demand for cheaper and faster work. This results in a cycle of overwork, burnout, and, in many cases, eventual bankruptcy, as seen with Rhythm & Hues in 2013 and now at Technicolor. The reliance on tax incentives and outsourcing further complicates matters, making VFX work highly unstable. With major vendors collapsing and industry workers facing continued uncertainty, many are calling for structural changes, including better contracts, collective bargaining, and a more sustainable production pipeline. Without meaningful reform, the industry risks seeing more historic names disappear and countless skilled artists move to other fields.

-

Niels Cautaerts – Python dependency management is a dumpster fire

https://nielscautaerts.xyz/python-dependency-management-is-a-dumpster-fire.html

For many modern programming languages, the associated tooling has the lock-file based dependency management mechanism baked in. For a great example, consider Rust’s Cargo.

Not so with Python.

The default package manager for Python is pip. The default instruction to install a package is to run pip install package. Unfortunately, this imperative approach for creating your environment is entirely divorced from the versioning of your code. You very quickly end up in a situation where you have hundreds of packages installed. You no longer know which packages you explicitly asked to install, and which packages got installed because they were a transitive dependency. You no longer know which version of the code worked in which environment, and there is no way to roll back to an earlier version of your environment. Installing any new package could break your environment.
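To make the "explicit versus transitive" problem concrete, here is a minimal sketch that guesses which installed packages are top-level by checking which ones no other installed package requires. It uses the standard library's `importlib.metadata`; the helper names are hypothetical, and the result is only a heuristic: a package you installed on purpose that also happens to be a dependency of something else will be reported as transitive, and optional (extra) requirements are counted like any other.

```python
import re
from importlib import metadata


def normalize(name: str) -> str:
    """Normalize a project name so 'Foo_Bar' and 'foo-bar' compare equal."""
    return re.sub(r"[-_.]+", "-", name).lower()


def requirement_name(requirement: str) -> str:
    """Extract the project name from a requirement string such as 'idna>=2.5'."""
    match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", requirement.strip())
    return normalize(match.group(0)) if match else ""


def installed_requires_map() -> dict[str, set[str]]:
    """Map each installed distribution to the names it declares as requirements."""
    graph: dict[str, set[str]] = {}
    for dist in metadata.distributions():
        name = dist.metadata["Name"]
        if name:
            graph[normalize(name)] = {requirement_name(r) for r in (dist.requires or [])}
    return graph


def split_top_level_and_transitive(graph: dict[str, set[str]]) -> tuple[list[str], list[str]]:
    """Split installed packages into those nothing depends on and the rest."""
    required_by_something = set().union(*graph.values())
    top_level = sorted(name for name in graph if name not in required_by_something)
    transitive = sorted(name for name in graph if name in required_by_something)
    return top_level, transitive


if __name__ == "__main__":
    top_level, transitive = split_top_level_and_transitive(installed_requires_map())
    print(f"{len(top_level)} packages that nothing else requires")
    print(f"{len(transitive)} packages pulled in by at least one other package")
```

A lock file records this distinction up front, which is why it does not have to be reverse-engineered from the environment like this.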

…

-

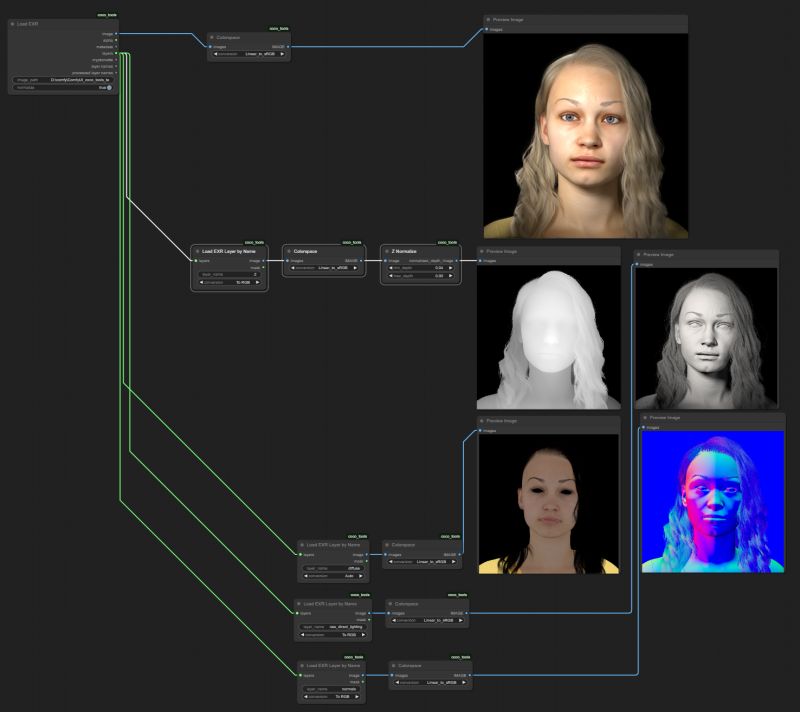

Meta Avat3r – Large Animatable Gaussian Reconstruction Model for High-fidelity 3D Head Avatars

https://tobias-kirschstein.github.io/avat3r

Avat3r takes 4 input images of a person’s face and generates an animatable 3D head avatar in a single forward pass. The resulting 3D head representation can be animated at interactive rates. The entire creation process of the 3D avatar, from taking 4 smartphone pictures to the final result, can be executed within minutes.

https://www.uploadvr.com/meta-researchers-generate-photorealistic-avatars-from-just-four-selfies

-

Shadow of Mordor’s brilliant Nemesis system is locked away by a Warner Bros patent until 2036, despite studio shutdown

The Nemesis system, for those unfamiliar, is a clever in-game mechanic which tracks a player’s actions to create enemies that feel capable of remembering past encounters. In the studio’s Middle-earth games, this allowed foes to rise through the ranks and enact revenge.

The patent itself – which you can view here – was originally filed back in 2016, before it was granted in 2021. It is dubbed “Nemesis characters, nemesis forts, social vendettas and followers in computer games”. As it stands, the patent has an expiration date of 11th August, 2036.

-

Crypto Mining Attack via ComfyUI/Ultralytics in 2024

https://github.com/ultralytics/ultralytics/issues/18037

zopieux on Dec 5, 2024 : Ultralytics was attacked (or did it on purpose, waiting for a post mortem there), 8.3.41 contains nefarious code downloading and running a crypto miner hosted as a GitHub blob.
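A quick way to check whether an environment has the release named in that report is to compare the installed version against it. The sketch below uses the standard library's `importlib.metadata`; the set of flagged versions contains only 8.3.41 because that is the only version mentioned above, so treat it as an assumption to update from the project's own advisory rather than a complete list.

```python
from importlib import metadata
from typing import Optional

# Only the release named in the issue quoted above; not a complete advisory.
FLAGGED_VERSIONS = {"8.3.41"}


def is_flagged(version: str) -> bool:
    """Return True if the given version string is in the flagged set."""
    return version in FLAGGED_VERSIONS


def installed_version(package: str = "ultralytics") -> Optional[str]:
    """Return the installed version of a package, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_version()
    if version is None:
        print("ultralytics is not installed in this environment")
    elif is_flagged(version):
        print(f"ultralytics {version} is a flagged release")
    else:
        print(f"ultralytics {version} is not in the flagged set")
```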

FEATURED POSTS

-

Google – Artificial Intelligence free courses

1. Introduction to Large Language Models: Learn about the use cases and how to enhance the performance of large language models.

https://www.cloudskillsboost.google/course_templates/539

2. Introduction to Generative AI: Discover the differences between Generative AI and traditional machine learning methods.

https://www.cloudskillsboost.google/course_templates/536

3. Generative AI Fundamentals: Earn a skill badge by demonstrating your understanding of foundational concepts in Generative AI.

https://www.cloudskillsboost.google/paths

4. Introduction to Responsible AI: Learn about the importance of Responsible AI and how Google implements it in its products.

https://www.cloudskillsboost.google/course_templates/554

5. Encoder-Decoder Architecture: Learn about the encoder-decoder architecture, a critical component of machine learning for sequence-to-sequence tasks.

https://www.cloudskillsboost.google/course_templates/543

6. Introduction to Image Generation: Discover diffusion models, a promising family of machine learning models in the image generation space.

https://www.cloudskillsboost.google/course_templates/541

7. Transformer Models and BERT Model: Get a comprehensive introduction to the Transformer architecture and the Bidirectional Encoder Representations from the Transformers (BERT) model.

https://www.cloudskillsboost.google/course_templates/538

8. Attention Mechanism: Learn about the attention mechanism, which allows neural networks to focus on specific parts of an input sequence.

https://www.cloudskillsboost.google/course_templates/537

-

RawTherapee – a free, open source, cross-platform raw image and HDRi processing program

Version 5.10 of this tool includes excellent tools to clean up CR2 and CR3 files used on set to support HDRI processing.

It can convert raw files to ACEScg 32-bit TIFFs with metadata.

-

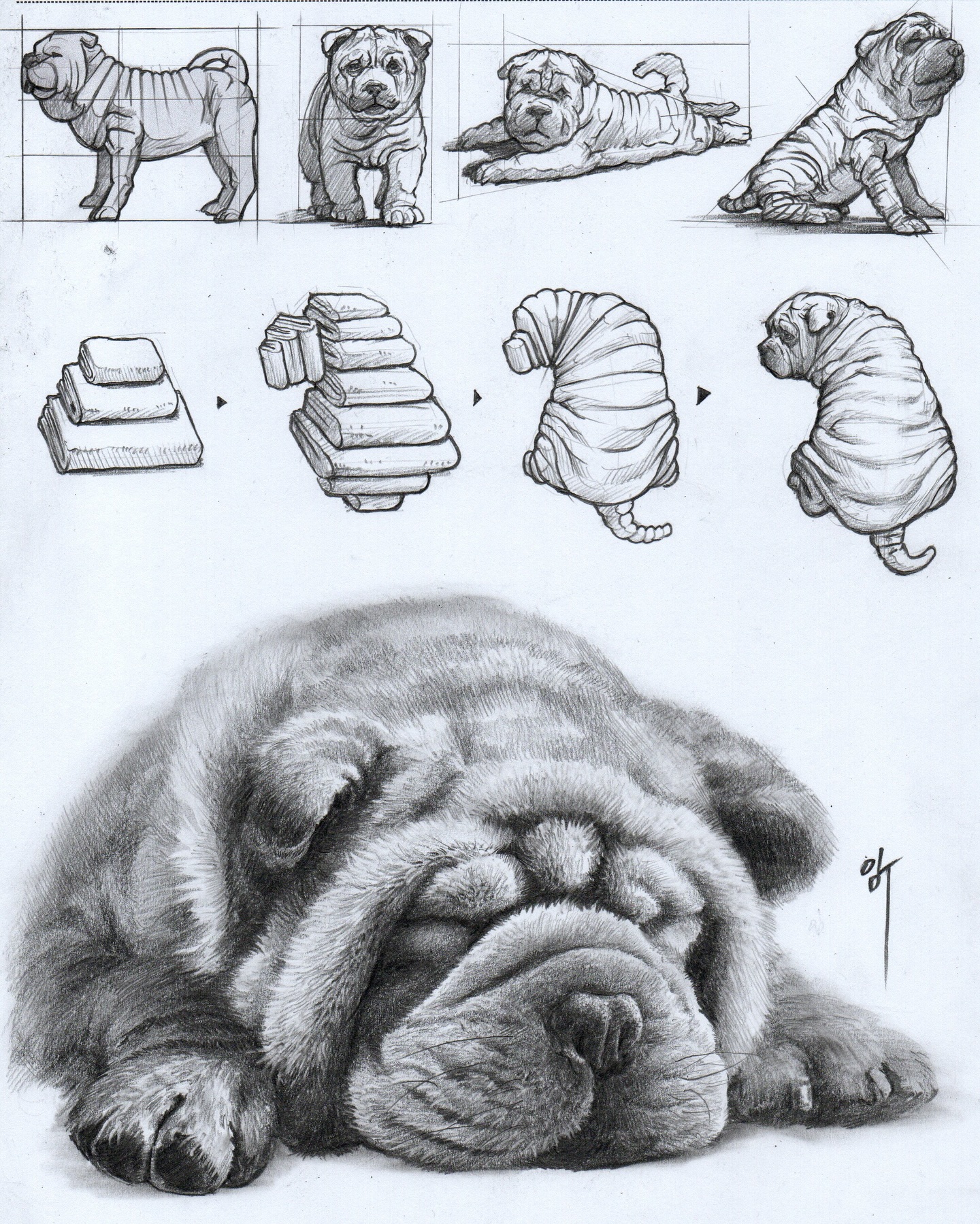

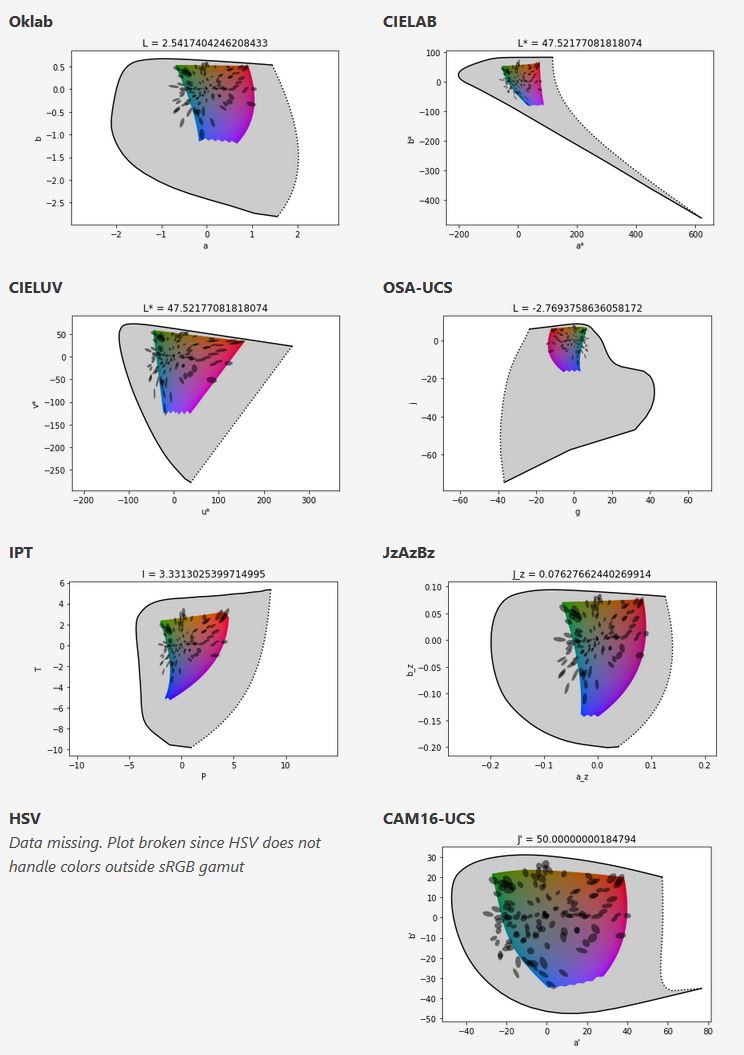

Björn Ottosson – OKHSV and OKHSL – Two new color spaces for color picking

https://bottosson.github.io/misc/colorpicker

https://bottosson.github.io/posts/colorpicker/

https://www.smashingmagazine.com/2024/10/interview-bjorn-ottosson-creator-oklab-color-space/

One problem with sRGB is that in a gradient between blue and white, it becomes a bit purple in the middle of the transition. That’s because sRGB really isn’t created to mimic how the eye sees colors; rather, it is based on how CRT monitors work. That means it works with certain frequencies of red, green, and blue, and also the non-linear coding called gamma. It’s a miracle it works as well as it does, but it’s not connected to color perception. When using those tools, you sometimes get surprising results, like purple in the gradient.

There were also attempts to create simple models matching human perception based on XYZ, but as it turned out, it’s not possible to model all color vision that way. Perception of color is incredibly complex and depends, among other things, on whether it is dark or light in the room and the background color it is against. When you look at a photograph, it also depends on what you think the color of the light source is. The dress is a typical example of color vision being very context-dependent. It is almost impossible to model this perfectly.

I based Oklab on two other color spaces, CIECAM16 and IPT. I used the lightness and saturation prediction from CIECAM16, which is a color appearance model, as a target. I actually wanted to use the datasets used to create CIECAM16, but I couldn’t find them.

IPT was designed to have better hue uniformity. In experiments, they asked people to match light and dark colors, saturated and unsaturated colors, which resulted in a dataset for which colors, subjectively, have the same hue. IPT has a few other issues but is the basis for hue in Oklab.
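The blue-to-white gradient problem mentioned above can be checked numerically. The sketch below converts sRGB to Oklab using the matrices from Ottosson's published Oklab reference, then compares the hue of the midpoint when blending directly on sRGB values against blending in Oklab. The helper names are hypothetical, and the code is an illustration rather than a reference implementation.

```python
import math


def srgb_to_linear(c: float) -> float:
    """Undo the sRGB transfer function (gamma) for one channel in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c: float) -> float:
    """Apply the sRGB transfer function to one linear channel."""
    return 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055


def _cbrt(x: float) -> float:
    """Cube root that also handles negative inputs."""
    return math.copysign(abs(x) ** (1.0 / 3.0), x)


def srgb_to_oklab(rgb):
    r, g, b = (srgb_to_linear(c) for c in rgb)
    l = _cbrt(0.4122214708 * r + 0.5363325363 * g + 0.0514459929 * b)
    m = _cbrt(0.2119034982 * r + 0.6806995451 * g + 0.1073969566 * b)
    s = _cbrt(0.0883024619 * r + 0.2817188376 * g + 0.6299787005 * b)
    return (
        0.2104542553 * l + 0.7936177850 * m - 0.0040720468 * s,
        1.9779984951 * l - 2.4285922050 * m + 0.4505937099 * s,
        0.0259040371 * l + 0.7827717662 * m - 0.8086757660 * s,
    )


def oklab_to_srgb(lab):
    L, a, b = lab
    l = (L + 0.3963377774 * a + 0.2158037573 * b) ** 3
    m = (L - 0.1055613458 * a - 0.0638541728 * b) ** 3
    s = (L - 0.0894841775 * a - 1.2914855480 * b) ** 3
    linear = (
        4.0767416621 * l - 3.3077115913 * m + 0.2309699292 * s,
        -1.2684380046 * l + 2.6097574011 * m - 0.3413193965 * s,
        -0.0041960863 * l - 0.7034186147 * m + 1.7076147010 * s,
    )
    return tuple(linear_to_srgb(c) for c in linear)


def mix(x, y, t: float):
    """Linearly interpolate two triples component by component."""
    return tuple((1 - t) * p + t * q for p, q in zip(x, y))


def oklab_hue_degrees(rgb) -> float:
    """Hue angle of an sRGB color, measured in the Oklab a/b plane."""
    _, a, b = srgb_to_oklab(rgb)
    return math.degrees(math.atan2(b, a)) % 360.0


BLUE, WHITE = (0.0, 0.0, 1.0), (1.0, 1.0, 1.0)
naive_mid = mix(BLUE, WHITE, 0.5)  # blend the encoded sRGB values directly
oklab_mid = oklab_to_srgb(mix(srgb_to_oklab(BLUE), srgb_to_oklab(WHITE), 0.5))

if __name__ == "__main__":
    print("blue hue:      ", round(oklab_hue_degrees(BLUE), 1))
    print("sRGB midpoint: ", round(oklab_hue_degrees(naive_mid), 1))
    print("Oklab midpoint:", round(oklab_hue_degrees(oklab_mid), 1))
```

The Oklab midpoint keeps the hue of the original blue, while the direct sRGB midpoint lands at a noticeably different hue angle, which is the purple shift the interview describes.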

In the Munsell color system, colors are described with three parameters, designed to match the perceived appearance of colors: Hue, Chroma and Value. The parameters are designed to be independent and each have a uniform scale. This results in a color solid with an irregular shape. Modern color spaces and models, such as CIELAB, CAM16 and Björn Ottosson’s own Oklab, are very similar in their construction.

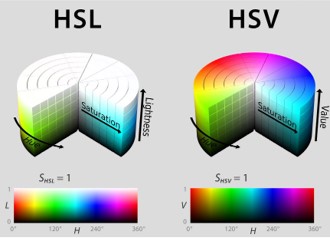

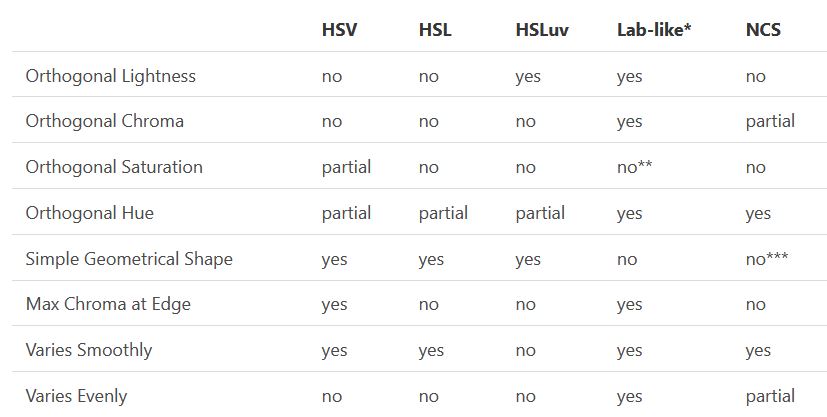

By far the most used color spaces today for color picking are HSL and HSV, two representations introduced in the classic 1978 paper “Color Spaces for Computer Graphics”. HSL and HSV are designed to roughly correlate with perceptual color properties while being very simple and cheap to compute.

Today HSL and HSV are most commonly used together with the sRGB color space.

One of the main advantages of HSL and HSV over the different Lab color spaces is that they map the sRGB gamut to a cylinder. This makes them easy to use since all parameters can be changed independently, without the risk of creating colors outside of the target gamut.
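The cylinder property described above is easy to verify with Python's standard `colorsys` module: every combination of hue, saturation and value (or lightness) in the unit range converts to an RGB triple that stays inside the unit cube. The helper names below are hypothetical, and the check samples a grid rather than proving the property.

```python
import colorsys


def in_gamut(rgb, eps: float = 1e-9) -> bool:
    """True if every channel lies in [0, 1], allowing for rounding error."""
    return all(-eps <= c <= 1.0 + eps for c in rgb)


def all_in_gamut(steps: int = 10) -> bool:
    """Sample the HSV and HSL cylinders on a grid and check each RGB result."""
    grid = [i / steps for i in range(steps + 1)]
    for h in grid:
        for s in grid:
            for x in grid:  # x plays the role of value (HSV) or lightness (HSL)
                if not in_gamut(colorsys.hsv_to_rgb(h, s, x)):
                    return False
                # Note the argument order: colorsys uses H, L, S.
                if not in_gamut(colorsys.hls_to_rgb(h, x, s)):
                    return False
    return True


if __name__ == "__main__":
    print("every sampled HSV/HSL value is a valid RGB color:", all_in_gamut())
```

This is what makes three independent sliders safe in a color picker: no slider position can produce a color the display cannot show.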

The main drawback on the other hand is that their properties don’t match human perception particularly well.
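One way to see this mismatch: HSL gives fully saturated yellow and fully saturated blue the same lightness of 0.5, yet their relative luminance differs by more than a factor of ten. The sketch below uses `colorsys` and the Rec. 709 luminance weights that sRGB shares; relative luminance is used here as a simple stand-in for perceived brightness, which is an assumption of this example and not the lightness estimate the original post develops.

```python
import colorsys


def srgb_to_linear(c: float) -> float:
    """Undo the sRGB transfer function for one channel in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb) -> float:
    """Relative luminance of an sRGB color using the Rec. 709 weights."""
    r, g, b = (srgb_to_linear(c) for c in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


# Same HSL lightness (0.5) and saturation (1.0), different hues.
# colorsys takes arguments in H, L, S order.
yellow = colorsys.hls_to_rgb(1 / 6, 0.5, 1.0)
blue = colorsys.hls_to_rgb(2 / 3, 0.5, 1.0)

if __name__ == "__main__":
    print("yellow luminance:", round(relative_luminance(yellow), 4))
    print("blue luminance:  ", round(relative_luminance(blue), 4))
```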

Reconciling these conflicting goals perfectly isn’t possible, but given that HSV and HSL don’t use anything derived from experiments relating to human perception, creating something that makes a better tradeoff does not seem unreasonable.

With this new lightness estimate, we are ready to look into the construction of Okhsv and Okhsl.