LATEST POSTS

-

Bloomberg – Sam Altman on ChatGPT’s First Two Years, Elon Musk and AI Under Trump

https://www.bloomberg.com/features/2025-sam-altman-interview

One of the strengths of that original OpenAI group was recruiting. Somehow you managed to corner the market on a ton of the top AI research talent, often with much less money to offer than your competitors. What was the pitch?

The pitch was just come build AGI. And the reason it worked—I cannot overstate how heretical it was at the time to say we’re gonna build AGI. So you filter out 99% of the world, and you only get the really talented, original thinkers. And that’s really powerful. If you’re doing the same thing everybody else is doing, if you’re building, like, the 10,000th photo-sharing app? Really hard to recruit talent.

OpenAI senior executives at the company’s headquarters in San Francisco on March 13, 2023, from left: Sam Altman, chief executive officer; Mira Murati, chief technology officer; Greg Brockman, president; and Ilya Sutskever, chief scientist. Photographer: Jim Wilson/The New York Times

-

LG 45GX990A – The world’s first bendable gaming monitor

The monitor resembles a typical thin flat screen when in its home position, but it can flex its 45-inch body to 900R curvature in the blink of an eye.

-

DMesh++ – An Efficient Differentiable Mesh for Complex Shapes

https://sonsang.github.io/dmesh2-project

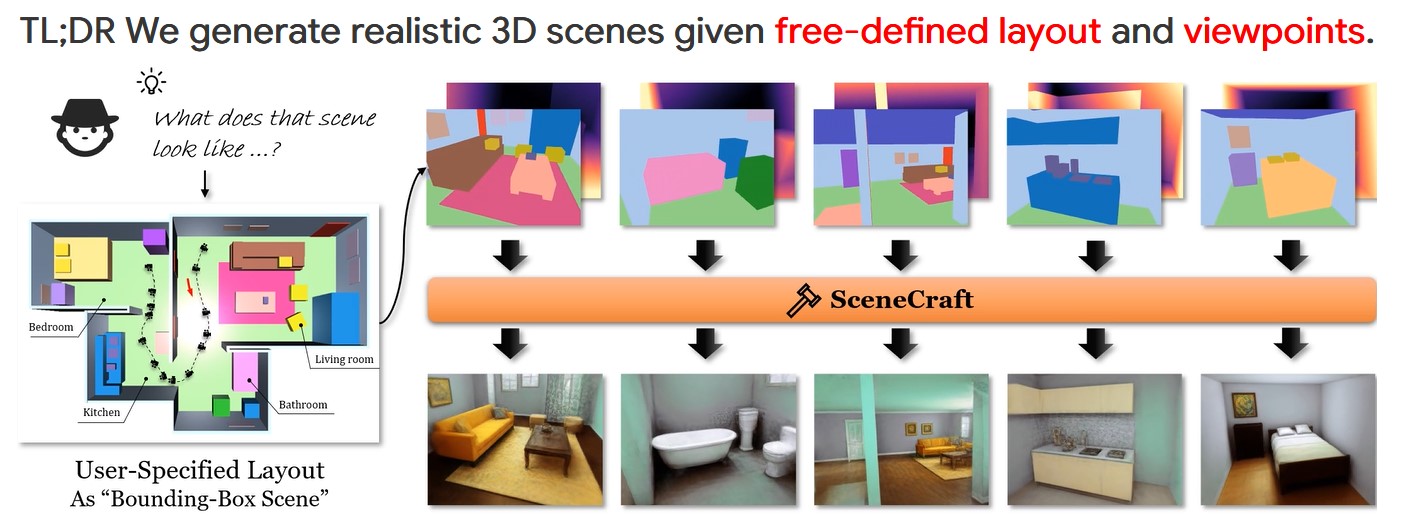

An efficient differentiable mesh-based method that can effectively handle complex 2D and 3D shapes. For instance, it can be used for reconstructing complex shapes from point clouds and multi-view images.

-

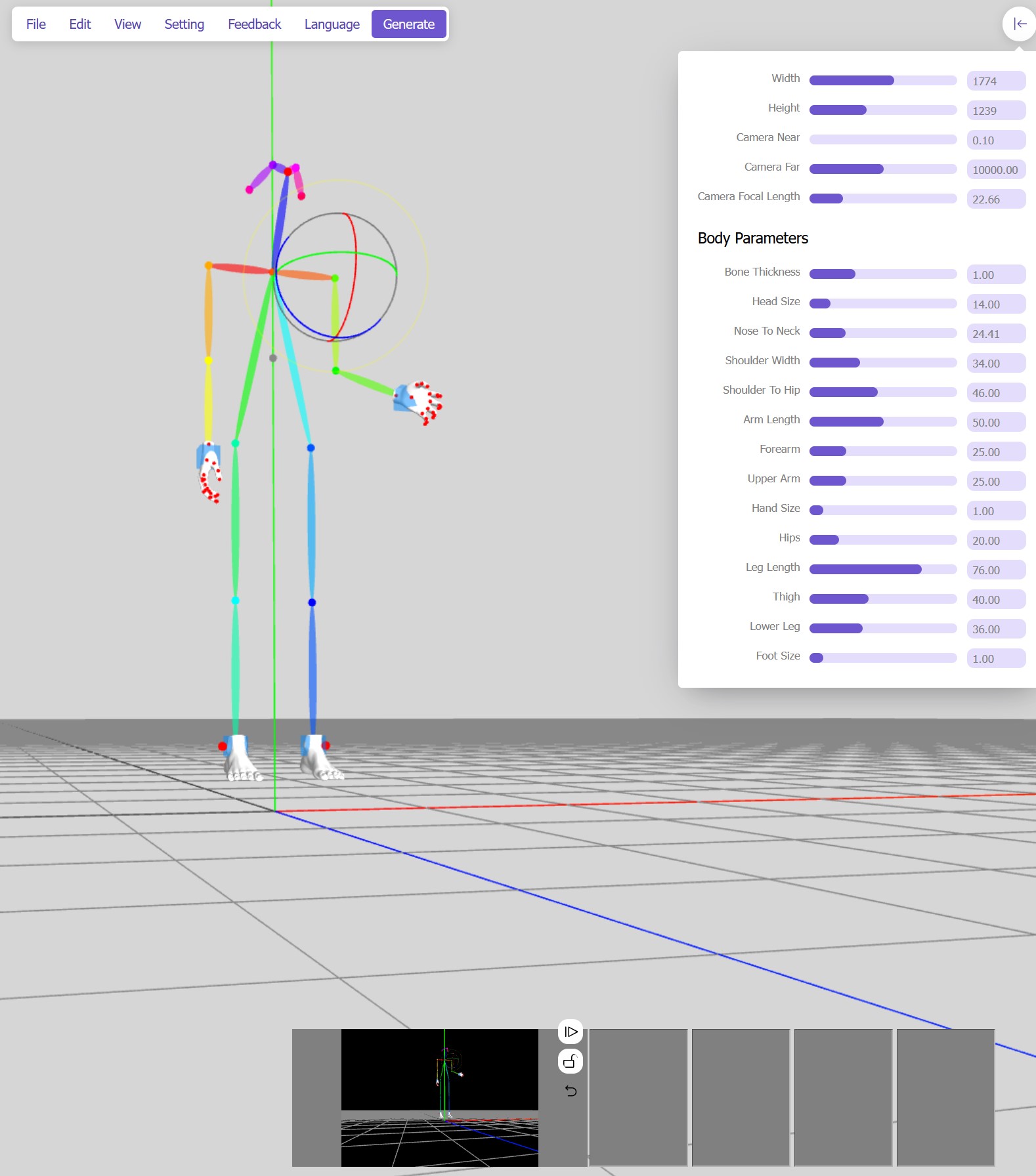

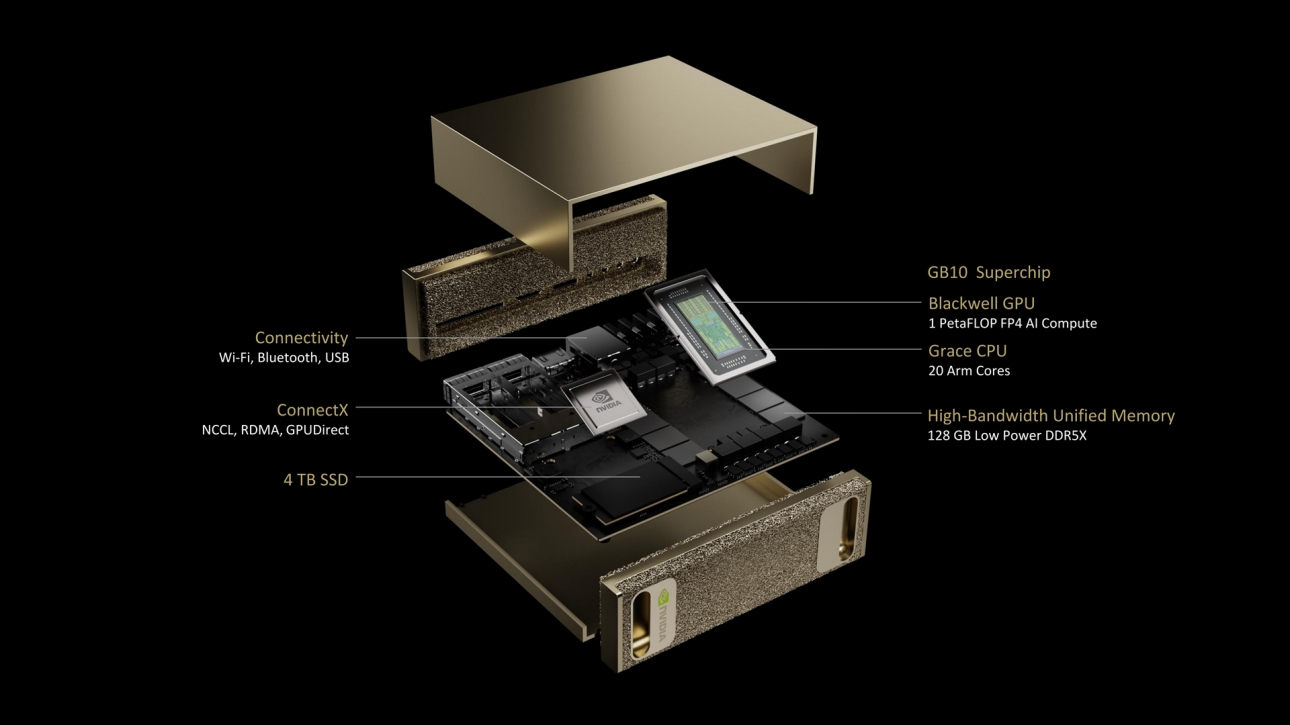

Nvidia unveils $3,000 desktop AI computer for home LLM researchers

https://arstechnica.com/ai/2025/01/nvidias-first-desktop-pc-can-run-local-ai-models-for-3000

https://www.nvidia.com/en-us/project-digits

Some smaller open-weights AI language models (such as Llama 3.1 70B, with 70 billion parameters) and various AI image-synthesis models like Flux.1 dev (12 billion parameters) could probably run comfortably on Project DIGITS, but larger open models like Llama 3.1 405B, with 405 billion parameters, may not. Given the recent explosion of smaller AI models, a creative developer could likely run quite a few interesting models on the unit.

DIGITS’ 128GB of unified RAM is notable because a high-power consumer GPU like the RTX 4090 has only 24GB of VRAM. Memory serves as a hard limit on AI model parameter size, and more memory makes room for running larger local AI models.
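As a back-of-the-envelope check on that memory limit, the sketch below estimates weight-only size as parameter count times bytes per parameter. The precision table and the helper names `weight_gb` and `fits` are illustrative assumptions, not figures from Nvidia or Ars Technica. The estimate ignores KV cache, activations, and runtime overhead, so a model that lands at or near the limit will likely not fit in practice.

```python
# Weight-only memory estimate: parameters x bytes per parameter.
# Assumption: 1 GB = 1e9 bytes; KV cache and activations are NOT counted.

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "4-bit": 0.5}  # common precisions

MODELS_B = {"Flux.1 dev": 12, "Llama 3.1 70B": 70, "Llama 3.1 405B": 405}
DEVICES_GB = {"RTX 4090": 24, "Project DIGITS": 128}


def weight_gb(params_billions: float, precision: str) -> float:
    """Approximate size of the weights alone, in GB."""
    return params_billions * BYTES_PER_PARAM[precision]


def fits(params_billions: float, precision: str, memory_gb: float) -> bool:
    """True if the weights alone are no larger than the available memory."""
    return weight_gb(params_billions, precision) <= memory_gb


for model, params in MODELS_B.items():
    for precision in BYTES_PER_PARAM:
        size = weight_gb(params, precision)
        verdict = ", ".join(
            f"{device}: {'fits' if fits(params, precision, mem) else 'too big'}"
            for device, mem in DEVICES_GB.items()
        )
        print(f"{model:15s} {precision:5s} {size:6.1f} GB  ({verdict})")
```

Under these assumptions, a 70-billion-parameter model needs about 140 GB at 16-bit precision, so it would only fit in 128GB once quantized to 8 bits or fewer, while a 405-billion-parameter model exceeds 128GB even at 4 bits.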

FEATURED POSTS

-

Running DeepSeek R1 Locally Due To Security Issues

DeepSeek Gets an ‘F’ in Safety From Researchers

https://gizmodo.com/deepseek-gets-an-f-in-safety-from-researchers-2000558645

-

Survivorship Bias: The error resulting from systematically focusing on successes and ignoring failures. How a young statistician saved U.S. planes during World War II.

A young statistician saved the lives of bomber crews.

His insight (and how it can change yours):

During World War II, the U.S. wanted to add reinforcement armor to specific areas of its planes.

Analysts examined returning bombers, plotted the bullet holes and damage on them (as in the image below), and came to the conclusion that adding armor to the tail, body, and wings would improve their odds of survival.

But a young statistician named Abraham Wald noted that this would be a tragic mistake. By only plotting data on the planes that returned, they were systematically omitting the data on a critical, informative subset: The planes that were damaged and unable to return.
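The effect of omitting the lost planes can be illustrated with a small simulation. Everything numeric here is a made-up assumption for illustration, not historical data: each plane takes one hit in a uniformly random area, and the hypothetical `LOSS_PROB` table makes engine and cockpit hits far more likely to bring a plane down. Counting holes only on the planes that return then makes the most dangerous areas look the least hit.

```python
import random

# Hypothetical areas and loss probabilities, chosen only to illustrate the bias.
AREAS = ["engine", "cockpit", "fuselage", "wings", "tail"]
LOSS_PROB = {"engine": 0.8, "cockpit": 0.6, "fuselage": 0.1, "wings": 0.1, "tail": 0.1}


def simulate(n_planes: int = 10_000, seed: int = 0):
    """Return (hits on all planes, hits on returning planes), counted per area."""
    rng = random.Random(seed)
    all_hits = {area: 0 for area in AREAS}
    returned_hits = {area: 0 for area in AREAS}
    for _ in range(n_planes):
        area = rng.choice(AREAS)              # one hit, uniformly at random
        all_hits[area] += 1
        if rng.random() >= LOSS_PROB[area]:   # the plane makes it home
            returned_hits[area] += 1
    return all_hits, returned_hits


def share(counts: dict) -> dict:
    """Convert raw counts to fractions of the total."""
    total = sum(counts.values())
    return {area: n / total for area, n in counts.items()}


all_hits, returned_hits = simulate()
all_share, returned_share = share(all_hits), share(returned_hits)
for area in AREAS:
    print(f"{area:9s} all planes: {all_share[area]:.2f}   returned only: {returned_share[area]:.2f}")
```

In this toy model, every area is hit equally often, yet the returning planes show few engine and cockpit holes and many holes in the fuselage, wings, and tail. An analyst looking only at the returners would reinforce the areas where hits were least likely to be fatal.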

-

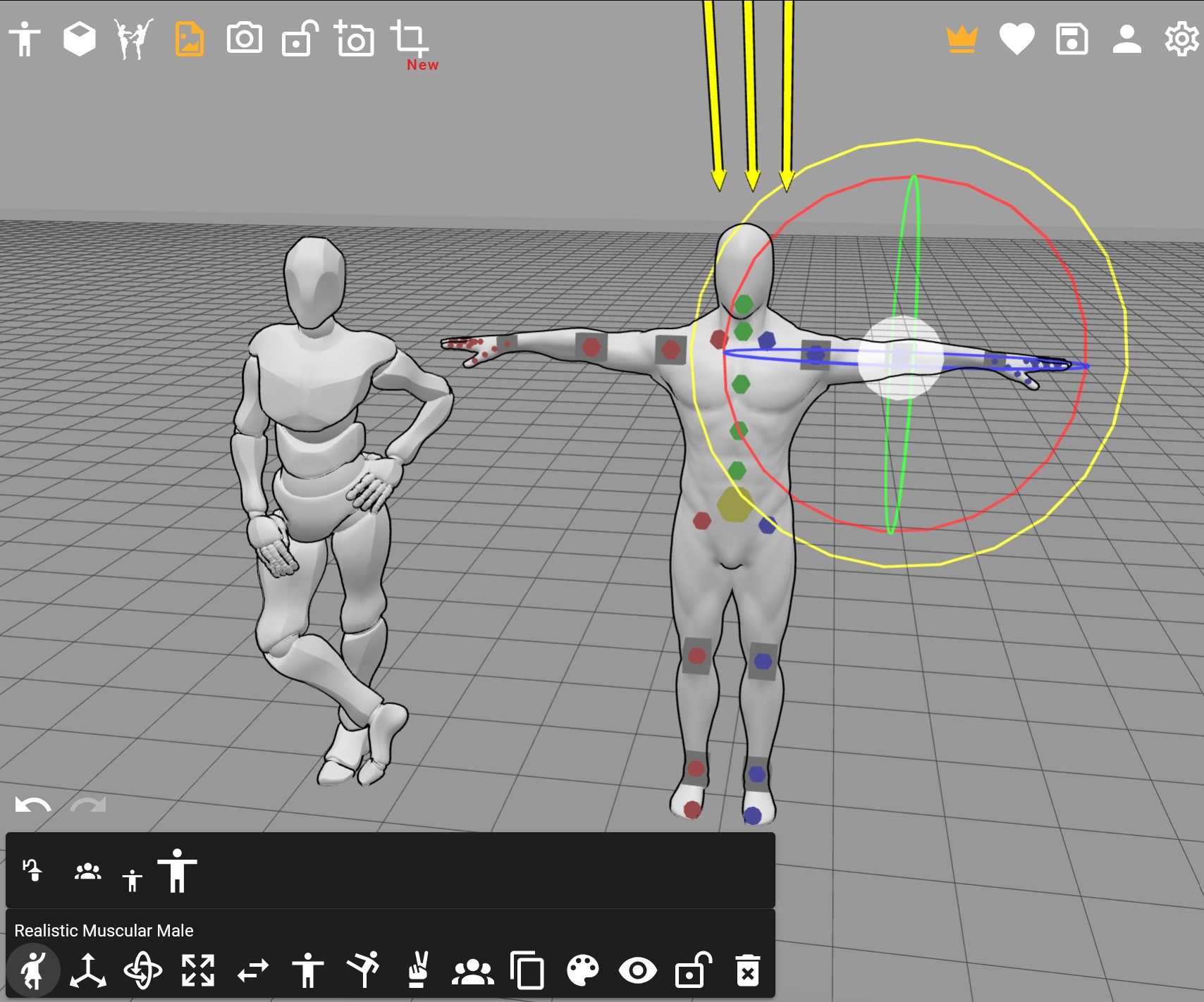

Nvidia – High-Fidelity 3D Mesh Generation at Scale with Meshtron

https://developer.nvidia.com/blog/high-fidelity-3d-mesh-generation-at-scale-with-meshtron/

Meshtron provides a simple and scalable, data-driven solution for generating intricate, artist-like meshes of up to 64K faces at 1024-level coordinate resolution. This is over an order of magnitude higher face count and 8x higher coordinate resolution compared to existing methods.
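To give a feel for what "1024-level coordinate resolution" means, here is a minimal sketch of uniform coordinate quantization. It assumes coordinates normalized to [-1, 1] and equal-width bins; the functions `quantize`, `dequantize`, and `max_error` are illustrative and are not Meshtron's actual tokenizer. The 128-level comparison is simply 1024 divided by the quoted 8x factor.

```python
def quantize(x: float, levels: int) -> int:
    """Map a coordinate in [-1, 1] to an integer bin index in [0, levels - 1]."""
    x = min(max(x, -1.0), 1.0)                      # clamp to the assumed range
    return min(int((x + 1.0) / 2.0 * levels), levels - 1)


def dequantize(q: int, levels: int) -> float:
    """Return the center of bin q, back in the [-1, 1] range."""
    return (q + 0.5) / levels * 2.0 - 1.0


def max_error(levels: int) -> float:
    """Worst-case round-trip error: half of the bin width 2 / levels."""
    return 1.0 / levels


for levels in (128, 1024):
    x = 0.3337
    restored = dequantize(quantize(x, levels), levels)
    print(f"{levels:5d} levels: {x} -> {restored:.5f} (max error {max_error(levels):.5f})")
```

With these assumptions, 1024 levels correspond to 10 bits per coordinate, and the worst-case positional error is 8 times smaller than at 128 levels.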