LATEST POSTS

-

Google Gemini Robotics

For safety considerations, Google mentions a “layered, holistic approach” that maintains traditional robot safety measures like collision avoidance and force limitations. The company describes developing a “Robot Constitution” framework inspired by Isaac Asimov’s Three Laws of Robotics and releasing a dataset unsurprisingly called “ASIMOV” to help researchers evaluate safety implications of robotic actions.

This new ASIMOV dataset represents Google’s attempt to create standardized ways to assess robot safety beyond physical harm prevention. The dataset appears designed to help researchers test how well AI models understand the potential consequences of actions a robot might take in various scenarios. According to Google’s announcement, the dataset will “help researchers to rigorously measure the safety implications of robotic actions in real-world scenarios.”

-

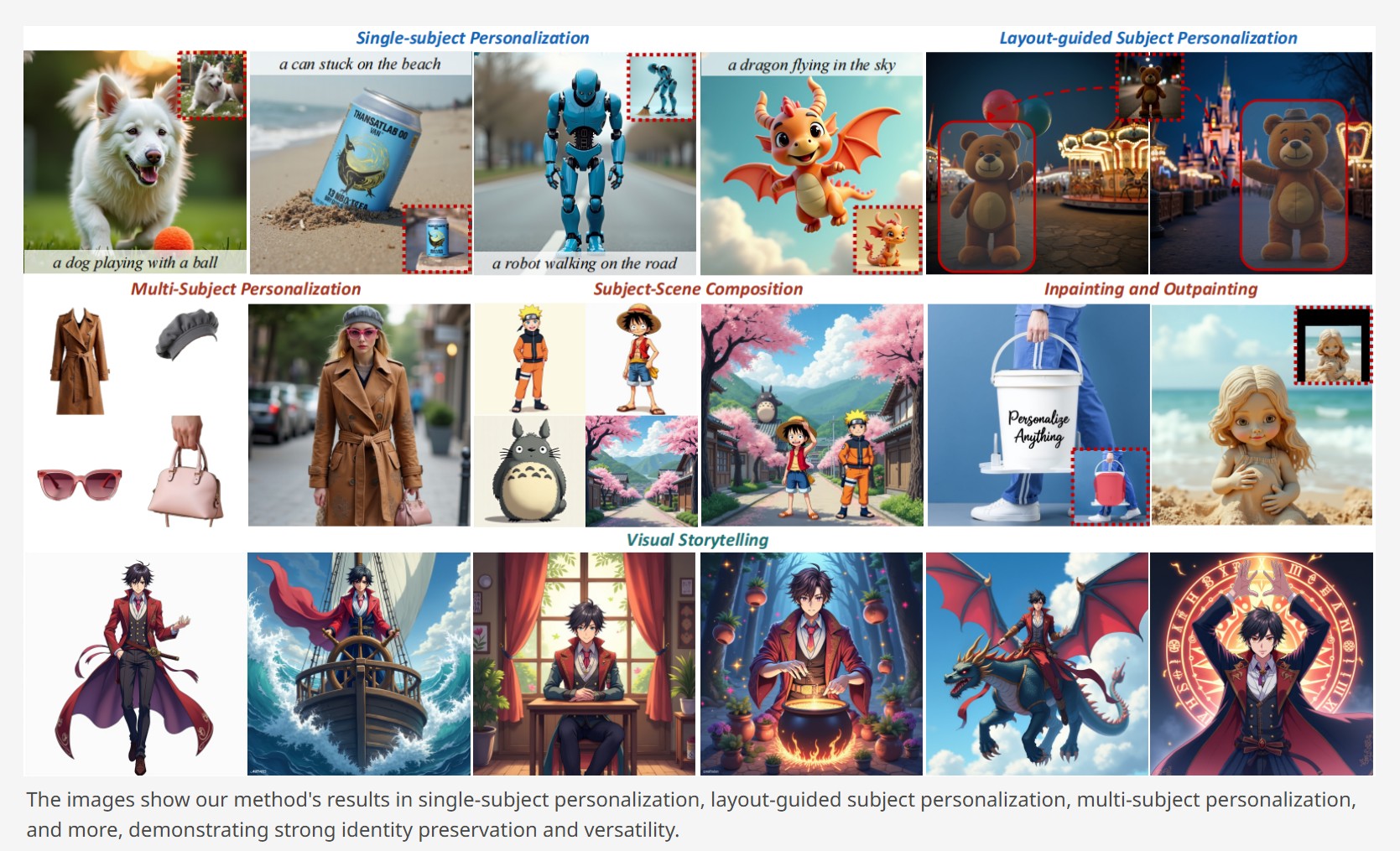

Personalize Anything – For Free with Diffusion Transformer

https://fenghora.github.io/Personalize-Anything-Page

Customize any subject with advanced DiT without additional fine-tuning.

-

Google Gemini 2.0 Flash new AI model extremely proficient at removing watermarks from images

-

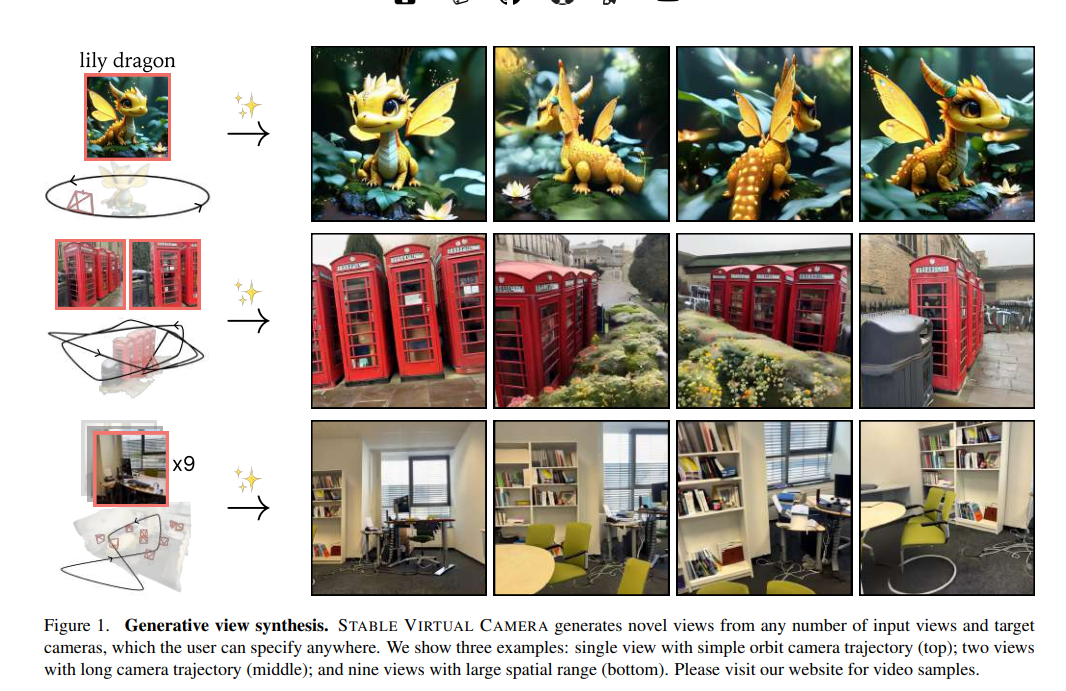

Stability.ai – Introducing Stable Virtual Camera: Multi-View Video Generation with 3D Camera Control

Capabilities

Stable Virtual Camera offers advanced capabilities for generating 3D videos, including:

- Dynamic Camera Control: Supports user-defined camera trajectories as well as multiple dynamic camera paths, including: 360°, Lemniscate (∞ shaped path), Spiral, Dolly Zoom In, Dolly Zoom Out, Zoom In, Zoom Out, Move Forward, Move Backward, Pan Up, Pan Down, Pan Left, Pan Right, and Roll.

- Flexible Inputs: Generates 3D videos from just one input image or up to 32.

- Multiple Aspect Ratios: Capable of producing videos in square (1:1), portrait (9:16), landscape (16:9), and other custom aspect ratios without additional training.

- Long Video Generation: Ensures 3D consistency in videos up to 1,000 frames, enabling seamless

Model limitations

In its initial version, Stable Virtual Camera may produce lower-quality results in certain scenarios. Input images featuring humans, animals, or dynamic textures like water often lead to degraded outputs. Additionally, highly ambiguous scenes, complex camera paths that intersect objects or surfaces, and irregularly shaped objects can cause flickering artifacts, especially when target viewpoints differ significantly from the input images.

-

Sony tests AI-powered PlayStation characters

https://www.independent.co.uk/tech/ai-playstation-characters-sony-ps5-chatgpt-b2712813.html

A demo video, first reported by The Verge, showed an AI version of the character Aloy from the PlayStation game Horizon Forbidden West conversing through voice prompts during gameplay on the PS5 console.

The character’s facial expressions are also powered by Sony’s advanced AI software Mockingbird, while the speech artificially replicates the voice of the actor Ashly Burch.

FEATURED POSTS

-

Lisa Tagliaferri – 3 Python Machine Learning Projects

A Compilation of 3 Python Machine Learning Projects

- How To Build a Machine Learning Classifier in Python with Scikit-learn

- How To Build a Neural Network to Recognize Handwritten Digits with TensorFlow

- Bias-Variance for Deep Reinforcement Learning: How To Build a Bot for Atari with OpenAI Gym

-

The new Blackmagic URSA Cine Immersive camera – 8160 x 7200 resolution per eye

Based on the new Blackmagic URSA Cine platform, the new Blackmagic URSA Cine Immersive model features a fixed, custom lens system with a sensor that features 8160 x 7200 resolution per eye with pixel level synchronization. It has an extremely wide 16 stops of dynamic range, and shoots at 90 fps stereoscopic into a Blackmagic RAW Immersive file. The new Blackmagic RAW Immersive file format is an enhanced version of Blackmagic RAW that’s been designed to make immersive video simple to use through the post production workflow.

-

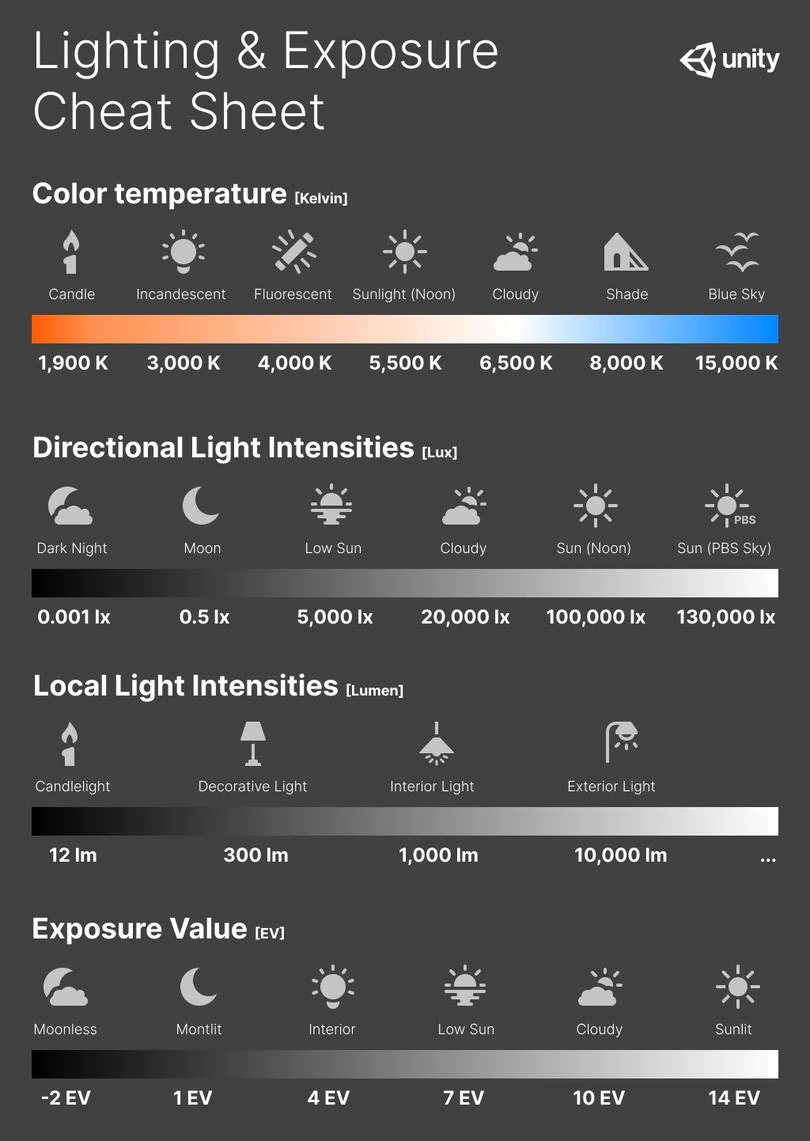

Photography basics: Exposure Value vs Photographic Exposure vs Il/Luminance vs Pixel luminance measurements

Also see: https://www.pixelsham.com/2015/05/16/how-aperture-shutter-speed-and-iso-affect-your-photos/

In photography, exposure value (EV) is a number that represents a combination of a camera’s shutter speed and f-number, such that all combinations that yield the same exposure have the same EV (for any fixed scene luminance).

The EV concept was developed in an attempt to simplify choosing among combinations of equivalent camera settings. Although all camera settings with the same EV nominally give the same exposure, they do not necessarily give the same picture. EV is also used to indicate an interval on the photographic exposure scale, with 1 EV corresponding to a standard power-of-2 exposure step, commonly referred to as a stop.

EV 0 corresponds to an exposure time of 1 sec and a relative aperture of f/1.0. If the EV is known, it can be used to select combinations of exposure time and f-number.

Note that EV is not the same as photographic exposure. Photographic exposure is defined as how much light hits the camera’s sensor. It depends on the camera settings, mainly aperture and shutter speed, as well as on how bright the scene is. Exposure value (EV) is a number that represents only the exposure setting of the camera.
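As a minimal sketch of the definition above, the standard relation EV = log2(N² / t) can be computed directly, where N is the f-number and t is the exposure time in seconds. The function name `exposure_value` is hypothetical and chosen only for this illustration.

```python
import math


def exposure_value(f_number: float, shutter_seconds: float) -> float:
    """Return EV = log2(N^2 / t) for f-number N and exposure time t in seconds."""
    return math.log2(f_number ** 2 / shutter_seconds)


# f/1.0 at 1 second is the reference point of the scale.
print(exposure_value(1.0, 1.0))  # 0.0

# Closing the aperture or shortening the exposure time raises the EV.
print(exposure_value(8.0, 1 / 125))
```

Note that the result depends only on the two camera settings, not on the scene being photographed.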

Thus, strictly, EV is not a measure of luminance (indirect or reflected exposure) or illuminance (incident exposure); rather, an EV corresponds to a luminance (or illuminance) for which a camera with a given ISO speed would use the indicated EV to obtain the nominally correct exposure. Nonetheless, it is common practice among photographic equipment manufacturers to express luminance in EV for ISO 100 speed, as when specifying metering range or autofocus sensitivity.
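To illustrate how a luminance can be expressed as an EV at a given ISO speed, here is a rough sketch using the reflected-light meter relation EV = log2(L · S / K). The calibration constant K = 12.5 is an assumption (it is a commonly used value, but meter manufacturers differ), and the function name `ev_from_luminance` is hypothetical.

```python
import math


def ev_from_luminance(luminance_cd_m2: float, iso: float = 100.0, k: float = 12.5) -> float:
    """Return the EV a meter would indicate for a scene luminance L (cd/m^2).

    Uses EV = log2(L * S / K). K = 12.5 is an assumed calibration constant;
    real meters may use a slightly different value.
    """
    return math.log2(luminance_cd_m2 * iso / k)


# With K = 12.5 and ISO 100, a luminance of 0.125 cd/m^2 maps to EV 0.
print(ev_from_luminance(0.125))  # 0.0

# A much brighter surface maps to a correspondingly higher EV.
print(ev_from_luminance(4000.0))
```

Doubling the ISO speed for the same luminance raises the indicated EV by exactly one stop, which is why such figures are only meaningful when the ISO is stated.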

The exposure depends on two things: how much light gets through the lens to the camera’s sensor and for how long the sensor is exposed. The former is a function of the aperture value while the latter is a function of the shutter speed. Exposure value is a number that represents this potential amount of light that could hit the sensor. It is important to understand that exposure value is a measure of how exposed the sensor is to light and not a measure of how much light actually hits the sensor. The exposure value is independent of how lit the scene is. For example, a given pair of aperture value and shutter speed represents the same exposure value whether the camera is used on a very bright day or on a dark night.

Each exposure value number represents all the possible shutter and aperture settings that result in the same exposure. Although the exposure value is the same for different combinations of aperture values and shutter speeds, the resulting photo can be very different (the aperture controls the depth of field while shutter speed controls how much motion is captured).
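The idea that one EV covers many aperture and shutter pairs can be sketched by rearranging EV = log2(N² / t) into t = N² / 2^EV. The helper names below are hypothetical, and the list of f-numbers is just an example set of nominal full stops.

```python
def shutter_seconds(ev: float, f_number: float) -> float:
    """Return the exposure time t = N^2 / 2^EV that gives the requested EV."""
    return f_number ** 2 / 2 ** ev


def equivalent_settings(ev: float, f_numbers=(2.0, 2.8, 4.0, 5.6, 8.0)):
    """List (f-number, exposure time in seconds) pairs that share the same EV."""
    return [(n, shutter_seconds(ev, n)) for n in f_numbers]


# All of these pairs have EV 12, but depth of field and motion blur differ.
# Nominal f-numbers such as 2.8 and 5.6 are rounded, so the times are approximate.
for n, t in equivalent_settings(12):
    print(f"f/{n:g} at 1/{1 / t:.0f} s")
```

Each step down the list closes the aperture by about one stop and lengthens the exposure time by about a factor of two to compensate.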

EV 0.0 is defined as the exposure when setting the aperture to f-number 1.0 and the shutter speed to 1 second. All other exposure values are relative to that number. Exposure values are on a base two logarithmic scale. This means that, for a given scene, every single step of EV, plus or minus 1, represents the exposure (actual light that hits the sensor) being halved or doubled, respectively.

Formulas

(more…)

-

Capturing the world in HDR for real time projects – Call of Duty: Advanced Warfare

Real-World Measurements for Call of Duty: Advanced Warfare

www.activision.com/cdn/research/Real_World_Measurements_for_Call_of_Duty_Advanced_Warfare.pdf

Local version

Real_World_Measurements_for_Call_of_Duty_Advanced_Warfare.pdf