LATEST POSTS

-

ComfyUI Tutorial Series Ep 25 – LTX Video – Fast AI Video Generator Model

https://comfyanonymous.github.io/ComfyUI_examples/ltxv

LTX-Video 2B v0.9.1 Checkpoint model

https://huggingface.co/Lightricks/LTX-Video/tree/main

More details under the post

-

AI and the Law – The AI-Copyright Trap document by Carys Craig

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4905118

“There are many good reasons to be concerned about the rise of generative AI (…). Unfortunately, there are also many good reasons to be concerned about copyright’s growing prevalence in the policy discourse around AI’s regulation. Insisting that copyright protects an exclusive right to use materials for text and data mining practices (whether for informational analysis or machine learning to train generative AI models) is likely to do more harm than good. As many others have explained, imposing copyright constraints will certainly limit competition in the AI industry, creating cost-prohibitive barriers to quality data and ensuring that only the most powerful players have the means to build the best AI tools (provoking all of the usual monopoly concerns that accompany this kind of market reality but arguably on a greater scale than ever before). It will not, however, prevent the continued development and widespread use of generative AI.”

…

“(…) As Michal Shur-Ofry has explained, the technical traits of generative AI already mean that its outputs will tend towards the dominant, likely reflecting ‘a relatively narrow, mainstream view, prioritizing the popular and conventional over diverse contents and narratives.’ Perhaps, then, if the political goal is to push for equality, participation, and representation in the AI age, critics’ demands should focus not on exclusivity but inclusivity. If we want to encourage the development of ethical and responsible AI, maybe we should be asking what kind of material and training data must be included in the inputs and outputs of AI to advance that goal. Certainly, relying on copyright and the market to dictate what is in and what is out is unlikely to advance a public interest or equality-oriented agenda.”

…

“If copyright is not the solution, however, it might reasonably be asked: what is? The first step to answering that question—to producing a purposively sound prescription and evidence-based prognosis, is to correctly diagnose the problem. If, as I have argued, the problem is not that AI models are being trained on copyright works without their owners’ consent, then requiring copyright owners’ consent and/or compensation for the use of their work in AI-training datasets is not the appropriate solution. (…) If the only real copyright problem is that the outputs of generative AI may be substantially similar to specific human-authored and copyright-protected works, then copyright law as we know it already provides the solution.”

-

Newton’s Cradle – An AI Film By Jeff Synthesized

Narrative voice via Artlistai; news reporter voice via PlayAI; all other voices are V2V in Elevenlabs.

Powered by (in order of amount) ‘HailuoAI’, ‘KlingAI’ and of course some of our special sauce. Performance capture by ‘Runway’s Act-One’.

Edited and color graded in ‘DaVinci Resolve’. Composited with ‘After Effects’.

In this film, the ‘Newton’s Cradle’ isn’t just a symbolic object—it represents the fragile balance between control and freedom in a world where time itself is being manipulated. The oscillation of the cradle reflects the constant push and pull of power in this dystopian society. By the end of the film, we discover that this seemingly innocuous object holds the potential to disrupt the system, offering a glimmer of hope that time can be reset and balance restored.

-

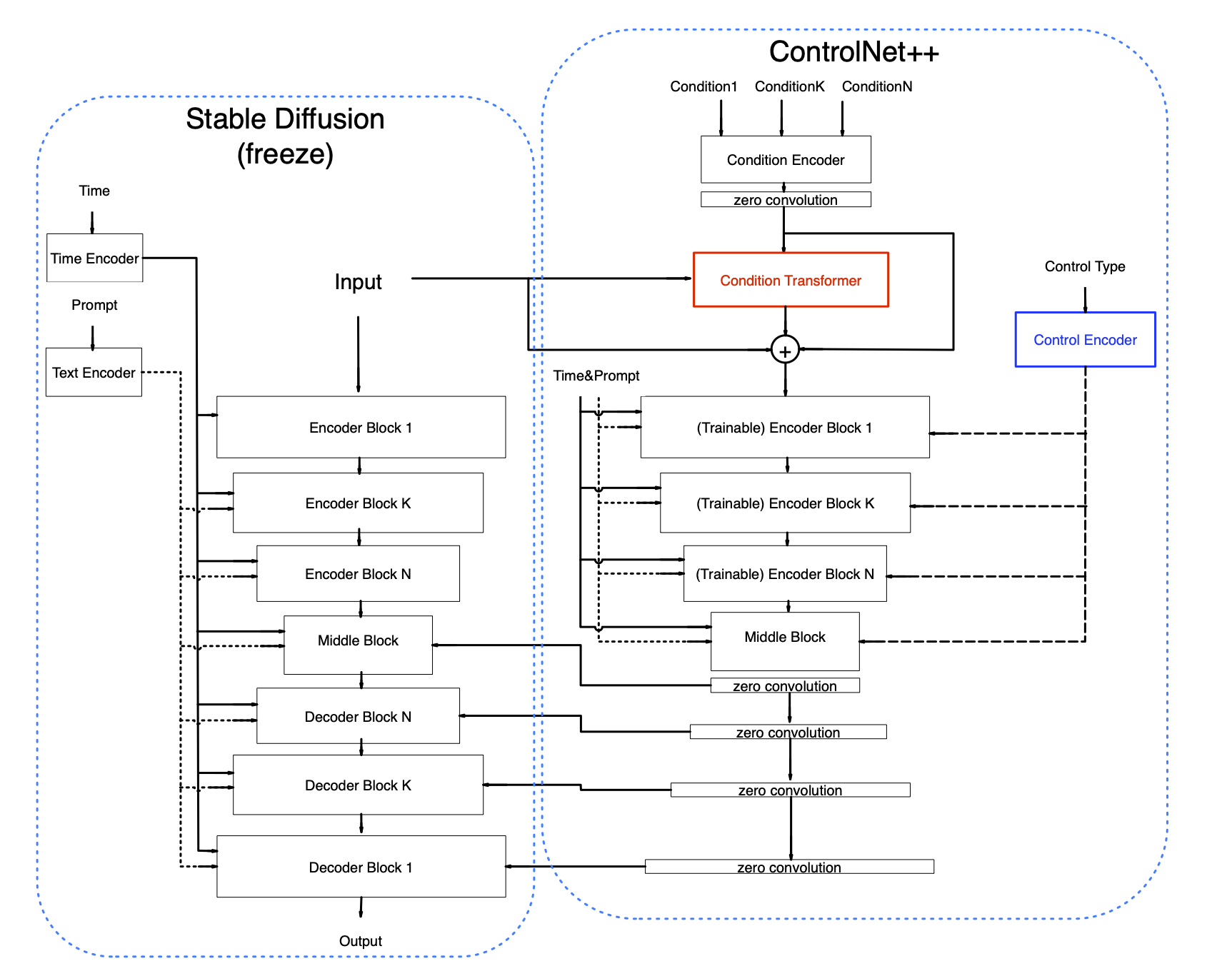

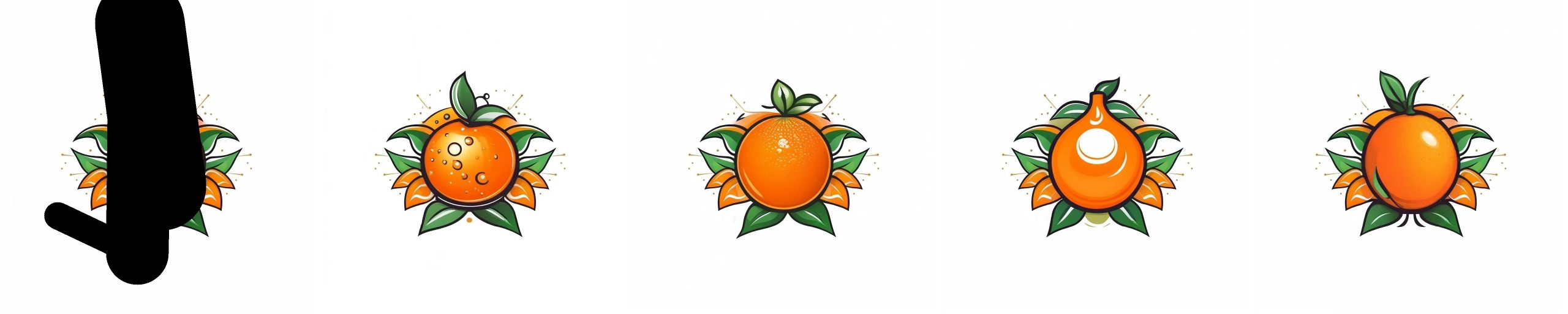

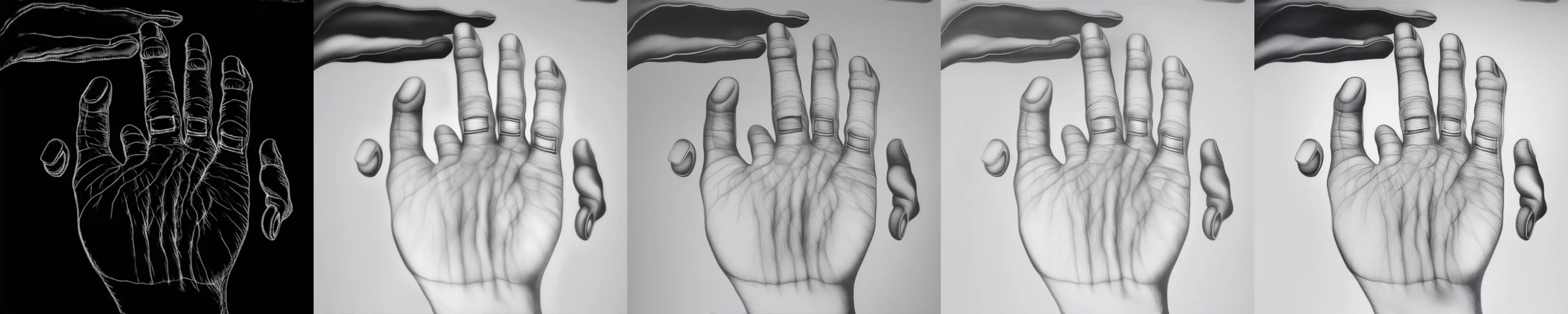

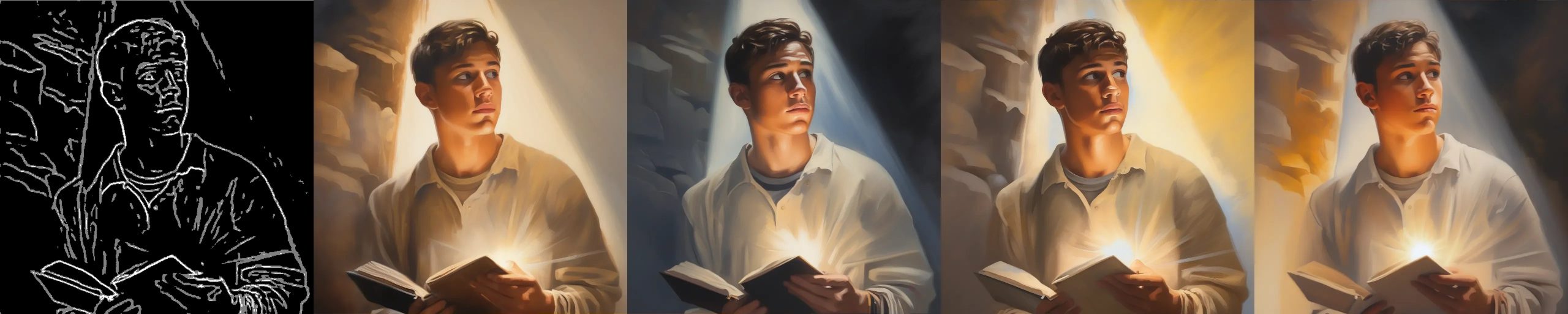

xinsir – controlnet-union-sdxl-1.0 examples

https://huggingface.co/xinsir/controlnet-union-sdxl-1.0

deblur

inpainting

outpainting

upscale

openpose

depthmap

canny

lineart

anime lineart

mlsd

scribble

hed

softedge

teed

segmentation

normals

openpose + canny

-

What is deepfake GAN (Generative Adversarial Network) technology?

https://www.techtarget.com/whatis/definition/deepfake

Deepfake technology is a type of artificial intelligence used to create convincing fake images, videos and audio recordings. The term describes both the technology and the resulting bogus content and is a portmanteau of deep learning and fake.

Deepfakes often transform existing source content where one person is swapped for another. They also create entirely original content where someone is represented doing or saying something they didn’t do or say.

Deepfakes aren’t edited or photoshopped videos or images. In fact, they’re created using specialized algorithms that blend existing and new footage. For example, subtle facial features of people in images are analyzed through machine learning (ML) to manipulate them within the context of other videos.

Deepfakes use two algorithms — a generator and a discriminator — to create and refine fake content. The generator builds a training data set based on the desired output, creating the initial fake digital content, while the discriminator analyzes how realistic or fake the initial version of the content is. This process is repeated, enabling the generator to improve at creating realistic content and the discriminator to become more skilled at spotting flaws for the generator to correct.

The combination of the generator and discriminator algorithms creates a generative adversarial network.

A GAN uses deep learning to recognize patterns in real images and then uses those patterns to create the fakes.

When creating a deepfake photograph, a GAN system views photographs of the target from an array of angles to capture all the details and perspectives.

When creating a deepfake video, the GAN views the video from various angles and analyzes behavior, movement and speech patterns.

This information is then run through the discriminator multiple times to fine-tune the realism of the final image or video.
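The generator/discriminator loop described above can be sketched in a few lines. This is a toy illustration only, not how a real deepfake system is built: it works on single numbers instead of images, the generator is a linear function of random noise, and the discriminator is a one-feature logistic classifier. All names (`train_toy_gan`, `real_mean`, `real_std`) and the hyperparameters are assumptions made for this example.

```python
import math
import random


def sigmoid(x):
    """Numerically safe logistic function, returns a value in [0, 1]."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, real_mean=4.0, real_std=0.5, seed=0):
    """Alternate discriminator and generator updates on 1-D data.

    Generator:      G(z) = a * z + b          (z is random noise)
    Discriminator:  D(x) = sigmoid(w * x + c) (estimated probability that x is real)
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters
    w, c = 0.1, 0.0   # discriminator parameters

    for _ in range(steps):
        # Discriminator step: push D(real) towards 1 and D(fake) towards 0
        # by descending the loss -log D(real) - log(1 - D(fake)).
        x_real = rng.gauss(real_mean, real_std)
        x_fake = a * rng.gauss(0.0, 1.0) + b
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        grad_w = -(1.0 - d_real) * x_real + d_fake * x_fake
        grad_c = -(1.0 - d_real) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: push D(fake) towards 1 by descending
        # the loss -log D(G(z)) with the discriminator held fixed.
        z = rng.gauss(0.0, 1.0)
        x_fake = a * z + b
        d_fake = sigmoid(w * x_fake + c)
        grad_x = -(1.0 - d_fake) * w   # derivative of the loss w.r.t. the fake sample
        a -= lr * grad_x * z
        b -= lr * grad_x

    return (a, b), (w, c)


if __name__ == "__main__":
    (a, b), (w, c) = train_toy_gan()
    print(f"generator:     G(z) = {a:.3f} * z + {b:.3f}")
    print(f"discriminator: D(x) = sigmoid({w:.3f} * x + {c:.3f})")
```

Adversarial training of this kind is known to oscillate rather than settle cleanly, so the printed parameters should be read as a snapshot of the tug-of-war between the two models, not as a converged result.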

FEATURED POSTS

-

Photography basics: Production Rendering Resolution Charts

https://www.urtech.ca/2019/04/solved-complete-list-of-screen-resolution-names-sizes-and-aspect-ratios/

Aspect ratios covered: 4:3, 16:9, 16:10, 3:2, 5:3, 5:4

| Name | Resolution |
| --- | --- |
| CGA | 320 x 200 |
| QVGA | 320 x 240 |
| VGA (SD, Standard Definition) | 640 x 480 |
| NTSC | 720 x 480 |
| WVGA | 854 x 450 |
| WVGA | 800 x 480 |
| PAL | 768 x 576 |
| SVGA | 800 x 600 |
| XGA | 1024 x 768 |
| not named | 1152 x 768 |
| HD 720 (720P, High Definition) | 1280 x 720 |
| WXGA | 1280 x 800 |
| WXGA | 1280 x 768 |
| SXGA | 1280 x 1024 |
| not named (768P, HD, High Definition) | 1366 x 768 |
| not named | 1440 x 960 |
| SXGA+ | 1400 x 1050 |
| WSXGA | 1680 x 1050 |
| UXGA (2MP) | 1600 x 1200 |
| HD1080 (1080P, Full HD) | 1920 x 1080 |
| WUXGA | 1920 x 1200 |
| 2K | 2048 x (any) |
| QWXGA | 2048 x 1152 |
| QXGA (3MP) | 2048 x 1536 |
| WQXGA | 2560 x 1600 |
| QHD (Quad HD) | 2560 x 1440 |
| QSXGA (5MP) | 2560 x 2048 |
| 4K UHD (4K, Ultra HD, Ultra-High Definition) | 3840 x 2160 |
| QUXGA+ | 3840 x 2400 |
| IMAX 3D | 4096 x 3072 |
| 8K UHD (8K, 8K Ultra HD, UHDTV) | 7680 x 4320 |
| 10K (10240×4320, 10K HD) | 10240 x (any) |
| 16K (Quad UHD, 16K UHD, 8640P) | 15360 x 8640 |
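To check which aspect ratio a given resolution from the chart above belongs to, the width and height can be reduced by their greatest common divisor. The helper below is a small sketch written for this post (the name `aspect_ratio` is not from the source chart). Note that the reduced form of 16:10 is 8:5, and that some resolutions, such as 1366 x 768, are only approximately 16:9 and do not reduce to a small ratio.

```python
from math import gcd


def aspect_ratio(width, height):
    """Reduce a pixel resolution to its smallest whole-number ratio."""
    divisor = gcd(width, height)
    return width // divisor, height // divisor


# A few rows taken from the chart: (name, width, height)
samples = [
    ("HD1080", 1920, 1080),
    ("WXGA", 1280, 800),
    ("not named (768P)", 1366, 768),
]

for name, w, h in samples:
    rw, rh = aspect_ratio(w, h)
    # The decimal value makes near-matches (e.g. "almost 16:9") easy to spot.
    print(f"{name}: {w} x {h} -> {rw}:{rh} ({w / h:.3f})")
```

Comparing the decimal value against 16 / 9 (about 1.778) or 4 / 3 (about 1.333) is usually the more practical test for production work, since cropped or padded formats rarely reduce to a clean ratio.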

-

StudioBinder.com – CRI color rendering index

www.studiobinder.com/blog/what-is-color-rendering-index

“The Color Rendering Index is a measurement of how faithfully a light source reveals the colors of whatever it illuminates, it describes the ability of a light source to reveal the color of an object, as compared to the color a natural light source would provide. The highest possible CRI is 100. A CRI of 100 generally refers to a perfect black body, like a tungsten light source or the sun.”

www.pixelsham.com/2021/04/28/types-of-film-lights-and-their-efficiency