
LATEST POSTS

-

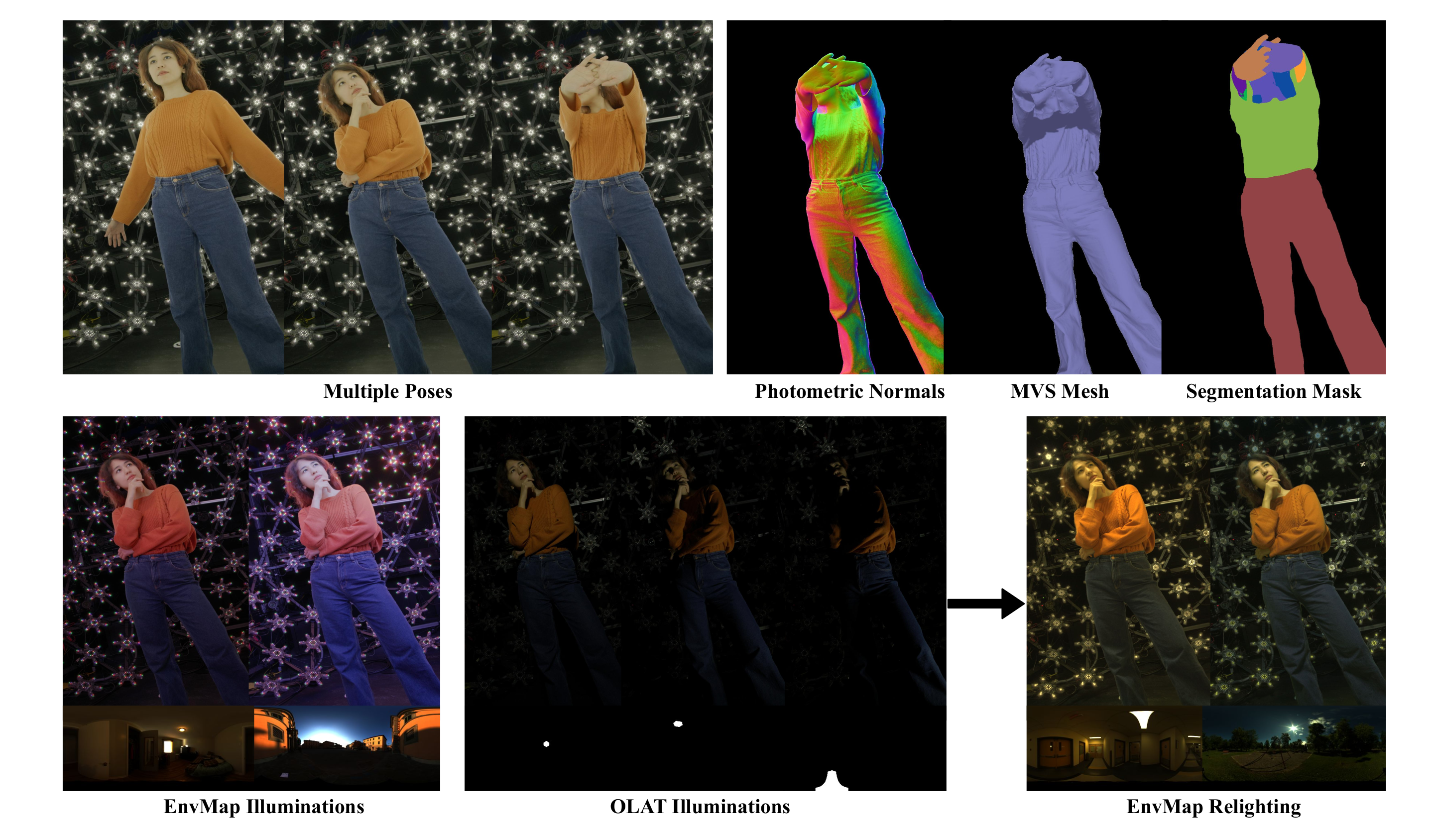

Andrii Shramko – How to process 20,000 photos for a 3DGS model on a single RTX 4090 using GreenValley International LiDAR360MLS

The goal was ambitious: to generate a hyper-detailed 3DGS scan from a massive dataset—20,000 drone photos at full resolution (5280x3956px). All of this on a single machine with just one RTX 4090 GPU.
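To put those numbers in perspective, here is a rough back-of-the-envelope calculation. It is only a sketch: the 8-bit RGB decoding and the 24 GiB VRAM figure are assumptions added for illustration, and the estimate ignores everything else a trainer keeps on the GPU (the splats themselves, gradients, optimizer state).

```python
# Rough size of the dataset described above.
NUM_PHOTOS = 20_000
WIDTH_PX, HEIGHT_PX = 5280, 3956

# Assumptions (not from the post): photos decode to 8-bit RGB,
# and the GPU offers 24 GiB of memory.
BYTES_PER_PIXEL = 3
VRAM_BYTES = 24 * 1024**3

pixels_per_photo = WIDTH_PX * HEIGHT_PX
total_pixels = pixels_per_photo * NUM_PHOTOS
bytes_per_photo = pixels_per_photo * BYTES_PER_PIXEL
decoded_bytes = bytes_per_photo * NUM_PHOTOS

# Upper bound on how many decoded photos could sit in VRAM at once,
# if nothing else were stored there.
max_resident_photos = VRAM_BYTES // bytes_per_photo

print(f"{pixels_per_photo / 1e6:.1f} MP per photo")            # 20.9 MP per photo
print(f"{total_pixels / 1e9:.1f} gigapixels in total")         # 417.8 gigapixels in total
print(f"{decoded_bytes / 1e12:.2f} TB when fully decoded")     # 1.25 TB when fully decoded
print(f"at most {max_resident_photos} photos fit in VRAM")     # at most 411 photos fit in VRAM
```

Under these assumptions only about 2% of the photos could be resident on the GPU at any moment, which is why a tool has to stream or subdivide the data rather than load it all.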

What was the problem?

Most existing tools simply can’t handle this volume of data. For instance, Postshot, which is excellent for many tasks, confidently processed up to 7,000 photos but choked on 20,000—it ran for two days without even starting the model training.

The Breakthrough Solution.

The real discovery was the software from GreenValley International: https://www.greenvalleyintl.com/LiDAR360MLS

Their approach is brilliant: instead of trying to swallow the entire dataset at once, the program divides it into smaller, manageable chunks, trains each one individually, and then merges them into one giant, detailed scene. After 40 hours of rendering, we got a stunning 103-million-splat PLY result.
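The divide, train, and merge idea can be sketched in a few lines. This is not how LiDAR360MLS is implemented (the post does not describe its internals); it is a toy illustration that assumes camera positions are known, uses a plain square grid for the chunks, and replaces real training with a placeholder. The names `split_into_tiles`, `train_tile`, and `merge` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical camera record: (photo name, x, y) with positions in metres.
Camera = Tuple[str, float, float]
TileKey = Tuple[int, int]


def split_into_tiles(cameras: List[Camera], tile_size: float) -> Dict[TileKey, List[Camera]]:
    """Group cameras into square ground tiles of side `tile_size`."""
    tiles: Dict[TileKey, List[Camera]] = defaultdict(list)
    for name, x, y in cameras:
        key = (int(x // tile_size), int(y // tile_size))
        tiles[key].append((name, x, y))
    return dict(tiles)


def train_tile(tile_cameras: List[Camera]) -> List[Tuple[float, float]]:
    """Placeholder for per-chunk training: emits one fake 'splat' per photo."""
    return [(x, y) for _, x, y in tile_cameras]


def merge(chunks: Iterable[List[Tuple[float, float]]]) -> List[Tuple[float, float]]:
    """Concatenate the per-chunk results into a single scene."""
    scene: List[Tuple[float, float]] = []
    for chunk in chunks:
        scene.extend(chunk)
    return scene


# Synthetic flight plan: 2,000 photos on a 100 x 20 grid covering 100 m x 100 m.
cameras = [(f"img_{i:05d}.jpg", float(i % 100), (i // 100) * 5.0) for i in range(2000)]

tiles = split_into_tiles(cameras, tile_size=25.0)
scene = merge(train_tile(tile) for tile in tiles.values())
print(len(tiles), "tiles,", len(scene), "splats after merge")
```

Each tile only needs its own photos in memory while it trains, so peak memory depends on the tile size rather than on the full dataset. A production tool would also need overlap between neighbouring tiles and some blending at the seams, which this sketch leaves out.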

-

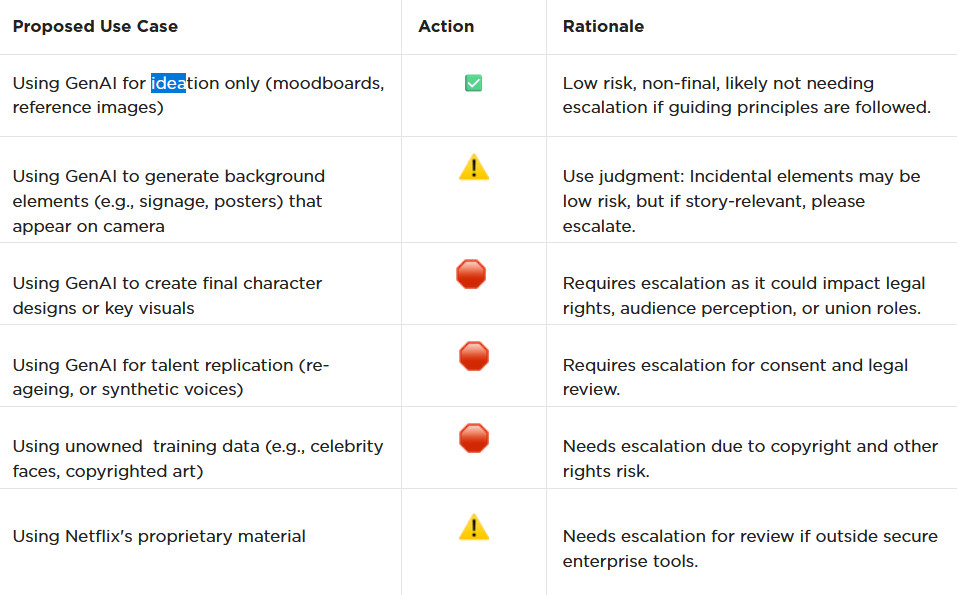

AI and the Law – Netflix: Using Generative AI in Content Production

https://www.cartoonbrew.com/business/netflix-generative-ai-use-guidelines-253300.html

- Temporary Use: AI-generated material can be used for ideation, visualization, and exploration—but is currently considered temporary and not part of final deliverables.

- Ownership & Rights: All outputs must be carefully reviewed to ensure rights, copyright, and usage are properly cleared before integrating into production.

- Transparency: Productions are expected to document and disclose how generative AI is used.

- Human Oversight: AI tools are meant to support creative teams, not replace them—final decision-making rests with human creators.

- Security & Compliance: Any use of AI tools must align with Netflix’s security protocols and protect confidential production material.

-

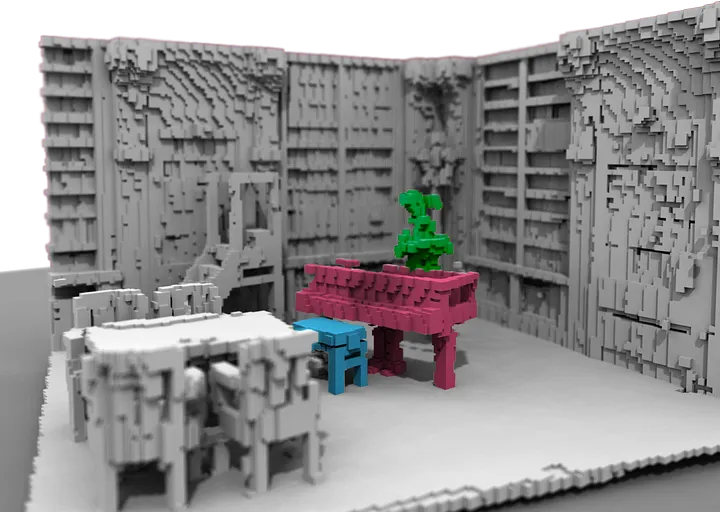

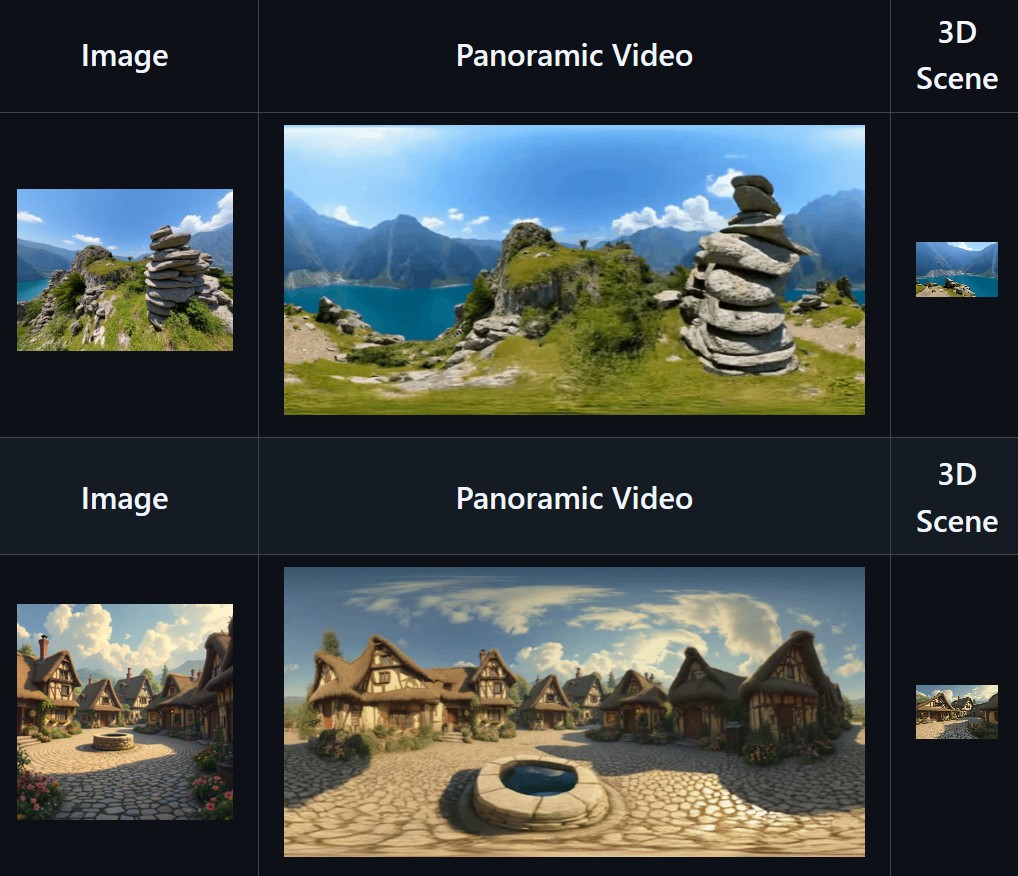

SkyworkAI Matrix-3D – Omnidirectional Explorable 3D World Generation

https://github.com/SkyworkAI/Matrix-3D

Matrix-3D utilizes panoramic representation for wide-coverage omnidirectional explorable 3D world generation that combines conditional video generation and panoramic 3D reconstruction.

- Large-Scale Scene Generation: Compared to existing scene generation approaches, Matrix-3D supports the generation of broader, more expansive scenes that allow for complete 360-degree free exploration.

- High Controllability: Matrix-3D supports both text and image inputs, with customizable trajectories and infinite extensibility.

- Strong Generalization Capability: Built upon self-developed 3D data and video model priors, Matrix-3D enables the generation of diverse and high-quality 3D scenes.

- Speed-Quality Balance: Two types of panoramic 3D reconstruction methods are proposed to achieve rapid and detailed 3D reconstruction respectively.
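For readers unfamiliar with panoramic representations, the sketch below shows the standard equirectangular mapping from a panorama pixel to a viewing direction. It is generic geometry, not code from the Matrix-3D repository, and the axis convention (y up, +z at the image centre) and the function name are assumptions chosen for this example.

```python
import math
from typing import Tuple


def equirect_pixel_to_direction(u: float, v: float, width: int, height: int) -> Tuple[float, float, float]:
    """Map panorama pixel coordinates (u, v) to a unit direction vector.

    Assumed convention: longitude runs from -pi (left edge) to +pi (right edge),
    latitude runs from +pi/2 (top edge) to -pi/2 (bottom edge), y points up,
    and the centre of the image looks along +z.
    """
    lon = (u + 0.5) / width * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v + 0.5) / height * math.pi
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)
    return (x, y, z)


# The centre of a 2048 x 1024 panorama looks straight ahead along +z.
print(equirect_pixel_to_direction(1023.5, 511.5, 2048, 1024))
```

Because every pixel of a single panorama corresponds to one direction on the full sphere, one image covers the whole 360-degree surroundings, which is what makes this representation attractive for explorable scenes.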

-

Zibra.AI – Real-Time Volumetric Effects in Virtual Production. Now free for Indies!

A New Era for Volumetrics

For a long time, volumetric visual effects were viable only in high-end offline VFX workflows. Large data footprints and poor real-time rendering performance limited their use: most teams simply avoided volumetrics altogether. It’s similar to the early days of online video: limited computational power and low network bandwidth made video content hard to share or stream. Today, of course, we can’t imagine the internet without it, and we believe volumetrics are on a similar path.

With advanced data compression and real-time, GPU-driven decompression, anyone can now bring CGI-class visual effects into Unreal Engine.

From now on, it’s completely free for individual creators!

What does it mean for you?


FEATURED POSTS

-

A question of ethics – What CG simulation and deepfakes mean for the future of performance

www.ibc.org/create-and-produce/re-animators-night-of-the-living-avatars/5504.article

“When your performance is captured as data it can be manipulated, reworked or sampled, much like the music industry samples vocals and beats. If we can do that then where does the intellectual property lie? Who owns authorship of the performance? Where are the boundaries?”

“Tracking use of an original data captured performance is tricky given that any character or creature you can imagine can be animated using the artist’s work as a base.”

“Conventionally, when an actor contracts with a studio they will assign rights to their performance in that production to the studio. Typically, that would also licence the producer to use the actor’s likeness in related uses, such as marketing materials, or video games.

Similarly, a digital avatar will be owned by the commissioners of the work who will buy out the actor’s performance for that role and ultimately own the IP.

However, in UK law there is no such thing as an ‘image right’ or ‘personality right’ because there is no legal process in the UK which protects the Intellectual Property Rights that identify an image or personality.

The only way in which a pure image right can be protected in the UK is under the Law of Passing-Off.”

“Whether a certain project is ethical or not depends mainly on the purpose of using the ‘face’ of the dead actor.”

“Legally, when an actor dies, the rights of their [image/name/brand] are controlled through their estate, which is often managed by family members. This can mean that different people have contradictory ideas about what is and what isn’t appropriate.”

“The advance of performance capture and VFX techniques can be liberating for much of the acting community. In theory, they would be cast on talent alone, rather than defined by how they look.”

“The question is whether that is ethically right.”

-

59 AI Filmmaking Tools For Your Workflow

https://curiousrefuge.com/blog/ai-filmmaking-tools-for-filmmakers

- Runway

- PikaLabs

- Pixverse (free)

- Haiper (free)

- Moonvalley (free)

- Morph Studio (free)

- SORA

- Google Veo

- Stable Video Diffusion (free)

- Leonardo

- Krea

- Kaiber

- Letz.AI

- Midjourney

- Ideogram

- DALL-E

- Firefly

- Stable Diffusion

- Google Imagen 3

- Polycam

- LTX Studio

- Simulon

- ElevenLabs

- Auphonic

- Adobe Enhance

- Adobe’s AI Rotoscoping

- Adobe Photoshop Generative Fill

- Canva Magic Brush

- Akool

- Topaz Labs

- Magnific.AI

- FreePik

- BigJPG

- LeiaPix

- Move AI

- Mootion

- HeyGen

- Synthesia

- ChatGPT-4

- Claude 3

- Nolan AI

- Google Gemini

- Meta Llama 3

- Suno

- Udio

- Stable Audio

- Soundful

- Google MusicLM

- Viggle

- SyncLabs

- Lalamu

- LensGo

- D-ID

- WonderStudio

- Cuebric

- Blockade Labs

- ChatGPT-4o

- Luma Dream Machine

- Pallaidium (free)

-

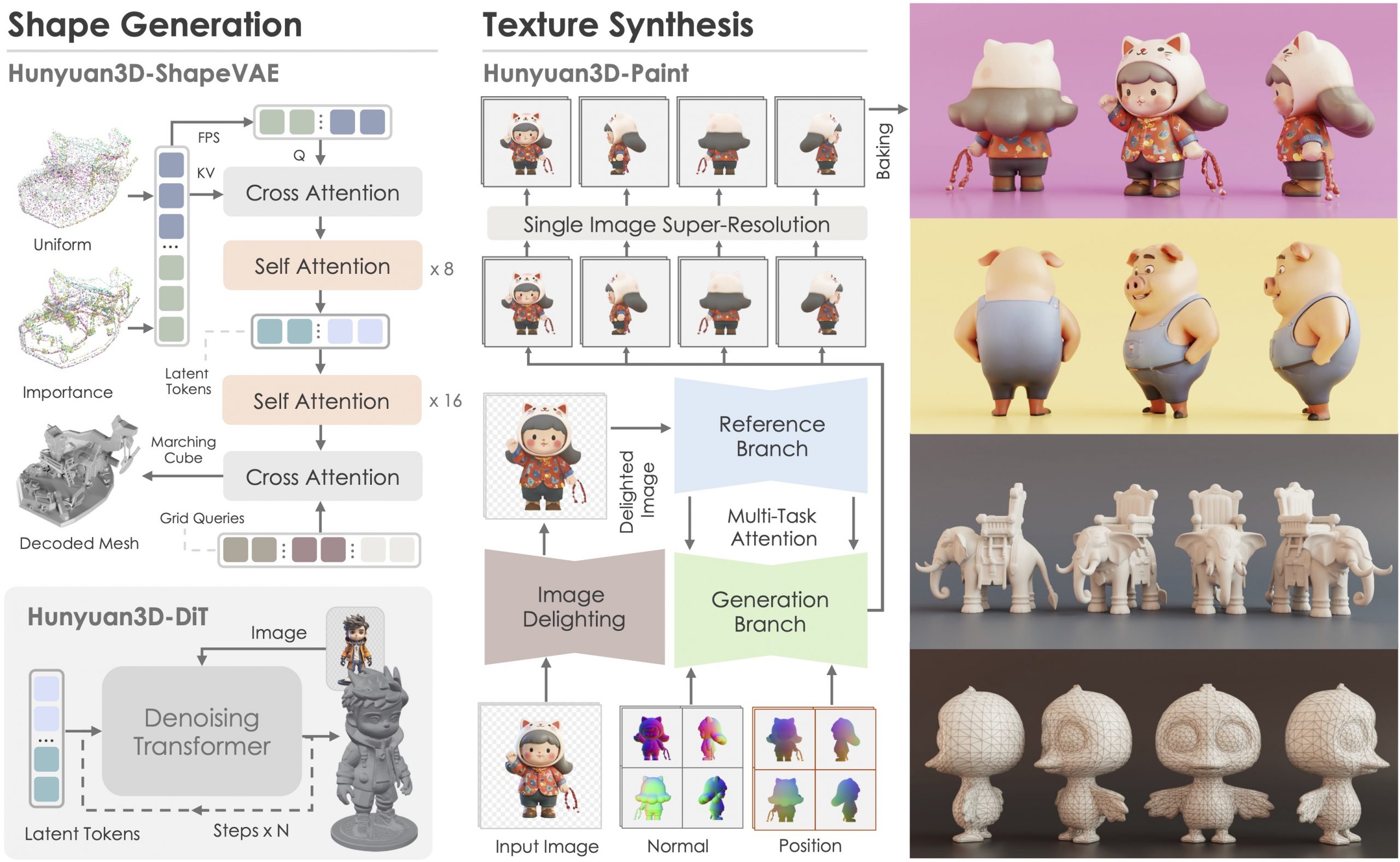

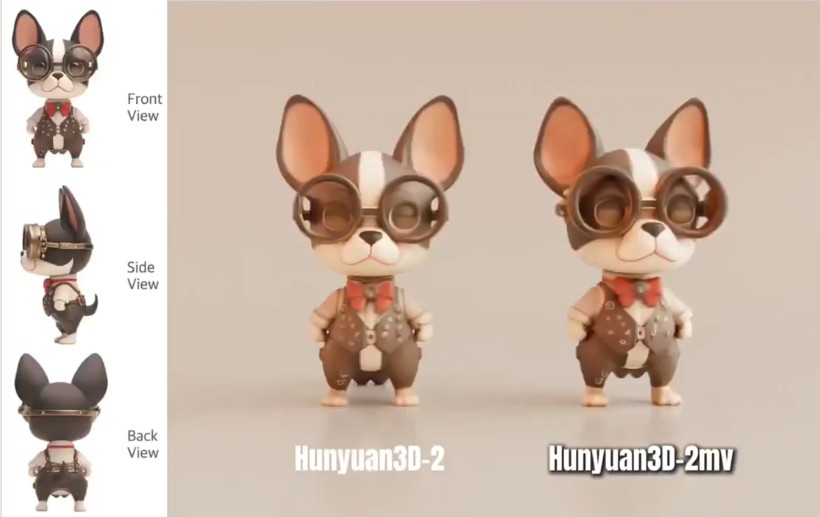

Tencent Hunyuan3D 2.1 goes Open Source and adds MV (Multi-view) and MV Mini

https://huggingface.co/tencent/Hunyuan3D-2mv

https://huggingface.co/tencent/Hunyuan3D-2mini

https://github.com/Tencent/Hunyuan3D-2

Tencent just made Hunyuan3D 2.1 open-source.

Tencent describes it as the first fully open-source, production-ready PBR 3D generative model with cinema-grade quality.

https://github.com/Tencent-Hunyuan/Hunyuan3D-2.1

What makes it special?

• Advanced PBR material synthesis brings realistic materials like leather, bronze, and more to life with stunning light interactions.

• Complete access to model weights, training/inference code, data pipelines.

• Optimized to run on accessible hardware.

• Built for real-world applications with professional-grade output quality.

They’re making it accessible to everyone:

• Complete open-source ecosystem with full documentation.

• Ready-to-use model weights and training infrastructure.

• Live demo available for instant testing.

• Comprehensive GitHub repository with implementation details.

-

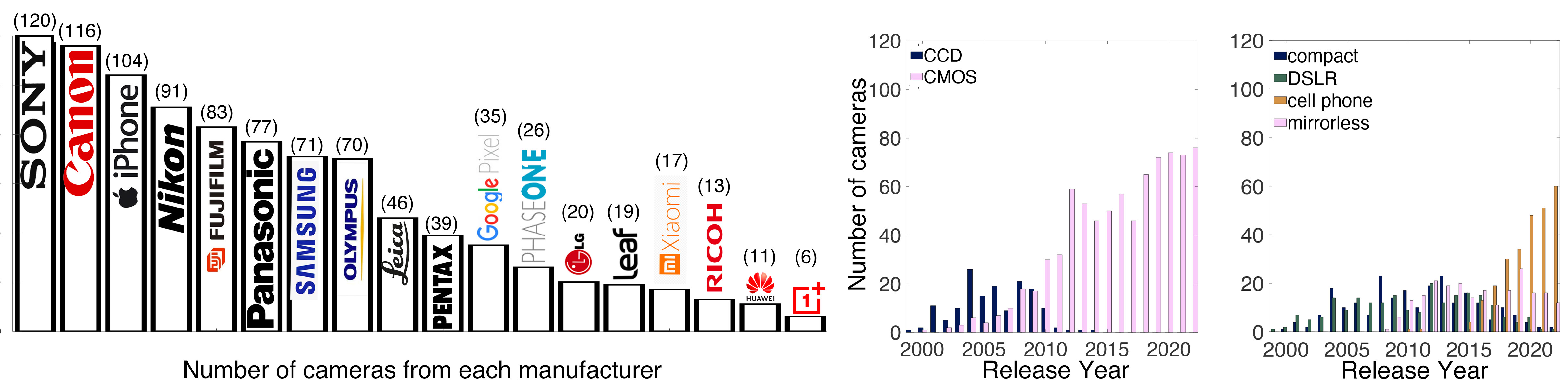

Photography Basics: Spectral Sensitivity Estimation Without a Camera

https://color-lab-eilat.github.io/Spectral-sensitivity-estimation-web/

A number of problems in computer vision and related fields would be mitigated if camera spectral sensitivities were known. As consumer cameras are not designed for high-precision visual tasks, manufacturers do not disclose spectral sensitivities. Estimating them requires a costly optical setup, which has prompted researchers to devise numerous indirect methods that aim to lower cost and complexity by using color targets. However, color targets introduce new complications that make the estimation more difficult, and consequently there is currently no simple, low-cost, robust go-to method for spectral sensitivity estimation that non-specialized research labs can adopt. Furthermore, even when not limited by hardware or cost, researchers frequently work with imagery from multiple cameras that they do not have in their possession.

To provide a practical solution to this problem, we propose a framework for spectral sensitivity estimation that not only does not require any hardware (including a color target), but also does not require physical access to the camera itself. Similar to other work, we formulate an optimization problem that minimizes a two-term objective function: a camera-specific term from a system of equations, and a universal term that bounds the solution space.

Unlike other work, we use publicly available high-quality calibration data to construct both terms. We use the colorimetric mapping matrices provided by the Adobe DNG Converter to formulate the camera-specific system of equations, and constrain the solutions using an autoencoder trained on a database of ground-truth curves. On average, we achieve reconstruction errors as low as those that can arise due to manufacturing imperfections between two copies of the same camera. We provide predicted sensitivities for more than 1,000 cameras that the Adobe DNG Converter currently supports, and discuss which tasks can become trivial when camera responses are available.
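The two-term objective described above can be illustrated with a small synthetic example. This is a sketch under loud assumptions, not the authors' method: the system matrix `A` is made up rather than derived from Adobe DNG Converter matrices, and the learned autoencoder prior is swapped for a simple smoothness penalty (second differences), a classic stand-in for "plausible curves are smooth". All variable names are hypothetical.

```python
import numpy as np

# Wavelength grid: 400-700 nm in 10 nm steps (31 unknowns per channel).
wavelengths = np.arange(400, 701, 10, dtype=float)
n = wavelengths.size


def gaussian(center: float, width: float) -> np.ndarray:
    """Smooth bump over the wavelength grid."""
    return np.exp(-0.5 * ((wavelengths - center) / width) ** 2)


# Synthetic ground truth: a green-like sensitivity curve.
s_true = gaussian(550.0, 40.0)

# Camera-specific term: 12 synthetic equations A @ s = b.
# With 12 equations and 31 unknowns the system alone is underdetermined.
centers = np.linspace(420.0, 680.0, 12)
A = np.stack([gaussian(c, 50.0) for c in centers])
b = A @ s_true

# Universal term (stand-in for the learned prior): penalise second differences.
D = np.diff(np.eye(n), n=2, axis=0)  # shape (n - 2, n)

# Minimise ||A s - b||^2 + lam * ||D s||^2 by stacking both terms
# into one ordinary least-squares problem.
lam = 1e-3
stacked_A = np.vstack([A, np.sqrt(lam) * D])
stacked_b = np.concatenate([b, np.zeros(D.shape[0])])
s_est, *_ = np.linalg.lstsq(stacked_A, stacked_b, rcond=None)

data_misfit = float(np.sum((A @ s_est - b) ** 2))
roughness_est = float(np.sum((D @ s_est) ** 2))
roughness_true = float(np.sum((D @ s_true) ** 2))
print(f"data misfit: {data_misfit:.3e}")
print(f"roughness (estimate vs. truth): {roughness_est:.3e} vs. {roughness_true:.3e}")
```

The weight `lam` trades off fidelity to the equations against the prior. The paper's contribution, as summarized above, lies in where the two terms come from (public calibration data and a trained autoencoder), which this toy version does not reproduce.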