LATEST POSTS

-

RigAnything – Template-Free Autoregressive Rigging for Diverse 3D Assets

https://www.liuisabella.com/RigAnything

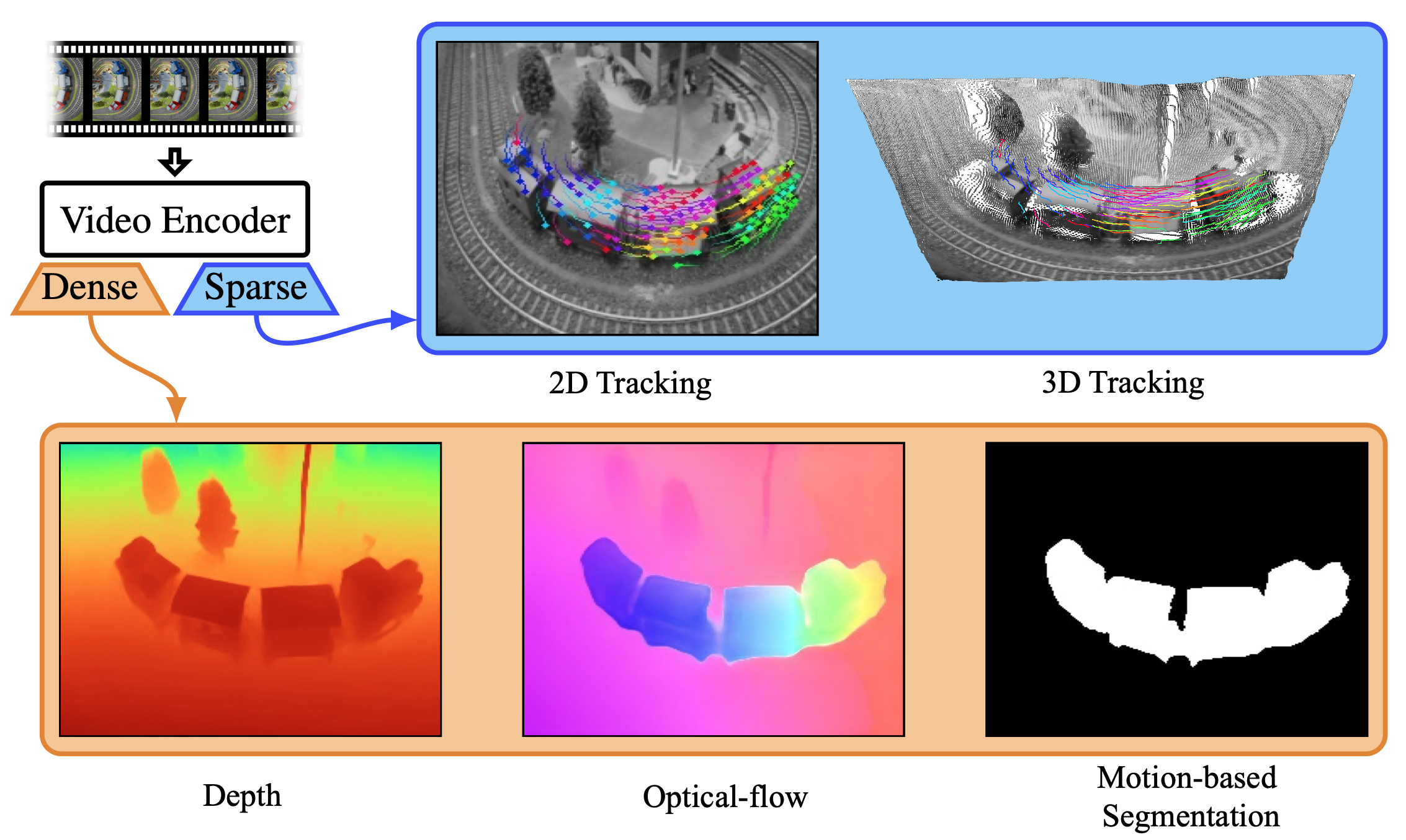

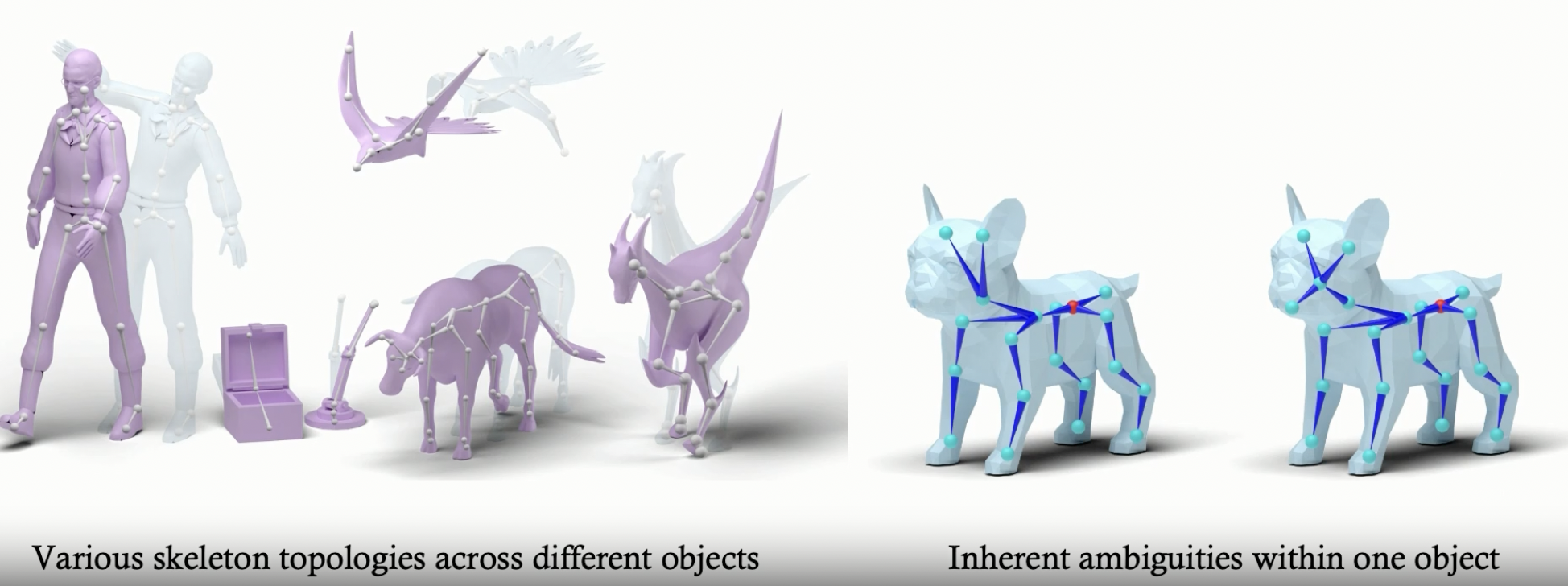

RigAnything was developed through a collaboration between UC San Diego, Adobe Research, and Hillbot Inc. It addresses one of 3D animation’s most persistent challenges: automatic rigging.

- Template-Free Autoregressive Rigging. A transformer-based model that sequentially generates skeletons without predefined templates, enabling automatic rigging across diverse 3D assets through probabilistic joint prediction and skinning weight assignment.

- Supports Arbitrary Input Poses. Generates high-quality skeletons for shapes in any pose through online joint pose augmentation during training, eliminating the common rest-pose requirement of existing methods and enabling broader real-world applications.

- Fast Rigging Speed. Runs 20x faster than existing template-based methods, completing rigging in under 2 seconds per shape.
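To make the "autoregressive, template-free" idea concrete, here is a minimal toy sketch of sequential skeleton generation: joints are emitted one at a time, each choosing a parent among the joints already generated, until a stop signal. Everything in it is an assumption for illustration. `Joint`, `toy_next_joint`, and `generate_skeleton` are hypothetical names, and a seeded random generator stands in for the learned transformer; this is not RigAnything's actual model or API, and skinning weights are left out.

```python
import random
from dataclasses import dataclass


@dataclass
class Joint:
    position: tuple  # (x, y, z) coordinates of the joint
    parent: int      # index of the parent joint, -1 for the root


def toy_next_joint(skeleton, rng, max_joints):
    """Hypothetical stand-in for a learned model.

    Returns the next Joint given the joints so far, or None to stop.
    A real model would predict a distribution from the input shape;
    here we just sample randomly.
    """
    if len(skeleton) >= max_joints:
        return None  # plays the role of an end-of-sequence signal
    position = tuple(rng.uniform(-1.0, 1.0) for _ in range(3))
    # The first joint is the root; later joints attach to an earlier joint.
    parent = -1 if not skeleton else rng.randrange(len(skeleton))
    return Joint(position, parent)


def generate_skeleton(seed=0, max_joints=8):
    """Build a skeleton one joint at a time, with no fixed template."""
    rng = random.Random(seed)
    skeleton = []
    while True:
        joint = toy_next_joint(skeleton, rng, max_joints)
        if joint is None:
            break
        skeleton.append(joint)
    return skeleton


skeleton = generate_skeleton()
for index, joint in enumerate(skeleton):
    print(index, joint.parent, joint.position)
```

Because each joint may only pick a parent that already exists, the result is always a valid tree, and the number of joints is decided during generation rather than fixed by a template.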

-

Skywork SkyReels – All-in-one open-source AI video creation based on Hunyuan

https://github.com/SkyworkAI/SkyReels-V1

All-in-one AI platform for video creation, including voiceover, lip sync, SFX, and editing. It turns text or images into video in one click, taking an idea to a finished video in minutes.

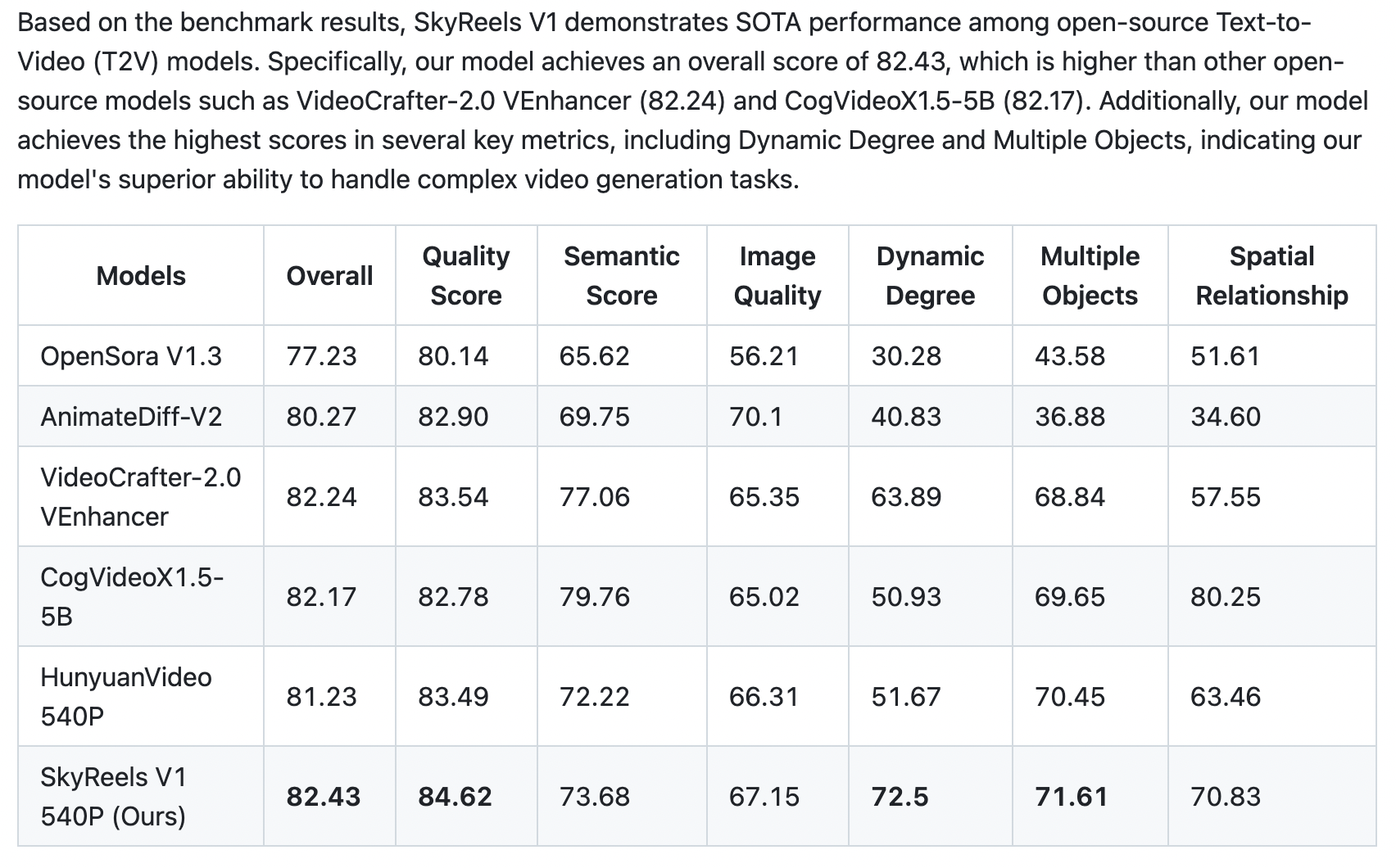

SkyReels-V1 is purpose-built for AI short video production and is based on Hunyuan. It achieves cinematic-grade micro-expression performances with 33 nuanced facial expressions and 400+ natural body movements that can be freely combined. The model integrates film-quality lighting aesthetics, generating visually stunning compositions and textures through text-to-video or image-to-video conversion, and reportedly outperforms all existing open-source models across key metrics.

-

Shanghai-based StepFun – Open-source Step-Video-T2V

https://huggingface.co/stepfun-ai/stepvideo-t2v

The model generates videos of up to 204 frames, using a high-compression Video-VAE (16×16 spatial, 8× temporal). It processes English and Chinese prompts via bilingual text encoders. A 3D full-attention DiT, trained with Flow Matching, denoises latent frames conditioned on text and timesteps. A video-based DPO stage further reduces artifacts, enhancing realism and smoothness.
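As a rough illustration of what those compression ratios mean, the sketch below estimates the size of the latent grid for a clip. It assumes plain ceiling division along each axis, which may not match how the actual Video-VAE pads or handles the first frame, and the 544×992 resolution is only an example value chosen here. `latent_shape` and `compression_factor` are hypothetical helper names, not part of the Step-Video-T2V code.

```python
import math


def latent_shape(frames, height, width, spatial=16, temporal=8):
    """Approximate latent grid (frames, height, width).

    Assumes simple ceiling division per axis; the real VAE may differ.
    """
    return (
        math.ceil(frames / temporal),
        math.ceil(height / spatial),
        math.ceil(width / spatial),
    )


def compression_factor(frames, height, width, spatial=16, temporal=8):
    """Ratio of pixel positions to latent positions (channels ignored)."""
    t, h, w = latent_shape(frames, height, width, spatial, temporal)
    return (frames * height * width) / (t * h * w)


# Example: a 204-frame clip at an assumed 544x992 resolution.
shape = latent_shape(204, 544, 992)
print(shape)  # (26, 34, 62)
print(round(compression_factor(204, 544, 992)))
```

When every dimension divides evenly, the factor is exactly 16 × 16 × 8 = 2048 positions per latent position; the 3D full-attention DiT then operates on this much smaller grid.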

FEATURED POSTS

-

59 AI Filmmaking Tools For Your Workflow

https://curiousrefuge.com/blog/ai-filmmaking-tools-for-filmmakers

- Runway

- PikaLabs

- Pixverse (free)

- Haiper (free)

- Moonvalley (free)

- Morph Studio (free)

- SORA

- Google Veo

- Stable Video Diffusion (free)

- Leonardo

- Krea

- Kaiber

- Letz.AI

- Midjourney

- Ideogram

- DALL-E

- Firefly

- Stable Diffusion

- Google Imagen 3

- Polycam

- LTX Studio

- Simulon

- ElevenLabs

- Auphonic

- Adobe Enhance

- Adobe’s AI Rotoscoping

- Adobe Photoshop Generative Fill

- Canva Magic Brush

- Akool

- Topaz Labs

- Magnific.AI

- FreePik

- BigJPG

- LeiaPix

- Move AI

- Mootion

- HeyGen

- Synthesia

- ChatGPT-4

- Claude 3

- Nolan AI

- Google Gemini

- Meta Llama 3

- Suno

- Udio

- Stable Audio

- Soundful

- Google MusicLM

- Viggle

- SyncLabs

- Lalamu

- LensGo

- D-ID

- WonderStudio

- Cuebric

- Blockade Labs

- ChatGPT-4o

- Luma Dream Machine

- Pallaidium (free)