LATEST POSTS

-

Narcis Calin’s Galaxy Engine – A free, open source simulation software

In 2025 I decided to start learning how to code, so I installed Visual Studio and started looking into C++. After days of watching tutorials and guides about the basics of C++ and programming, I decided to make something physics-related. I started with a dot that fell to the ground, and then I wanted to simulate gravitational attraction, so I made 2 circles attracting each other. I thought it was really cool to see something I had made with code actually work, so I kept building on top of that small, basic program.

And here we are, after roughly 8 months of learning programming. This is Galaxy Engine, a simulation software I have been making ever since I started my learning journey. It can currently simulate gravity, dark matter, galaxies, the Big Bang, temperature, fluid dynamics, breakable solids, planetary interactions, etc. The program can run many tens of thousands of particles in real time on the CPU thanks to the Barnes-Hut algorithm, combined with Morton (Z-order) curves. It also includes its own PBR 2D path tracer with BVH optimizations. The path tracer can simulate diffuse lighting, specular reflections, refraction, internal reflection, Fresnel, emission, dispersion, roughness, IOR, nested IOR and more! I tried to make the path tracer closer to traditional 3D render engines like V-Ray.

I honestly never imagined I would go this far with programming, and it has been an amazing learning experience so far. I think that mixing this knowledge with my 3D knowledge can unlock countless new possibilities. In case you are curious about Galaxy Engine, I made it completely free and open source so that anyone can build and compile it locally! You can find the source code on GitHub:

https://github.com/NarcisCalin/Galaxy-Engine
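Galaxy Engine itself is written in C++, and the post does not say exactly how it combines Morton curves with Barnes-Hut. A common approach, sketched here in Python under that assumption, is to quantize each particle's position to an integer grid, interleave the x and y bits into a Morton (Z-order) code, and sort particles by that code so that particles close in space also sit close in memory. The helper names, the 16-bit grid resolution and the square world bounds are illustrative choices, not taken from the Galaxy Engine source.

```python
def spread_bits(n: int) -> int:
    """Insert a zero bit between each of the low 16 bits of n."""
    n &= 0x0000FFFF
    n = (n | (n << 8)) & 0x00FF00FF
    n = (n | (n << 4)) & 0x0F0F0F0F
    n = (n | (n << 2)) & 0x33333333
    n = (n | (n << 1)) & 0x55555555
    return n


def morton_code(x: int, y: int) -> int:
    """Interleave the bits of x and y: x fills the even bits, y the odd bits."""
    return spread_bits(x) | (spread_bits(y) << 1)


def sort_by_morton(positions, world_min, world_size, bits=16):
    """Quantize 2D positions to a 2^bits grid and sort them along the Z-order curve.

    positions:  list of (x, y) floats
    world_min:  (x, y) of the lower-left corner of a square world (hypothetical bounds)
    world_size: side length of that square
    """
    cells = (1 << bits) - 1

    def key(p):
        # Normalize into [0, 1], clamp, then map to integer grid coordinates
        gx = int(min(max((p[0] - world_min[0]) / world_size, 0.0), 1.0) * cells)
        gy = int(min(max((p[1] - world_min[1]) / world_size, 0.0), 1.0) * cells)
        return morton_code(gx, gy)

    return sorted(positions, key=key)


print(morton_code(3, 3))  # 15
print(sort_by_morton([(0.9, 0.9), (0.1, 0.1), (0.9, 0.1), (0.1, 0.9)], (0.0, 0.0), 1.0))
```

Because every quadtree cell at a given depth corresponds to a contiguous run of Morton codes sharing the same prefix, a sorted array can be split into Barnes-Hut tree nodes by range, which tends to be more cache-friendly than inserting particles one by one into a pointer-based tree.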

-

Introduction to BytesIO

When you’re working with binary data in Python, whether that’s image bytes, network payloads, or any in-memory binary stream, you often need a file-like interface without touching the disk. That’s where BytesIO from the built-in io module comes in handy. It lets you treat a bytes buffer as if it were a file.

What Is BytesIO?

- Module: io
- Class: BytesIO
- Purpose:
  - Provides an in-memory binary stream.
  - Acts like a file opened in binary mode ('rb'/'wb'), but data lives in RAM rather than on disk.

```python
from io import BytesIO
```

Why Use BytesIO?

- Speed
  - No disk I/O: reads and writes happen in memory.
- Convenience
  - Emulates file methods (read(), write(), seek(), etc.).
  - Ideal for testing code that expects a file-like object.
- Safety
  - No temporary files cluttering up your filesystem.
- Integration
  - Libraries that accept binary file-like objects (e.g., PIL, requests) will work with BytesIO.
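To make the Convenience and Integration points concrete, here is a minimal sketch that round-trips data through the standard-library gzip module entirely in memory. gzip is used only because it ships with Python (the list above mentions PIL and requests); the helper names compress_to_buffer and decompress_from_buffer are hypothetical.

```python
import gzip
from io import BytesIO


def compress_to_buffer(payload: bytes) -> BytesIO:
    """Gzip payload into an in-memory buffer (hypothetical helper)."""
    buffer = BytesIO()
    # GzipFile accepts any binary file-like object through fileobj;
    # closing the GzipFile does not close the BytesIO we passed in
    with gzip.GzipFile(fileobj=buffer, mode="wb") as gz:
        gz.write(payload)
    # Rewind so the next reader starts at the beginning of the data
    buffer.seek(0)
    return buffer


def decompress_from_buffer(buffer: BytesIO) -> bytes:
    """Read gzip data back out of an in-memory buffer (hypothetical helper)."""
    with gzip.GzipFile(fileobj=buffer, mode="rb") as gz:
        return gz.read()


original = b"in-memory data " * 100
buf = compress_to_buffer(original)
print(len(buf.getvalue()) < len(original))      # True: repeated text compresses well
print(decompress_from_buffer(buf) == original)  # True
```

No temporary file is created at any point; the same pattern applies to any API that takes a binary file object.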

Basic Examples

1. Writing Bytes to a Buffer

```python
from io import BytesIO

# Create a BytesIO buffer
buffer = BytesIO()

# Write some binary data
buffer.write(b'Hello, \xF0\x9F\x98\x8A')  # includes a smiley emoji in UTF-8

# Retrieve the entire contents
data = buffer.getvalue()
print(data)                  # b'Hello, \xf0\x9f\x98\x8a'
print(data.decode('utf-8'))  # Hello, 😊

# Always close when done
buffer.close()
```
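getvalue() returns the whole buffer regardless of position, but read() starts at the current cursor, which sits at the end after writing. The short sketch below shows why seek(0) is usually needed before reading back, and that a BytesIO created from existing bytes starts with its cursor at the beginning.

```python
from io import BytesIO

buffer = BytesIO()
buffer.write(b"header:")
buffer.write(b"payload")

# After writing, the cursor sits at the end, so read() returns nothing yet
print(buffer.tell())   # 14
print(buffer.read())   # b''

# Rewind to the start before reading
buffer.seek(0)
print(buffer.read(7))  # b'header:'
print(buffer.read())   # b'payload'

# A BytesIO initialised with existing bytes starts with its cursor at 0
reader = BytesIO(b"\x89PNG")
print(reader.read(1))  # b'\x89'
```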

-

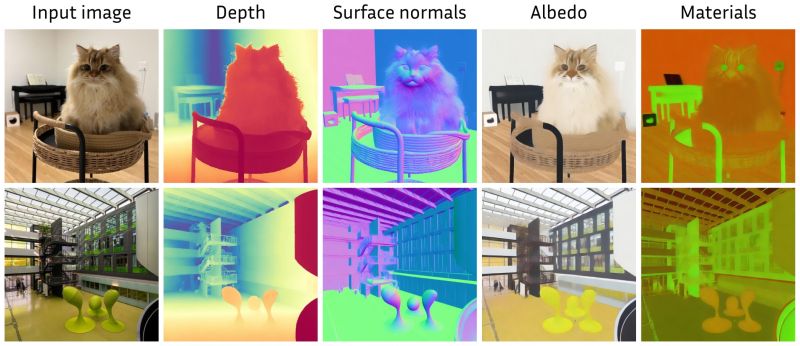

Marigold – repurposing diffusion-based image generators for dense predictions

Marigold repurposes Stable Diffusion for dense prediction tasks such as monocular depth estimation and surface normal prediction, delivering a level of detail often missing even in top discriminative models.

Key aspects that make it great:

– Reuses the original VAE and only lightly fine-tunes the denoising UNet

– Trained on just tens of thousands of synthetic image–modality pairs

– Runs on a single consumer GPU (e.g., RTX 4090)

– Zero-shot generalization to real-world, in-the-wild images

https://mlhonk.substack.com/p/31-marigold

https://arxiv.org/pdf/2505.09358

https://marigoldmonodepth.github.io/

-

Runway Aleph

https://runwayml.com/research/introducing-runway-aleph

Generate New Camera Angles

Generate the Next Shot

Use Any Style to Transfer to a Video

Change Environments, Locations, Seasons and Time of Day

Add Things to a Scene

Remove Things from a Scene

Change Objects in a Scene

Apply the Motion of a Video to an Image

Alter a Character’s Appearance

Recolor Elements of a Scene

Relight Shots

Green Screen Any Object, Person or Situation

-

Mike Wong – AtoMeow – Blue-noise image stippling in Processing

https://github.com/mwkm/atoMeow

https://www.shadertoy.com/view/7s3XzX

This demo is created for coders who are familiar with Processing, this awesome creative coding platform. You can quickly modify the code to work on video, or to stipple your own Processing drawings by turning them into a PImage and running the simulation. This demo code also serves as a reference implementation of my article Blue noise sampling using an N-body simulation-based method. If you are interested in 2.5D, you can modify the code to achieve what I discussed in this artist-friendly article.

Convert your video to a dotted noise.
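The N-body idea behind this kind of blue-noise sampling can be illustrated with a toy relaxation: start from uniformly random (white-noise) points and let every pair repel, so points drift toward an even, clump-free spacing. This is a minimal sketch under stated assumptions, not Mike Wong's method from the article: the 1/r force, the wrap-around (torus) domain, the step and clamp sizes, and the function name relax_points are all illustrative. For stippling, one would additionally make the spacing depend on image darkness, which this sketch leaves out.

```python
import math
import random


def relax_points(points, iterations=20, step=0.001, max_move=0.01, eps=1e-6):
    """Push 2D points in the unit square apart with pairwise 1/r repulsion.

    The square is treated as a torus (wrap-around) so points do not pile up
    against the edges. Returns a new list of (x, y) tuples.
    """
    pts = [[float(x), float(y)] for x, y in points]
    n = len(pts)
    for _ in range(iterations):
        forces = [[0.0, 0.0] for _ in range(n)]
        # O(n^2) all-pairs loop; a real N-body code would use a tree or a grid
        for i in range(n):
            for j in range(i + 1, n):
                dx = pts[i][0] - pts[j][0]
                dy = pts[i][1] - pts[j][1]
                dx -= round(dx)  # minimum-image offset on the torus
                dy -= round(dy)
                d2 = dx * dx + dy * dy + eps
                # Direction (dx, dy)/r with magnitude 1/r gives (dx, dy)/r^2
                fx, fy = dx / d2, dy / d2
                forces[i][0] += fx
                forces[i][1] += fy
                forces[j][0] -= fx
                forces[j][1] -= fy
        for p, (fx, fy) in zip(pts, forces):
            mx, my = step * fx, step * fy
            length = math.hypot(mx, my)
            if length > max_move:  # clamp big jumps from near-coincident pairs
                mx, my = mx * max_move / length, my * max_move / length
            p[0] = (p[0] + mx) % 1.0
            p[1] = (p[1] + my) % 1.0
    return [(x, y) for x, y in pts]


random.seed(0)
white = [(random.random(), random.random()) for _ in range(64)]
blue = relax_points(white)
```

The all-pairs loop keeps the sketch short; it is the part that a Barnes-Hut style tree would replace for large point counts.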

-

Aitor Echeveste – Free CG and Comp Projection Shot, Download the Assets & Follow the Workflow

What’s Included:

- Cleaned and extended base plates

- Full Maya and Nuke 3D projection layouts

- Bullet and environment CG renders with AOVs (RGB, normals, position, ID, etc.)

- Explosion FX in slow motion

- 3D scene geometry for projection

- Camera + lensing setup

- Light groups and passes for look development

FEATURED POSTS

-

Photography basics: Exposure Value vs Photographic Exposure vs Il/Luminance vs Pixel luminance measurements

Also see: https://www.pixelsham.com/2015/05/16/how-aperture-shutter-speed-and-iso-affect-your-photos/

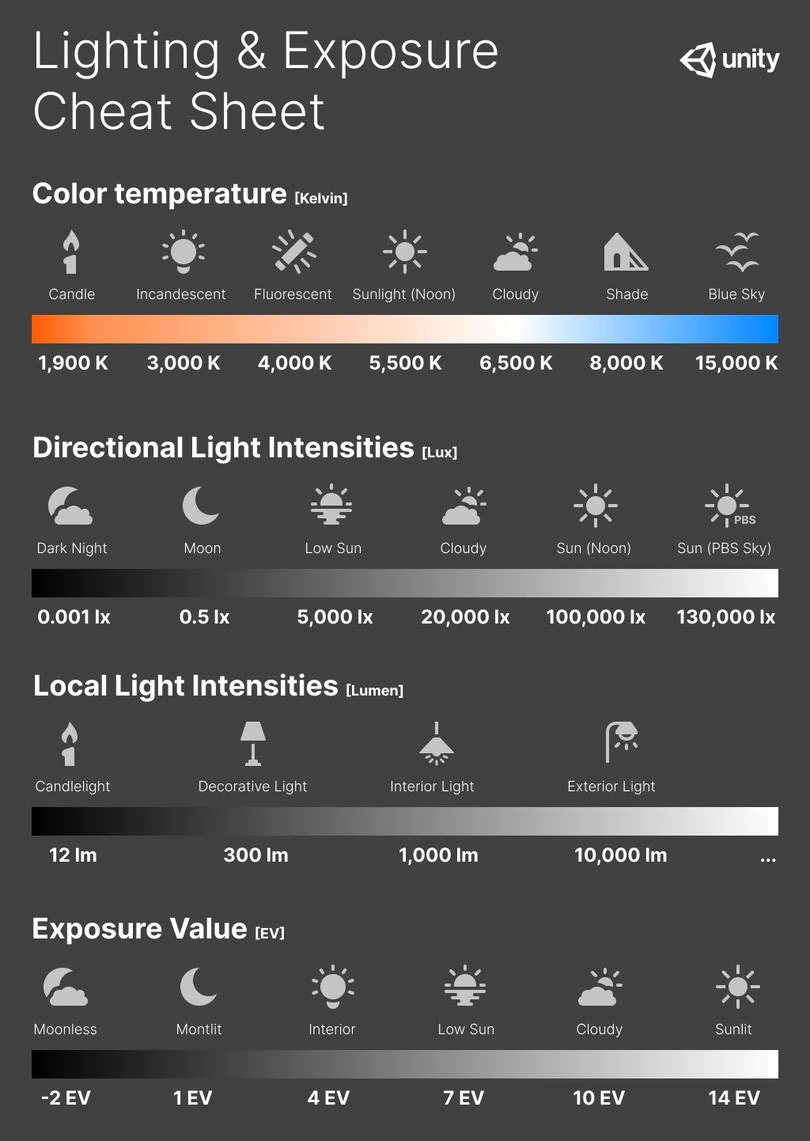

In photography, exposure value (EV) is a number that represents a combination of a camera’s shutter speed and f-number, such that all combinations that yield the same exposure have the same EV (for any fixed scene luminance).

The EV concept was developed in an attempt to simplify choosing among combinations of equivalent camera settings. Although all camera settings with the same EV nominally give the same exposure, they do not necessarily give the same picture. EV is also used to indicate an interval on the photographic exposure scale, with 1 EV corresponding to a standard power-of-2 exposure step, commonly referred to as a stop.

EV 0 corresponds to an exposure time of 1 s and a relative aperture of f/1.0. If the EV is known, it can be used to select combinations of exposure time and f-number. Note that EV is not the same as photographic exposure. Photographic exposure is defined as how much light hits the camera’s sensor; it depends on the camera settings, mainly aperture and shutter speed. Exposure value (EV) is a number that represents the exposure setting of the camera.

Thus, strictly, EV is not a measure of luminance (indirect or reflected exposure) or illuminance (incident exposure); rather, an EV corresponds to a luminance (or illuminance) for which a camera with a given ISO speed would use the indicated EV to obtain the nominally correct exposure. Nonetheless, it is common practice among photographic equipment manufacturers to express luminance in EV for ISO 100 speed, as when specifying metering range or autofocus sensitivity.
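The usual link between luminance and EV comes from the reflected-light meter equation EV = log2(L · S / K), where L is scene luminance in cd/m², S is the ISO speed and K is the meter calibration constant. The sketch below assumes K = 12.5, a common value (some manufacturers use about 14), so the numbers it produces are nominal rather than exact for any particular meter.

```python
import math

# Reflected-light meter calibration constant; 12.5 is a common choice (assumption)
K_REFLECTED = 12.5


def luminance_to_ev(luminance_cd_m2: float, iso: float = 100.0, k: float = K_REFLECTED) -> float:
    """EV that a meter calibrated with k would indicate for this luminance at this ISO."""
    return math.log2(luminance_cd_m2 * iso / k)


def ev_to_luminance(ev: float, iso: float = 100.0, k: float = K_REFLECTED) -> float:
    """Scene luminance (cd/m^2) that corresponds to an EV at this ISO."""
    return k * 2.0 ** ev / iso


print(ev_to_luminance(0.0))    # 0.125  (EV 0 at ISO 100 is 0.125 cd/m^2 with K = 12.5)
print(luminance_to_ev(0.125))  # 0.0
```

Each +1 EV doubles the corresponding luminance, which is why manufacturers can quote metering and autofocus ranges "in EV at ISO 100" as a stand-in for luminance.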

The exposure depends on two things: how much light gets through the lens to the camera’s sensor and for how long the sensor is exposed. The former is a function of the aperture value, while the latter is a function of the shutter speed. Exposure value is a number that represents this potential amount of light that could hit the sensor. It is important to understand that exposure value is a measure of how exposed the sensor is to light and not a measure of how much light actually hits the sensor. The exposure value is independent of how brightly lit the scene is. For example, a given pair of aperture value and shutter speed represents the same exposure value whether the camera is used on a very bright day or during a dark night.

Each exposure value number represents all the possible shutter and aperture settings that result in the same exposure. Although the exposure value is the same for different combinations of aperture values and shutter speeds, the resulting photo can be very different (the aperture controls the depth of field, while the shutter speed controls how much motion is captured).

EV 0.0 is defined as the exposure when setting the aperture to f-number 1.0 and the shutter speed to 1 second. All other exposure values are relative to that number. Exposure values are on a base two logarithmic scale. This means that every single step of EV – plus or minus 1 – represents the exposure (actual light that hits the sensor) being halved or doubled.

Formulas
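The definition above is usually written as EV = log2(N² / t), where N is the f-number and t the exposure time in seconds, so f/1.0 at 1 s gives EV 0, and halving t or multiplying N by √2 raises EV by 1. A minimal sketch follows; note that marked f-numbers such as f/5.6 are rounded from powers of √2, so settings that are "equivalent" on a camera only share an EV approximately.

```python
import math


def exposure_value(f_number: float, shutter_seconds: float) -> float:
    """EV = log2(N^2 / t): f/1.0 at 1 s gives EV 0."""
    return math.log2(f_number ** 2 / shutter_seconds)


def shutter_for_ev(ev: float, f_number: float) -> float:
    """Solve EV = log2(N^2 / t) for the exposure time t at a chosen aperture."""
    return f_number ** 2 / 2.0 ** ev


print(exposure_value(1.0, 1.0))   # 0.0
print(exposure_value(1.0, 0.5))   # 1.0  (half the time: one stop less exposure)
print(exposure_value(2.0, 1.0))   # 2.0  (f/2 passes a quarter of the light of f/1)
print(exposure_value(16, 1/125))  # ≈ 14.97
# Same EV, different picture: f/8 at 1/125 s vs f/4 at 1/500 s
print(math.isclose(exposure_value(8, 1/125), exposure_value(4, 1/500)))  # True
```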


-

What is physically correct lighting all about?

http://gamedev.stackexchange.com/questions/60638/what-is-physically-correct-lighting-all-about

2012-08 Nathan Reed wrote:

Physically-based shading means leaving behind phenomenological models, like the Phong shading model, which are simply built to “look good” subjectively without being based on physics in any real way, and moving to lighting and shading models that are derived from the laws of physics and/or from actual measurements of the real world, and rigorously obey physical constraints such as energy conservation.

For example, in many older rendering systems, shading models included separate controls for specular highlights from point lights and reflection of the environment via a cubemap. You could create a shader with the specular and the reflection set to wildly different values, even though those are both instances of the same physical process. In addition, you could set the specular to any arbitrary brightness, even if it would cause the surface to reflect more energy than it actually received.

In a physically-based system, both the point light specular and the environment reflection would be controlled by the same parameter, and the system would be set up to automatically adjust the brightness of both the specular and diffuse components to maintain overall energy conservation. Moreover you would want to set the specular brightness to a realistic value for the material you’re trying to simulate, based on measurements.
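A toy scalar version of that idea: one specular parameter, f0 (reflectance at normal incidence), drives both the light's highlight and the environment reflection through a Fresnel term, and the diffuse term only receives the energy that was not reflected specularly. Schlick's approximation is used here as one common choice; the answer does not prescribe it, and the function shade is a hypothetical simplification that omits the microfacet distribution and geometry terms a real BRDF would include.

```python
def fresnel_schlick(cos_theta: float, f0: float) -> float:
    """Schlick's approximation: reflectance goes from f0 at normal incidence to 1 at grazing angles."""
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


def shade(albedo: float, f0: float, cos_theta: float,
          light: float, env_reflection: float) -> float:
    """Toy scalar shading where the single parameter f0 controls both
    the light's specular highlight and the environment reflection."""
    ks = fresnel_schlick(cos_theta, f0)  # fraction of energy reflected specularly
    kd = 1.0 - ks                        # only the remainder is available for diffuse
    diffuse = kd * albedo * light
    specular = ks * (light + env_reflection)  # same ks for both specular sources
    return diffuse + specular


# With albedo <= 1 and no environment light, the surface never returns
# more energy than the light delivered: kd * albedo + ks <= kd + ks = 1
print(shade(albedo=0.8, f0=0.04, cos_theta=1.0, light=1.0, env_reflection=0.0))
```

Because ks and kd always sum to 1, raising the specular level automatically darkens the diffuse term, which is the kind of coupling the answer describes artists losing direct control over.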

Physically-based lighting or shading includes physically-based BRDFs, which are usually based on microfacet theory, and physically correct light transport, which is based on the rendering equation (although heavily approximated in the case of real-time games).

It also includes the necessary changes in the art process to make use of these features. Switching to a physically-based system can cause some upsets for artists. First of all it requires full HDR lighting with a realistic level of brightness for light sources, the sky, etc. and this can take some getting used to for the lighting artists. It also requires texture/material artists to do some things differently (particularly for specular), and they can be frustrated by the apparent loss of control (e.g. locking together the specular highlight and environment reflection as mentioned above; artists will complain about this). They will need some time and guidance to adapt to the physically-based system.

On the plus side, once artists have adapted and gained trust in the physically-based system, they usually end up liking it better, because there are fewer parameters overall (less work for them to tweak). Also, materials created in one lighting environment generally look fine in other lighting environments too. This is unlike more ad-hoc models, where a set of material parameters might look good during daytime, but it comes out ridiculously glowy at night, or something like that.

Here are some resources to look at for physically-based lighting in games:

SIGGRAPH 2013 Physically Based Shading Course, particularly the background talk by Naty Hoffman at the beginning. You can also check out the previous incarnations of this course for more resources.

Sébastien Lagarde, Adopting a physically-based shading model and Feeding a physically-based shading model

And of course, I would be remiss if I didn’t mention Physically-Based Rendering by Pharr and Humphreys, an amazing reference on this whole subject and well worth your time, although it focuses on offline rather than real-time rendering.