BREAKING NEWS

LATEST POSTS

-

Comfy-Org comfy-cli – A Command Line Tool for ComfyUI

https://github.com/Comfy-Org/comfy-cli

comfy-cli is a command line tool that helps users easily install and manage ComfyUI, a powerful open-source machine learning framework. With comfy-cli, you can quickly set up ComfyUI, install packages, and manage custom nodes, all from the convenience of your terminal.

    C:\<PATH_TO>\python.exe -m venv C:\comfyUI_env
    cd C:\comfyUI_env
    C:\comfyUI_env\Scripts\activate.bat
    C:\<PATH_TO>\python.exe -m pip install comfy-cli
    comfy --workspace=C:\comfyUI_env\ComfyUI install
    # then
    comfy launch
    # or
    comfy launch -- --cpu --listen 0.0.0.0

If you are trying to clone a different install, run pip freeze on it first, then install those requirements in the new environment.

    # from the original env
    python.exe -m pip freeze > M:\requirements.txt
    # under the new venv env
    pip install -r M:\requirements.txt

-
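After cloning, it can help to confirm that the new environment really matches the original. The following is a minimal sketch, assuming you have captured `pip freeze` output from both environments as text; the function names `parse_freeze` and `diff_environments` and the package versions in the sample data are hypothetical and only illustrate the idea.

```python
def parse_freeze(text):
    """Parse `pip freeze` output into {package_name: version}."""
    packages = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blanks, comments, and entries without an "==" pin
        # (for example editable or URL-based installs).
        if not line or line.startswith("#") or "==" not in line:
            continue
        name, version = line.split("==", 1)
        packages[name.strip().lower()] = version.strip()
    return packages


def diff_environments(original, clone):
    """Return packages missing from the clone and packages whose versions differ."""
    missing = sorted(set(original) - set(clone))
    mismatched = {
        name: (original[name], clone[name])
        for name in original
        if name in clone and original[name] != clone[name]
    }
    return missing, mismatched


# Illustrative sample data, not taken from a real install.
original = parse_freeze("torch==2.3.0\nnumpy==1.26.4\nPillow==10.3.0\n")
clone = parse_freeze("torch==2.3.0\nnumpy==2.0.0\n")

missing, mismatched = diff_environments(original, clone)
print(missing)      # ['pillow']
print(mismatched)   # {'numpy': ('1.26.4', '2.0.0')}
```

Package names are lower-cased before comparison because pip treats them case-insensitively; anything reported as missing or mismatched can then be reinstalled with the pinned version from the original requirements file.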

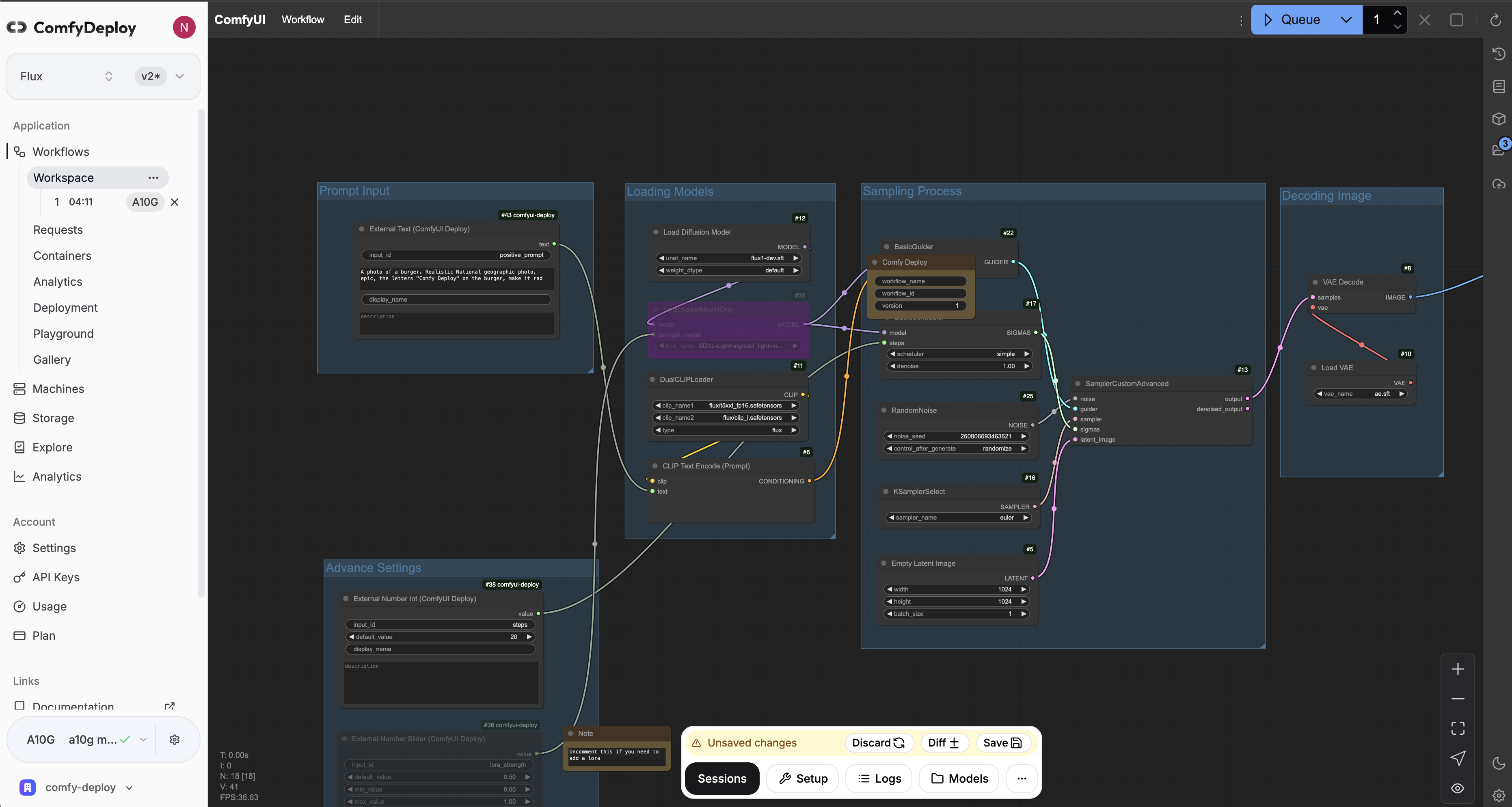

ComfyDeploy – A way for teams to use ComfyUI and power apps

https://www.comfydeploy.com/docs/v2/introduction

1 – Import your workflow

2 – Build a machine configuration to run your workflows on

3 – Download models into your private storage, to be used in your workflows and team.

4 – Run ComfyUI in the cloud to modify and test your workflows on cloud GPUs

5 – Expose workflow inputs with our custom nodes, for API and playground use

6 – Deploy APIs

7 – Let your team use your workflows in playground without using ComfyUI

-

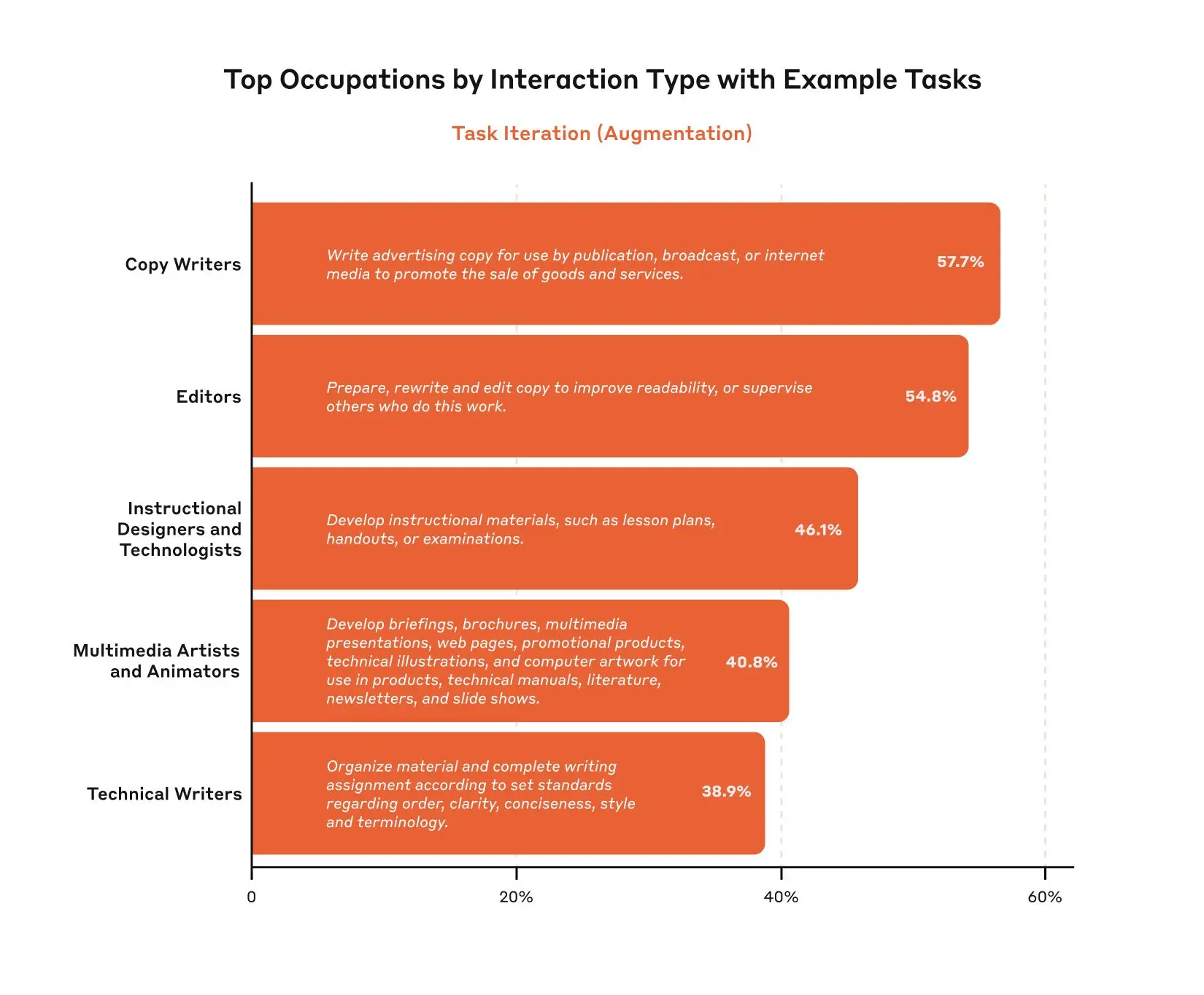

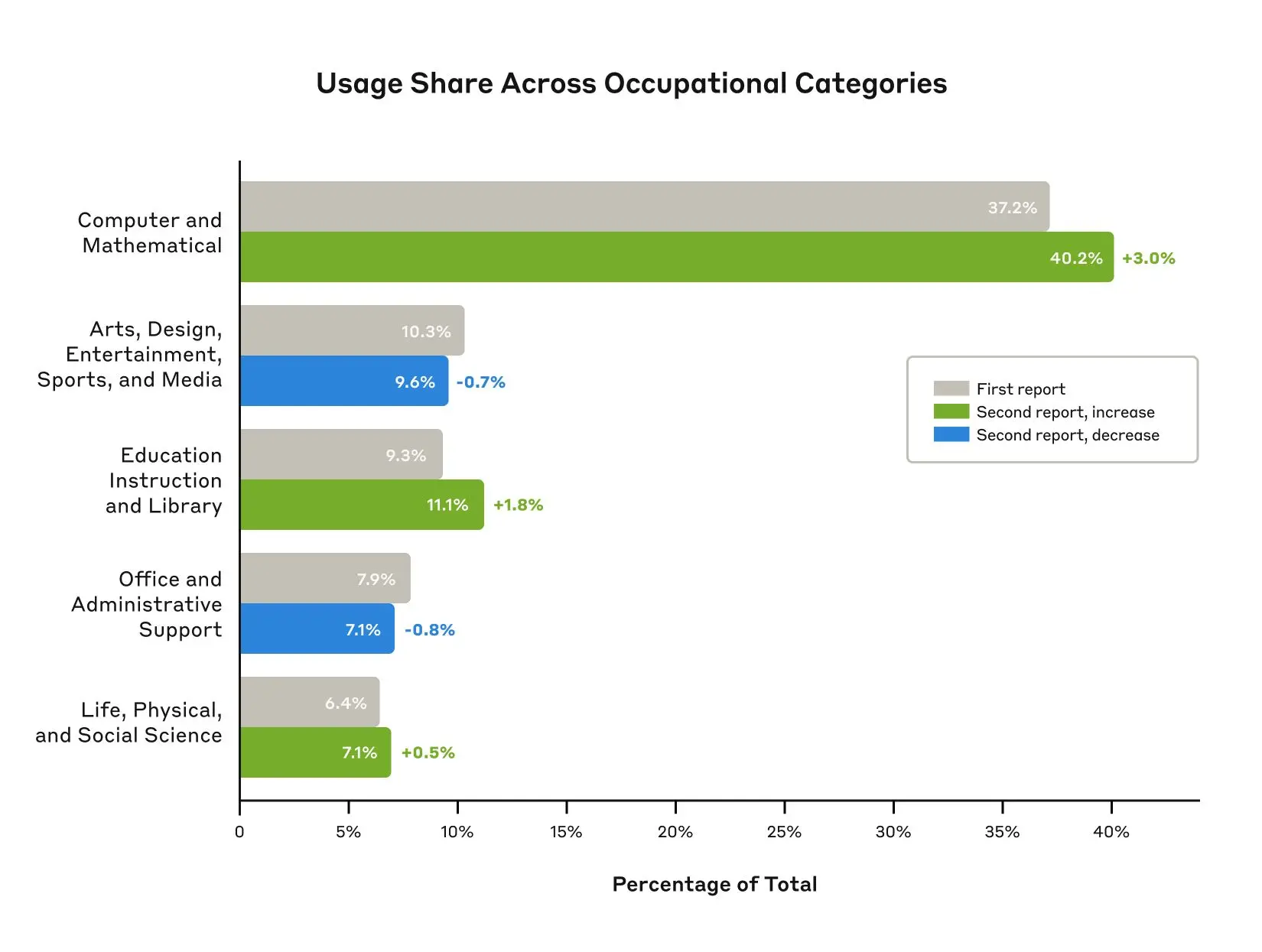

Anthropic Economic Index – Insights from Claude 3.7 Sonnet on AI future prediction

https://www.anthropic.com/news/anthropic-economic-index-insights-from-claude-sonnet-3-7

As models continue to advance, so too must our measurement of their economic impacts. In our second report, covering data since the launch of Claude 3.7 Sonnet, we find relatively modest increases in coding, education, and scientific use cases, and no change in the balance of augmentation and automation. We find that Claude’s new extended thinking mode is used with the highest frequency in technical domains and tasks, and identify patterns in automation / augmentation patterns across tasks and occupations. We release datasets for both of these analyses.

-

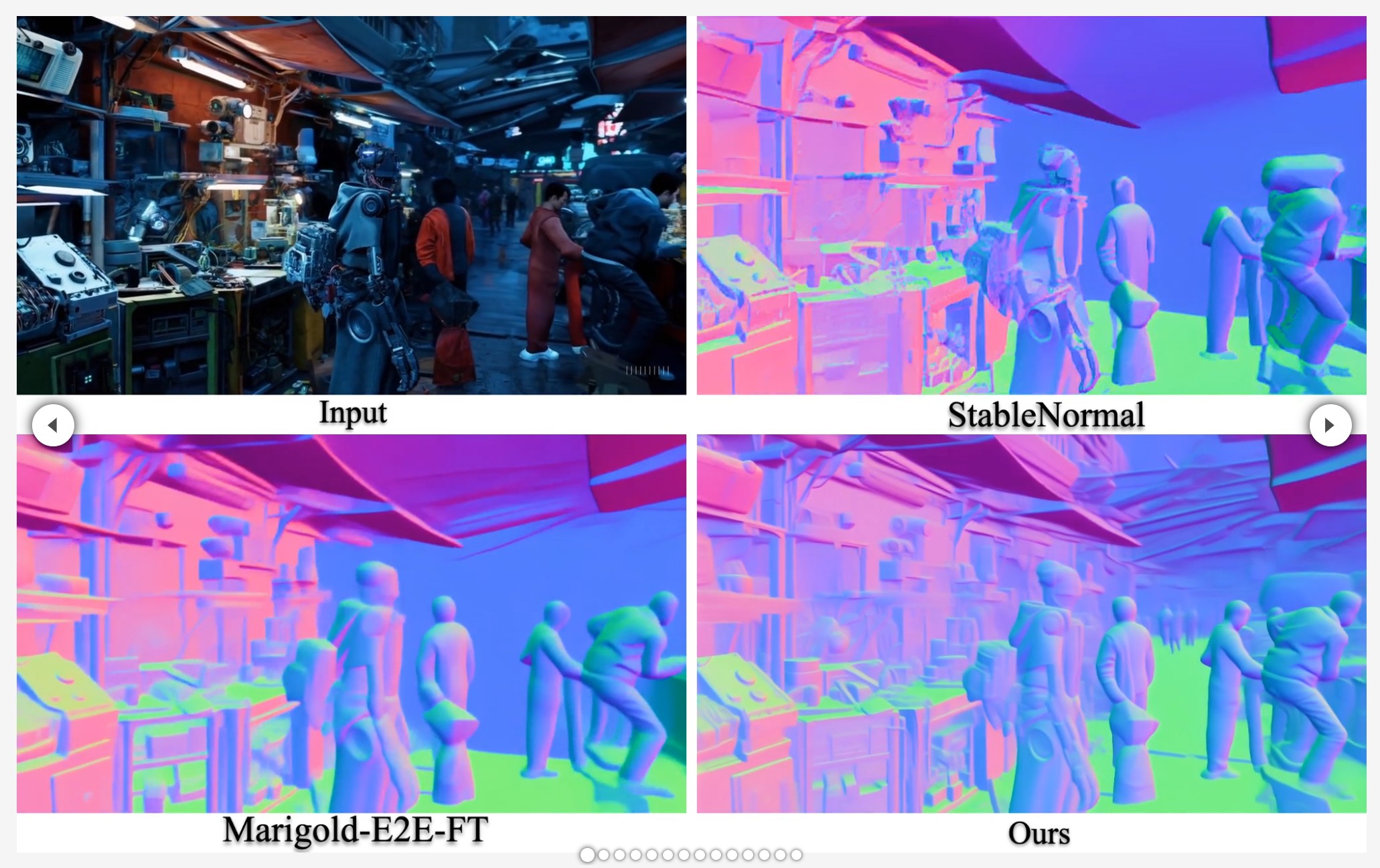

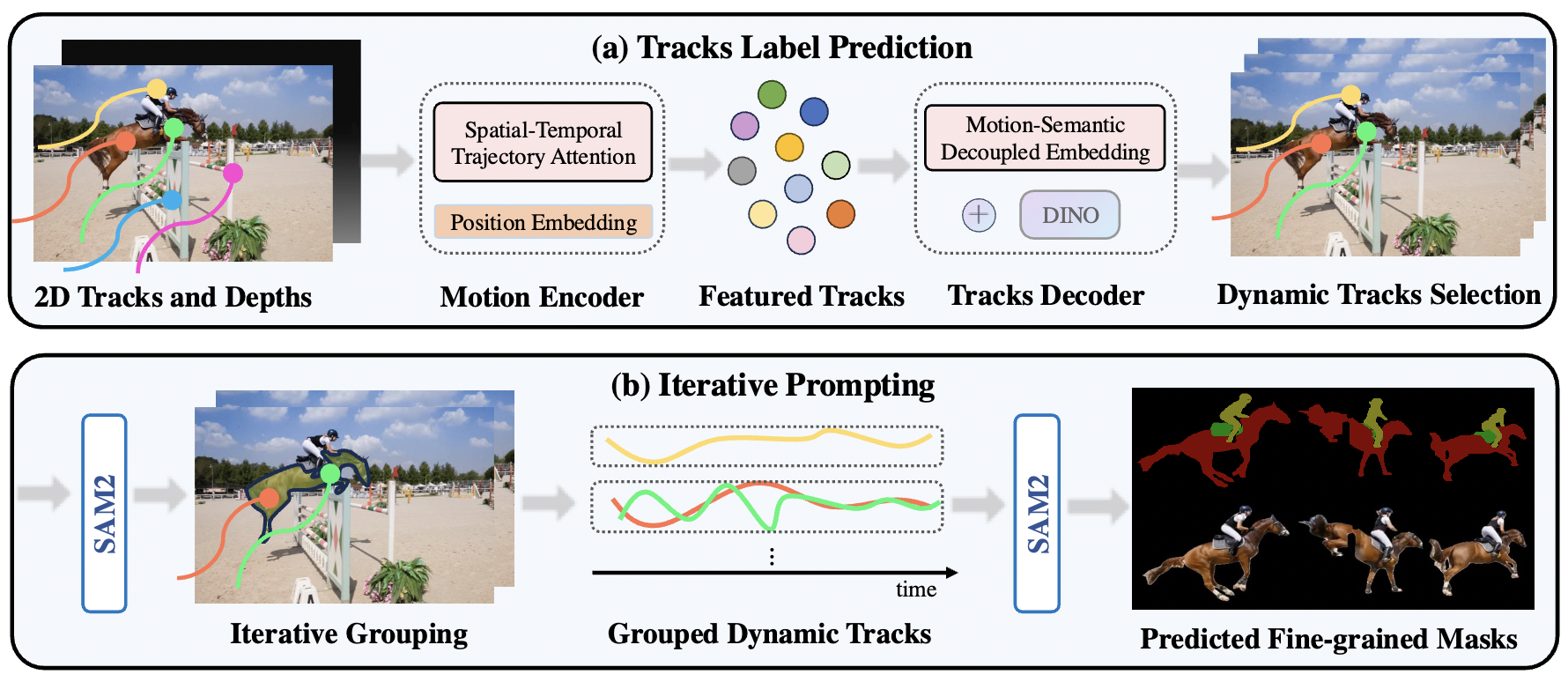

Segment Any Motion in Videos

https://github.com/nnanhuang/SegAnyMo

Overview of the pipeline. We take 2D tracks and depth maps generated by off-the-shelf models as input, which are then processed by a motion encoder to capture motion patterns, producing featured tracks. Next, we use a track decoder that integrates DINO features to decode the featured tracks by decoupling motion and semantic information, ultimately obtaining the dynamic trajectories (a). Finally, using SAM2, we group dynamic tracks belonging to the same object and generate fine-grained moving object masks (b).

-

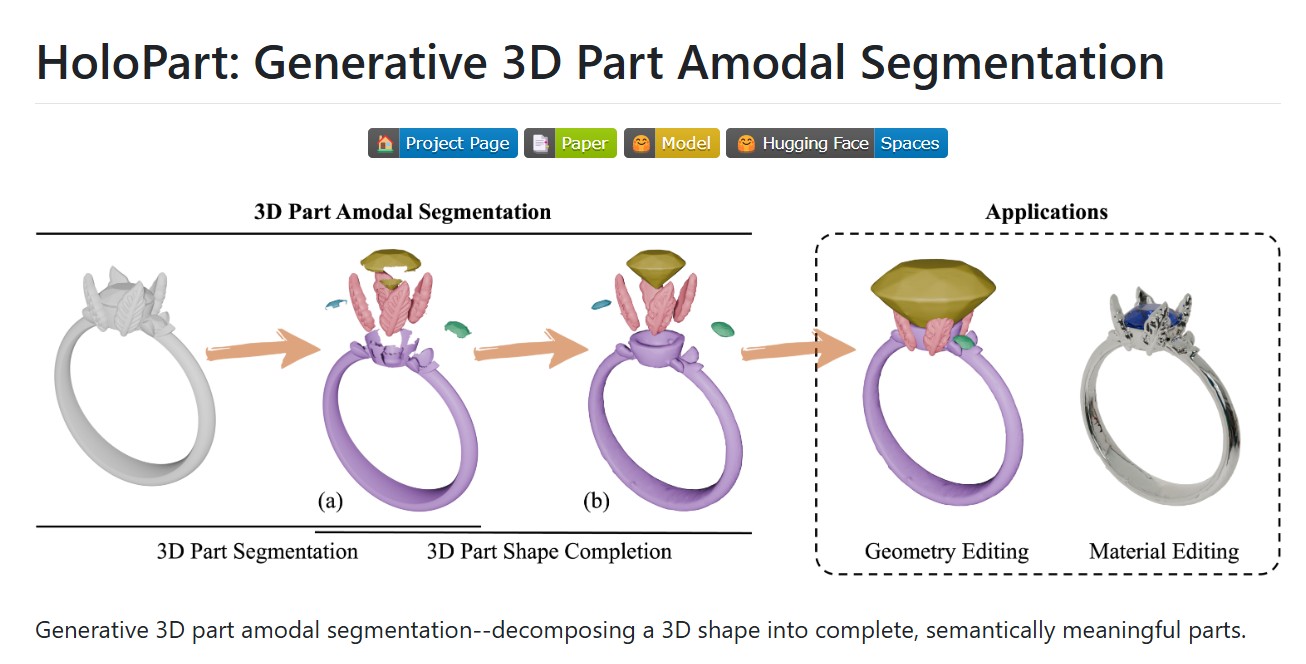

HoloPart – Generative 3D Part Amodal Segmentation

https://vast-ai-research.github.io/HoloPart

https://huggingface.co/VAST-AI/HoloPart

https://github.com/VAST-AI-Research/HoloPart

Applications:

– 3d printing segmentation

– texturing segmentation

– animation segmentation

– modeling segmentation

-

The Diary Of A CEO – A talk with Dr. Bessel Van Der Kolk, described as “perhaps the most influential psychiatrist of the 21st century”

– Why are traumatic memories not like normal memories?

– What it was like working in a mental asylum.

– Does childhood trauma impact us permanently?

– Can yoga reverse deep past trauma?

-

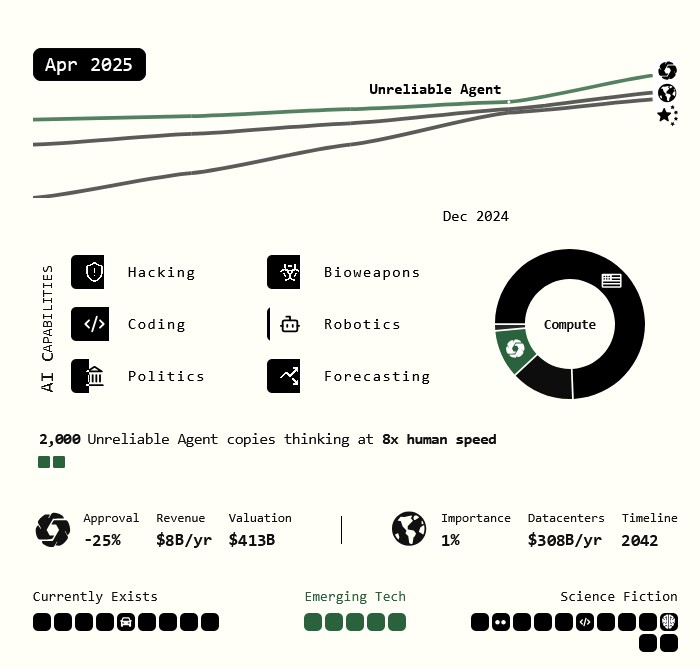

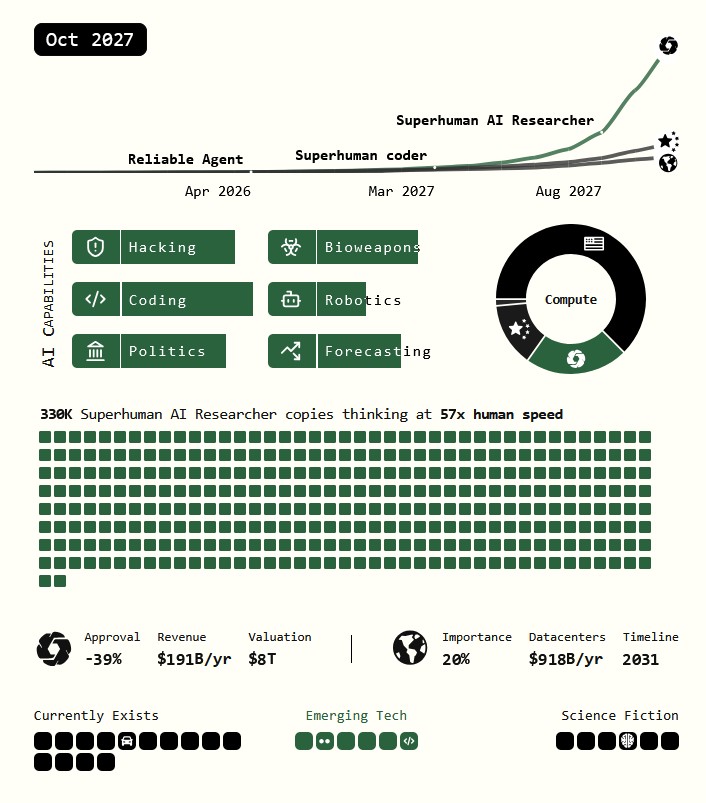

AI 2027 – Predicting the impact of superhuman AI over the next decade

We predict that the impact of superhuman AI over the next decade will be enormous, exceeding that of the Industrial Revolution.

We wrote a scenario that represents our best guess about what that might look like. It’s informed by trend extrapolations, wargames, expert feedback, experience at OpenAI, and previous forecasting successes.

-

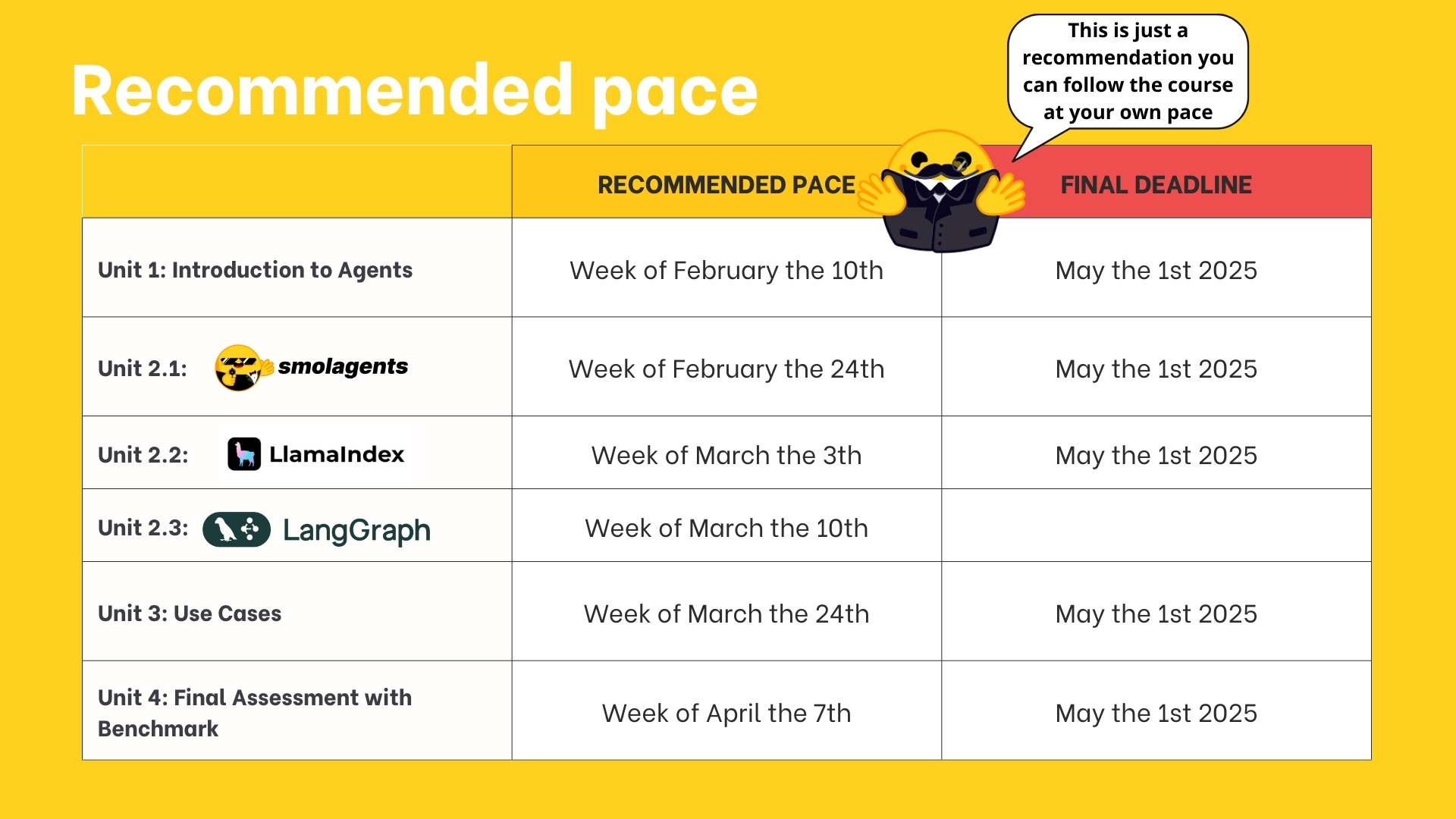

HuggingFace – AI Agents Course

https://huggingface.co/learn/agents-course/en/unit0/introduction

In this course, you will:

- 📖 Study AI Agents in theory, design, and practice.

- 🧑‍💻 Learn to use established AI Agent libraries such as smolagents, LlamaIndex, and LangGraph.

- 💾 Share your agents on the Hugging Face Hub and explore agents created by the community.

- 🏆 Participate in challenges where you will evaluate your agents against other students’.

- 🎓 Earn a certificate of completion by completing assignments.

-

The best music management software for PC, Android and iOS

https://audials.com/en/apps/manage-music?utm_source=chatgpt.com

https://umatechnology.org/the-best-free-music-management-tools-for-organizing-your-mp3s

- Audials Play

- MediaMonkey

- MusicBee

- AIMP

- Mp3tag

- Helium

- MusicBrainz Picard

- iTunes

- Magix MP3 Deluxe 19

- foobar2000

- Clementine

- Tuneup Media

- Organize Your Music

- Musicnizer

- Bliss

- Music Connect

- VLC Media Player

- TagScanner

- Greenify

- KeepVid Music

-

NVIDIA Adds Native Python Support to CUDA

https://thenewstack.io/nvidia-finally-adds-native-python-support-to-cuda

https://nvidia.github.io/cuda-python/latest

Check your CUDA version. It is the release number reported here:

    nvcc --version
    nvcc: NVIDIA (R) Cuda compiler driver
    Copyright (c) 2005-2024 NVIDIA Corporation
    Built on Wed_Apr_17_19:36:51_Pacific_Daylight_Time_2024
    Cuda compilation tools, release 12.5, V12.5.40
    Build cuda_12.5.r12.5/compiler.34177558_0

or from here:

    nvidia-smi
    Mon Jun 16 12:35:20 2025
    +-----------------------------------------------------------------------------------------+
    | NVIDIA-SMI 555.85                 Driver Version: 555.85         CUDA Version: 12.5     |
    |-----------------------------------------+------------------------+----------------------+
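If you need the version in a script, for instance to decide which CUDA Python package to install, the same text can be parsed. This is a minimal sketch that works on the console output shown above; the function names `toolkit_release` and `driver_cuda_version` are hypothetical, and the regular expressions assume the output keeps the "release X.Y" and "CUDA Version: X.Y" wording.

```python
import re

# Sample text copied from the nvcc and nvidia-smi output above.
SAMPLE_NVCC_OUTPUT = """nvcc: NVIDIA (R) Cuda compiler driver
Copyright (c) 2005-2024 NVIDIA Corporation
Built on Wed_Apr_17_19:36:51_Pacific_Daylight_Time_2024
Cuda compilation tools, release 12.5, V12.5.40
Build cuda_12.5.r12.5/compiler.34177558_0
"""

SAMPLE_SMI_LINE = "| NVIDIA-SMI 555.85 Driver Version: 555.85 CUDA Version: 12.5 |"


def _find_version(pattern, text):
    """Return (major, minor) for the first match of pattern, or None."""
    match = re.search(pattern, text)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def toolkit_release(nvcc_output):
    """CUDA toolkit release as reported by `nvcc --version`."""
    return _find_version(r"release (\d+)\.(\d+)", nvcc_output)


def driver_cuda_version(smi_output):
    """Highest CUDA version supported by the driver, per `nvidia-smi`."""
    return _find_version(r"CUDA Version:\s*(\d+)\.(\d+)", smi_output)


print(toolkit_release(SAMPLE_NVCC_OUTPUT))   # (12, 5)
print(driver_cuda_version(SAMPLE_SMI_LINE))  # (12, 5)
```

On a live machine the input text could come from `subprocess.run(["nvcc", "--version"], capture_output=True, text=True).stdout`. Note that the two numbers answer different questions: `nvcc` reports the installed toolkit, while `nvidia-smi` reports the newest CUDA version the driver supports, so they can legitimately differ.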

FEATURED POSTS

-

Scene Referred vs Display Referred color workflows

Display Referred is tied to the target hardware; as such, it bakes color requirements into every type of media output request.

Scene Referred instead uses a common, unified wide gamut and targets each audience through CDL and DI libraries.

This way, color information stays untouched and is only “transformed” as and when needed.

Sources:

– Victor Perez – Color Management Fundamentals & ACES Workflows in Nuke

– https://z-fx.nl/ColorspACES.pdf

– Wicus
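The difference can be sketched in a few lines of code. This is a simplified illustration, not a production color pipeline: it assumes single-channel scene-linear values, uses the standard sRGB and Rec.709 encoding curves as the only display transforms, and clips highlights instead of tone mapping them. The names `scene_pixels`, `DISPLAY_TRANSFORMS` and `render_for` are hypothetical.

```python
def clamp01(x):
    """Clip a value to the displayable 0..1 range (a stand-in for real tone mapping)."""
    return min(max(x, 0.0), 1.0)


def srgb_encode(linear):
    """sRGB encoding curve applied to a scene-linear value."""
    c = clamp01(linear)
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1 / 2.4) - 0.055


def rec709_encode(linear):
    """Rec.709 encoding curve applied to a scene-linear value."""
    c = clamp01(linear)
    if c < 0.018:
        return 4.5 * c
    return 1.099 * c ** 0.45 - 0.099


# Scene-referred master: linear light, including a highlight above 1.0.
# It is never modified; every output is derived from it.
scene_pixels = [0.0, 0.18, 1.0, 4.0]

DISPLAY_TRANSFORMS = {"sRGB": srgb_encode, "Rec.709": rec709_encode}


def render_for(display, pixels):
    """Apply the display transform only at output time."""
    encode = DISPLAY_TRANSFORMS[display]
    return [round(encode(p), 4) for p in pixels]


for name in DISPLAY_TRANSFORMS:
    print(name, render_for(name, scene_pixels))
```

Mid-gray (0.18) comes out at roughly 0.46 for sRGB and roughly 0.41 for Rec.709, while `scene_pixels` itself keeps the 4.0 highlight intact. A display-referred workflow would instead store one of those encoded lists as the master, so the clipped highlight could not be recovered for a different target.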

-

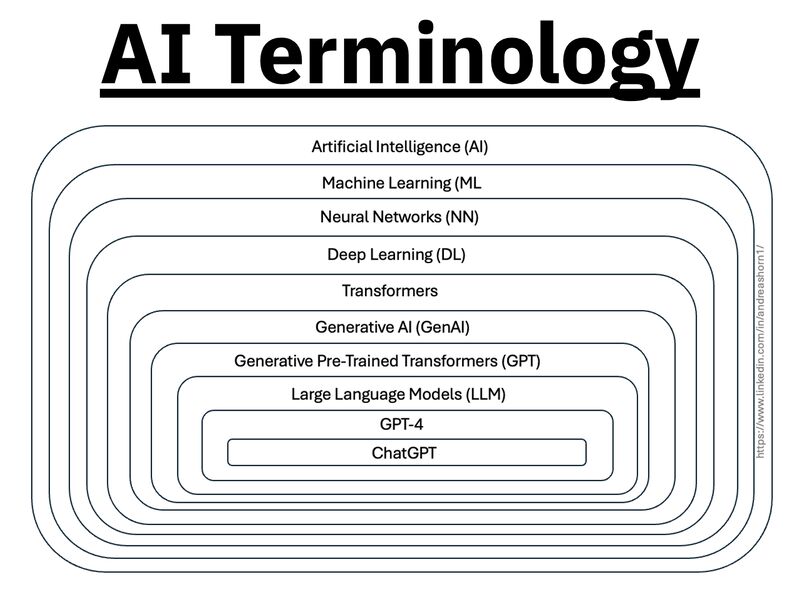

Types of AI Explained in a few Minutes – AI Glossary

1️⃣ 𝗔𝗿𝘁𝗶𝗳𝗶𝗰𝗶𝗮𝗹 𝗜𝗻𝘁𝗲𝗹𝗹𝗶𝗴𝗲𝗻𝗰𝗲 (𝗔𝗜) – The broadest category, covering automation, reasoning, and decision-making. Early AI was rule-based, but today, it’s mainly data-driven.

2️⃣ 𝗠𝗮𝗰𝗵𝗶𝗻𝗲 𝗟𝗲𝗮𝗿𝗻𝗶𝗻𝗴 (𝗠𝗟) – AI that learns patterns from data without explicit programming. Includes decision trees, clustering, and regression models.

3️⃣ 𝗡𝗲𝘂𝗿𝗮𝗹 𝗡𝗲𝘁𝘄𝗼𝗿𝗸𝘀 (𝗡𝗡) – A subset of ML, inspired by the human brain, designed for pattern recognition and feature extraction.

4️⃣ 𝗗𝗲𝗲𝗽 𝗟𝗲𝗮𝗿𝗻𝗶𝗻𝗴 (𝗗𝗟) – Multi-layered neural networks that drive many modern AI advancements, enabling image recognition, speech processing, and more.

5️⃣ 𝗧𝗿𝗮𝗻𝘀𝗳𝗼𝗿𝗺𝗲𝗿𝘀 – A revolutionary deep learning architecture introduced by Google in 2017 that allows models to understand and generate language efficiently.

6️⃣ 𝗚𝗲𝗻𝗲𝗿𝗮𝘁𝗶𝘃𝗲 𝗔𝗜 (𝗚𝗲𝗻𝗔𝗜) – AI that doesn’t just analyze data—it creates. From text and images to music and code, this layer powers today’s most advanced AI models.

7️⃣ 𝗚𝗲𝗻𝗲𝗿𝗮𝘁𝗶𝘃𝗲 𝗣𝗿𝗲-𝗧𝗿𝗮𝗶𝗻𝗲𝗱 𝗧𝗿𝗮𝗻𝘀𝗳𝗼𝗿𝗺𝗲𝗿𝘀 (𝗚𝗣𝗧) – A specific subset of Generative AI that uses transformers for text generation.

8️⃣ 𝗟𝗮𝗿𝗴𝗲 𝗟𝗮𝗻𝗴𝘂𝗮𝗴𝗲 𝗠𝗼𝗱𝗲𝗹𝘀 (𝗟𝗟𝗠) – Massive AI models trained on extensive datasets to understand and generate human-like language.

9️⃣ 𝗚𝗣𝗧-4 – One of the most advanced LLMs, built on transformer architecture, trained on vast datasets to generate human-like responses.

🔟 𝗖𝗵𝗮𝘁𝗚𝗣𝗧 – A specific application of GPT models such as GPT-4, optimized for conversational AI and interactive use.