LATEST POSTS

-

Unpremult and Premult in compositing cycles

Steve Wright

https://www.linkedin.com/pulse/why-oh-premultiply-steve-wright/

James Pratt

https://jamesprattvfx.wordpress.com/2018/11/08/premult-unpremult/

The simple definition of premult is to multiply the alpha and the RGB of the input together.

Unpremult, as the name suggests, does the opposite of the premult node: instead of multiplying the RGB values by the alpha, it divides them by the alpha.

Alan Martinez

“Unpremult” and “premult” are terms used in digital compositing that are relevant for both those working with computer-generated graphics (CG) and those working with live-action plates.

“Unpremult” is short for “unpremultiply” and refers to the action of undoing the multiplication of a pixel by its alpha value. It is commonly used to avoid halos or unwanted edges when combining images, because it ensures that edits to a layer are applied independently of the opacity of its edges.

“Premult” is short for “premultiply” and is the opposite process of “unpremult.” In this case, each pixel in an image is multiplied by its alpha value.

In simple terms, premult crops the RGB by its alpha, while unpremult does the opposite. It’s important to perform color corrections on CG renders in a sort of sandwich approach. First, unpremultiply (divide) the image so that the RGB channels extend fully to the edges. Then, apply the necessary color corrections. Finally, premultiply the image again to avoid artifacts on the edges.
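The unpremult, grade, premult sandwich can be sketched per pixel as follows. This is a minimal illustration in plain Python, not any compositing package's implementation: the function names, the `lift` correction, and the choice to leave alpha-zero pixels untouched on unpremult are all assumptions made for the example.

```python
from typing import Callable, Tuple

RGBA = Tuple[float, float, float, float]
RGB = Tuple[float, float, float]


def premult(pixel: RGBA) -> RGBA:
    """Multiply each RGB channel by alpha."""
    r, g, b, a = pixel
    return (r * a, g * a, b * a, a)


def unpremult(pixel: RGBA) -> RGBA:
    """Divide each RGB channel by alpha (assumption: alpha-zero pixels are left as-is)."""
    r, g, b, a = pixel
    if a == 0.0:
        return pixel
    return (r / a, g / a, b / a, a)


def grade_premultiplied(pixel: RGBA, grade: Callable[[RGB], RGB]) -> RGBA:
    """The sandwich: unpremult, apply the color correction, premult again."""
    r, g, b, a = unpremult(pixel)
    graded_r, graded_g, graded_b = grade((r, g, b))
    return premult((graded_r, graded_g, graded_b, a))


def lift(rgb: RGB) -> RGB:
    """Hypothetical color correction: add a constant offset to every channel."""
    r, g, b = rgb
    return (r + 0.1, g + 0.1, b + 0.1)


# A 50%-covered edge pixel of a mid-grey CG element, stored premultiplied.
edge = premult((0.5, 0.5, 0.5, 0.5))

# Correct: the offset is applied to the full-strength color, then scaled by alpha.
sandwiched = grade_premultiplied(edge, lift)

# Naive: the offset is applied directly to the premultiplied RGB.
naive = (*lift(edge[:3]), edge[3])

print("sandwiched:", sandwiched)
print("naive:     ", naive)
```

In this sketch the naive result comes out brighter on the edge pixel than the sandwiched one, because the offset was not scaled by the pixel's coverage. That extra brightness on semi-transparent pixels is the kind of edge artifact the sandwich is meant to avoid.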

Typically, most 3D rendered images are premultiplied. As a rule of thumb, if the background is black or even just very dark, the image may be premultiplied. Additionally, most of the time, the 3D render has antialiasing in the edges.

Aaron Strasbourg

https://www.aaronstrasbourgvfx.com/post/2017/06/23/002-unpremult-and-premult

-

KeenTools 2023.2: Introducing GeoTracker for Blender!

https://keentools.io/products/geotracker-for-blender

Changes in Blender add-ons

- GeoTracker open beta

- Up to 3 times faster texture generation

- Minor bug fixes and improvements in FaceBuilder

Changes in GeoTracker for After Effects

- Video analysis up to 2 times faster

- Tracking performance improved by up to 20%

- Accelerated surface masking

- Fixed primitive scaling issue

- Minor bug fixes and improvements

Changes in Nuke package

- Video analysis up to 2 times faster

- Tracking performance improved by up to 20%

- TextureBuilder up to 3 times faster

- Accelerated surface masking

- New build for Nuke 14 running on newer Linux systems (RHEL9)

- Minor bug fixes and improvements

-

Marvel VFX Artists Vote to Unionize

https://variety.com/2023/artisans/news/marvel-vfx-artists-unionize-1235690272/

BREAKING: Visual Effects (VFX) crews at Marvel Studios filed for a unionization election with the National Labor Relations Board on Monday. The move signals a major shift in an industry that has largely remained non-union since VFX was pioneered during production of the first Star Wars films in the 1970s. A supermajority of Marvel’s more than 50-worker crew had signed authorization cards indicating they wished to be represented by the International Alliance of Theatrical Stage Employees (IATSE).

This marks the first time VFX professionals have joined together to demand the same rights and protections as their unionized colleagues in the film industry. Mark Patch, VFX Organizer for IATSE, highlighted the significance of this moment: “For almost half a century, workers in the visual effects industry have been denied the same protections and benefits their coworkers and crewmates have relied upon since the beginning of the Hollywood film industry. This is a historic first step for VFX workers coming together with a collective voice demanding respect for the work we do.”

While positions like Production Designers/Art Directors, Camera Operators, Sound, Editors, Hair and Makeup Artists, Costumes / Wardrobe, Script Supervisors, Grips, Lighting, Props, and Paint, among others, have historically been represented by IATSE in motion picture and television, workers in VFX classifications historically have not.

Bella Huffman, VFX Coordinator, highlighted the challenging nature of the industry: “Turnaround times don’t apply to us, protected hours don’t apply to us, and pay equity doesn’t apply to us. Visual Effects must become a sustainable and safe department for everyone who’s suffered far too long and for all newcomers who need to know they won’t be exploited.”

The Marvel VFX workers’ filing for a union election comes at a pivotal moment in the film and television industry, amidst ongoing strikes by both the Actors and Writers guilds as both seek fair contracts with the studios and the Alliance of Motion Picture and Television Producers (AMPTP).

IATSE International President Matthew D. Loeb put it in plain terms: “We are witnessing an unprecedented wave of solidarity that’s breaking down old barriers in the industry and proving we’re all in this fight together. That doesn’t happen in a vacuum. Entertainment workers everywhere are sticking up for each other’s rights, that’s what our movement is all about. I congratulate these workers on taking this important step and using their collective voice.”

-

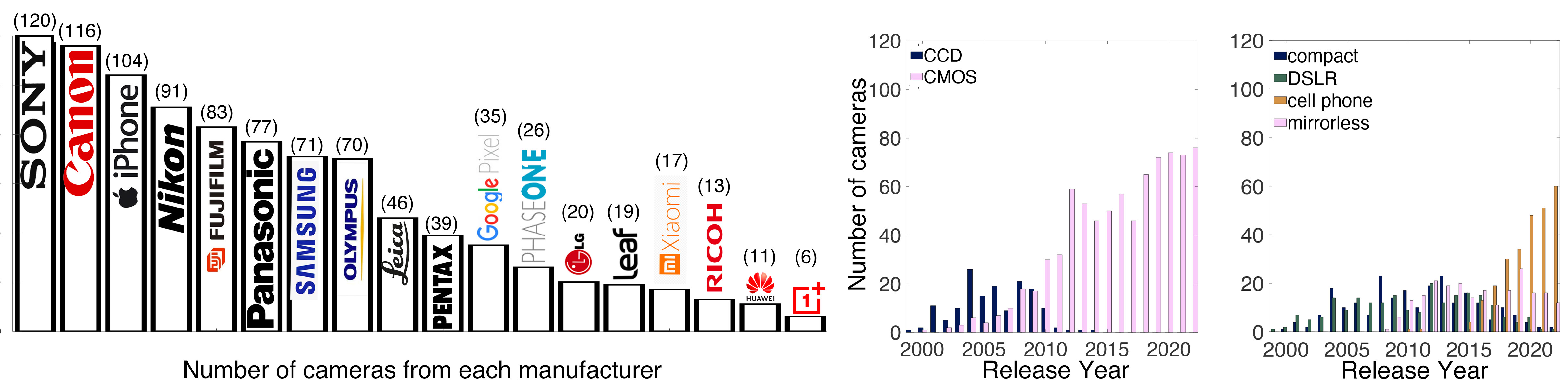

Photography Basics: Spectral Sensitivity Estimation Without a Camera

https://color-lab-eilat.github.io/Spectral-sensitivity-estimation-web/

A number of problems in computer vision and related fields would be mitigated if camera spectral sensitivities were known. As consumer cameras are not designed for high-precision visual tasks, manufacturers do not disclose spectral sensitivities. Their estimation requires a costly optical setup, which triggered researchers to come up with numerous indirect methods that aim to lower cost and complexity by using color targets. However, the use of color targets gives rise to new complications that make the estimation more difficult, and consequently, there currently exists no simple, low-cost, robust go-to method for spectral sensitivity estimation that non-specialized research labs can adopt. Furthermore, even if not limited by hardware or cost, researchers frequently work with imagery from multiple cameras that they do not have in their possession.

To provide a practical solution to this problem, we propose a framework for spectral sensitivity estimation that not only does not require any hardware (including a color target), but also does not require physical access to the camera itself. Similar to other work, we formulate an optimization problem that minimizes a two-term objective function: a camera-specific term from a system of equations, and a universal term that bounds the solution space.

Different than other work, we utilize publicly available high-quality calibration data to construct both terms. We use the colorimetric mapping matrices provided by the Adobe DNG Converter to formulate the camera-specific system of equations, and constrain the solutions using an autoencoder trained on a database of ground-truth curves. On average, we achieve reconstruction errors as low as those that can arise due to manufacturing imperfections between two copies of the same camera. We provide predicted sensitivities for more than 1,000 cameras that the Adobe DNG Converter currently supports, and discuss which tasks can become trivial when camera responses are available.

-

Denoisers available in Arnold

https://help.autodesk.com/view/ARNOL/ENU/?guid=arnold_user_guide_ac_denoising_html

AOV denoising: While all denoisers work on arbitrary AOVs, not all denoisers guarantee that the denoised AOVs composite together to match the denoised beauty. The AOV column indicates whether a denoiser has robust AOV denoising and can produce a result where denoised_AOV_1 + denoised_AOV_2 + … + denoised_AOV_N = denoised_Beauty.
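The property described above, that the denoised AOVs add up to the denoised beauty, can be checked with a simple per-pixel comparison. The sketch below is an illustration only and does not use the Arnold API: images are represented as hypothetical nested lists of single-channel values, and the AOV names, sample values, and tolerance are assumptions.

```python
from typing import Dict, List

# Hypothetical stand-in for an image: rows of single-channel pixel values.
Image = List[List[float]]


def sum_aovs(aovs: Dict[str, Image]) -> Image:
    """Add all AOV images together pixel by pixel."""
    layers = list(aovs.values())
    height, width = len(layers[0]), len(layers[0][0])
    return [
        [sum(layer[y][x] for layer in layers) for x in range(width)]
        for y in range(height)
    ]


def max_abs_difference(a: Image, b: Image) -> float:
    """Largest per-pixel absolute difference between two same-sized images."""
    return max(
        abs(pa - pb)
        for row_a, row_b in zip(a, b)
        for pa, pb in zip(row_a, row_b)
    )


def aovs_match_beauty(aovs: Dict[str, Image], beauty: Image, tolerance: float = 1e-4) -> bool:
    """True if the summed AOVs reproduce the beauty within the given tolerance."""
    return max_abs_difference(sum_aovs(aovs), beauty) <= tolerance


# Made-up 1x2 images standing in for denoised AOVs and denoised beauty passes.
denoised_aovs = {
    "diffuse": [[0.2, 0.4]],
    "specular": [[0.1, 0.05]],
}
consistent_beauty = [[0.3, 0.45]]
inconsistent_beauty = [[0.3, 0.5]]

print(aovs_match_beauty(denoised_aovs, consistent_beauty))
print(aovs_match_beauty(denoised_aovs, inconsistent_beauty))
```

A tolerance is used rather than exact equality because floating-point sums rarely match bit for bit. A real check would read the rendered EXR layers and compare every channel.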

OptiX™ Denoiser imager

This imager is available as a post-processing effect. The imager also exposes additional controls for clamping and blending the result. It is based on Nvidia AI technology and is integrated into Arnold for use with IPR and look dev. The OptiX™ denoiser is meant to be used during IPR (so that you get a very quickly denoised image as you’re moving the camera and making other adjustments).

OIDN Denoiser imager

The OIDN denoiser (based on Intel’s Open Image Denoise technology) is available as a post-processing effect. It is integrated into Arnold for use with IPR as an imager (so that you get a very quickly denoised image as you’re moving the camera and making other adjustments).

Arnold Denoiser (Noice)

The Arnold Denoiser (Noice) can be run from a dedicated UI, exposed in the Denoiser, or as an imager. You will need to render images out first via the Arnold EXR driver with variance AOVs enabled. It is also available as a stand-alone program (noice.exe).

This imager is available as a post-processing effect. You can automatically denoise images every time you render a scene, edit the denoising settings and see the resulting image directly in the render view. It favors quality over speed and is, therefore, more suitable for high-quality final frame denoising and animation sequences.

Note: imager_denoiser_noice does not support temporal denoising (required for denoising an animation).

-

Virtual Production volumes study

Color Fidelity in LED Volumes

https://theasc.com/articles/color-fidelity-in-led-volumes

Virtual Production Glossary

https://vpglossary.com/

What is Virtual Production – In depth analysis

https://www.leadingledtech.com/what-is-a-led-virtual-production-studio-in-depth-technical-analysis/

A comparison of LED panels for use in Virtual Production: Findings and recommendations

https://eprints.bournemouth.ac.uk/36826/1/LED_Comparison_White_Paper%281%29.pdf

FEATURED POSTS

-

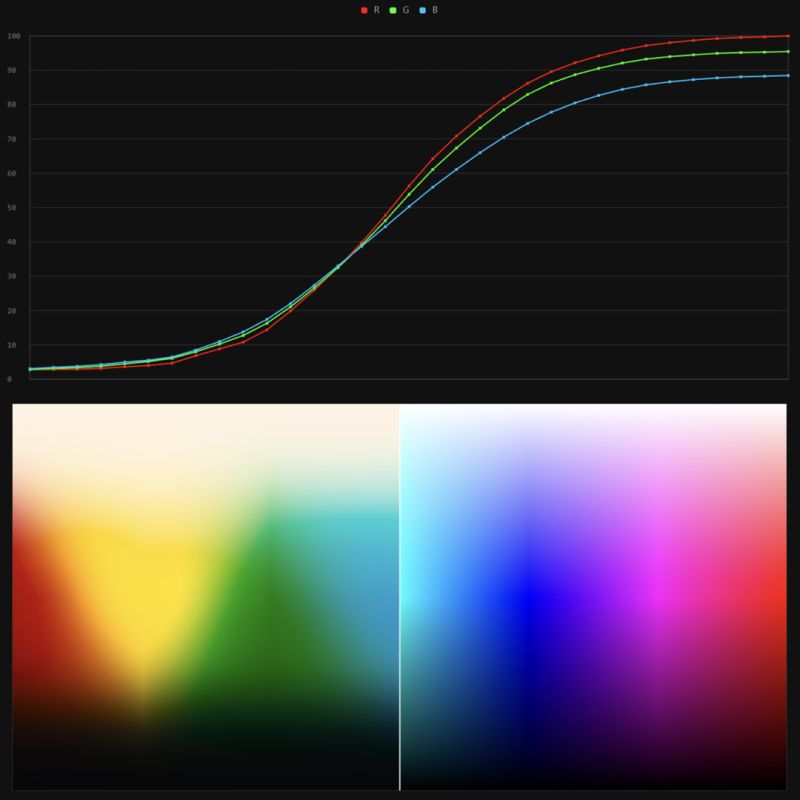

Stefan Ringelschwandtner – LUT Inspector tool

The LUT Inspector lets you load any .cube LUT right in your browser, see the RGB curves, and use a split view on the Granger Test Image to compare the original and LUT-applied versions in real time. It is well suited to spotting hue shifts, saturation changes, and contrast tweaks.
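For readers curious what reading a .cube file and deriving RGB curves can look like, here is a minimal sketch in plain Python. It is not how the LUT Inspector is implemented. It assumes a 3D .cube LUT with the usual layout (a `LUT_3D_SIZE` line followed by one "R G B" triple per line, red index changing fastest), skips other keywords such as `TITLE` and `DOMAIN_MIN`, and samples only the neutral (grey) axis, which is one plausible way to plot per-channel curves. The function names are hypothetical.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def parse_cube_3d(text: str) -> Tuple[int, List[RGB]]:
    """Return (size, entries) for a 3D .cube LUT; entries keep file order."""
    size = 0
    entries: List[RGB] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments
        parts = line.split()
        if parts[0] == "LUT_3D_SIZE":
            size = int(parts[1])
        elif parts[0][0].isalpha():
            continue  # other keywords (TITLE, DOMAIN_MIN, ...) are skipped in this sketch
        else:
            entries.append((float(parts[0]), float(parts[1]), float(parts[2])))
    if size < 2 or len(entries) != size ** 3:
        raise ValueError("not a complete 3D .cube LUT")
    return size, entries


def neutral_axis_curves(size: int, entries: List[RGB]) -> List[Tuple[float, float, float, float]]:
    """Sample the LUT where r = g = b: one (input, R out, G out, B out) row per grid point."""
    rows = []
    for i in range(size):
        # Red changes fastest, then green, then blue.
        r, g, b = entries[i + i * size + i * size * size]
        rows.append((i / (size - 1), r, g, b))
    return rows


# A made-up 2x2x2 identity LUT used only to exercise the parser.
identity_cube = """TITLE "identity"
LUT_3D_SIZE 2
0 0 0
1 0 0
0 1 0
1 1 0
0 0 1
1 0 1
0 1 1
1 1 1
"""

size, entries = parse_cube_3d(identity_cube)
for row in neutral_axis_curves(size, entries):
    print(row)
```

For an identity LUT each output channel equals the input along the grey axis. A LUT that shifts contrast or tints neutrals would bend or separate the three curves.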

https://mononodes.com/lut-inspector/