BREAKING NEWS

LATEST POSTS

-

Crypto Mining Attack via ComfyUI/Ultralytics in 2024

https://github.com/ultralytics/ultralytics/issues/18037

zopieux on Dec 5, 2024 : Ultralytics was attacked (or did it on purpose, waiting for a post mortem there), 8.3.41 contains nefarious code downloading and running a crypto miner hosted as a GitHub blob.
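As a rough sketch of how a local environment could be checked against the release named in the comment above, the snippet below compares the installed `ultralytics` version with a flagged set. Only 8.3.41 comes from the post; the names `FLAGGED_VERSIONS`, `is_flagged` and `installed_version` are hypothetical, and the set is an assumption that should be extended from the project's own post mortem rather than treated as complete.

```python
from importlib import metadata
from typing import Optional

# Only 8.3.41 is named in the report above. Assumption: this set is NOT
# complete and should be extended from the project's own advisory.
FLAGGED_VERSIONS = {"8.3.41"}


def is_flagged(version: str) -> bool:
    """Return True if the given version string is in the flagged set."""
    return version.strip() in FLAGGED_VERSIONS


def installed_version(package: str = "ultralytics") -> Optional[str]:
    """Return the installed version of a package, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version()
    if found is None:
        print("ultralytics is not installed")
    elif is_flagged(found):
        print(f"ultralytics {found} is flagged: uninstall and review the host")
    else:
        print(f"ultralytics {found} is not in the flagged set")
```

A version that is not in the set is not proof of a clean machine; the check only answers whether the specific release from the report is installed.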

-

Walt Disney Animation Abandons Longform Streaming Content

https://www.hollywoodreporter.com/business/business-news/tiana-disney-series-shelved-1236153297

A spokesperson confirmed there will be some layoffs in its Vancouver studio as a result of this shift in business strategy. In addition to the Tiana series, the studio is also scrapping an unannounced feature-length project that was set to go straight to Disney+.

Insiders say that Walt Disney Animation remains committed to releasing one theatrical film per year in addition to other shorts and special projects.

-

Andreas Horn – The 9 algorithms

The illustration below highlights the algorithms most frequently used in our everyday activities. They play a key role in everything we do, from online shopping recommendations and navigation apps to social media, email spam filters and even smart home devices.

🔹 𝗦𝗼𝗿𝘁𝗶𝗻𝗴 𝗔𝗹𝗴𝗼𝗿𝗶𝘁𝗵𝗺

– Organize data for efficiency.

➜ Example: Sorting email threads or search results.

🔹 𝗗𝗶𝗷𝗸𝘀𝘁𝗿𝗮’𝘀 𝗔𝗹𝗴𝗼𝗿𝗶𝘁𝗵𝗺

– Finds the shortest path in networks.

➜ Example: Google Maps driving routes.

🔹 𝗧𝗿𝗮𝗻𝘀𝗳𝗼𝗿𝗺𝗲𝗿𝘀

– AI models that understand context and meaning.

➜ Example: ChatGPT, Claude and other LLMs.

🔹 𝗟𝗶𝗻𝗸 𝗔𝗻𝗮𝗹𝘆𝘀𝗶𝘀

– Ranks pages and builds connections.

➜ Example: Google PageRank, LinkedIn recommendations.

🔹 𝗥𝗦𝗔 𝗔𝗹𝗴𝗼𝗿𝗶𝘁𝗵𝗺

– Encrypts and secures data communication.

➜ Example: WhatsApp encryption or online banking.

🔹 𝗜𝗻𝘁𝗲𝗴𝗲𝗿 𝗙𝗮𝗰𝘁𝗼𝗿𝗶𝘇𝗮𝘁𝗶𝗼𝗻

– Secures cryptographic systems.

➜ Example: Protecting sensitive data in blockchain.

🔹 𝗖𝗼𝗻𝘃𝗼𝗹𝘂𝘁𝗶𝗼𝗻𝗮𝗹 𝗡𝗲𝘂𝗿𝗮𝗹 𝗡𝗲𝘁𝘄𝗼𝗿𝗸𝘀 (𝗖𝗡𝗡𝘀)

– Recognizes patterns in images and videos.

➜ Example: Facial recognition, object detection in self-driving cars.

🔹 𝗛𝘂𝗳𝗳𝗺𝗮𝗻 𝗖𝗼𝗱𝗶𝗻𝗴

– Compresses data efficiently.

➜ Example: JPEG and MP3 file compression.

🔹 𝗦𝗲𝗰𝘂𝗿𝗲 𝗛𝗮𝘀𝗵 𝗔𝗹𝗴𝗼𝗿𝗶𝘁𝗵𝗺 (𝗦𝗛𝗔)

– Ensures data integrity.

➜ Example: Password hashing, digital signatures.
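To make one entry from the list above concrete, here is a minimal sketch of Dijkstra's algorithm using Python's standard `heapq` module. The `roads` graph, its place names and its travel times are hypothetical illustration data, and the function assumes all edge weights are non-negative; it is not a description of how any navigation app is actually implemented.

```python
import heapq
from typing import Dict, List, Tuple

Graph = Dict[str, List[Tuple[str, float]]]


def dijkstra(graph: Graph, source: str) -> Dict[str, float]:
    """Return the shortest distance from source to every reachable node.

    Assumes all edge weights are non-negative.
    """
    dist = {source: 0.0}
    queue = [(0.0, source)]
    while queue:
        d, node = heapq.heappop(queue)
        if d > dist.get(node, float("inf")):
            continue  # stale entry: a shorter path was already found
        for neighbour, weight in graph.get(node, []):
            candidate = d + weight
            if candidate < dist.get(neighbour, float("inf")):
                dist[neighbour] = candidate
                heapq.heappush(queue, (candidate, neighbour))
    return dist


# Hypothetical road network: travel times in minutes between four places.
roads: Graph = {
    "home": [("cafe", 4), ("office", 10)],
    "cafe": [("office", 3), ("gym", 8)],
    "office": [("gym", 2)],
    "gym": [],
}

print(dijkstra(roads, "home"))
```

In this toy network the route to the office via the cafe (4 + 3 = 7 minutes) beats the direct 10-minute road, which is the kind of decision a routing service makes at much larger scale.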

-

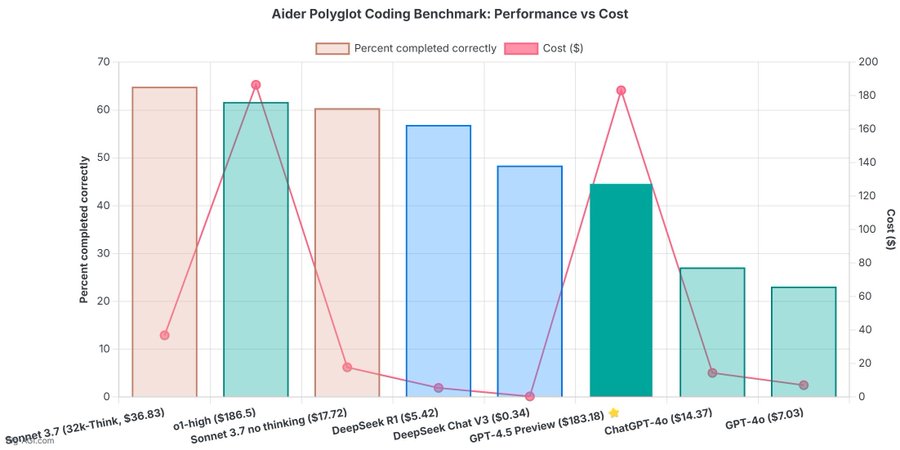

OpenAI 4.5 model arrives to mixed reviews

The verdict is in: OpenAI’s newest and most capable traditional AI model, GPT-4.5, is big, expensive, and slow, providing marginally better performance than GPT-4o at 30x the cost for input and 15x the cost for output. The new model seems to prove that longstanding rumors of diminishing returns in training unsupervised-learning LLMs were correct and that the so-called “scaling laws” cited by many for years have possibly met their natural end.

-

Walter Murch – “In the Blink of an Eye” – A perspective on cut editing

https://www.amazon.ca/Blink-Eye-Revised-2nd/dp/1879505622

Celebrated film editor Walter Murch’s vivid, multifaceted, thought-provoking essay on film editing. Starting with the most basic editing question — Why do cuts work? — Murch takes the reader on a wonderful ride through the aesthetics and practical concerns of cutting film. Along the way, he offers unique insights on such subjects as continuity and discontinuity in editing, dreaming, and reality; criteria for a good cut; the blink of the eye as an emotional cue; digital editing; and much more. In this second edition, Murch revises his popular first edition’s lengthy meditation on digital editing in light of technological changes. Francis Ford Coppola says about this book: “Nothing is as fascinating as spending hours listening to Walter’s theories of life, cinema and the countless tidbits of wisdom that he leaves behind like Hansel and Gretel’s trail of breadcrumbs…….”

-

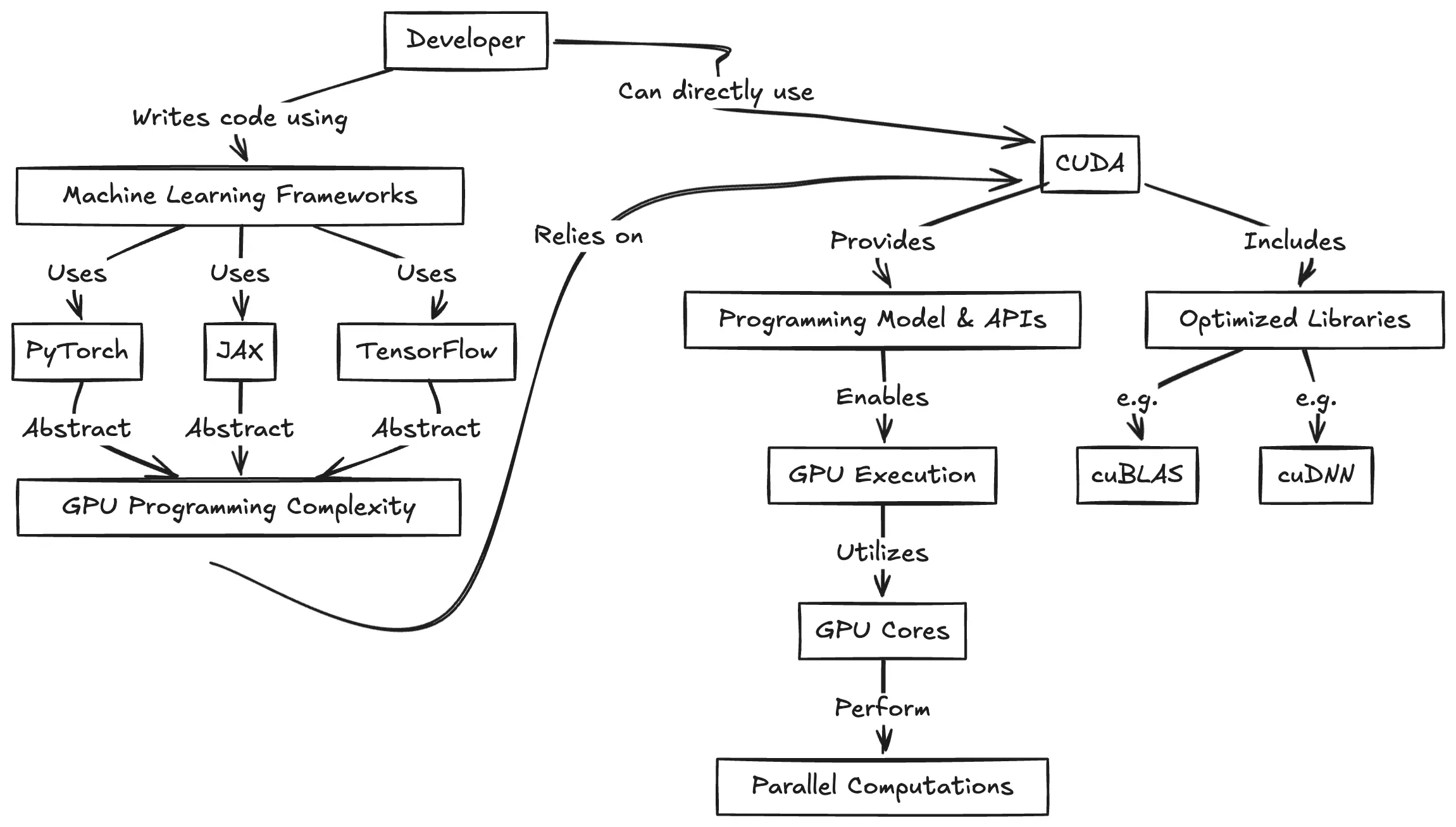

CUDA Programming for Python Developers

https://www.pyspur.dev/blog/introduction_cuda_programming

Check your CUDA version; it is the release version shown here:

    >>> nvcc --version
    nvcc: NVIDIA (R) Cuda compiler driver
    Copyright (c) 2005-2024 NVIDIA Corporation
    Built on Wed_Apr_17_19:36:51_Pacific_Daylight_Time_2024
    Cuda compilation tools, release 12.5, V12.5.40
    Build cuda_12.5.r12.5/compiler.34177558_0

or from here:

    >>> nvidia-smi
    Mon Jun 16 12:35:20 2025
    +-----------------------------------------------------------------------------------------+
    | NVIDIA-SMI 555.85                 Driver Version: 555.85         CUDA Version: 12.5     |
    |-----------------------------------------+------------------------+----------------------+
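For scripted setups, the release number can be pulled out of the `nvcc --version` text quoted above with a small regular expression. This is a sketch only: the helper name `cuda_release` is hypothetical, and the sample string is copied from the output shown in this post rather than read from a live system.

```python
import re
from typing import Optional


def cuda_release(nvcc_output: str) -> Optional[str]:
    """Extract the 'release X.Y' version from `nvcc --version` text."""
    match = re.search(r"release\s+(\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None


# Sample text copied from the nvcc output quoted above.
sample = (
    "nvcc: NVIDIA (R) Cuda compiler driver\n"
    "Cuda compilation tools, release 12.5, V12.5.40\n"
)
print(cuda_release(sample))  # 12.5
```

On a machine with the toolkit installed, the same function could be fed the text returned by `subprocess.run(["nvcc", "--version"], capture_output=True, text=True).stdout`.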

-

PixVerse – Prompt, lipsync and extended video generation

https://app.pixverse.ai/onboard

PixVerse now has 3 main features:

– text to video ➡️ How To Generate Videos With Text Prompts

– image to video ➡️ How To Animate Your Images And Bring Them To Life

– upscale ➡️ How to Upscale Your Video

Enhanced Capabilities

– Improved Prompt Understanding: Achieve more accurate prompt interpretation and stunning video dynamics.

– Supports Various Video Ratios: Choose from 16:9, 9:16, 3:4, 4:3, and 1:1 ratios.

– Upgraded Styles: Style functionality returns with options like Anime, Realistic, Clay, and 3D. It supports both text-to-video and image-to-video stylization.

New Features

– Lipsync: The new Lipsync feature enables users to add text or upload audio, and PixVerse will automatically sync the characters’ lip movements in the generated video based on the text or audio.

– Effect: Offers 8 creative effects, including Zombie Transformation, Wizard Hat, Monster Invasion, and other Halloween-themed effects, enabling one-click creativity.

– Extend: Extend the generated video by an additional 5-8 seconds, with control over the content of the extended segment.

-

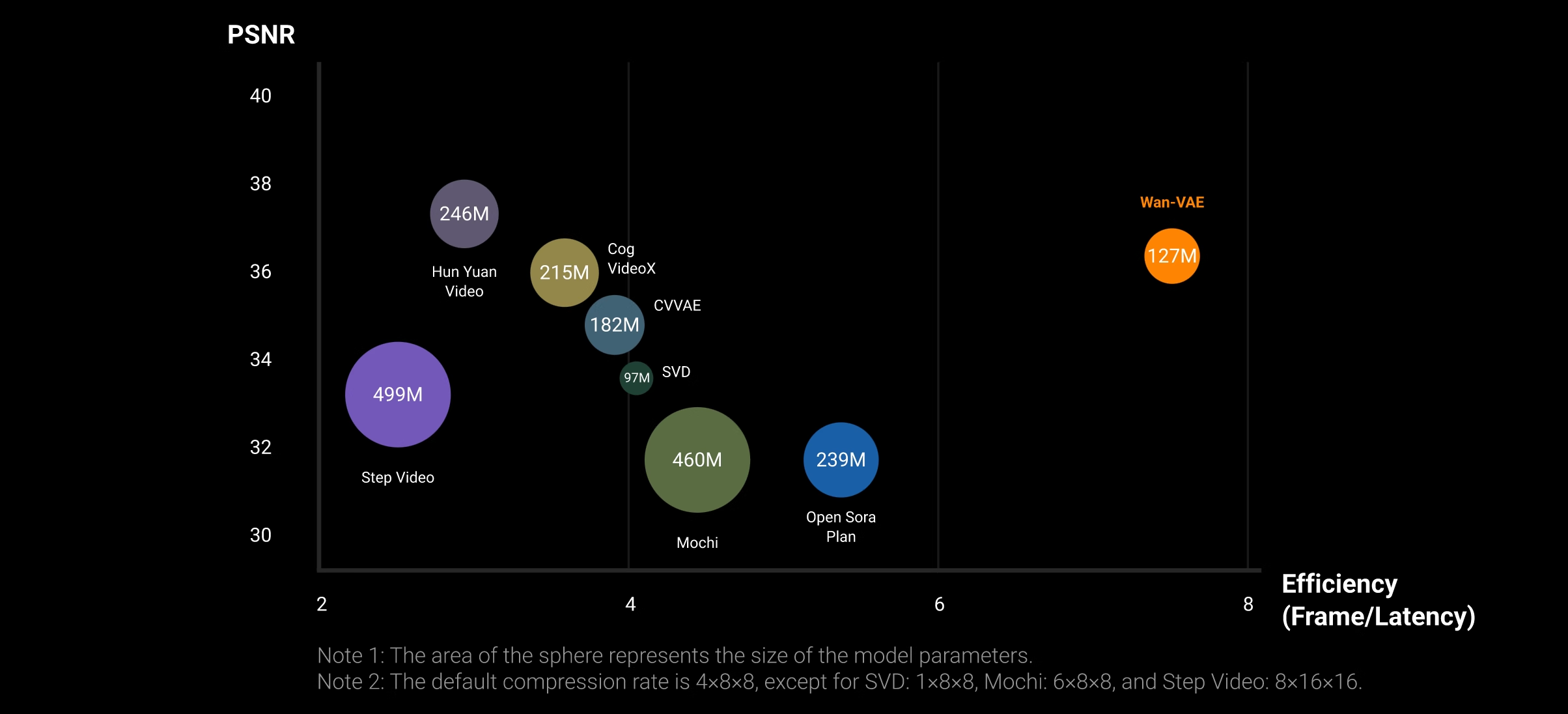

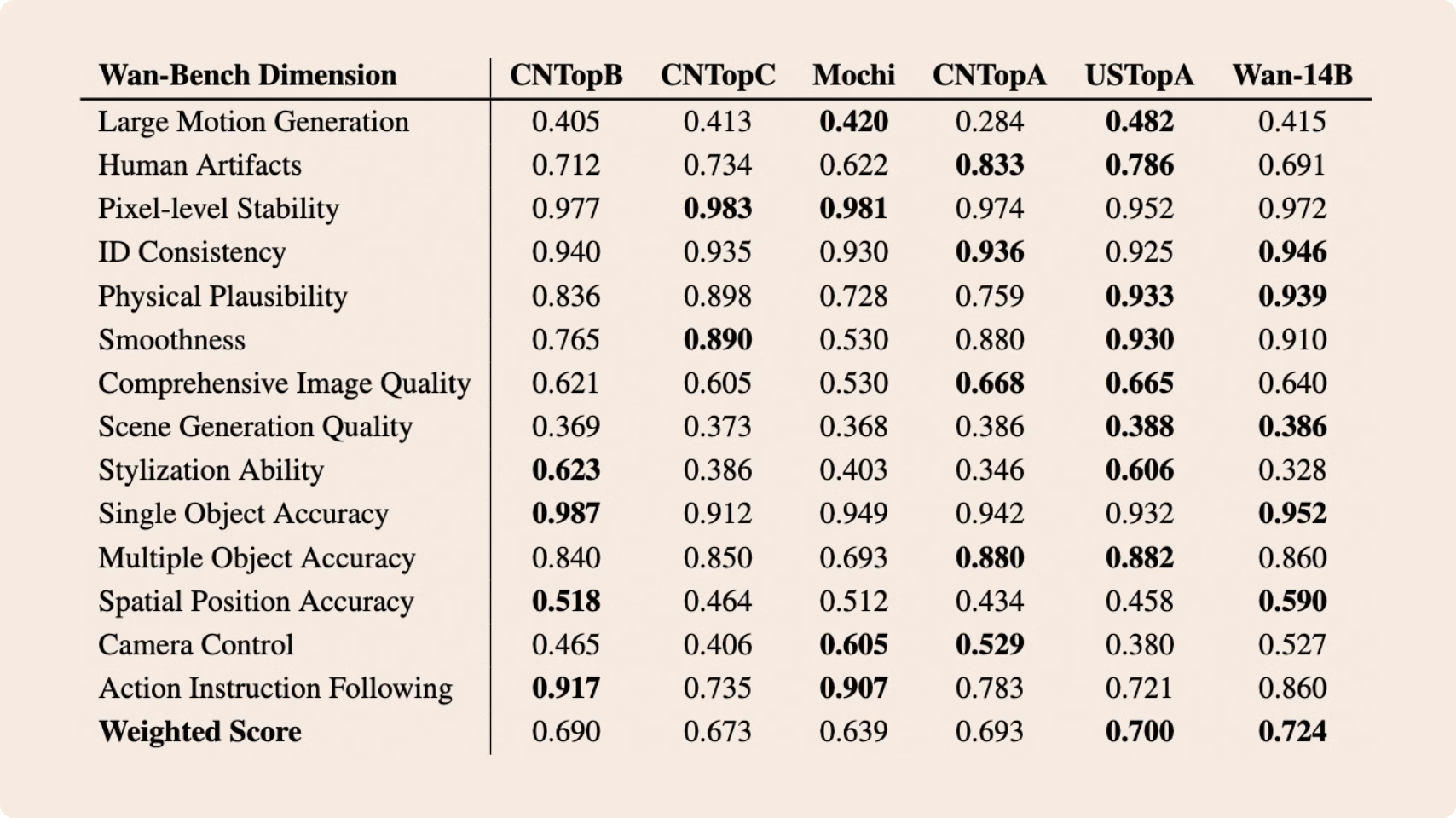

Alibaba Group Tongyi Lab WanxAI Wan2.1 – open source model

👍 SOTA Performance: Wan2.1 consistently outperforms existing open-source models and state-of-the-art commercial solutions across multiple benchmarks.

🚀 Supports Consumer-grade GPUs: The T2V-1.3B model requires only 8.19 GB VRAM, making it compatible with almost all consumer-grade GPUs. It can generate a 5-second 480P video on an RTX 4090 in about 4 minutes (without optimization techniques like quantization). Its performance is even comparable to some closed-source models.

🎉 Multiple tasks: Wan2.1 excels in Text-to-Video, Image-to-Video, Video Editing, Text-to-Image, and Video-to-Audio, advancing the field of video generation.

🔮 Visual Text Generation: Wan2.1 is the first video model capable of generating both Chinese and English text, featuring robust text generation that enhances its practical applications.

💪 Powerful Video VAE: Wan-VAE delivers exceptional efficiency and performance, encoding and decoding 1080P videos of any length while preserving temporal information, making it an ideal foundation for video and image generation.

https://huggingface.co/Comfy-Org/Wan_2.1_ComfyUI_repackaged/tree/main/split_files

https://huggingface.co/Comfy-Org/Wan_2.1_ComfyUI_repackaged/tree/main/example%20workflows_Wan2.1

https://huggingface.co/Wan-AI/Wan2.1-T2V-14B

https://huggingface.co/Kijai/WanVideo_comfy/tree/main

-

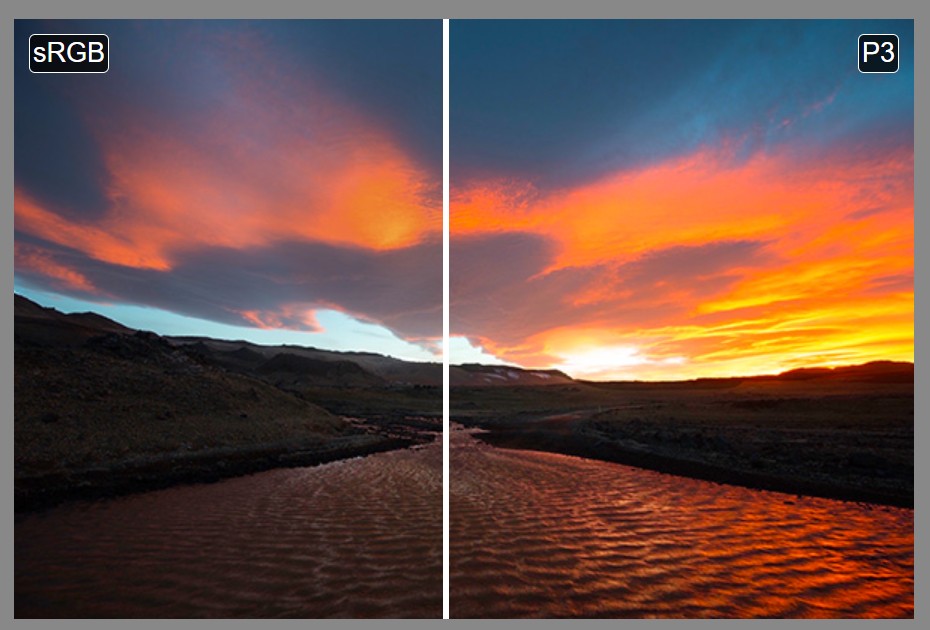

VES Cinematic Color – Motion-Picture Color Management

This paper presents an introduction to the color pipelines behind modern feature-film visual-effects and animation.

Authored by Jeremy Selan, and reviewed by the members of the VES Technology Committee including Rob Bredow, Dan Candela, Nick Cannon, Paul Debevec, Ray Feeney, Andy Hendrickson, Gautham Krishnamurti, Sam Richards, Jordan Soles, and Sebastian Sylwan.

FEATURED POSTS

-

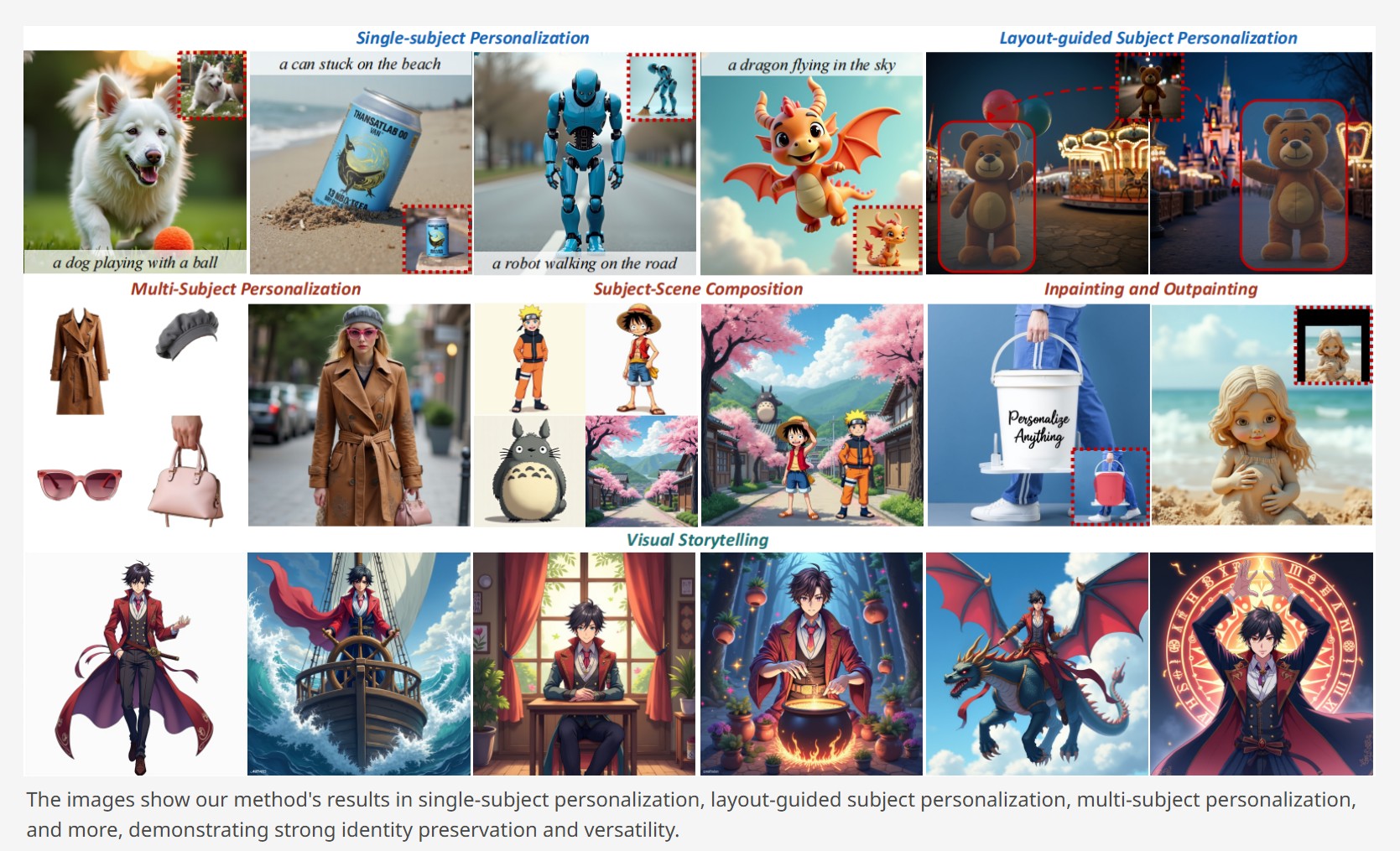

Personalize Anything – For Free with Diffusion Transformer

https://fenghora.github.io/Personalize-Anything-Page

Customize any subject with advanced DiT without additional fine-tuning.

-

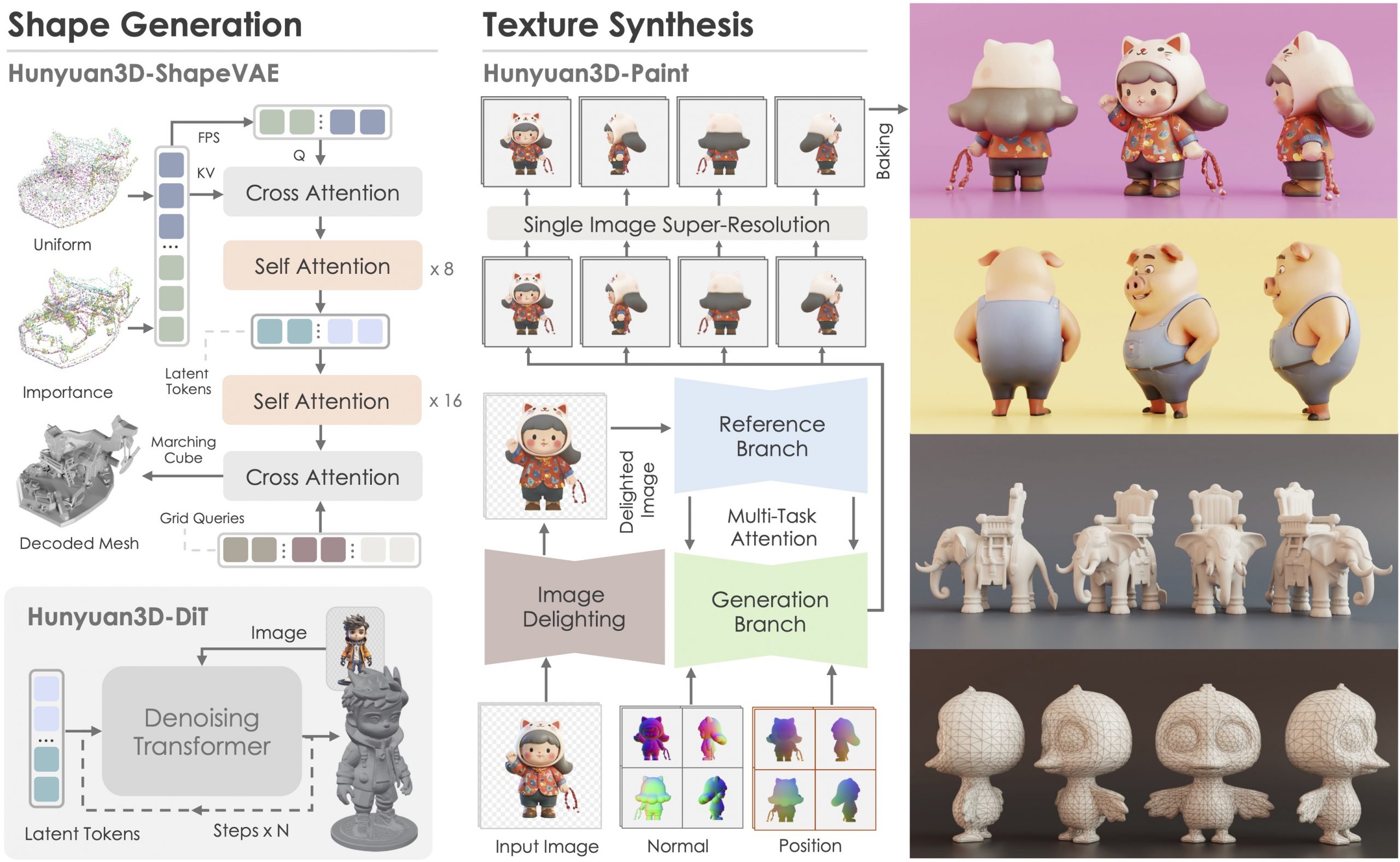

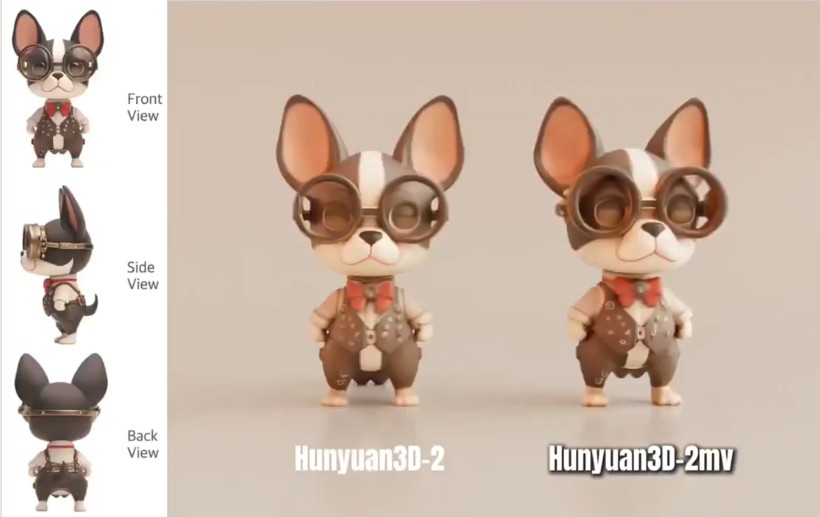

Tencent Hunyuan3D 2.1 goes Open Source and adds MV (Multi-view) and MV Mini

https://huggingface.co/tencent/Hunyuan3D-2mv

https://huggingface.co/tencent/Hunyuan3D-2mini

https://github.com/Tencent/Hunyuan3D-2

Tencent just made Hunyuan3D 2.1 open-source.

This is the first fully open-source, production-ready PBR 3D generative model with cinema-grade quality.

https://github.com/Tencent-Hunyuan/Hunyuan3D-2.1

What makes it special?

• Advanced PBR material synthesis brings realistic materials like leather, bronze, and more to life with stunning light interactions.

• Complete access to model weights, training/inference code, data pipelines.

• Optimized to run on accessible hardware.

• Built for real-world applications with professional-grade output quality.

They’re making it accessible to everyone:

• Complete open-source ecosystem with full documentation.

• Ready-to-use model weights and training infrastructure.

• Live demo available for instant testing.

• Comprehensive GitHub repository with implementation details.

-

ComfyDock – The Easiest (Free) Way to Safely Run ComfyUI Sessions in a Boxed Container

https://www.reddit.com/r/comfyui/comments/1j2x4qv/comfydock_the_easiest_free_way_to_run_comfyui_in/

ComfyDock is a tool that allows you to easily manage your ComfyUI environments via Docker.

Common Challenges with ComfyUI

- Custom Node Installation Issues: Installing new custom nodes can inadvertently change settings across the whole installation, potentially breaking the environment.

- Workflow Compatibility: Workflows are often tested with specific custom nodes and ComfyUI versions. Running these workflows on different setups can lead to errors and frustration.

- Security Risks: Installing custom nodes directly on your host machine increases the risk of malicious code execution.

How ComfyDock Helps

- Environment Duplication: Easily duplicate your current environment before installing custom nodes. If something breaks, revert to the original environment effortlessly.

- Deployment and Sharing: Workflow developers can commit their environments to a Docker image, which can be shared with others and run on cloud GPUs to ensure compatibility.

- Enhanced Security: Containers help to isolate the environment, reducing the risk of malicious code impacting your host machine.