LATEST POSTS

-

VES VFX INDUSTRY 2023 – GROWTH, GLOBALIZATION AND CHANGE

https://www.vfxvoice.com/vfx-industry-growth-globalization-and-change/

“The number of productions globally is now being rationalized to the reality of what can be produced. Demand was outstripping supply to such an extent that it was actually becoming unsustainable. What we are seeing now is more of a sensible and sustainable approach to content creation, and it is finding equilibrium – which is a good thing. There is still growth, but it is a lot more structured and sustainable.”

“VFX global market revenue will climb from $26.3 billion in 2021 to $48.9 billion in 2028”

“Demand for VFX looks likely to continue, though there is still the challenge of delivering the work within budgets and compressed schedules at a time of rising costs, particularly of labor.”

“The biggest challenge to our industry is bringing in the next generation and providing them with training and opportunities to succeed. Many of us were able to get a break somewhere or discover the potential for a career in VFX thanks to technical training or personal connections.”

-

Kristina Kashtanova – “This is how GPT-4 sees and hears itself”

“I used GPT-4 to describe itself. Then I used its description to generate an image, a video based on this image and a soundtrack.

Tools I used: GPT-4, Midjourney, Kaiber AI, Mubert, RunwayML

This is the description I used that GPT-4 had of itself as a prompt to text-to-image, image-to-video, and text-to-music. I put the video and sound together in RunwayML.

GPT-4 described itself as: ‘Imagine a sleek, metallic sphere with a smooth surface, representing the vast knowledge contained within the model. The sphere emits a soft, pulsating glow that shifts between various colors, symbolizing the dynamic nature of the AI as it processes information and generates responses. The sphere appears to float in a digital environment, surrounded by streams of data and code, reflecting the complex algorithms and computing power behind the AI.’”

-

ElportDigital – Adhesive TRANSPARENT LED NANO FILM

95% TRANSPARENCY – 4000 NITS BRIGHTNESS – 3.9 mm PIXEL PITCH INVISIBLE LED

https://www.onqdigitalgroup.com.au/post/adhesive-transparent-led-film-the-benefits-of-using

FEATURED POSTS

-

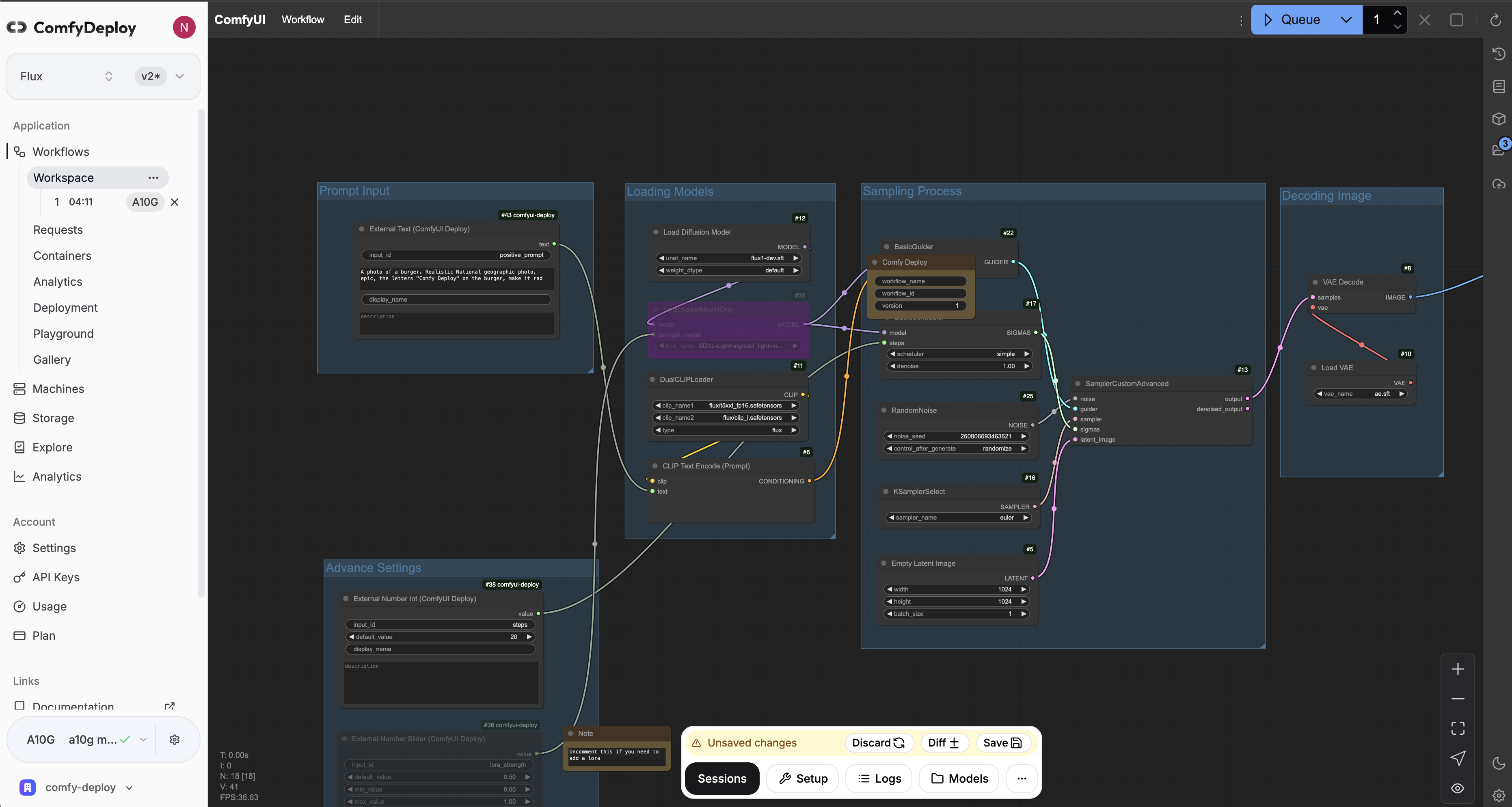

ComfyDeploy – A way for teams to use ComfyUI and power apps

https://www.comfydeploy.com/docs/v2/introduction

1 – Import your workflow

2 – Build a machine configuration to run your workflows on

3 – Download models into your private storage, for use in your workflows and by your team

4 – Run ComfyUI in the cloud to modify and test your workflows on cloud GPUs

5 – Expose workflow inputs with our custom nodes, for API and playground use

6 – Deploy APIs

7 – Let your team use your workflows in playground without using ComfyUI

-

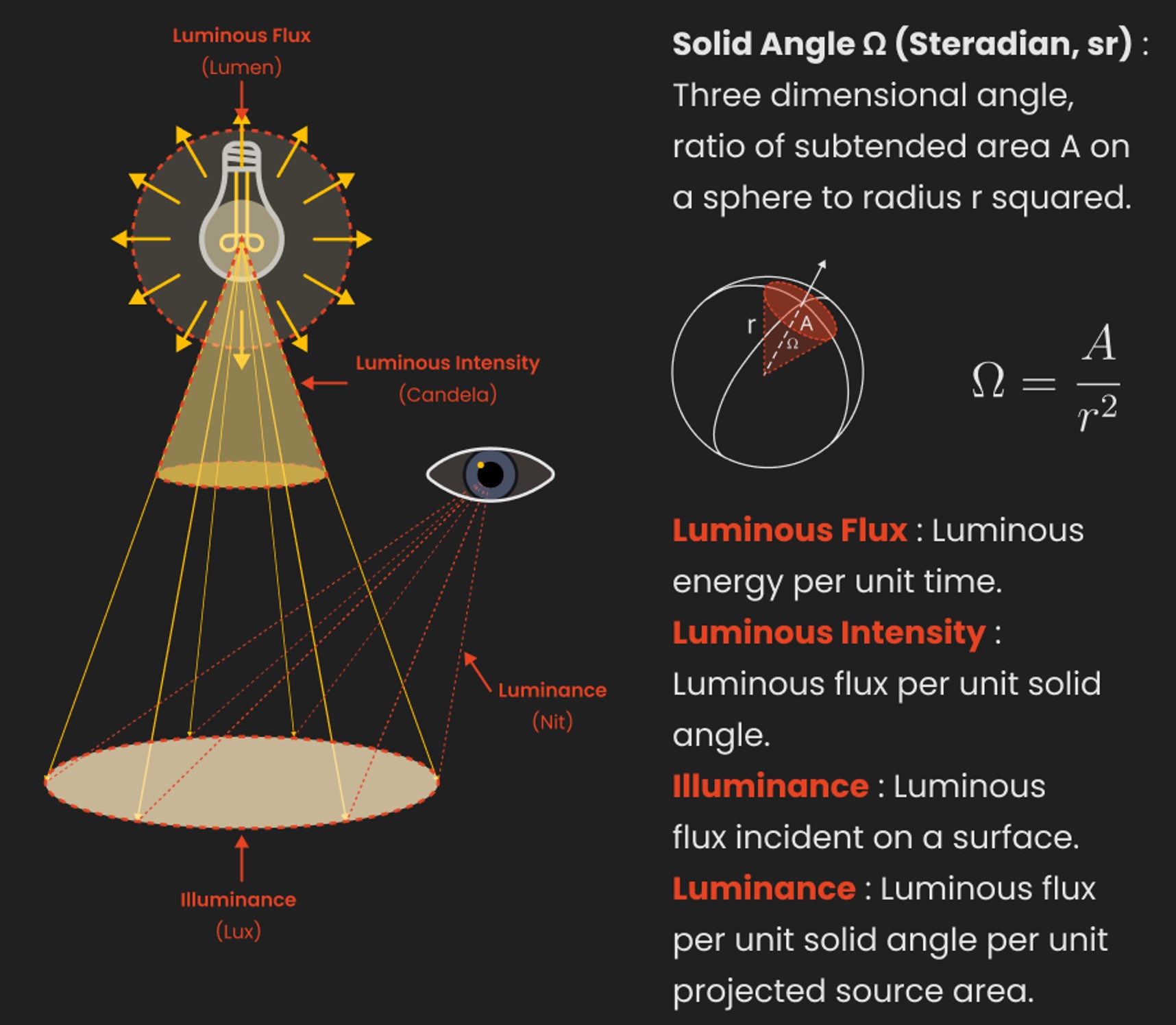

Björn Ottosson – How software gets color wrong

https://bottosson.github.io/posts/colorwrong/

Most software around us today is decent at accurately displaying colors. Unfortunately, processing of colors is another story and is often done badly.

To understand what the problem is, let’s start with an example of three ways of blending green and magenta:

- Perceptual blend – A smooth transition using a model designed to mimic human perception of color. The blending is done so that the perceived brightness and color varies smoothly and evenly.

- Linear blend – A model for blending color based on how light behaves physically. This type of blending can occur in many ways naturally, for example when colors are blended together by focus blur in a camera or when viewing a pattern of two colors at a distance.

- sRGB blend – This is how colors would normally be blended in computer software, using sRGB to represent the colors.

Let’s look at some more examples of blending of colors, to see how these problems surface more practically. The examples use strong colors since then the differences are more pronounced. This is using the same three ways of blending colors as the first example.

Instead of making it as easy as possible to work with color, most software makes it unnecessarily hard by doing image processing with representations not designed for it. Approximating the physical behavior of light with linear RGB models is one easy thing to do, but more work is needed to create image representations tailored for image processing and human perception.

Also see: