LATEST POSTS

-

3D TV is officially dead

http://www.businessinsider.com.au/3d-tv-is-dead-2017-1?r=US&IR=T

1- Not enough content. DirecTV and ESPN stopped broadcasting their 3D channels in 2012 and 2013.

2- The glasses needed for 3D were clunky and annoying, and they made people feel self-conscious while wearing them.

3- 3D TVs were and are perfectly good 2D TVs, so 3D features weren’t often used.

4- 3D movies were closely tied to Blu-ray Discs just as movie streaming started to gain traction.

5- 3D TVs need careful calibration and can cause eye strain.

6- Maybe it was always a gimmick. Ask yourself: Have 3D effects ever really impressed you or affected your viewing experience?

-

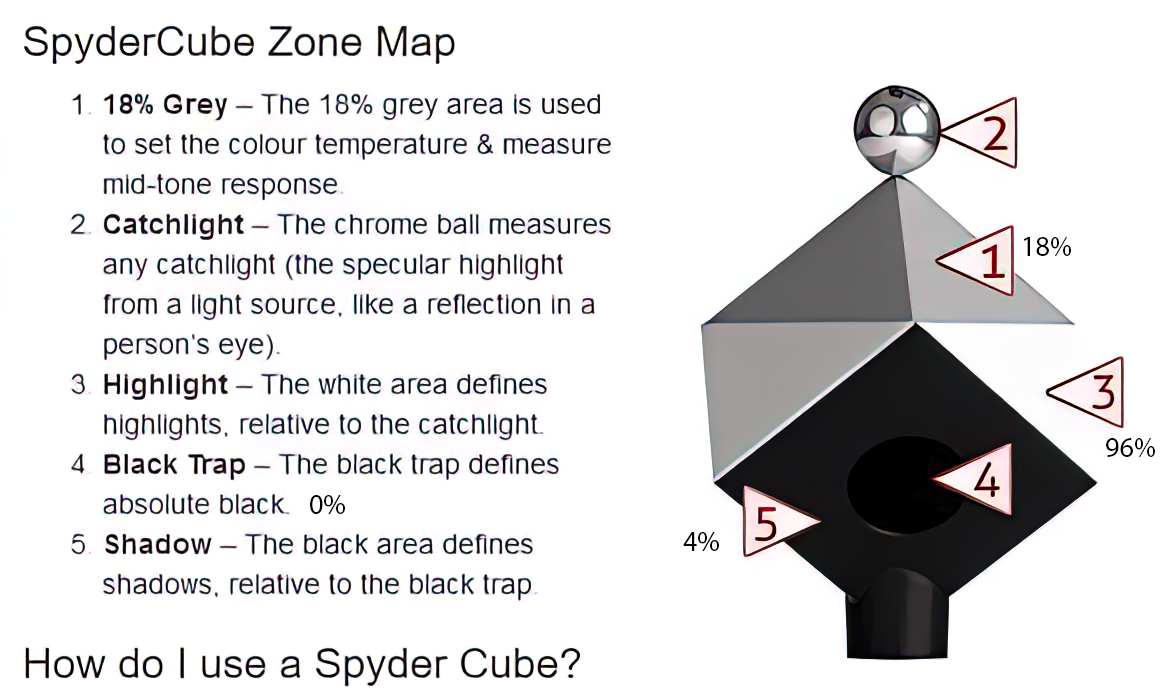

Composition – These are the basic lighting techniques you need to know for photography and film

http://www.diyphotography.net/basic-lighting-techniques-need-know-photography-film/

Amongst the basic techniques, there’s…

1- Side lighting – Literally how it sounds, lighting a subject from the side while they’re facing you

2- Rembrandt lighting – Here the light is at around 45 degrees over from the front of the subject, raised and pointing down at 45 degrees

3- Back lighting – Again, how it sounds, lighting a subject from behind. This can help to add drama with silhouettes

4- Rim lighting – This produces a light glowing outline around your subject

5- Key light – The main light source, and it’s not necessarily always the brightest light source

6- Fill light – This is used to fill in the shadows and provide detail that would otherwise be blackness

7- Cross lighting – Using two lights placed opposite each other to light two subjects

-

Composition – 5 tips for creating perfect cinematic lighting and making your work look stunning

http://www.diyphotography.net/5-tips-creating-perfect-cinematic-lighting-making-work-look-stunning/

1. Learn the rules of lighting

2. Learn when to break the rules

3. Make your key light larger

4. Reverse keying

5. Always be backlighting

-

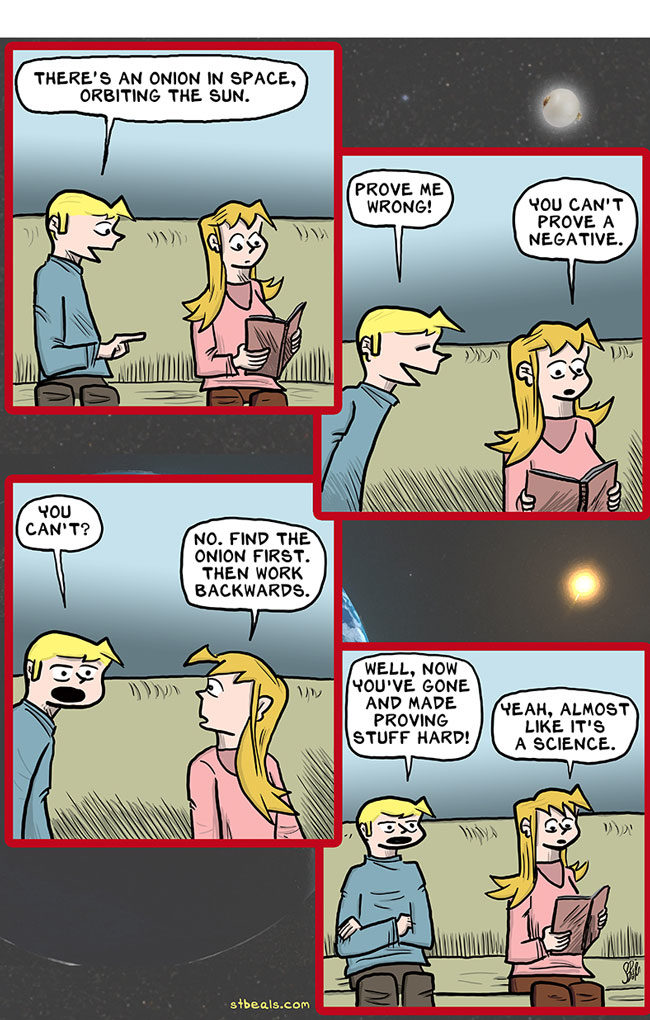

Prove me wrong. But you cannot prove a negative! The argument from ignorance.

The argument from ignorance (also called argumentum ad ignorantiam or negative proof) is a logical fallacy that claims a premise is true because it has not (yet) been proven false, or that a premise is false because it has not (yet) been proven true.

It is a common fallacy in human reasoning, which simply states:

“I don’t know what that is… in which case it must be this or that…”

It is often used as an attempt to shift the burden of proof onto the skeptic rather than the proponent of the idea. But the burden of proof must always be on the individual proposing existence, not the one questioning existence.

Ignorance is ignorance; no right to believe anything can be derived from it. In other matters no sensible person will behave so irresponsibly or rest content with such feeble grounds for his opinions and for the line he takes.

A common retort to a negative proof is to cite the Invisible Pink Unicorn or the Flying Spaghetti Monster as being just as valid as the entity proposed in the debate. This is similar to reductio ad absurdum, in that taking negative proof as legitimate means that one can prove practically anything, regardless of how absurd.

A religious apologist using the argument from ignorance would state something like, “the existence of God is true because there is no proof that the existence of God is false”. But a counter-apologist can use that same “argument” to state, “the nonexistence of God is true because there is no proof that the nonexistence of God is false”. This immediately demonstrates how absurd the argument from ignorance is by turning the tables on those who use this “argument” fallacy, like some religious apologists.

FEATURED POSTS

-

AI and the Law – Laurence Van Elegem : The era of gigantic AI models like GPT-4 is coming to an end

https://www.linkedin.com/feed/update/urn:li:activity:7061987804548870144

Sam Altman, CEO of OpenAI, dropped a 💣 at a recent MIT event, declaring that the era of gigantic AI models like GPT-4 is coming to an end. He believes that future progress in AI needs new ideas, not just bigger models.

So why is that revolutionary? Well, this is how OpenAI’s LLMs (the models that ‘feed’ chatbots like ChatGPT & Google Bard) grew exponentially over the years:

➡️GPT-2 (2019): 1.5 billion parameters

➡️GPT-3 (2020): 175 billion parameters

➡️GPT-4 (2023): amount undisclosed – but likely trillions of parameters

That kind of parameter growth is no longer tenable, feels Altman.
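
To put the two disclosed figures in perspective, here is a quick back-of-the-envelope sketch in Python. The names `params` and `growth_factor` are purely illustrative, and GPT-4 is left out because its parameter count is undisclosed.

```python
# Parameter counts as quoted in the post; GPT-4 is omitted (undisclosed).
params = {
    "GPT-2 (2019)": 1.5e9,
    "GPT-3 (2020)": 175e9,
}


def growth_factor(old: float, new: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


factor = growth_factor(params["GPT-2 (2019)"], params["GPT-3 (2020)"])
print(f"GPT-3 has roughly {factor:.0f}x the parameters of GPT-2")
```

By these numbers, a single model generation multiplied the parameter count by more than a hundred, which is the kind of jump the post argues cannot keep repeating.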

Why?:

➡️RETURNS: scaling up model size comes with diminishing returns.

➡️PHYSICAL LIMITS: there’s a limit to how many & how quickly data centers can be built.

➡️COST: ChatGPT cost over 100 million dollars to develop.

What is he NOT saying? That access to data is becoming damned hard & expensive. So if you have a model that keeps needing more data to become better, that’s a problem.

Why is it becoming harder and more expensive to access data?

🎨Copyright conundrums: Getty Images and individual artists like Sarah Andersen, Kelly McKernan & Karla Ortiz are suing AI companies over unauthorized use of their content. Universal Music asked Spotify & Apple Music to stop AI companies from accessing their songs for training.

🔐Privacy matters & regulation: Italy banned ChatGPT over privacy concerns (now back after changes). Germany, France, Ireland, Canada, and Spain remain suspicious. Samsung even warned employees not to use AI tools like ChatGPT for security reasons.

💸Data monetization: Twitter, Reddit, Stack Overflow & others want AI companies to pay up for training on their data. Unlike most artists, Grimes is allowing anyone to use her voice for AI-generated songs … for a 50% profit share.

🕸️Web3’s impact: If Web3 fulfills its promise, users could store data in personal vaults or cryptocurrency wallets, making it harder for LLMs to access the data they crave.

🌎Geopolitics: it’s increasingly difficult for data to cross country borders. Just think about China and TikTok.

😷Data contamination: We have this huge amount of ‘new’ – and sometimes hallucinated – data that is being generated by generative AI chatbots. What will happen if we feed that data back into their LLMs?

No wonder that people like Sam Altman are looking for ways to make the models better without having to use more data. If you want to know more, check our brand new Radar podcast episode, where I talked about this & more with Steven Van Belleghem, Peter Hinssen, Pascal Coppens & Julie Vens – De Vos. We also discussed Twitter, TikTok, Walmart, Amazon, Schmidt Futures, our Never Normal Tour with Mediafin in New York, the human energy crisis, Apple’s new high-yield savings account, the return of China, BYD, AI investment strategies, the power of proximity, the end of Buzzfeed news & much more.