LATEST POSTS

-

Microsoft is discontinuing its HoloLens headsets

https://www.theverge.com/2024/10/1/24259369/microsoft-hololens-2-discontinuation-support

Software support for the original HoloLens headset will end on December 10th.

Microsoft’s struggles with HoloLens have been apparent over the past two years.

-

Meta Horizon Hyperscape

Meta Hyperscape in a nutshell

Hyperscape technology allows us to scan spaces with just a phone and create photorealistic replicas of the physical world with high fidelity. You can experience these digital replicas on the Quest 3 or on the just announced Quest 3S.

https://www.youtube.com/clip/UgkxGlXM3v93kLg1D9qjJIKmvIYW-vHvdbd0

High Fidelity Enables a New Sense of Presence

This level of photorealism will enable a new way to be together, where spaces look, sound, and feel like you are physically there.

Simple Capture process with your mobile phone

Currently not available, but in the future, it will offer a new way to create worlds in Horizon and will be the easiest way to bring physical spaces to the digital world. Creators can capture physical environments on their mobile device and invite friends, fans, or customers to visit and engage in the digital replicas.

Cloud-based Processing and Rendering

Using Gaussian Splatting, a 3D modeling technique that renders fine details with high accuracy and efficiency, we process the model input data in the cloud and render the created model through cloud rendering and streaming on Quest 3 and the just announced Quest 3S.

Try it out yourself

If you are in the US and you have a Meta Quest 3 or 3S you can try it out here:

https://www.meta.com/experiences/meta-horizon-hyperscape-demo/7972066712871980/

-

Principles of Interior Design – Balance

https://www.yankodesign.com/2024/09/18/principles-of-interior-design-balance

The three types of balance include:

- Symmetrical Balance

- Asymmetrical Balance

- Radial Balance

-

Netflix Art Of Nimona digital art book

-

Sam Altman – The Intelligence Age

In the next couple of decades, we will be able to do things that would have seemed like magic to our grandparents.

This phenomenon is not new, but it will be newly accelerated. People have become dramatically more capable over time; we can already accomplish things now that our predecessors would have believed to be impossible.

We are more capable not because of genetic change, but because we benefit from the infrastructure of society being way smarter and more capable than any one of us; in an important sense, society itself is a form of advanced intelligence. Our grandparents – and the generations that came before them – built and achieved great things. They contributed to the scaffolding of human progress that we all benefit from. AI will give people tools to solve hard problems and help us add new struts to that scaffolding that we couldn’t have figured out on our own. The story of progress will continue, and our children will be able to do things we can’t.

FEATURED POSTS

-

SlowMoVideo – How to make a slow motion shot with the open source program

http://slowmovideo.granjow.net/

slowmoVideo is an open-source program that creates slow-motion videos from your footage.

Slow motion cinematography is the result of playing back frames for a longer duration than they were exposed. For example, if you expose 240 frames of film in one second, then play them back at 24 fps, the resulting movie is 10 times longer (slower) than the original filmed event….
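The arithmetic in that example can be checked with a few lines of Python. This is only a sketch of the ratio described above; the function names `slowdown_factor` and `playback_duration` are made up for illustration and do not come from slowmoVideo.

```python
def slowdown_factor(capture_fps: float, playback_fps: float) -> float:
    """How many times longer the played-back clip lasts than the filmed event."""
    if capture_fps <= 0 or playback_fps <= 0:
        raise ValueError("frame rates must be positive")
    return capture_fps / playback_fps


def playback_duration(event_seconds: float, capture_fps: float, playback_fps: float) -> float:
    """Screen time in seconds for an event of the given real-world duration."""
    return event_seconds * slowdown_factor(capture_fps, playback_fps)


# The example from the text: 240 frames exposed in one second, played back at 24 fps.
print(slowdown_factor(240, 24))         # 10.0
print(playback_duration(1.0, 240, 24))  # 10.0 seconds on screen
```

The same ratio explains why footage captured at ordinary video rates has so little room to slow down: a capture rate close to the playback rate gives a factor close to 1.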

Film cameras are relatively simple mechanical devices that allow you to crank up the speed to whatever rate the shutter and pull-down mechanism allow. Some film cameras can operate at 2,500 fps or higher (although film shot in these cameras often needs some readjustment in postproduction). Video, on the other hand, is always captured, recorded, and played back at a fixed rate, with a current limit around 60fps. This makes extreme slow motion effects harder to achieve (and less elegant) on video, because slowing down the video results in each frame held still on the screen for a long time, whereas with high-frame-rate film there are plenty of frames to fill the longer durations of time. On video, the slow motion effect is more like a slide show than smooth, continuous motion.

One obvious solution is to shoot film at high speed, then transfer it to video (a case where film still has a clear advantage, sorry George). Another possibility is to cross dissolve or blur from one frame to the next. This adds a smooth transition from one still frame to the next. The blur reduces the sharpness of the image, and compared to slowing down images shot at a high frame rate, this is somewhat of a cheat. However, there isn’t much you can do about it until video can be recorded at much higher rates. Of course, many film cameras can’t shoot at high frame rates either, so the whole super-slow-motion endeavor is somewhat specialized no matter what medium you are using. (There are some high speed digital cameras available now that allow you to capture lots of digital frames directly to your computer, so technology is starting to catch up with film. However, this feature isn’t going to appear in consumer camcorders any time soon.)
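The cross dissolve mentioned above can be sketched as a linear blend between neighbouring frames. This is a minimal illustration under simplifying assumptions: each frame is a flat list of pixel intensities, the slowdown factor is an integer, and the names `cross_dissolve` and `slow_down` are hypothetical. It shows plain frame blending as described in the text, and makes no claim about how slowmoVideo itself generates in-between frames.

```python
from typing import List, Sequence

Frame = List[float]  # assumed representation: one frame as a flat list of pixel intensities


def cross_dissolve(a: Sequence[float], b: Sequence[float], t: float) -> Frame:
    """Linearly blend frame a into frame b; t=0 returns a, t=1 returns b."""
    if len(a) != len(b):
        raise ValueError("frames must have the same size")
    return [(1.0 - t) * pa + t * pb for pa, pb in zip(a, b)]


def slow_down(frames: Sequence[Sequence[float]], factor: int) -> List[Frame]:
    """Stretch a clip by an integer factor, filling the gaps with cross dissolves."""
    if factor < 1:
        raise ValueError("factor must be >= 1")
    if not frames:
        return []
    out: List[Frame] = []
    for a, b in zip(frames, frames[1:]):
        # Emit the original frame (step 0) followed by factor-1 blended in-betweens.
        for step in range(factor):
            out.append(cross_dissolve(a, b, step / factor))
    out.append(list(frames[-1]))  # keep the final original frame
    return out


# Two tiny 2-pixel frames stretched 4x: the clip grows from 2 frames to 5.
clip = [[0.0, 0.0], [1.0, 0.5]]
for frame in slow_down(clip, 4):
    print(frame)
```

Each in-between frame is a mix of two different moments, which is why moving objects look ghosted or soft rather than truly interpolated. That is the loss of sharpness the paragraph above describes.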

-

59 AI Filmmaking Tools For Your Workflow

https://curiousrefuge.com/blog/ai-filmmaking-tools-for-filmmakers

- Runway

- PikaLabs

- Pixverse (free)

- Haiper (free)

- Moonvalley (free)

- Morph Studio (free)

- SORA

- Google Veo

- Stable Video Diffusion (free)

- Leonardo

- Krea

- Kaiber

- Letz.AI

- Midjourney

- Ideogram

- DALL-E

- Firefly

- Stable Diffusion

- Google Imagen 3

- Polycam

- LTX Studio

- Simulon

- Elevenlabs

- Auphonic

- Adobe Enhance

- Adobe’s AI Rotoscoping

- Adobe Photoshop Generative Fill

- Canva Magic Brush

- Akool

- Topaz Labs

- Magnific.AI

- FreePik

- BigJPG

- LeiaPix

- Move AI

- Mootion

- Heygen

- Synthesia

- ChatGPT-4

- Claude 3

- Nolan AI

- Google Gemini

- Meta Llama 3

- Suno

- Udio

- Stable Audio

- Soundful

- Google MusicLM

- Viggle

- SyncLabs

- Lalamu

- LensGo

- D-ID

- WonderStudio

- Cuebric

- Blockade Labs

- ChatGPT-4o

- Luma Dream Machine

- Pallaidium (free)

-

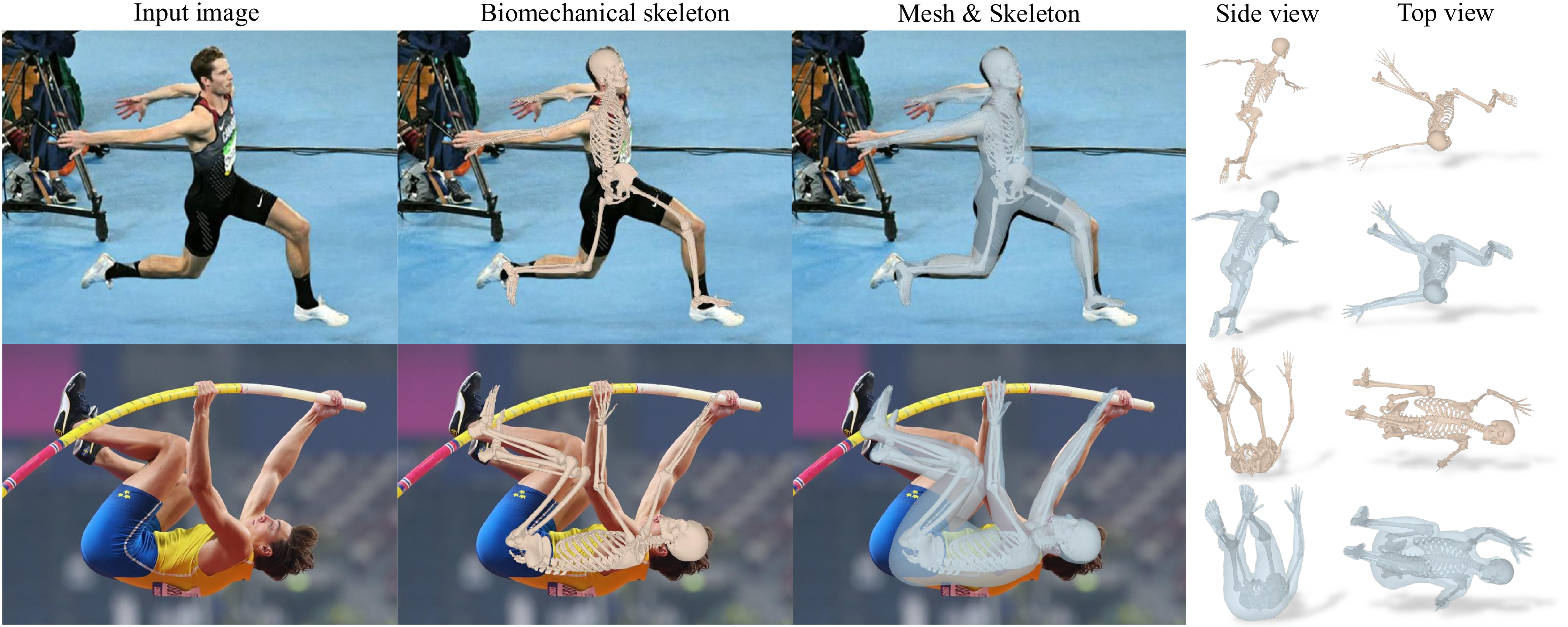

Eyeline Labs VChain – Chain-of-Visual-Thought for Reasoning in Video Generation for better AI physics

https://eyeline-labs.github.io/VChain/

https://github.com/Eyeline-Labs/VChain

Recent video generation models can produce smooth and visually appealing clips, but they often struggle to synthesize complex dynamics with a coherent chain of consequences. Accurately modeling visual outcomes and state transitions over time remains a core challenge. In contrast, large language and multimodal models (e.g., GPT-4o) exhibit strong visual state reasoning and future prediction capabilities. To bridge these strengths, we introduce VChain, a novel inference-time chain-of-visual-thought framework that injects visual reasoning signals from multimodal models into video generation. Specifically, VChain contains a dedicated pipeline that leverages large multimodal models to generate a sparse set of critical keyframes as snapshots, which are then used to guide the sparse inference-time tuning of a pre-trained video generator only at these key moments. Our approach is tuning-efficient, introduces minimal overhead and avoids dense supervision. Extensive experiments on complex, multi-step scenarios show that VChain significantly enhances the quality of generated videos.