LATEST POSTS

-

Painting boardgame miniatures with acrylic paint markers

What to consider / pros & cons

| Feature | Why it matters for miniatures |
|---|---|
| Tip size/type (fine brush, bullet, chisel) | Miniatures have small, detailed areas. If the tip is too thick, you’ll lose detail and the paint blobs easily. Brush or very fine bullet/needle tips are best. |
| Opacity / pigment strength | You want strong pigment so you don’t need many layers. Thin or translucent marker paint can be frustrating. |
| Flow / consistency | Too thick → clogs and blobs; too runny → you lose control or it bleeds over edges. |
| Drying time | Slower drying lets you blend or correct mistakes; too fast and you might get streaks. Also, new layers might lift earlier coats that aren’t fully dry. |
| Adherence & primer | Marker paint may not stick well to smooth plastic/resin if unprimed. Priming helps a lot, and sealing afterwards helps protect the work. |
| Durability | Miniatures get handled, so you want paint and sealer that resist chipping. |

-

Looking Glass Factory – 4K Hololuminescent™ Displays (HLD)

Hololuminescent™ Display (HLD for short): razor thin (as thin as 17 mm), full 4K resolution, and capable of generating holographic presence at a magical price.

A breakthrough display that creates a holographic stage for people, products, and characters, delivering the magic of spatial presence in a razor-thin form factor.

Our patented hybrid technology uses an embedded holographic layer to create the 3D volume, transforming standard video into dimensional, lifelike experiences.

Create impossible spatial experiences from standard video

- Characters, people, and products appear physically present in the room

- The embedded holographic layer creates depth and dimension that makes subjects appear to float in space

- First scalable holographic illusion that doesn’t require a room-sized installation

- The dimensional depth and presence of Pepper’s ghost illusions, without the bulk

Built for the real world

- FHD clarity (HLD 16″) and 4K clarity (HLD 27″ and HLD 86″), high brightness for any lighting environment

- Thin profile fits anywhere traditional displays go

- Wall-mounted, it creates the illusion of punching a hole through the wall into another dimension

- Films beautifully for social sharing – the magic translates on camera

Works with what you have

- Runs on your existing digital signage solution, CMS, and 4K video distribution infrastructure

- Standard HDMI input or USB loading

- 2D video workflow with straightforward, specific requirements: full-size subjects on green/white backgrounds or created with our templates (Cinema4D, Unity, Adobe Premiere Pro)

16″: $2,000 USD

27″: $4,000 USD

86″: $20,000 USD

-

Luma AI releases Ray3 – 16bit HDR, reasoning video model

This is Ray3: the world’s first reasoning video model, and the first to generate studio-grade HDR. It now comes with an all-new Draft Mode for rapid iteration in creative workflows, and state-of-the-art physics and consistency. Available now for free in Dream Machine.

Ray3’s native HDR delivers studio-grade fidelity. It generates video in 10, 12 & 16-bit high dynamic range with details in shadows and highlights in vivid color. Convert SDR to HDR, export EXR for seamless integration and unprecedented control in post-production workflows.

Reasoning enables Ray3 to understand nuanced directions, think in visuals and language tokens, and judge its generations to give you reliably better results. With Ray3 you can create more complex scenes, intricate multi-step motion, and do it all faster.

With reasoning, Ray3 can interpret visual annotations enabling creatives to now draw or scribble on images to direct performance, blocking, and camera movement. Refine motion, objects, and composition for precise visual control, all without prompting.

Draft Mode is a new way to iterate video ideas, fast. Explore ideas in a state of flow and get to your perfect shot. With Ray3’s new Hi-Fi diffusion pass, master your best shots into production-ready high-fidelity 4K HDR footage. 5x faster. 5x cheaper. 100x more fun.

Ray3 offers production-ready fidelity, high-octane motion, preserved anatomy, physics simulations, world exploration, complex crowds, interactive lighting, caustics, motion blur, photorealism, and nuanced detail, delivering visuals ready for high-end creative production pipelines.

Ray3 is an intelligent video model designed to tell stories. It is capable of thinking and reasoning in visuals and offers state-of-the-art physics and consistency. In a world first, Ray3 generates videos in 16-bit high dynamic range color, bringing generative video to pro studio pipelines.

The all-new Draft Mode enables you to explore many more ideas, much faster, and tell better stories than ever before.

-

AI and the Law – Disney, Warner Bros. Discovery and NBCUniversal sue Chinese AI firm MiniMax

On Tuesday, the three media companies filed a lawsuit against MiniMax, a Chinese AI company reportedly valued at $4 billion, alleging “willful and brazen” copyright infringement.

MiniMax operates Hailuo AI.

-

Mariko Mori – Kamitate Stone at Sean Kelly Gallery

Mariko Mori, the internationally celebrated artist who blends technology, spirituality, and nature, debuts Kamitate Stone I this October at Sean Kelly Gallery in New York. The work continues her exploration of luminous form, energy, and transcendence.

-

Vimeo Enters into Definitive Agreement to Be Acquired by Bending Spoons for $1.38 Billion

-

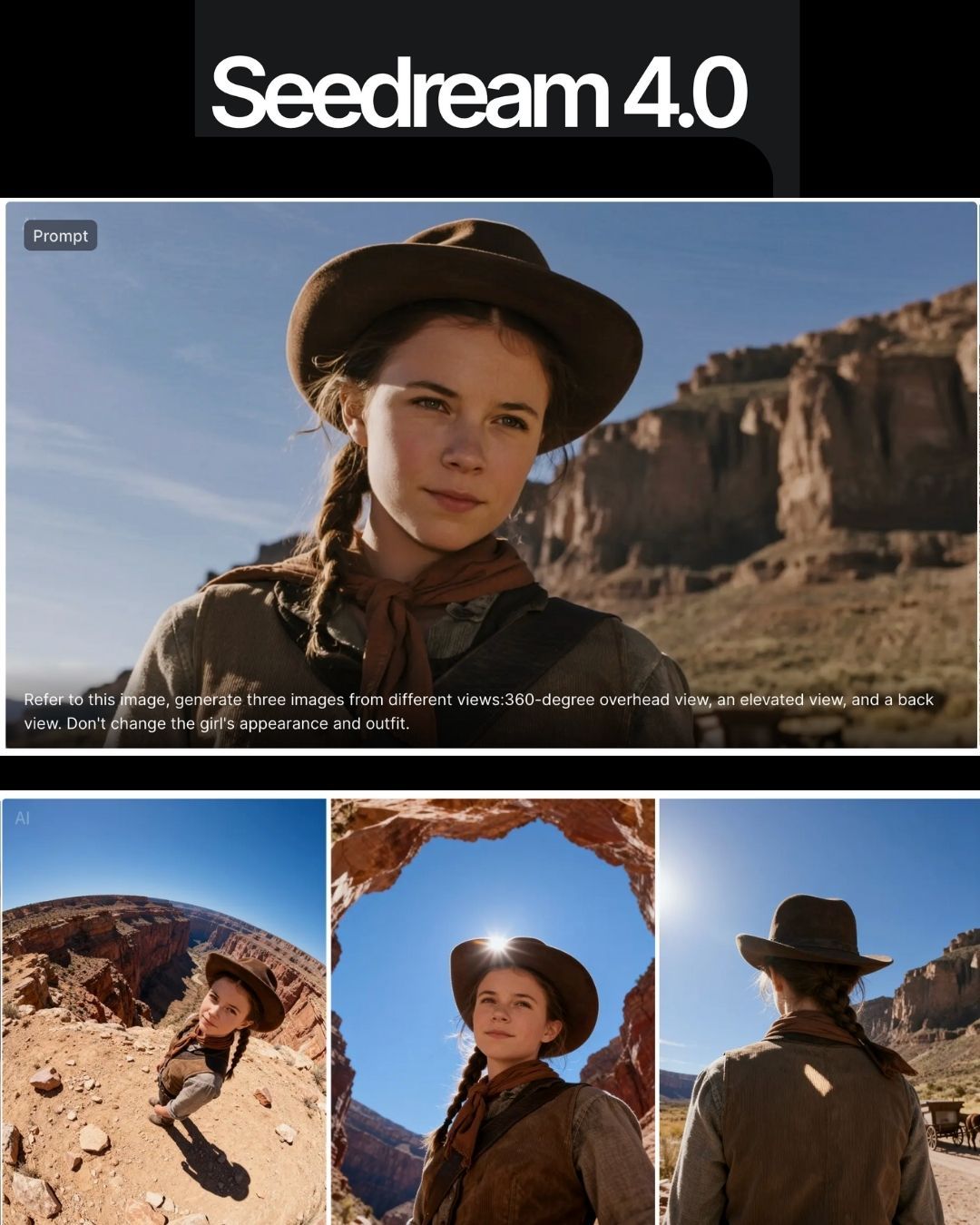

ByteDance Seedream 4.0 – Super‑fast, 4K, multi-image support

https://seed.bytedance.com/en/seedream4_0

➤ Super‑fast, high‑resolution results – resolutions up to 4K, producing a 2K image in less than 1.8 seconds, all while maintaining sharpness and realism.

➤ At 4K, cost as low as $0.03 per generation.

➤ Natural‑language editing – You can instruct the model to “remove the people in the background,” “add a helmet” or “replace this with that,” and it executes without needing complicated prompts.

➤ Multi‑image input and output – It can combine multiple images, transfer styles and produce storyboards or series with consistent characters and themes.

FEATURED POSTS

-

The new Blackmagic URSA Cine Immersive camera – 8160 x 7200 resolution per eye

Based on the new Blackmagic URSA Cine platform, the new Blackmagic URSA Cine Immersive model features a fixed, custom lens system with a sensor that features 8160 x 7200 resolution per eye with pixel level synchronization. It has an extremely wide 16 stops of dynamic range, and shoots at 90 fps stereoscopic into a Blackmagic RAW Immersive file. The new Blackmagic RAW Immersive file format is an enhanced version of Blackmagic RAW that’s been designed to make immersive video simple to use through the post production workflow.
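To put the quoted sensor figures in perspective, here is a rough back-of-the-envelope calculation of the raw pixel count the camera has to capture. It is a sketch under stated assumptions: both eyes are taken to be captured at the full 8160 x 7200 on every frame at 90 fps, and bit depth and Blackmagic RAW compression are ignored entirely, so the result is a pixel count, not a file size or data rate. The names are illustrative only.

```python
# Specs quoted in the post; EYES = 2 assumes one full-resolution image per eye per frame.
WIDTH_PER_EYE = 8160
HEIGHT_PER_EYE = 7200
EYES = 2
FPS = 90


def pixel_budget(width: int, height: int, eyes: int, fps: int) -> tuple[int, int, int]:
    """Return (pixels per eye, pixels per stereo frame, pixels per second)."""
    per_eye = width * height
    per_frame = per_eye * eyes
    per_second = per_frame * fps
    return per_eye, per_frame, per_second


per_eye, per_frame, per_second = pixel_budget(WIDTH_PER_EYE, HEIGHT_PER_EYE, EYES, FPS)
print(f"{per_eye / 1e6:.1f} MP per eye")                # 58.8 MP per eye
print(f"{per_frame / 1e6:.1f} MP per stereo frame")     # 117.5 MP per stereo frame
print(f"{per_second / 1e9:.2f} gigapixels per second")  # 10.58 gigapixels per second
```

Roughly ten billion photosites per second before compression helps explain why a dedicated RAW Immersive format is part of the package.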

-

Methods for creating motion blur in Stop motion

en.wikipedia.org/wiki/Go_motion

Petroleum jelly

This crude but reasonably effective technique involves smearing petroleum jelly (“Vaseline”) on a plate of glass in front of the camera lens (also known as vaselensing), then cleaning and reapplying it after each shot. It is a time-consuming process, but one which creates a blur around the model. This technique was used for the endoskeleton in The Terminator. It was also employed by Jim Danforth to blur the pterodactyl’s wings in Hammer Films’ When Dinosaurs Ruled the Earth, and by Randall William Cook on the terror dogs sequence in Ghostbusters.

Bumping the puppet

Gently bumping or flicking the puppet before taking the frame will produce a slight blur; however, care must be taken that the puppet does not move too much and that no props or set pieces are bumped or moved.

Moving the table

Moving the table on which the model is standing while the film is being exposed creates a slight, realistic blur. This technique was developed by Ladislas Starevich: when the characters ran, he moved the set in the opposite direction. This is seen in The Little Parade when the ballerina is chased by the devil. Starevich also used this technique on his films The Eyes of the Dragon, The Magical Clock and The Mascot. Aardman Animations used this for the train chase in The Wrong Trousers and again during the lorry chase in A Close Shave. In both cases the cameras were moved physically during a 1-2 second exposure. The technique was revived for the full-length Wallace & Gromit: The Curse of the Were-Rabbit.

Go motion

The most sophisticated technique, go motion, was originally developed for The Empire Strikes Back, where it was used for some shots of the tauntauns, and was later used on films like Dragonslayer. It is quite different from traditional stop motion. The model is essentially a rod puppet: the rods are attached to motors linked to a computer that records the movements as the model is animated in the traditional way. When enough movements have been made, the model is reset to its original position, the camera rolls, and the recorded moves are played back, moving the model across the table. Because the model is moving during each exposure, motion blur is created.

A variation of go motion was used in E.T. the Extra-Terrestrial to partially animate the children on their bicycles.
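The common idea behind moving the table and go motion is that the subject keeps moving while the shutter is open, so each frame integrates several positions instead of one. The toy model below illustrates that in one dimension: a bright "puppet" segment is rendered at evenly spaced positions during a single exposure and the sub-frames are averaged. It is a hypothetical numerical sketch (the functions `render_row` and `expose_moving` are made up for illustration), not how any studio actually implemented the effect.

```python
def render_row(width: int, start: int, length: int) -> list[float]:
    """One sharp 1-D 'frame': 1.0 where the object covers a pixel, 0.0 elsewhere."""
    return [1.0 if start <= x < start + length else 0.0 for x in range(width)]


def expose_moving(width: int, start: int, length: int, travel: int, samples: int) -> list[float]:
    """Average `samples` sub-frames while the object moves `travel` pixels with the shutter open."""
    acc = [0.0] * width
    for i in range(samples):
        # Object position at sub-sample i, evenly spaced across the exposure
        offset = round(travel * i / max(samples - 1, 1))
        frame = render_row(width, start + offset, length)
        acc = [a + f for a, f in zip(acc, frame)]
    # Dividing by the sample count models the film integrating light over the exposure
    return [a / samples for a in acc]


still = expose_moving(10, 2, 3, travel=0, samples=3)   # classic stop motion: hard edges
moving = expose_moving(10, 2, 3, travel=2, samples=3)  # go motion / moving table: smeared edges
print([round(v, 2) for v in still])
print([round(v, 2) for v in moving])
```

With `travel=0` every sub-frame is identical and the edges stay hard, which is the "strobing" look of classic stop motion; with movement, the edges ramp through intermediate values while the total brightness of the object is preserved.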

-

Scene Referred vs Display Referred color workflows

A display-referred workflow is tied to the target hardware; as such, it bakes the display’s color requirements into every type of media output.

A scene-referred workflow instead keeps everything in a common, unified wide-gamut space and targets each audience or display through CDLs and DI (digital intermediate) libraries.

This way, the color information stays untouched and is only “transformed” as and when needed.

Sources:

– Victor Perez – Color Management Fundamentals & ACES Workflows in Nuke

– https://z-fx.nl/ColorspACES.pdf

– Wicus
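A minimal sketch of the scene-referred idea, assuming a scene-linear RGB pixel as the stored master: the look (a CDL-style slope/offset/power plus saturation grade) and the display encoding are applied only when an output is requested, and the stored values are never modified. This is a deliberate simplification. The function names are hypothetical, the sRGB encoding curve stands in for a proper output transform (real ACES pipelines would use an OCIO config with the appropriate output transforms), and CDLs are often applied in a log working space rather than on linear values as done here.

```python
from typing import Optional, Tuple

RGB = Tuple[float, float, float]

# Rec.709 luma weights, used here for the CDL saturation step
LUMA = (0.2126, 0.7152, 0.0722)


def apply_cdl(rgb: RGB, slope: RGB, offset: RGB, power: RGB, sat: float = 1.0) -> RGB:
    """CDL-style grade: (in * slope + offset) ** power per channel, then saturation."""
    graded = []
    for value, s, o, p in zip(rgb, slope, offset, power):
        v = value * s + o
        graded.append(max(v, 0.0) ** p)  # only negatives are clamped, so values above 1.0 survive
    luma = sum(w * c for w, c in zip(LUMA, graded))
    return tuple(luma + sat * (c - luma) for c in graded)


def srgb_encode(v: float) -> float:
    """Stand-in display transform: clamp to [0, 1] and apply the sRGB encoding curve."""
    v = min(max(v, 0.0), 1.0)
    return 12.92 * v if v <= 0.0031308 else 1.055 * v ** (1 / 2.4) - 0.055


def to_display(scene_rgb: RGB, cdl: Optional[dict] = None) -> RGB:
    """Grade (optionally) and encode for display without modifying the scene-referred input."""
    graded = apply_cdl(scene_rgb, **cdl) if cdl else scene_rgb
    return tuple(srgb_encode(c) for c in graded)


scene_pixel = (0.18, 0.18, 0.18)  # scene-linear mid grey, stored untouched
warm = {"slope": (1.1, 1.0, 0.9), "offset": (0.0, 0.0, 0.0), "power": (1.0, 1.0, 1.0), "sat": 1.0}
print(to_display(scene_pixel))        # neutral preview for an sRGB-style display
print(to_display(scene_pixel, warm))  # graded preview; scene_pixel itself is unchanged
```

Swapping the display transform or the CDL changes only the output; a display-referred workflow would instead have baked one of these results into the stored media.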

-

Is a Macbeth Colour Rendition Chart the Safest Way to Calibrate a Camera?

www.colour-science.org/posts/the-colorchecker-considered-mostly-harmless/

“Unless you have all the relevant spectral measurements, a colour rendition chart should not be used to perform colour-correction of camera imagery but only for white balancing and relative exposure adjustments.”

“Using a colour rendition chart for colour-correction might dramatically increase error if the scene light source spectrum is different from the illuminant used to compute the colour rendition chart’s reference values.”

“other factors make using a colour rendition chart unsuitable for camera calibration:

– Uncontrolled geometry of the colour rendition chart with the incident illumination and the camera.

– Unknown sample reflectances and ageing as the colour of the samples vary with time.

– Low samples count.

– Camera noise and flare.

– Etc…”

“Those issues are well understood in the VFX industry, and when receiving plates, we almost exclusively use colour rendition charts to white balance and perform relative exposure adjustments, i.e. plate neutralisation.”
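The restricted use described in the quotes, white balance plus relative exposure only, amounts to three per-channel gains derived from a neutral patch, rather than a fitted 3x3 colour-correction matrix. The sketch below assumes scene-linear RGB values sampled from a neutral patch with non-zero channels; the target level of 0.18, the sample values and the function names are hypothetical choices for illustration.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def neutralisation_gains(grey_patch: RGB, target_grey: float = 0.18) -> RGB:
    """Per-channel gains that make a neutral chart patch grey and set its level.

    Assumes scene-linear RGB with non-zero channels; target_grey is a hypothetical choice.
    """
    r, g, b = grey_patch
    white_balance = (g / r, 1.0, g / b)  # scale R and B to match G
    exposure = target_grey / g           # relative exposure: move G to the target level
    return tuple(wb * exposure for wb in white_balance)


def apply_gains(rgb: RGB, gains: RGB) -> RGB:
    """Diagonal (per-channel) correction only: no 3x3 colour matrix is fitted."""
    return tuple(c * k for c, k in zip(rgb, gains))


measured_patch = (0.20, 0.25, 0.30)  # hypothetical sampled values of a neutral patch on the plate
gains = neutralisation_gains(measured_patch)
print(apply_gains(measured_patch, gains))  # approximately (0.18, 0.18, 0.18)
```

Keeping the correction diagonal means the chart only has to be trusted for its neutral patches, which sidesteps the spectral, geometry and ageing issues listed above.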