LATEST POSTS

-

Microsoft DAViD – Data-efficient and Accurate Vision Models from Synthetic Data

Our human-centric dense prediction model delivers high-quality, detailed (depth) results while achieving remarkable efficiency, running orders of magnitude faster than competing methods, with inference speeds as low as 21 milliseconds per frame (the large multi-task model on an NVIDIA A100). It reliably captures a wide range of human characteristics under diverse lighting conditions, preserving fine-grained details such as hair strands and subtle facial features. This demonstrates the model’s robustness and accuracy in complex, real-world scenarios.

https://microsoft.github.io/DAViD

The state of the art in human-centric computer vision achieves high accuracy and robustness across a diverse range of tasks. The most effective models in this domain have billions of parameters, thus requiring extremely large datasets, expensive training regimes, and compute-intensive inference. In this paper, we demonstrate that it is possible to train models on much smaller but high-fidelity synthetic datasets, with no loss in accuracy and higher efficiency. Using synthetic training data provides us with excellent levels of detail and perfect labels, while providing strong guarantees for data provenance, usage rights, and user consent. Procedural data synthesis also provides us with explicit control on data diversity, that we can use to address unfairness in the models we train. Extensive quantitative assessment on real input images demonstrates accuracy of our models on three dense prediction tasks: depth estimation, surface normal estimation, and soft foreground segmentation. Our models require only a fraction of the cost of training and inference when compared with foundational models of similar accuracy.

-

Embedding frame ranges into QuickTime movies with FFmpeg

QuickTime (.mov) files are fundamentally time-based, not frame-based, and so don’t have a built-in, uniform “first frame/last frame” field you can set as numeric frame IDs. Instead, tools like Shotgun Create rely on the timecode track and the movie’s duration to infer frame numbers. If you want Shotgun to pick up a non-default frame range (e.g. start at 1001, end at 1064), you must bake in an SMPTE timecode that corresponds to your desired start frame, and ensure the movie’s duration matches your clip length.

How Shotgun Reads Frame Ranges

- Default start frame is 1. If no timecode metadata is present, Shotgun assumes the movie begins at frame 1.

- Timecode ⇒ frame number. Shotgun Create “honors the timecodes of media sources,” mapping the embedded TC to frame IDs. For example, a 24 fps QuickTime tagged with a start timecode of 00:00:41:17 will be interpreted as beginning on frame 1001 (1001 ÷ 24 fps ≈ 41.71 s).
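The timecode-to-frame mapping described above can be checked with a few lines of Python. This is only a sketch of the arithmetic, not Shotgun's actual implementation: the function name `timecode_to_frame` is hypothetical, and it assumes non-drop-frame timecode with an integer frame rate (so 23.976 or 29.97 fps material is out of scope).

```python
def timecode_to_frame(timecode: str, fps: int) -> int:
    """Convert a non-drop-frame SMPTE timecode (HH:MM:SS:FF) to a frame number.

    Assumes an integer frame rate and that 00:00:00:00 is frame 0.
    """
    hours, minutes, seconds, frames = (int(part) for part in timecode.split(":"))
    if frames >= fps:
        raise ValueError(f"frame field {frames} is out of range for {fps} fps")
    total_seconds = hours * 3600 + minutes * 60 + seconds
    return total_seconds * fps + frames


# 41 s * 24 fps + 17 frames = 1001
print(timecode_to_frame("00:00:41:17", 24))
```

The frame field is validated against the frame rate because a value such as `:24` at 24 fps is not a legal timecode.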

Embedding a Start Timecode

QuickTime uses a tmcd (timecode) track. You can bake in an SMPTE track via FFmpeg's -timecode flag or via Compressor/encoder settings:

- Compute your start TC.

- Desired start frame = 1001

- Frame 1001 at 24 fps ⇒ 1001 ÷ 24 ≈ 41.708 s ⇒ TC 00:00:41:17

- FFmpeg example:

      ffmpeg -i input.mov \
        -c copy \
        -timecode 00:00:41:17 \
        output.mov

This adds a timecode track beginning at 00:00:41:17, which Shotgun maps to frame 1001.
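For start frames other than 1001, the timecode can be derived rather than worked out by hand. The sketch below goes from a frame number to a timecode and assembles the same FFmpeg arguments shown above. The helper names `frame_to_timecode` and `build_timecode_command` are hypothetical, and the code again assumes non-drop-frame timecode at an integer frame rate.

```python
def frame_to_timecode(frame: int, fps: int) -> str:
    """Format a frame number as non-drop-frame SMPTE timecode (HH:MM:SS:FF)."""
    if frame < 0:
        raise ValueError("frame must be non-negative")
    total_seconds, ff = divmod(frame, fps)
    total_minutes, ss = divmod(total_seconds, 60)
    hh, mm = divmod(total_minutes, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def build_timecode_command(src: str, dst: str, start_frame: int, fps: int) -> list[str]:
    """Build the argument list for an FFmpeg stream copy with a start timecode."""
    return [
        "ffmpeg", "-i", src,
        "-c", "copy",
        "-timecode", frame_to_timecode(start_frame, fps),
        dst,
    ]


print(" ".join(build_timecode_command("input.mov", "output.mov", 1001, 24)))
```

The argument list can be passed to `subprocess.run` as-is; building a list rather than a shell string avoids quoting problems with file names that contain spaces.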

Ensuring the Correct End Frame

Shotgun infers the last frame from the movie’s duration. To end on frame 1064:

- Frame count = 1064 – 1001 + 1 = 64 frames

- Duration = 64 ÷ 24 fps ≈ 2.667 s

FFmpeg trim example:

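    ffmpeg -i input.mov \
      -c copy \
      -timecode 00:00:41:17 \
      -t 00:00:02.667 \
      output_trimmed.mov

This results in a 64-frame clip (1001→1064) at 24 fps. Because 2.667 s is a rounded value of 64 ÷ 24, it is worth checking the frame count of the output (for example with ffprobe).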
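The frame-count and duration arithmetic is easy to get off by one, because the range is inclusive at both ends. A small sketch, using a hypothetical helper `clip_length` and an exact `Fraction` so that rounding only happens when the value is formatted for display:

```python
from fractions import Fraction


def clip_length(start_frame: int, end_frame: int, fps: int) -> tuple[int, Fraction]:
    """Return (frame count, duration in seconds) for an inclusive frame range."""
    if end_frame < start_frame:
        raise ValueError("end_frame must not precede start_frame")
    frame_count = end_frame - start_frame + 1  # both endpoints are included
    return frame_count, Fraction(frame_count, fps)


frames, duration = clip_length(1001, 1064, 24)
print(frames, f"{float(duration):.3f}")  # 64 2.667
```

Keeping the duration as a fraction (here 8/3 s) makes it clear that the value passed to -t is an approximation of the exact clip length.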

-

Aider.chat – A free, open-source AI pair-programming CLI tool

Aider enables developers to interactively generate, modify, and test code by leveraging both cloud-hosted and local LLMs directly from the terminal or within an IDE. Key capabilities include comprehensive codebase mapping, support for over 100 programming languages, automated git commit messages, voice-to-code interactions, and built-in linting and testing workflows. Installation is straightforward via pip or uv, and while the tool itself has no licensing cost, actual usage costs stem from the underlying LLM APIs, which are billed separately by providers like OpenAI or Anthropic.

Key Features

- Cloud & Local LLM Support

  Connect to most major LLM providers out of the box, or run models locally for privacy and cost control.

- Codebase Mapping

  Automatically indexes all project files so that even large repositories can be edited contextually.

- 100+ Language Support

  Works with Python, JavaScript, Rust, Ruby, Go, C++, PHP, HTML, CSS, and dozens more.

- Git Integration

  Generates sensible commit messages and automates diffs/undo operations through familiar git tooling.

- Voice-to-Code

  Speak commands to Aider to request features, tests, or fixes without typing.

- Images & Web Pages

  Attach screenshots, diagrams, or documentation URLs to provide visual context for edits.

- Linting & Testing

  Runs lint and test suites automatically after each change, and can fix issues it detects.

-

SourceTree vs Github Desktop – Which one to use

Sourcetree and GitHub Desktop are both free, GUI-based Git clients aimed at simplifying version control for developers. While they share the same core purpose—making Git more accessible—they differ in features, UI design, integration options, and target audiences.

Installation & Setup

- Sourcetree

  - Download: https://www.sourcetreeapp.com/

  - Supported OS: Windows 10+, macOS 10.13+

  - Prerequisites: Comes bundled with its own Git, or can be pointed to a system Git install.

  - Initial Setup: Wizard guides SSH key generation, authentication with Bitbucket/GitHub/GitLab.

- GitHub Desktop

  - Download: https://desktop.github.com/

  - Supported OS: Windows 10+, macOS 10.15+

  - Prerequisites: Bundled Git; seamless login with GitHub.com or GitHub Enterprise.

  - Initial Setup: One-click sign-in with GitHub; auto-syncs repositories from your GitHub account.

Feature Comparison

| Feature | Sourcetree | GitHub Desktop |
| --- | --- | --- |
| Branch Visualization | Detailed graph view with drag-and-drop for rebasing/merging | Linear graph, simpler but less configurable |
| Staging & Commit | File-by-file staging, inline diff view | All-or-nothing staging, side-by-side diff |
| Interactive Rebase | Full support via UI | Basic support via command line only |
| Conflict Resolution | Built-in merge tool integration (DiffMerge, Beyond Compare) | Contextual conflict editor with choice panels |
| Submodule Management | Native submodule support | Limited; requires CLI |
| Custom Actions / Hooks | Define custom actions (e.g., launch scripts) | No UI for custom Git hooks |
| Git Flow / Hg Flow | Built-in support | None |
| Performance | Can lag on very large repos | Generally snappier on medium-sized repos |
| Memory Footprint | Higher RAM usage | Lightweight |
| Platform Integration | Atlassian Bitbucket, Jira | Deep GitHub.com / Enterprise integration |
| Learning Curve | Steeper for beginners | Beginner-friendly |

FEATURED POSTS

-

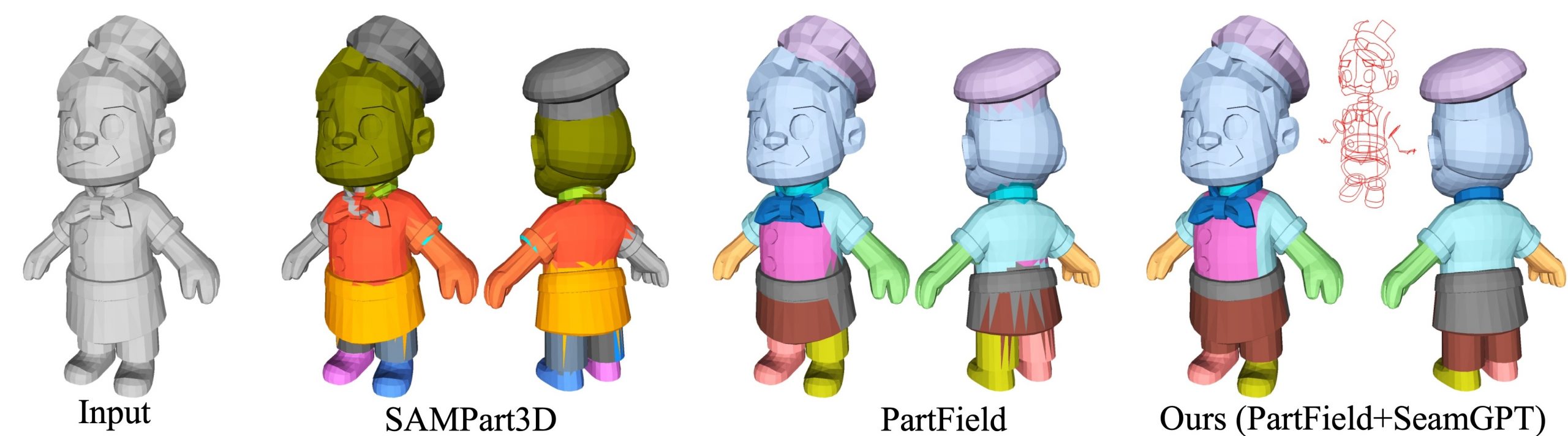

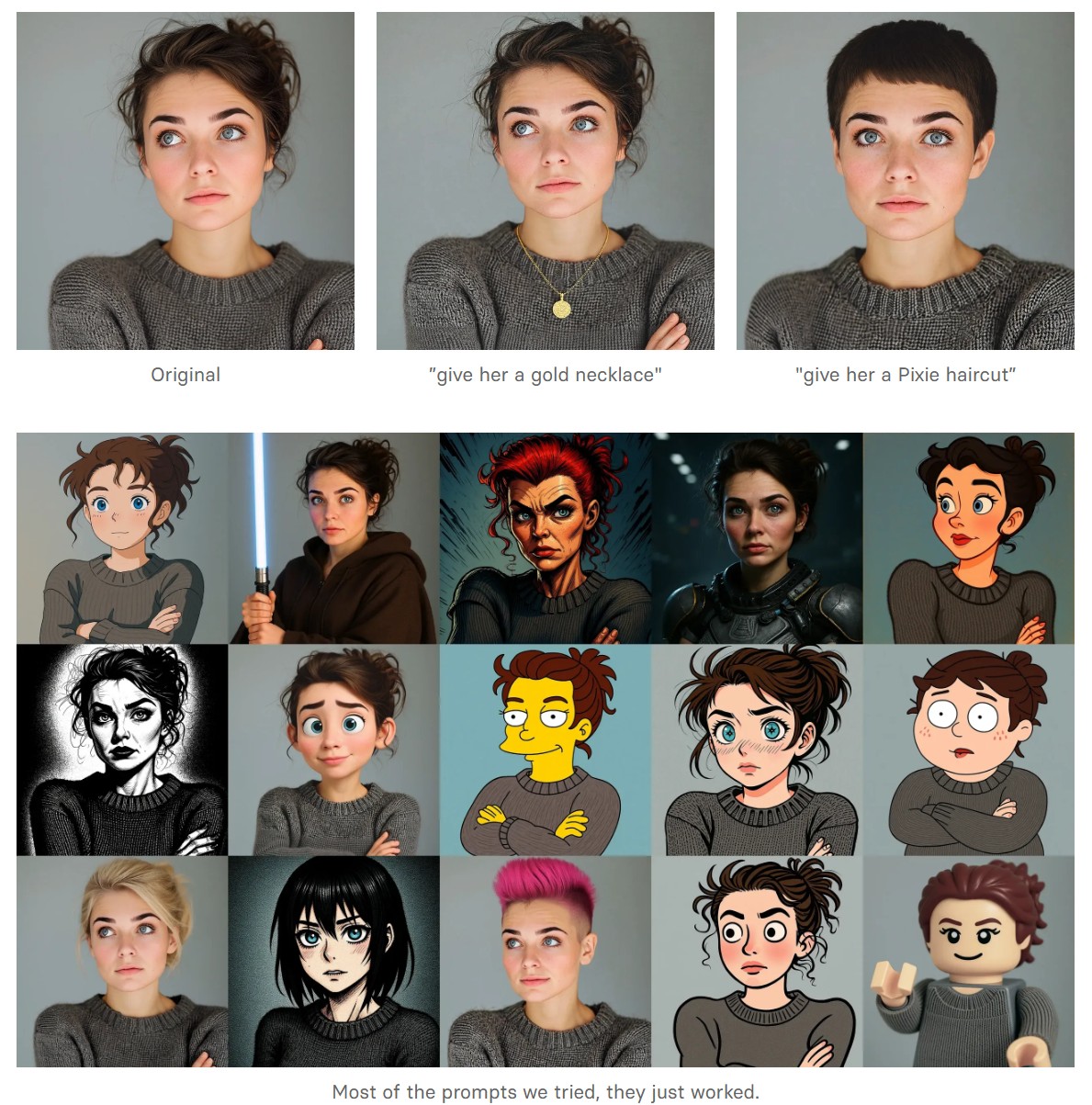

Black Forest Labs released FLUX.1 Kontext

https://replicate.com/blog/flux-kontext

https://replicate.com/black-forest-labs/flux-kontext-pro

There are three models. Two are available now, and a third, open-weight version is coming soon:

- FLUX.1 Kontext [pro]: State-of-the-art performance for image editing. High-quality outputs, great prompt following, and consistent results.

- FLUX.1 Kontext [max]: A premium model that brings maximum performance, improved prompt adherence, and high-quality typography generation without compromise on speed.

- Coming soon: FLUX.1 Kontext [dev]: An open-weight, guidance-distilled version of Kontext.

We’re so excited with what Kontext can do, we’ve created a collection of models on Replicate to give you ideas:

- Multi-image kontext: Combine two images into one.

- Portrait series: Generate a series of portraits from a single image.

- Change haircut: Change a person’s hair style and color.

- Iconic locations: Put yourself in front of famous landmarks.

- Professional headshot: Generate a professional headshot from any image.

-

AI Data Laundering: How Academic and Nonprofit Researchers Shield Tech Companies from Accountability

“Simon Willison created a Datasette browser to explore WebVid-10M, one of the two datasets used to train the video generation model, and quickly learned that all 10.7 million video clips were scraped from Shutterstock, watermarks and all.”

“In addition to the Shutterstock clips, Meta also used 10 million video clips from this 100M video dataset from Microsoft Research Asia. It’s not mentioned on their GitHub, but if you dig into the paper, you learn that every clip came from over 3 million YouTube videos.”

“It’s become standard practice for technology companies working with AI to commercially use datasets and models collected and trained by non-commercial research entities like universities or non-profits.”

“Like with the artists, photographers, and other creators found in the 2.3 billion images that trained Stable Diffusion, I can’t help but wonder how the creators of those 3 million YouTube videos feel about Meta using their work to train their new model.”