LATEST POSTS

-

Blackmagic DaVinci Resolve 20

DaVinci Resolve 20 is a major update with more than 100 new features, including powerful AI tools designed to assist with every stage of your workflow. Use AI IntelliScript to create timelines based on a text script, AI Animated Subtitles to animate words as they are spoken, and AI Multicam SmartSwitch to create a timeline with camera angles based on speaker detection. The cut and edit pages also include a dedicated keyframe editor and voiceover palettes, and AI Fairlight IntelliCut can remove silence and checkerboard dialogue between speakers. In Fusion, explore advanced multi-layer compositing workflows. The Color Warper now includes Chroma Warp, and Magic Mask and Depth Map have received major updates.

https://www.blackmagicdesign.com/products/davinciresolve

-

ZAppLink – a plugin that allows you to seamlessly integrate your favorite image editing software — such as Adobe Photoshop — into your ZBrush workflow

While in ZBrush, call up your image editing package and use it to modify the active ZBrush document or tool, then go straight back into ZBrush.

ZAppLink can work on different saved points of view for your model. What you paint in your image editor is then projected to the model’s PolyPaint or texture for more creative freedom.

With ZAppLink you can combine ZBrush’s powerful capabilities with all the painting power of the PSD-capable 2D editor of your choice, making it easy to create stunning textures.

ZAppLink features

- Send your document view to the PSD file editor of your choice for texture creation and modification: Photoshop, GIMP and more!

- Projections in orthogonal or perspective mode.

- Multiple view support: With a single click, send your front, back, left, right, top, bottom and two custom views in dedicated layers to your 2D editor. When your painting is done, automatically reproject all the views back in ZBrush!

- Create character sheets based on your saved views with a single click.

- ZAppLink works with PolyPaint, UV-based textures and canvas pixols.

-

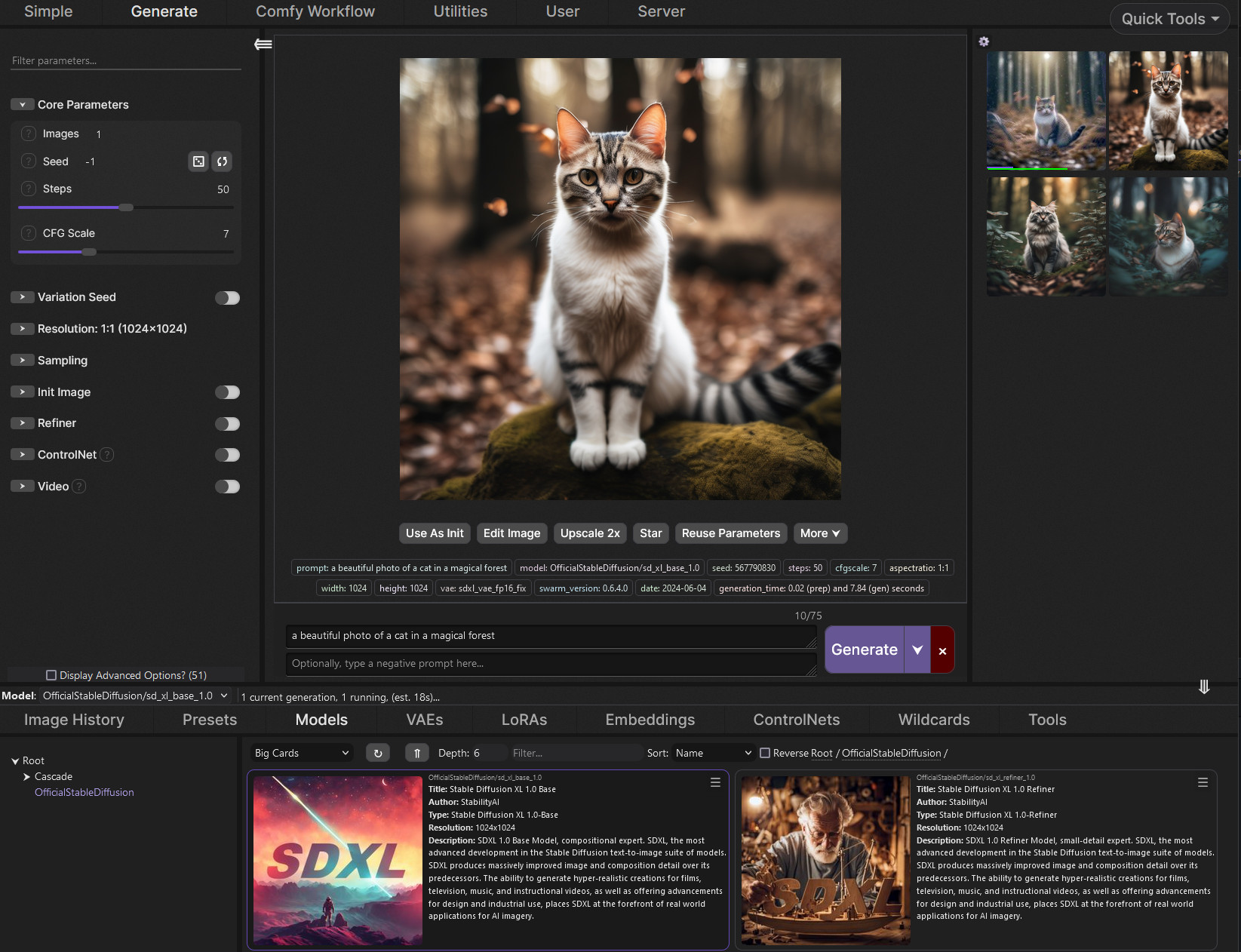

SwarmUI.net – A free, open source, modular AI image generation Web-User-Interface

https://github.com/mcmonkeyprojects/SwarmUI

A modular AI image generation web user interface, with an emphasis on making power tools easily accessible, on high performance, and on extensibility. Supports AI image models (Stable Diffusion, Flux, etc.) and AI video models (LTX-V, Hunyuan Video, Cosmos, Wan, etc.), with plans to support e.g. audio and more in the future.

SwarmUI by default runs entirely locally on your own computer. It does not collect any data from you.

SwarmUI is 100% Free-and-Open-Source software, under the MIT License. You can do whatever you want with it.

-

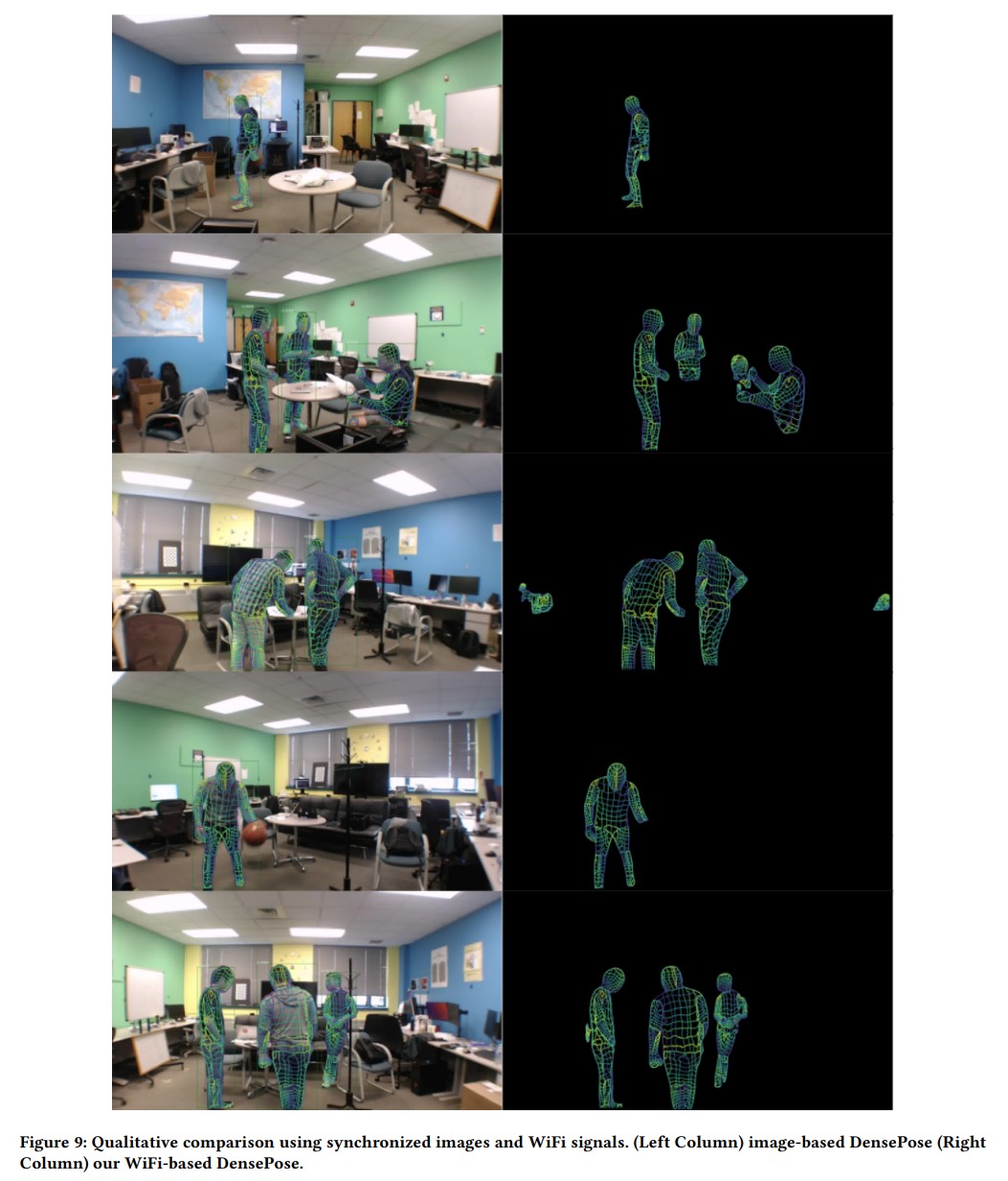

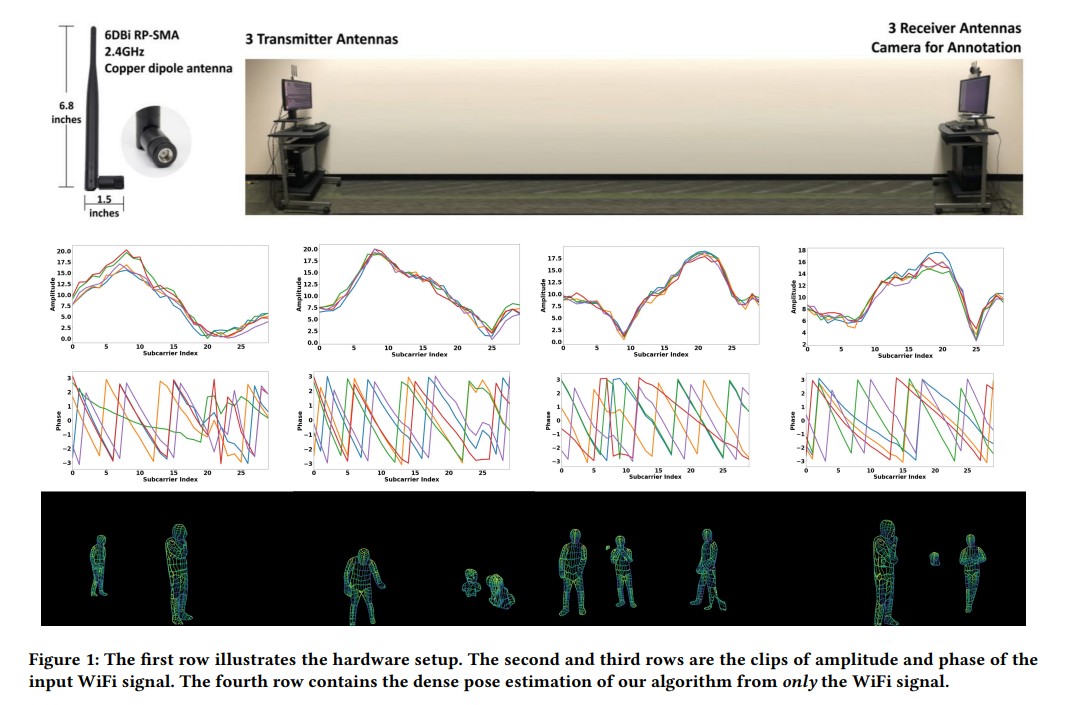

DensePose From WiFi using ML

https://arxiv.org/pdf/2301.00250

https://www.xrstager.com/en/ai-based-motion-detection-without-cameras-using-wifi

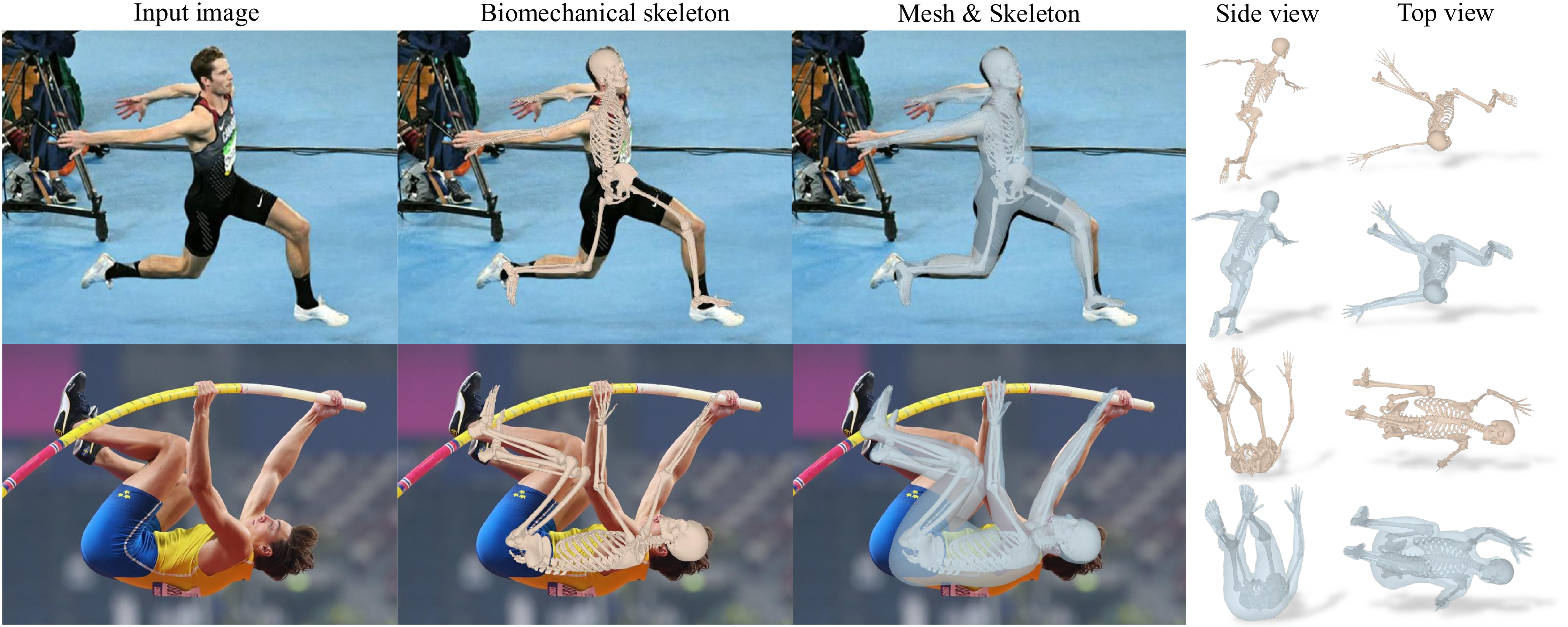

Advances in computer vision and machine learning techniques have led to significant development in 2D and 3D human pose estimation using RGB cameras, LiDAR, and radars. However, human pose estimation from images is adversely affected by common issues such as occlusion and lighting, which can significantly hinder performance in various scenarios.

Radar and LiDAR technologies, while useful, require specialized hardware that is both expensive and power-intensive. Moreover, deploying these sensors in non-public areas raises important privacy concerns, further limiting their practical applications.

To overcome these limitations, recent research has explored the use of WiFi antennas, which are one-dimensional sensors, for tasks like body segmentation and key-point body detection. Building on this idea, the current study expands the use of WiFi signals in combination with deep learning architectures—techniques typically used in computer vision—to estimate dense human pose correspondence.

In this work, a deep neural network was developed to map the phase and amplitude of WiFi signals to UV coordinates across 24 human regions. The results demonstrate that the model is capable of estimating the dense pose of multiple subjects with performance comparable to traditional image-based approaches, despite relying solely on WiFi signals. This breakthrough paves the way for developing low-cost, widely accessible, and privacy-preserving algorithms for human sensing.

-

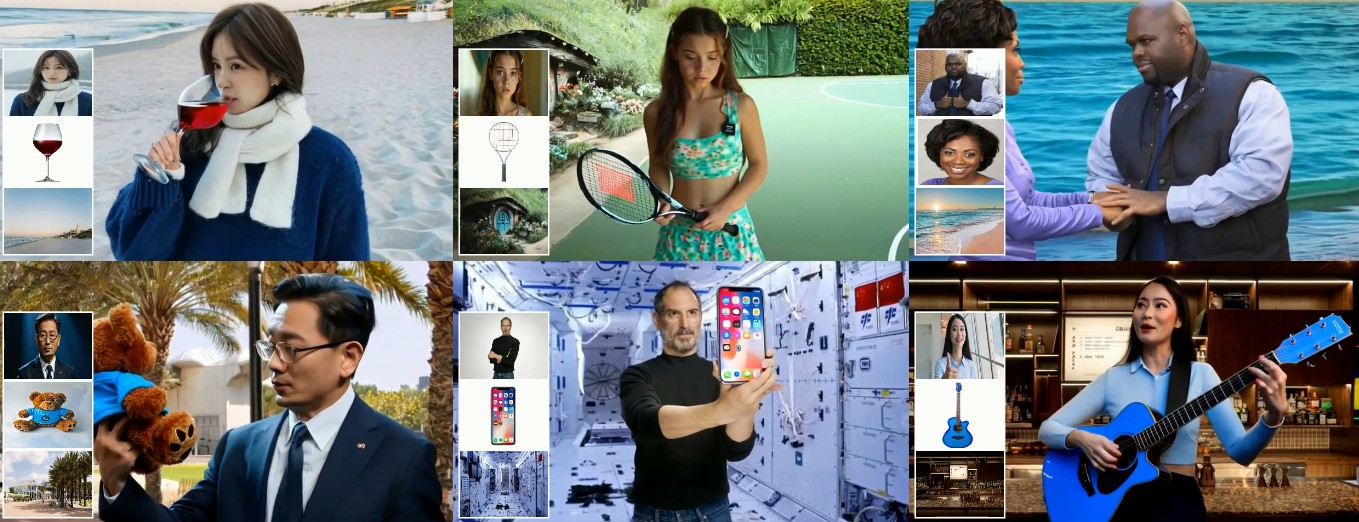

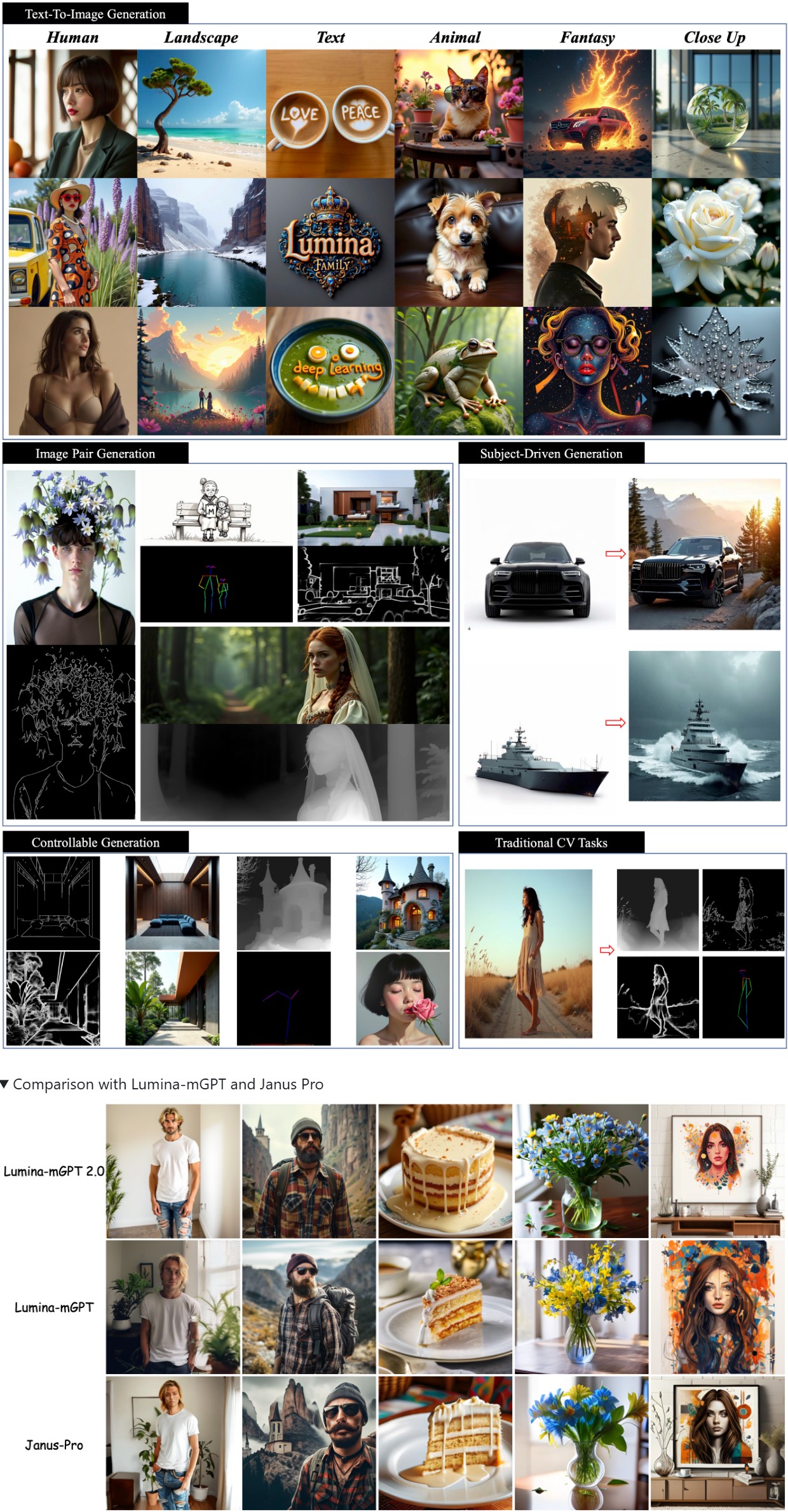

Lumina-mGPT 2.0 – Stand-alone Autoregressive Image Modeling

A stand-alone, decoder-only autoregressive model, trained from scratch, that unifies a broad spectrum of image generation tasks, including text-to-image generation, image pair generation, subject-driven generation, multi-turn image editing, controllable generation, and dense prediction.

https://github.com/Alpha-VLLM/Lumina-mGPT-2.0

FEATURED POSTS

-

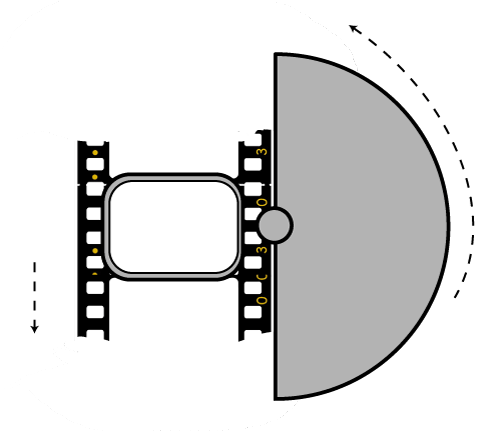

Photography basics: Shutter angle and shutter speed and motion blur

http://www.shutterangle.com/2012/cinematic-look-frame-rate-shutter-speed/

https://www.cinema5d.com/global-vs-rolling-shutter

https://www.wikihow.com/Choose-a-Camera-Shutter-Speed

The shutter is the device that controls how long light coming through the lens reaches the film or sensor.

Shutter speed is how long the shutter stays open, which also determines motion blur: the longer it stays open, the blurrier anything that moves appears in the captured image.

The shutter angle describes what fraction of each frame's duration the shutter is open. As a reference, shooting at 24fps with a 180-degree shutter angle gives a shutter speed of 1/48th of a second (about 0.0208 seconds of exposure), which produces motion blur similar to what we perceive with the naked eye.

Shutter speed is expressed as an angle for historical reasons: the original exposure mechanism in film cameras was a rotating, pie-shaped disc (a mirror in reflex cameras) placed between the lens and the film.

A 180-degree shutter blocks the light for half of each rotation and lets it through for the other half. At 270 degrees the blocking part of the disc is a quarter pie, which gives a longer exposure time (three quarters open, one quarter closed). At 90 degrees the blocking part is a three-quarter pie, which gives a shorter exposure time (one quarter open, three quarters closed).
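The relationship between shutter angle, frame rate and exposure time described above can be written as exposure = (angle / 360) / fps. Here is a minimal Python sketch of that arithmetic. The function names `exposure_time` and `shutter_angle` are made up for this illustration and do not come from any camera API.

```python
def exposure_time(shutter_angle_deg: float, fps: float) -> float:
    """Exposure time in seconds for a given shutter angle and frame rate.

    The shutter is open for (angle / 360) of each frame, and each frame
    lasts 1 / fps seconds.
    """
    if not 0 < shutter_angle_deg <= 360:
        raise ValueError("shutter angle must be in (0, 360] degrees")
    if fps <= 0:
        raise ValueError("fps must be positive")
    return (shutter_angle_deg / 360.0) / fps


def shutter_angle(exposure_s: float, fps: float) -> float:
    """Inverse conversion: shutter angle in degrees for an exposure time."""
    if exposure_s <= 0 or fps <= 0:
        raise ValueError("exposure time and fps must be positive")
    return exposure_s * fps * 360.0


if __name__ == "__main__":
    # Compare the three angles discussed in the text at 24fps.
    for angle in (90, 180, 270):
        t = exposure_time(angle, 24)
        print(f"{angle:>3} degrees at 24fps -> {t:.4f} s")
```

At 24fps, 180 degrees works out to 1/48 s, 90 degrees to 1/96 s and 270 degrees to 1/32 s, which matches the "half open", "quarter open" and "three quarters open" descriptions.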


-

Ross Pettit on The Agile Manager – How tech firms went for prioritizing cash flow instead of talent (and artists)

For years, tech firms were fighting a war for talent. Now they are waging war on talent.

This shift has led to a weakening of the social contract between employees and employers, with culture and employee values being sidelined in favor of financial discipline and free cash flow.

The operating environment has changed from a high tolerance for failure (where cheap capital and willing spenders accepted slipped dates and feature lag) to a very low – if not zero – tolerance for failure (fiscal discipline is in vogue again).

While preventing and containing mistakes staves off shocks to the income statement, it doesn’t fundamentally reduce costs. Years of payroll bloat – aggressive hiring, aggressive comp packages to attract and retain people – make labor the biggest cost in tech.

…Of course, companies can reduce their labor force through natural attrition. Other labor policy changes – return to office mandates, contraction of fringe benefits, reduction of job promotions, suspension of bonuses and comp freezes – encourage more people to exit voluntarily. It’s cheaper to let somebody self-select out than it is to lay them off.

…Employees recruited in more recent years from outside the ranks of tech were given the expectation that we’ll teach you what you need to know, we want you to join because we value what you bring to the table. That is no longer applicable. Runway for individual growth is very short in zero-tolerance-for-failure operating conditions. Job preservation, at least in the short term for this cohort, comes from completing corporate training and acquiring professional certifications. Training through community or experience is not in the cards.

…The ability to perform competently in multiple roles, the extra-curriculars, the self-directed enrichment, the ex-company leadership – all these things make no matter. The calculus is what you got paid versus how you performed on objective criteria relative to your cohort. Nothing more.

…Here is where the change in the social contract is perhaps the most blatant. In the “destination employer” years, the employee invested in the community and its values, and the employer rewarded the loyalty of its employees through things like runway for growth (stretch roles and sponsored work innovation) and tolerance for error (valuing demonstrable learning over perfection in execution). No longer.

http://www.rosspettit.com/2024/08/for-years-tech-was-fighting-war-for.html

-

What is physically correct lighting all about?

http://gamedev.stackexchange.com/questions/60638/what-is-physically-correct-lighting-all-about

2012-08 Nathan Reed wrote:

Physically-based shading means leaving behind phenomenological models, like the Phong shading model, which are simply built to “look good” subjectively without being based on physics in any real way, and moving to lighting and shading models that are derived from the laws of physics and/or from actual measurements of the real world, and rigorously obey physical constraints such as energy conservation.

For example, in many older rendering systems, shading models included separate controls for specular highlights from point lights and reflection of the environment via a cubemap. You could create a shader with the specular and the reflection set to wildly different values, even though those are both instances of the same physical process. In addition, you could set the specular to any arbitrary brightness, even if it would cause the surface to reflect more energy than it actually received.

In a physically-based system, both the point light specular and the environment reflection would be controlled by the same parameter, and the system would be set up to automatically adjust the brightness of both the specular and diffuse components to maintain overall energy conservation. Moreover you would want to set the specular brightness to a realistic value for the material you’re trying to simulate, based on measurements.
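To make the energy conservation idea concrete, here is a deliberately simplified Python sketch. It is not taken from the quoted answer and is not a real BRDF (no Fresnel term, no microfacet model): it only assumes that a single hypothetical `specular_reflectance` parameter decides how much incoming light is reflected specularly, and that only the remainder is available to the diffuse term. The function and parameter names are invented for the illustration.

```python
def shade(irradiance: float, albedo: float, specular_reflectance: float):
    """Toy energy-conserving split of incoming light into diffuse and specular.

    Assumption for illustration only: light reflected specularly is no longer
    available for diffuse reflection, so the diffuse term is scaled by
    (1 - specular_reflectance).
    """
    for name, value in (("albedo", albedo),
                        ("specular_reflectance", specular_reflectance)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    if irradiance < 0.0:
        raise ValueError("irradiance must be non-negative")

    specular = specular_reflectance * irradiance
    diffuse = albedo * (1.0 - specular_reflectance) * irradiance
    return diffuse, specular


if __name__ == "__main__":
    # Raising the specular parameter automatically lowers the diffuse output.
    for spec in (0.04, 0.5, 1.0):
        diffuse, specular = shade(1.0, 0.8, spec)
        print(f"specular={spec}: diffuse={diffuse:.3f}, "
              f"specular={specular:.3f}, total={diffuse + specular:.3f}")
```

Because both inputs are clamped to the range 0 to 1, the sum of the two outputs can never exceed the incoming irradiance, which is the constraint an ad-hoc model with independent specular and diffuse sliders does not enforce.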

Physically-based lighting or shading includes physically-based BRDFs, which are usually based on microfacet theory, and physically correct light transport, which is based on the rendering equation (although heavily approximated in the case of real-time games).

It also includes the necessary changes in the art process to make use of these features. Switching to a physically-based system can cause some upsets for artists. First of all it requires full HDR lighting with a realistic level of brightness for light sources, the sky, etc. and this can take some getting used to for the lighting artists. It also requires texture/material artists to do some things differently (particularly for specular), and they can be frustrated by the apparent loss of control (e.g. locking together the specular highlight and environment reflection as mentioned above; artists will complain about this). They will need some time and guidance to adapt to the physically-based system.

On the plus side, once artists have adapted and gained trust in the physically-based system, they usually end up liking it better, because there are fewer parameters overall (less work for them to tweak). Also, materials created in one lighting environment generally look fine in other lighting environments too. This is unlike more ad-hoc models, where a set of material parameters might look good during daytime, but it comes out ridiculously glowy at night, or something like that.

Here are some resources to look at for physically-based lighting in games:

- SIGGRAPH 2013 Physically Based Shading Course, particularly the background talk by Naty Hoffman at the beginning. You can also check out the previous incarnations of this course for more resources.

- Sébastien Lagarde, "Adopting a physically-based shading model" and "Feeding a physically-based shading model".

- And of course, I would be remiss if I didn’t mention Physically-Based Rendering by Pharr and Humphreys, an amazing reference on this whole subject and well worth your time, although it focuses on offline rather than real-time rendering.