LATEST POSTS

-

HumanDiT – Pose-Guided Diffusion Transformer for Long-form Human Motion Video Generation

https://agnjason.github.io/HumanDiT-page

By inputting a single character image and template pose video, our method can generate vocal avatar videos featuring not only pose-accurate rendering but also realistic body shapes.

-

DynVFX – Augmenting Real Videos with Dynamic Content

Given an input video and a simple user-provided text instruction describing the desired content, our method synthesizes dynamic objects or complex scene effects that naturally interact with the existing scene over time. The position, appearance, and motion of the new content are seamlessly integrated into the original footage while accounting for camera motion, occlusions, and interactions with other dynamic objects in the scene, resulting in a cohesive and realistic output video.

https://dynvfx.github.io/sm/index.html

-

ByteDance OmniHuman-1

https://omnihuman-lab.github.io

They propose an end-to-end multimodality-conditioned human video generation framework named OmniHuman, which can generate human videos based on a single human image and motion signals (e.g., audio only, video only, or a combination of audio and video). In OmniHuman, they introduce a multimodality motion conditioning mixed training strategy, allowing the model to benefit from scaling up mixed-conditioning data. This overcomes the issue that previous end-to-end approaches faced due to the scarcity of high-quality data. OmniHuman significantly outperforms existing methods, generating extremely realistic human videos based on weak signal inputs, especially audio. It supports image inputs of any aspect ratio, whether they are portraits, half-body, or full-body images, delivering more lifelike and high-quality results across various scenarios.

-

Conda – an open source management system for installing multiple versions of software packages and their dependencies into a virtual environment

https://anaconda.org/anaconda/conda

https://docs.conda.io/projects/conda/en/latest/user-guide/getting-started.html

NOTE: The company recently changed its TOS, and this service now incurs costs for teams above a threshold.

Use MicroMamba instead.

-

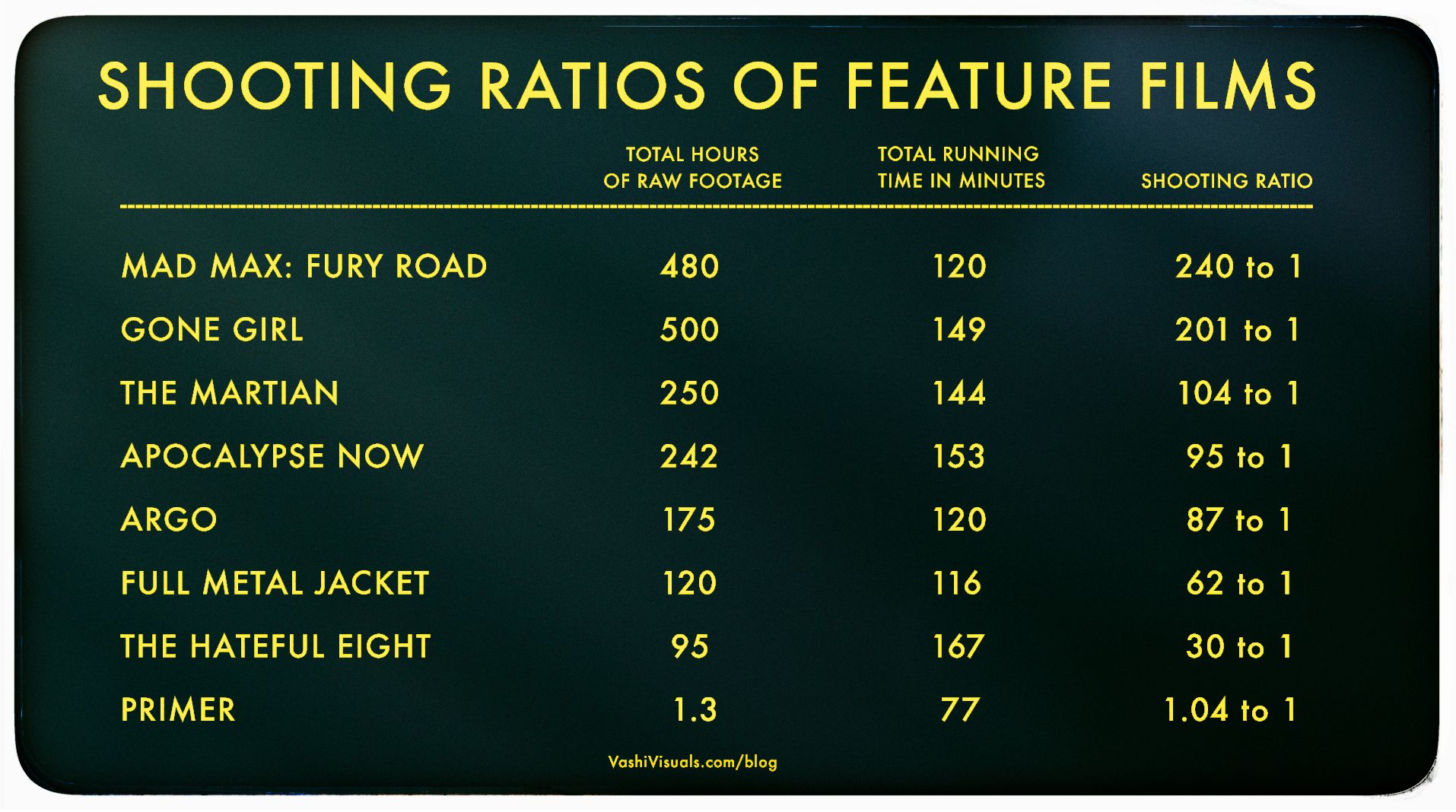

Vashi Nedomansky – Shooting ratios of feature films

In the Golden Age of Hollywood (1930-1959), a 10:1 shooting ratio was the norm—a 90-minute film meant about 15 hours of footage. Directors like Alfred Hitchcock famously kept it tight with a 3:1 ratio, giving studios little wiggle room in the edit.
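The arithmetic behind these figures can be sketched in a few lines. This is only an illustration: the function name `footage_hours` is hypothetical, and it assumes the shooting ratio is simply footage shot divided by final runtime.

```python
def footage_hours(runtime_minutes: float, shooting_ratio: float) -> float:
    """Hours of footage shot for a given final runtime and shooting ratio.

    A ratio of 10 means 10 minutes shot for every minute in the final cut.
    """
    return runtime_minutes * shooting_ratio / 60.0


# 90-minute feature at the Golden Age norm of 10:1
golden_age = footage_hours(90, 10)

# Same runtime at the 3:1 ratio attributed to Hitchcock
hitchcock = footage_hours(90, 3)

print(f"10:1 -> {golden_age} hours, 3:1 -> {hitchcock} hours")
```

At 3:1 the same 90-minute film leaves only 4.5 hours of material, which is why the edit had so little room to change.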

Fast forward to today: the digital era has sent shooting ratios skyrocketing. Affordable cameras roll endlessly, capturing multiple takes, resets, and everything in between. Gone are the disciplined “Action to Cut” days of film.

https://en.wikipedia.org/wiki/Shooting_ratio

-

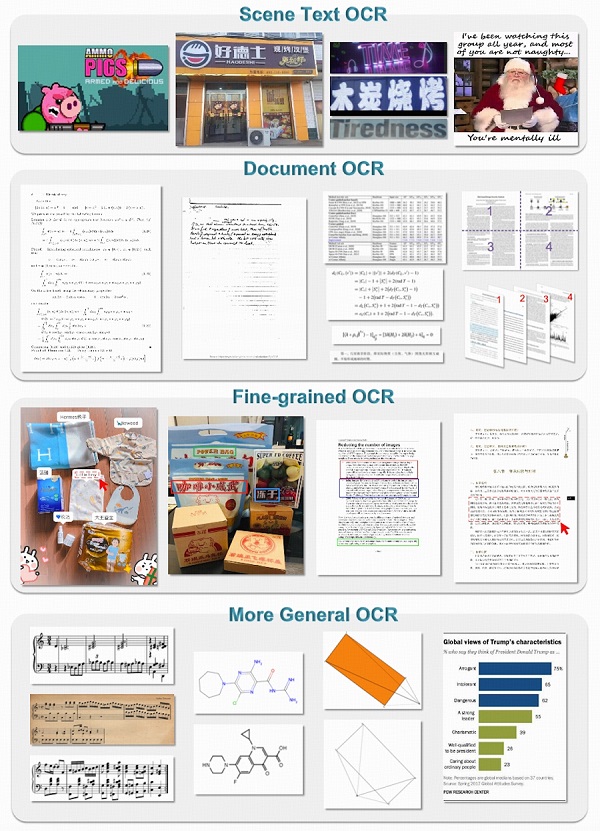

General OCR Theory – Towards OCR-2.0 via a Unified End-to-end Model – HF Transformers implementation

https://huggingface.co/stepfun-ai/GOT-OCR-2.0-hf

GOT-OCR2 works on a wide range of tasks, including plain document OCR, scene text OCR, formatted document OCR, and even OCR for tables, charts, mathematical formulas, geometric shapes, molecular formulas and sheet music.

-

QNTM – Developer Philosophy

- Avoid, at all costs, arriving at a scenario where the ground-up rewrite starts to look attractive

- Aim to be 90% done in 50% of the available time

- Automate good practice

- Think about pathological data

- There is usually a simpler way to write it

- Write code to be testable

- It is insufficient for code to be provably correct; it should be obviously, visibly, trivially correct

-

Arminas Valunas – “Coca-Cola: Wherever you are.”

Arminas created this using the Juggernaut XL model and the QR Code Monster SDXL ControlNet.

His pipeline:

Static Images – Forge UI.

Upscaled with Leonardo AI universal upscaler.

Animated with Runway ML and Minimax.

Video upscale – Topaz Video AI.

Composited in Adobe Premiere.

Juggernaut XL download here:

https://civitai.com/models/133005/juggernaut-xl

QR Code Monster SDXL:

https://civitai.com/models/197247?modelVersionId=221829

FEATURED POSTS

-

59 AI Filmmaking Tools For Your Workflow

https://curiousrefuge.com/blog/ai-filmmaking-tools-for-filmmakers

- Runway

- PikaLabs

- Pixverse (free)

- Haiper (free)

- Moonvalley (free)

- Morph Studio (free)

- SORA

- Google Veo

- Stable Video Diffusion (free)

- Leonardo

- Krea

- Kaiber

- Letz.AI

- Midjourney

- Ideogram

- DALL-E

- Firefly

- Stable Diffusion

- Google Imagen 3

- Polycam

- LTX Studio

- Simulon

- Elevenlabs

- Auphonic

- Adobe Enhance

- Adobe’s AI Rotoscoping

- Adobe Photoshop Generative Fill

- Canva Magic Brush

- Akool

- Topaz Labs

- Magnific.AI

- FreePik

- BigJPG

- LeiaPix

- Move AI

- Mootion

- Heygen

- Synthesia

- ChatGPT-4

- Claude 3

- Nolan AI

- Google Gemini

- Meta Llama 3

- Suno

- Udio

- Stable Audio

- Soundful

- Google MusicLM

- Viggle

- SyncLabs

- Lalamu

- LensGo

- D-ID

- WonderStudio

- Cuebric

- Blockade Labs

- ChatGPT-4o

- Luma Dream Machine

- Pallaidium (free)

-

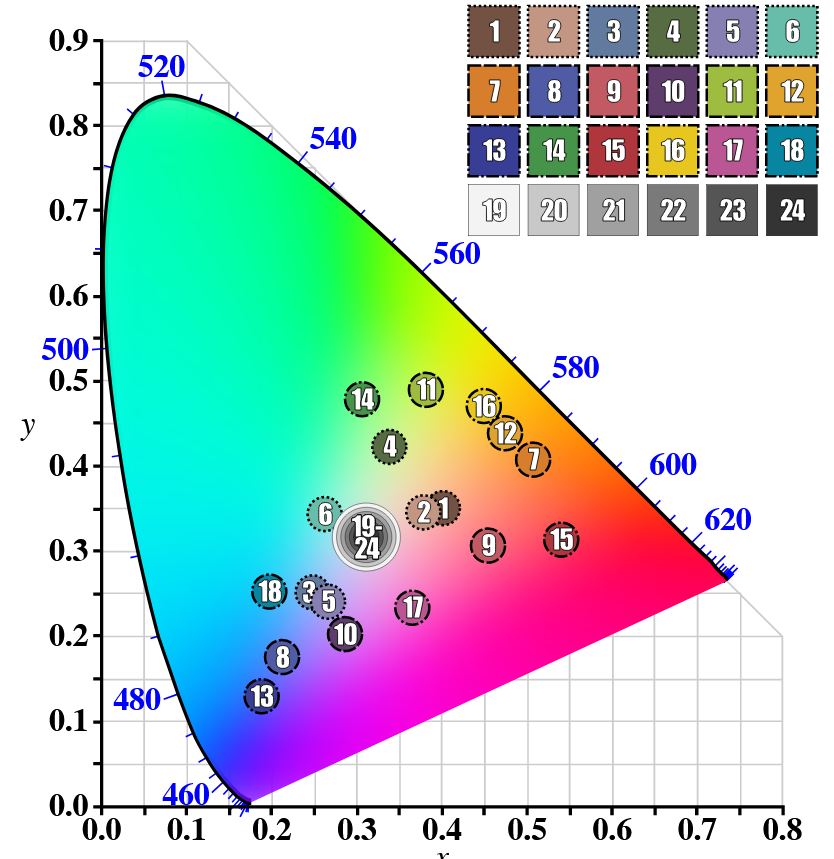

GretagMacbeth Color Checker Numeric Values and Middle Gray

The human eye does not perceive "half brightness" at 50% of the linear light energy in a scene (the linear values found in nature); perceptual middle gray sits at about 18% of the overall brightness. We are biased to perceive more information in dark and high-contrast areas. A Macbeth chart helps calibrate a photographic capture back to this “human perspective” of the world.

https://en.wikipedia.org/wiki/Middle_gray

In photography, painting, and other visual arts, middle gray or middle grey is a tone that is perceptually about halfway between black and white on a lightness scale; in photography and printing, it is typically defined as 18% reflectance in visible light.

Light meters, cameras, and pictures are often calibrated using an 18% gray card or a color reference card such as a ColorChecker. On the assumption that 18% is similar to the average reflectance of a scene, a grey card can be used to estimate the required exposure of the film.
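To see why 18% reflectance reads as "halfway", a small sketch can convert it to a perceptual scale. The choice of CIE 1976 L* and of the sRGB transfer function is an assumption made here for illustration; neither is named in the text above, and the function names are hypothetical.

```python
def cie_lightness(y: float) -> float:
    """CIE 1976 L* (0 to 100) from relative luminance or reflectance y in [0, 1]."""
    epsilon = 216 / 24389  # threshold between the linear and cube-root segments
    kappa = 24389 / 27     # slope of the linear segment
    if y > epsilon:
        return 116.0 * y ** (1.0 / 3.0) - 16.0
    return kappa * y


def srgb_encode(linear: float) -> float:
    """Encode a linear value in [0, 1] with the sRGB transfer function."""
    if linear <= 0.0031308:
        return 12.92 * linear
    return 1.055 * linear ** (1.0 / 2.4) - 0.055


middle_gray = 0.18  # 18% reflectance

lightness = cie_lightness(middle_gray)                # close to 50 on a 0-100 scale
code_value = round(srgb_encode(middle_gray) * 255)    # 8-bit sRGB code value

print(f"L* = {lightness:.1f}, 8-bit sRGB value = {code_value}")
```

Under these assumptions, 18% linear reflectance lands near the midpoint of the lightness scale, while 50% linear would land noticeably above it.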

https://en.wikipedia.org/wiki/ColorChecker

-

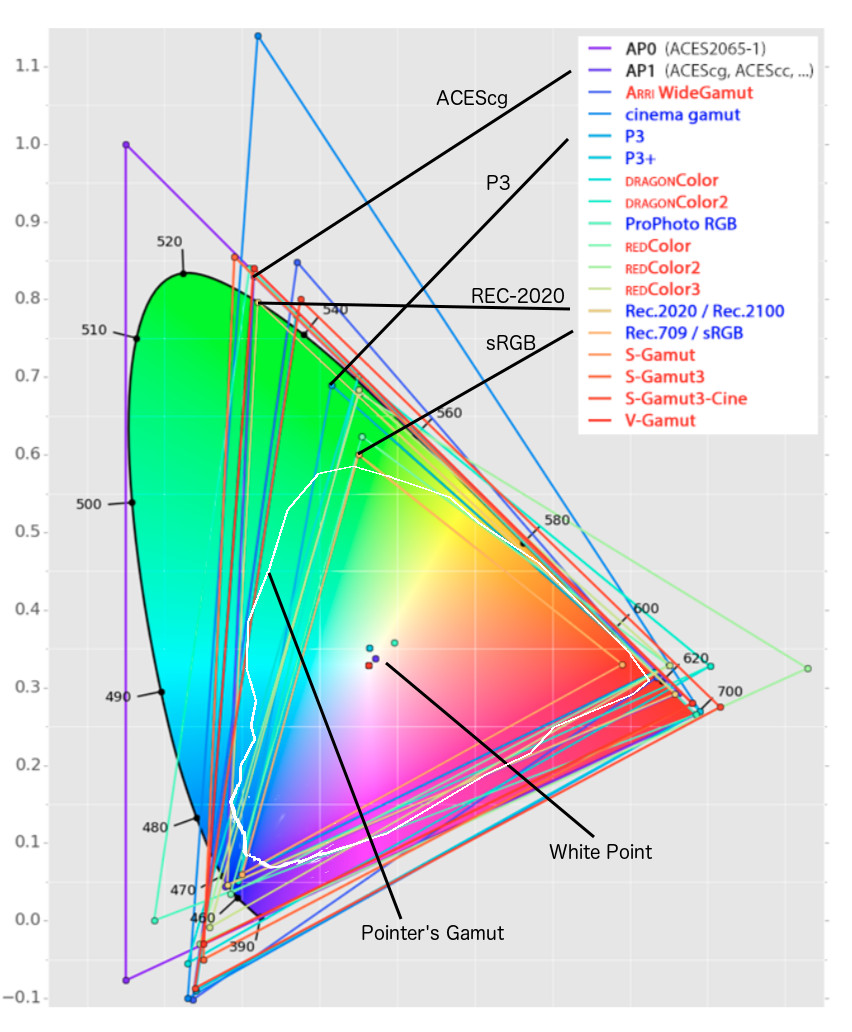

Rec-2020 – TVs’ new color gamut standard used by Dolby Vision?

https://www.hdrsoft.com/resources/dri.html#bit-depth

The dynamic range is a ratio between the maximum and minimum values of a physical measurement. Its definition depends on what the dynamic range refers to.

For a scene: Dynamic range is the ratio between the brightest and darkest parts of the scene.

For a camera: Dynamic range is the ratio of saturation to noise. More specifically, the ratio of the intensity that just saturates the camera to the intensity that just lifts the camera response one standard deviation above camera noise.

For a display: Dynamic range is the ratio between the maximum and minimum intensities emitted from the screen.

The dynamic range of real-world scenes can be quite high; ratios of 100,000:1 are common in the natural world. An HDR (High Dynamic Range) image stores pixel values that span the whole tonal range of real-world scenes. Therefore, an HDR image is encoded in a format that allows the largest range of values, e.g. floating-point values stored with 32 bits per color channel. Another characteristic of an HDR image is that it stores linear values. This means that the value of a pixel from an HDR image is proportional to the amount of light measured by the camera.
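Contrast ratios like these are often easier to compare as photographic stops, where each stop is a doubling of light. The sketch below assumes that convention (stops = log2 of the ratio); the function name and the 1,000:1 display figure are hypothetical examples, not values from the source.

```python
import math


def ratio_to_stops(contrast_ratio: float) -> float:
    """Convert a max:min contrast ratio to stops (each stop doubles the light)."""
    return math.log2(contrast_ratio)


scene_stops = ratio_to_stops(100_000)   # the natural-world ratio quoted above
display_stops = ratio_to_stops(1_000)   # hypothetical 1,000:1 display

print(f"scene: {scene_stops:.1f} stops, display: {display_stops:.1f} stops")
```

The gap between the two numbers is the range that has to be compressed or clipped when a scene is shown on such a display.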

For TVs, HDR is great, but it’s not the only new TV feature worth discussing.

-

Outpost VFX lighting tips

www.outpost-vfx.com/en/news/18-pro-tips-and-tricks-for-lighting

- Get as much information regarding your plate lighting as possible

- Always use a reference

- Replicate what is happening in real life

- Invest in a solid HDRI

- Start simple

- Observe real world lighting, photography and cinematography

- Don’t neglect the theory

- Learn the difference between realism and photo-realism

- Keep your scenes organised