LATEST POSTS

-

memerwala_londa – Ghibli-style Midjourney and Kling video

https://www.reddit.com/r/midjourney/comments/1lbblfq/ghibli_style_game_guide_included/

Made everything on Edits App

Image Generation on Midjourney

Video Generation on Kling 2.1

I used a joystick PNG to add buttons, then some ASMR video sounds to make it look more lively. I used text as buttons.

Prompts:

All prompts are in the same order as in the video.

First-person POV video game screenshot, playing as a young anime protagonist in a slightly oversized white t-shirt and knee-length blue shorts. Visible hands pushing open a sun-faded wooden door, forearms resting on the frame. In a dusty hallway mirror reflection: character’s soft Ghibli-style face with windblown hair. Inside a cozy coastal cottage: slanted sunlight through lace curtains, pastel walls with watercolor seascapes, overstuffed bookshelf spilling seashells. Foreground: ‘E: Rest’ prompt over a quilted sofa. Background: steaming teacup on a driftwood table, open window revealing distant lighthouse and Miyazaki fluffy clouds. Soft painterly textures, slight fisheye lens, identical HUD (minimap corner, health bar)

First-person POV video game screenshot, playing as a young anime protagonist in a slightly oversized white t-shirt and knee-length blue shorts. View includes visible hands gripping a steering wheel, sunlit arms resting on car door, and rearview mirror showing character’s soft Ghibli-style face with windblown hair. Driving through a vibrant coastal town: cobblestone streets, pastel houses with flower boxes, distant lighthouse. Soft painterly textures, Miyazaki skies with fluffy clouds, slight fisheye lens effect, HUD elements (minimap corner, health bar).

First-person POV video game screenshot, playing as a young protagonist in a loose white t-shirt and faded denim shorts. Visible arms holding a woven basket, sneakers stepping on rain-damp cobblestones. Walking through a chaotic Ghibli street market: cramped stalls selling glowing mushrooms, floating lanterns, and spiral-cut fruits. Fishmonger shouts while soot sprites dart between crates. Foreground: vendor handing you a peach (interactive ‘E’ prompt). Background: yakuza thugs lurking near a steaming noodle cart. Soft painterly lighting, depth of field, subtle HUD (minimap corner, health bar). Studio Ghibli meets Grand Theft Auto

First-person POV video game screenshot, playing as a young anime protagonist in a slightly oversized white t-shirt (salt-stained sleeves) and knee-length blue shorts, visible hands gripping a bamboo fishing rod. Kneeling on a mossy dock pier at sunset, arms resting on knees. Foreground: ‘E: Reel In’ prompt as line pulls taut. Background: pastel fishing boats, distant lighthouse under Miyazaki’s fluffy clouds. Glowing koi fish breaching turquoise water, soot sprites stealing bait from a tin. Identical soft painterly textures, fisheye lens effect, HUD (minimap corner, health bar).

Video Prompts:

All prompts are in the same order as in the video.

The black-haired boy strides from the rustic house toward the ocean, the camera tracking his movement in a GTA-style third-person perspective as coastal winds flutter white curtains and sunlight glimmers on distant sailboats, blending warm interior details with expanding seaside horizons under a tranquil sky.

The brown-haired boy drives a vintage blue convertible along the coastal cobblestone street, colorful flower-adorned buildings passing by as the camera follows the car’s journey toward the sunlit ocean horizon, sea breeze gently tousling his hair under a serene sky.

The young boy navigates the bustling cobblestone market, basket of oranges in arm, as vibrant stalls and fluttering awnings frame his journey, the camera tracking his focused stride through chattering crowds under swaying traditional lanterns.

A school of fish swims gracefully through crystal-clear water, sunlight filtering through the surface, coral reefs swaying gently, creating a serene underwater scene with the camera stationary.

-

Free or Open Source VFX Asset Management Systems

Several free or open-source VFX asset management systems can be used in production environments. They range in scope from lightweight tools to full-fledged pipeline frameworks. Below is a breakdown of the most notable ones and what makes them stand out.

1. Free & Open-Source VFX Asset Management Systems

1.1 OpenPype (formerly Pype)

License: Open source (MIT)

– Asset management and project structure setup

– Integrates with Maya, Houdini, Nuke, Blender, and others

– Includes publishing, versioning, and task tracking

– Web interface (OpenPype Studio) for overview and management

Strengths: Actively developed, modular and extendable, production-proven in real studios

URL: https://openpype.io/

1.2 Kitsu

License: GNU AGPL v3

– Production tracking, shot management

– Web-based interface with intuitive UX

– Built-in review and feedback system

– API for integration into pipelines

Strengths: Great for team collaboration, focuses on communication between departments

URL: https://www.cg-wire.com/kitsu

https://github.com/cgwire/kitsu

1.3 ftrack Community Edition

License: Proprietary (older versions may be available for small studios/educational users)

– Project management, review, and pipeline integration

Strengths: Industry-proven

Note: Current versions are commercial; older community editions may still be used.

1.4 Tactic

License: Open source (EPL 1.0)

– General-purpose asset and workflow management

– Web-based, highly configurable

Strengths: Adaptable to VFX pipelines, powerful templating/scripting

Drawbacks: Steep learning curve, not VFX-specific out of the box

URL: https://www.southpawtech.com/

2. Most Powerful Open Source Option

Best Overall: OpenPype

Why:

– Specifically built for VFX and animation pipelines

– Extensively integrates with key DCCs

– Actively maintained with a large community

– Includes both asset and task management

– Works out-of-the-box but is customizable

-

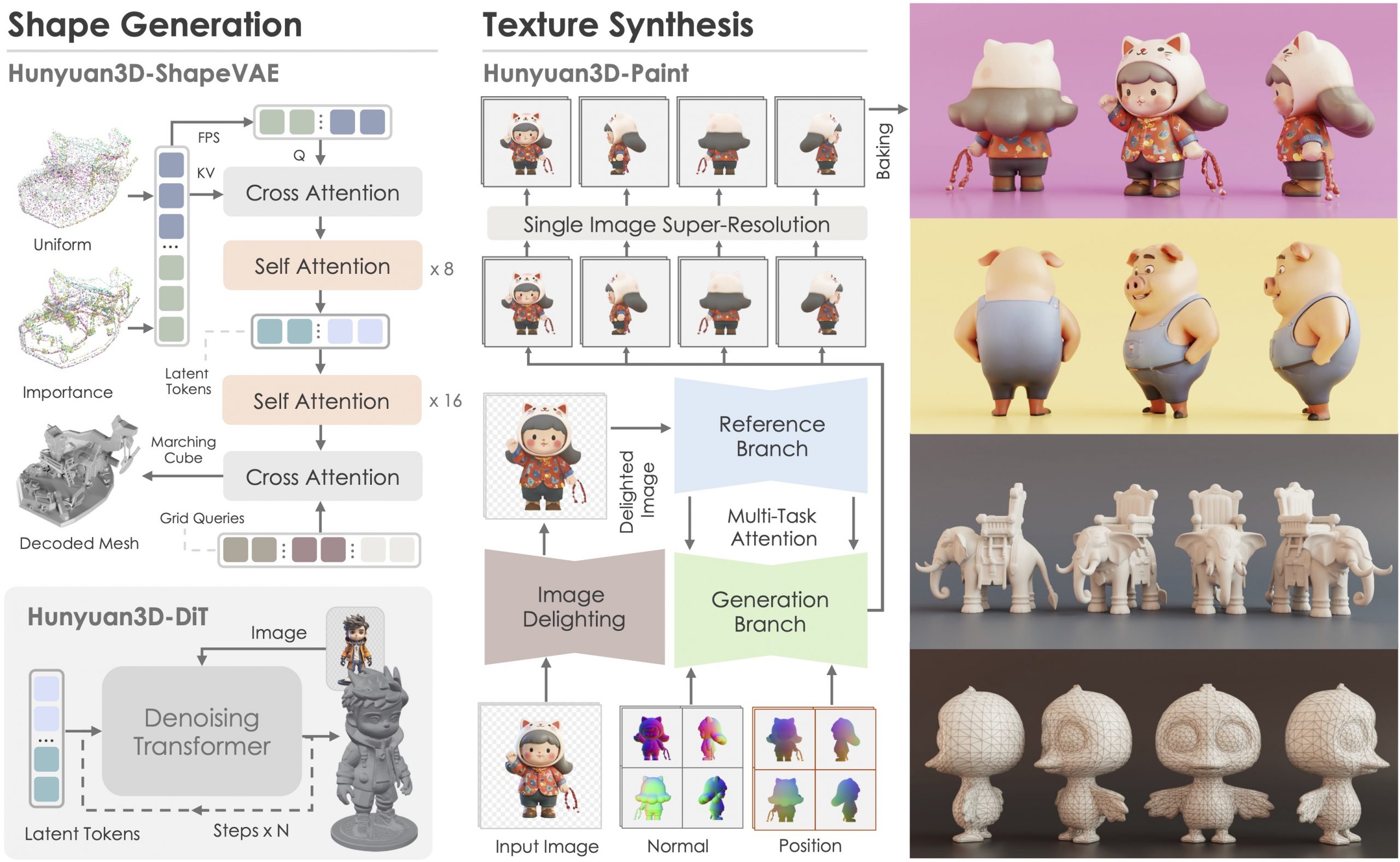

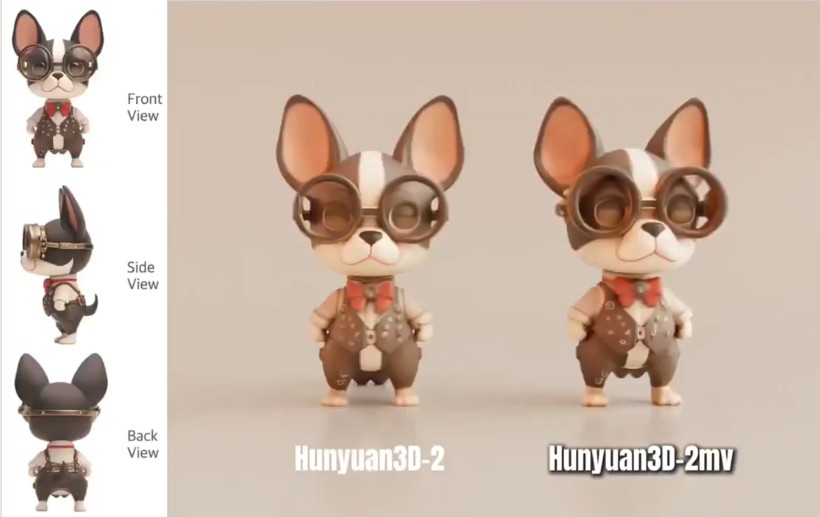

Tencent Hunyuan3D 2.1 goes Open Source and adds MV (Multi-view) and MV Mini

https://huggingface.co/tencent/Hunyuan3D-2mv

https://huggingface.co/tencent/Hunyuan3D-2mini

https://github.com/Tencent/Hunyuan3D-2

Tencent just made Hunyuan3D 2.1 open-source.

Tencent describes it as the first fully open-source, production-ready PBR 3D generative model with cinema-grade quality.

https://github.com/Tencent-Hunyuan/Hunyuan3D-2.1

What makes it special?

• Advanced PBR material synthesis brings realistic materials like leather, bronze, and more to life with stunning light interactions.

• Complete access to model weights, training/inference code, data pipelines.

• Optimized to run on accessible hardware.

• Built for real-world applications with professional-grade output quality.

They’re making it accessible to everyone:

• Complete open-source ecosystem with full documentation.

• Ready-to-use model weights and training infrastructure.

• Live demo available for instant testing.

• Comprehensive GitHub repository with implementation details.

-

Lovis Odin ComfyUI-8iPlayer – Seamlessly integrate 8i volumetric videos into your AI workflows

Load holograms, animate cameras, capture frames, and feed them to your favorite AI models. Developed by Lovis Odin for Kartel.ai

You can obtain the MPD URL directly from the official 8i Web Player.

https://github.com/Kartel-ai/ComfyUI-8iPlayer/

-

Thomas Müller nv-tlabs GEN3C – 3D-Informed World-Consistent Video Generation with Precise Camera Control

https://github.com/nv-tlabs/GEN3C

Load a picture, define a camera path in 3D, and then render a photoreal video.
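
To make "define a camera path in 3D" concrete, here is a minimal sketch of one common kind of path: a horizontal orbit around a subject, with a view direction per frame. It is not GEN3C's API or input format. The function names, the Y-up axis convention, and the tuple representation are assumptions chosen for illustration.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def orbit_path(center: Vec3, radius: float, height: float, frames: int) -> List[Vec3]:
    """Return camera positions evenly spaced on a horizontal circle.

    Assumes a Y-up coordinate system: the circle lies in the XZ plane,
    raised `height` units above `center`.
    """
    if frames <= 0:
        raise ValueError("frames must be positive")
    cx, cy, cz = center
    positions = []
    for i in range(frames):
        angle = 2.0 * math.pi * i / frames
        positions.append((
            cx + radius * math.cos(angle),
            cy + height,
            cz + radius * math.sin(angle),
        ))
    return positions


def look_direction(camera: Vec3, target: Vec3) -> Vec3:
    """Return the unit vector pointing from the camera toward the target."""
    dx, dy, dz = (t - c for c, t in zip(camera, target))
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    if length == 0.0:
        raise ValueError("camera and target coincide")
    return (dx / length, dy / length, dz / length)


if __name__ == "__main__":
    subject = (0.0, 0.0, 0.0)
    # One pose per frame: where the camera is and which way it looks.
    for position in orbit_path(subject, radius=2.0, height=0.5, frames=8):
        print(position, look_direction(position, subject))
```

A real tool would typically expect full camera matrices (rotation plus translation, and intrinsics) rather than position and direction pairs, so treat this only as the geometric core of a path.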

-

AI and the Law – Disney, NBCU sue Midjourney over copyright infringement

https://www.axios.com/2025/06/11/disney-nbcu-midjourney-copyright

Why it matters: It’s the first legal action that major Hollywood studios have taken against a generative AI company.

The complaint, filed in a U.S. District Court in central California, accuses Midjourney of both direct and secondary copyright infringement by using the studios’ intellectual property to train their large language model and by displaying AI-generated images of their copyrighted characters.

FEATURED POSTS

-

TurboSquid moves toward supporting AI, against its own policies

https://www.turbosquid.com/ai-3d-generator

The AI is being trained using a mix of Shutterstock 2D imagery and 3D models drawn from the TurboSquid marketplace. However, it’s only being trained on models that artists have approved for this use.

People cannot generate a model and then immediately sell it. A generated 3D model can, however, be used as a starting point for further customization, and the customized result could then be sold. Models created using our generative 3D tool, and their derivatives, can only be sold on the TurboSquid marketplace.

TurboSquid does not accept AI-generated content from our artists

As AI-powered tools become more accessible, it is important for us to address the impact AI has on our artist community as it relates to content made licensable on TurboSquid. TurboSquid, in line with its parent company Shutterstock, is taking an ethically responsible approach to AI on its platforms. We want to ensure that artists are properly compensated for their contributions to AI projects while supporting customers with the protections and coverage issued through the TurboSquid license.

In order to ensure that customers are protected, that intellectual property is not misused, and that artists are compensated for their work, TurboSquid will not accept content uploaded and sold on our marketplace that is generated by AI. Per our Publisher Agreement, artists must have proven IP ownership of all content that is submitted. AI-generated content is produced using machine learning models that are trained using many other creative assets. As a result, we cannot accept content generated by AI because its authorship cannot be attributed to an individual person, and we would be unable to ensure that all artists who were involved in the generation of that content are compensated.

-

ComfyDock – The Easiest (Free) Way to Safely Run ComfyUI Sessions in a Boxed Container

https://www.reddit.com/r/comfyui/comments/1j2x4qv/comfydock_the_easiest_free_way_to_run_comfyui_in/

ComfyDock is a tool that allows you to easily manage your ComfyUI environments via Docker.

Common Challenges with ComfyUI

- Custom Node Installation Issues: Installing new custom nodes can inadvertently change settings across the whole installation, potentially breaking the environment.

- Workflow Compatibility: Workflows are often tested with specific custom nodes and ComfyUI versions. Running these workflows on different setups can lead to errors and frustration.

- Security Risks: Installing custom nodes directly on your host machine increases the risk of malicious code execution.

How ComfyDock Helps

- Environment Duplication: Easily duplicate your current environment before installing custom nodes. If something breaks, revert to the original environment effortlessly.

- Deployment and Sharing: Workflow developers can commit their environments to a Docker image, which can be shared with others and run on cloud GPUs to ensure compatibility.

- Enhanced Security: Containers help to isolate the environment, reducing the risk of malicious code impacting your host machine.
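
The duplicate-then-revert idea above can be sketched with plain Docker commands. This is a minimal sketch and not ComfyDock's own interface: the container name `comfyui-main`, the image name `comfyui-env`, and the helper functions are hypothetical, and the port mapping assumes ComfyUI listens on its default port 8188 inside the container. The functions only build the command lines; nothing is executed.

```python
import shlex
from datetime import datetime, timezone
from typing import List, Optional


def timestamp_tag(now: Optional[datetime] = None) -> str:
    """Return a sortable image tag such as 20250102-030405."""
    now = now or datetime.now(timezone.utc)
    return now.strftime("%Y%m%d-%H%M%S")


def snapshot_command(container: str, image: str, tag: str) -> List[str]:
    """Build a `docker commit` call that saves a container's state as an image."""
    return ["docker", "commit", container, f"{image}:{tag}"]


def run_command(image: str, tag: str, name: str, host_port: int = 8188) -> List[str]:
    """Build a `docker run` call that starts a new container from a saved image."""
    return [
        "docker", "run", "--detach",
        "--name", name,
        # Map the chosen host port to ComfyUI's assumed container port 8188.
        "--publish", f"{host_port}:8188",
        f"{image}:{tag}",
    ]


if __name__ == "__main__":
    tag = timestamp_tag()
    # 1. Snapshot the working environment before installing a custom node.
    print(shlex.join(snapshot_command("comfyui-main", "comfyui-env", tag)))
    # 2. If the install breaks things, start a container from the snapshot.
    print(shlex.join(run_command("comfyui-env", tag, "comfyui-restore")))
```

The printed commands can be inspected before running them by hand. A GPU-backed setup would need extra `docker run` options (for example `--gpus all`) and volume mounts for models, which are left out here.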