LATEST POSTS

-

OpenAI Backs Critterz, an AI-Made Animated Feature Film

https://www.wsj.com/tech/ai/openai-backs-ai-made-animated-feature-film-389f70b0

Film, called ‘Critterz,’ aims to debut at Cannes Film Festival and will leverage startup’s AI tools and resources.

“Critterz,” about forest creatures who go on an adventure after their village is disrupted by a stranger, is the brainchild of Chad Nelson, a creative specialist at OpenAI. Nelson started sketching out the characters three years ago while trying to make a short film with what was then OpenAI’s new DALL-E image-generation tool.

-

AI and the Law: Anthropic to Pay $1.5 Billion to Settle Book Piracy Class Action Lawsuit

https://variety.com/2025/digital/news/anthropic-class-action-settlement-billion-1236509571

The settlement amounts to about $3,000 per book and is believed to be the largest ever recovery in a U.S. copyright case, according to the plaintiffs’ attorneys.

-

Sir Peter Jackson’s Wētā FX records $140m loss in two years, amid staff layoffs

https://www.thepost.co.nz/business/360813799/weta-fx-posts-59m-loss-amid-industry-headwinds

Wētā FX, Sir Peter Jackson’s largest business, has posted a $59.3 million loss for the year to March 31, an improvement on an $83m loss last year.

-

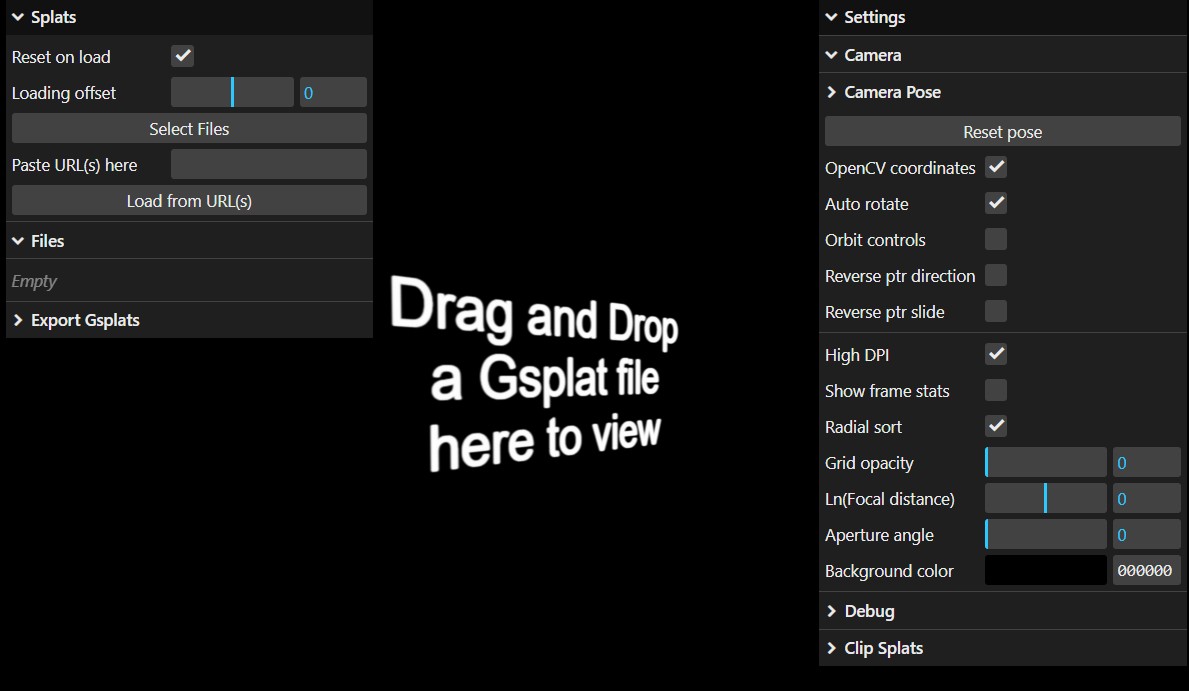

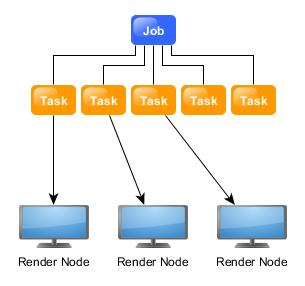

ComfyUI Thinkbox Deadline plugin

Submit ComfyUI workflows to Thinkbox Deadline render farm.

Features

- Submit ComfyUI workflows directly to Deadline

- Batch rendering with seed variation

- Real-time progress monitoring via Deadline Monitor

- Configurable pools, groups, and priorities
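
As a rough illustration of what "batch rendering with seed variation" can mean, the Python sketch below copies an API-format ComfyUI workflow (a dictionary of node IDs mapping to `class_type` and `inputs`) once per batch item and offsets every integer `seed` input. This is not the plugin's own code; the function name `vary_seeds` and the sample workflow are hypothetical.

```python
import copy


def vary_seeds(workflow, base_seed, count):
    """Return `count` copies of `workflow`, each with a different seed.

    Hypothetical helper: every node input named "seed" that holds an
    integer is set to base_seed + batch index. Linked inputs (lists)
    are left untouched.
    """
    variants = []
    for index in range(count):
        variant = copy.deepcopy(workflow)  # never mutate the original
        for node in variant.values():
            inputs = node.get("inputs", {})
            if isinstance(inputs.get("seed"), int):
                inputs["seed"] = base_seed + index
        variants.append(variant)
    return variants


# Illustrative two-node workflow; node IDs and values are made up.
workflow = {
    "3": {"class_type": "KSampler", "inputs": {"seed": 0, "steps": 20}},
    "4": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "model.safetensors"}},
}

if __name__ == "__main__":
    for task in vary_seeds(workflow, base_seed=100, count=3):
        print(task["3"]["inputs"]["seed"])
```

Each variant could then be handed to the farm as its own task, so that one submission yields several differently seeded renders.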

https://github.com/doubletwisted/ComfyUI-Deadline-Plugin

https://docs.thinkboxsoftware.com/products/deadline/latest/1_User%20Manual/manual/overview.html

Deadline 10 is a cross-platform render farm management tool for Windows, Linux, and macOS. It gives users control of their rendering resources and can be used on-premises, in the cloud, or both. It handles asset syncing to the cloud, manages data transfers, and supports tagging for cost tracking purposes.

Deadline 10’s Remote Connection Server allows for communication over HTTPS, improving performance and scalability. Where supported, users can use usage-based licensing to supplement their existing fixed pool of software licenses when rendering through Deadline 10.

-

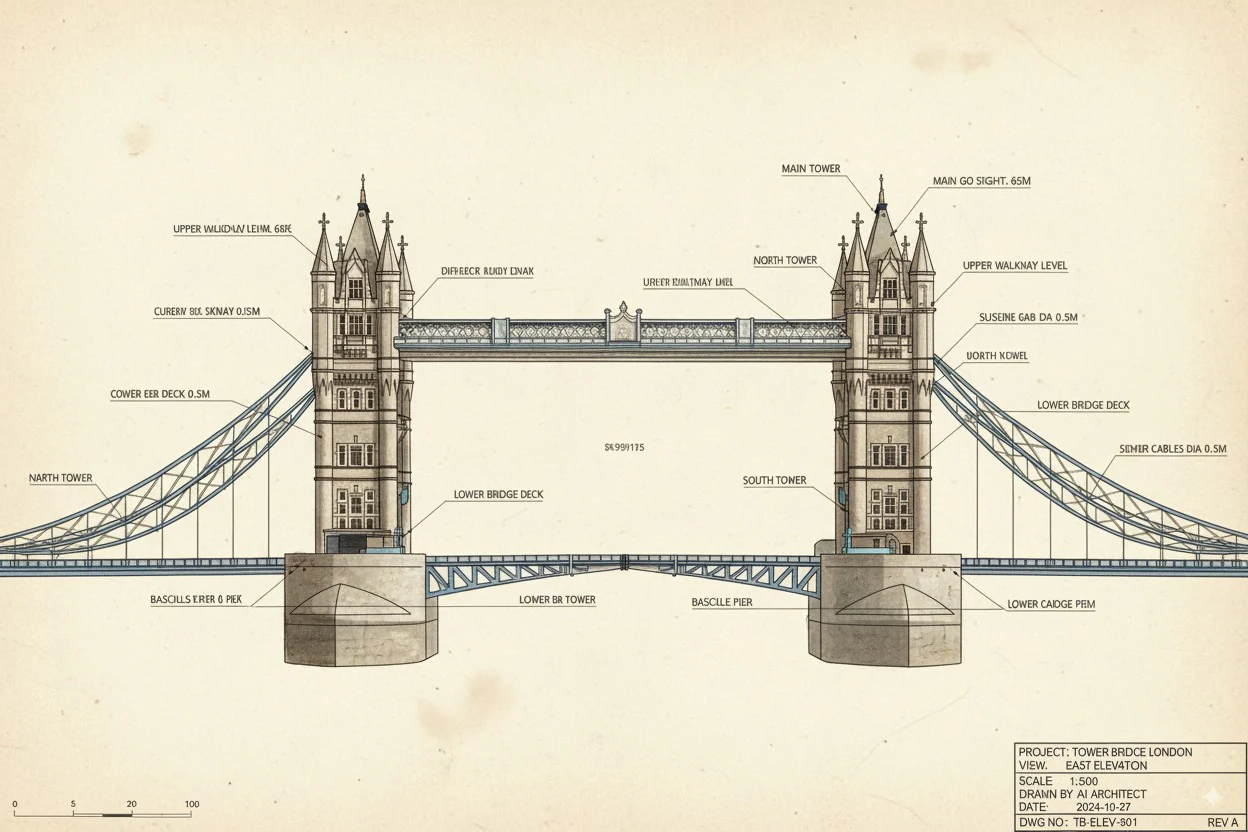

Google’s Nano Banana AI: Free Tool for 3D Architecture Models

https://landscapearchitecture.store/blogs/news/nano-banana-ai-free-tool-for-3d-architecture-models

How to Use Nano Banana AI for Architecture

- Go to Google AI Studio.

- Log in with your Gmail and select Gemini 2.5 (Nano Banana).

- Upload a photo — either from your laptop or a Google Street View screenshot.

- Paste this example prompt:

“Use the provided architectural photo as reference. Generate a high-fidelity 3D building model in the look of a 3D-printed architecture model.”

- Wait a few seconds, and your 3D architecture model will be ready.

Pro tip: If you want more accuracy, upload two images — a street photo for the facade and an aerial view for the roof/top.

-

Blender 4.5 switches from OpenGL to Vulkan support

Blender is moving from OpenGL to Vulkan as its graphics backend, with the change becoming significant in Blender 4.5, to achieve better performance and prepare for future features like real-time ray tracing and global illumination. To enable Vulkan, go to Edit > Preferences > System, set the “Backend” option to “Vulkan,” and restart Blender. The switch can bring faster startup times, improved viewport responsiveness, and more efficient handling of complex scenes through better use of your CPU and GPU resources.
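
The same preference can in principle be set from a script. The Python sketch below assumes that Blender exposes the backend as `preferences.system.gpu_backend` with the value `'VULKAN'`; treat that property name as an assumption and check it against the Python API reference for your Blender version. The helper `request_vulkan_backend` is hypothetical, and outside Blender the example falls back to a stand-in object so it still runs.

```python
from types import SimpleNamespace


def request_vulkan_backend(preferences):
    """Ask for the Vulkan backend on a Blender-style preferences object.

    Returns True if the setting was changed, False if it was already
    'VULKAN'. Assumes a `system.gpu_backend` attribute (see lead-in).
    """
    system = preferences.system
    if getattr(system, "gpu_backend", None) == "VULKAN":
        return False
    system.gpu_backend = "VULKAN"
    return True


if __name__ == "__main__":
    try:
        import bpy  # only available inside Blender
        prefs = bpy.context.preferences
    except ImportError:
        # Stand-in so the sketch can be tried outside Blender.
        prefs = SimpleNamespace(system=SimpleNamespace(gpu_backend="OPENGL"))
    if request_vulkan_backend(prefs):
        print("Backend set to Vulkan; restart Blender to apply.")
```

As with the menu route, a restart is still needed before the new backend takes effect.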

Why the Switch to Vulkan?

- Modern Graphics API: Vulkan is a newer, lower-level, and more efficient API that provides developers with greater control over hardware, unlike the older, higher-level OpenGL.

- Performance Boost: This change significantly improves performance in various areas, such as viewport rendering, material loading, and overall UI responsiveness, especially in complex scenes with many textures.

- Better Resource Utilization: Vulkan distributes work more effectively across the CPU and reduces driver overhead, allowing Blender to make better use of your computer’s power.

- Future-Proofing: The Vulkan backend paves the way for advanced features like real-time ray tracing and global illumination in future versions of Blender.

-

Diffuman4D – 4D Consistent Human View Synthesis from Sparse-View Videos with Spatio-Temporal Diffusion Models

Given sparse-view videos, Diffuman4D (1) generates 4D-consistent multi-view videos conditioned on these inputs, and (2) reconstructs a high-fidelity 4DGS model of the human performance using both the input and the generated videos.

FEATURED POSTS

-

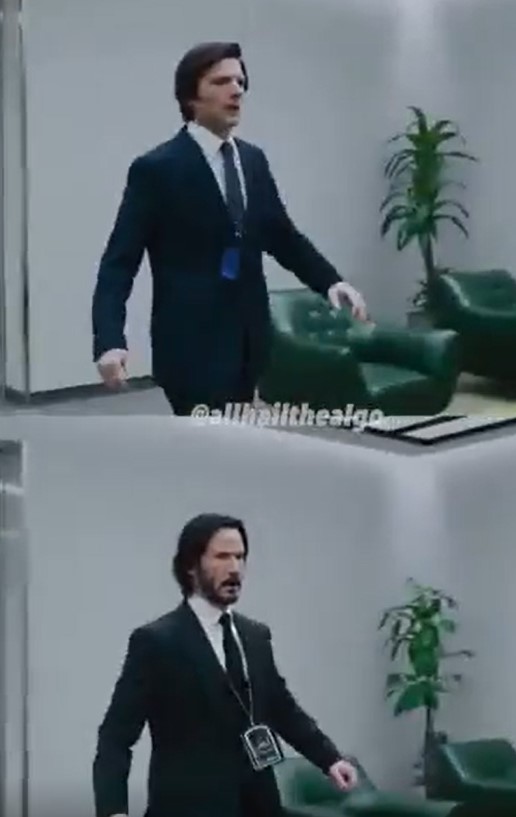

Hunyuan video-to-video re-styling

The open-source community has figured out how to run Hunyuan V2V using LoRAs.

You’ll need to install Kijai’s ComfyUI-HunyuanLoom and LoRAs, which you can either train yourself or find on Civitai.

1) You’ll need HunyuanLoom; after installing it, the workflow can be found in the repo.

https://github.com/logtd/ComfyUI-HunyuanLoom

2) The John Wick LoRA can be found here.

https://civitai.com/models/1131159/john-wick-hunyuan-video-lora

-

AI Data Laundering: How Academic and Nonprofit Researchers Shield Tech Companies from Accountability

“Simon Willison created a Datasette browser to explore WebVid-10M, one of the two datasets used to train the video generation model, and quickly learned that all 10.7 million video clips were scraped from Shutterstock, watermarks and all.”

“In addition to the Shutterstock clips, Meta also used 10 million video clips from this 100M video dataset from Microsoft Research Asia. It’s not mentioned on their GitHub, but if you dig into the paper, you learn that every clip came from over 3 million YouTube videos.”

“It’s become standard practice for technology companies working with AI to commercially use datasets and models collected and trained by non-commercial research entities like universities or non-profits.”

“Like with the artists, photographers, and other creators found in the 2.3 billion images that trained Stable Diffusion, I can’t help but wonder how the creators of those 3 million YouTube videos feel about Meta using their work to train their new model.”

-

Types of Film Lights and their efficiency – CRI, Color Temperature and Luminous Efficacy

nofilmschool.com/types-of-film-lights

“Not every light performs the same way. Lights and lighting are tricky to handle. You have to plan for every circumstance. But the good news is, lighting can be adjusted. Let’s look at different factors that affect lighting in every scene you shoot.”

Use CRI, Luminous Efficacy and color temperature controls to match your needs.

Color Temperature

Color temperature describes the “color” of white light from a light source by comparing it to the light radiated by a perfect black body at a given temperature, measured in kelvins.

https://www.pixelsham.com/2019/10/18/color-temperature/
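
To make the black-body link concrete, Wien's displacement law gives the wavelength at which a black body at temperature T radiates most strongly: peak wavelength = b / T, with b ≈ 2.897771955 × 10⁻³ m·K. The Python sketch below is an added illustration, not taken from the linked articles; the function name and the two example temperatures (3200 K for tungsten, 5600 K for daylight-balanced fixtures) are common reference values chosen here as assumptions.

```python
# Wien's displacement constant in metre-kelvins.
WIEN_B = 2.897771955e-3


def peak_wavelength_nm(temperature_k):
    """Peak emission wavelength (nm) of a black body at temperature_k."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive (kelvins)")
    return WIEN_B / temperature_k * 1e9  # metres -> nanometres


if __name__ == "__main__":
    for label, kelvin in (("tungsten", 3200), ("daylight", 5600)):
        print(f"{label}: {kelvin} K peaks near {peak_wavelength_nm(kelvin):.0f} nm")
```

A 3200 K source peaks near 906 nm, in the infrared, while a 5600 K source peaks near 517 nm, inside the visible range. This is one reason a lower color temperature looks warmer, and why an incandescent source spends much of its output outside the visible spectrum.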

CRI

“The Color Rendering Index is a measurement of how faithfully a light source reveals the colors of whatever it illuminates, it describes the ability of a light source to reveal the color of an object, as compared to the color a natural light source would provide. The highest possible CRI is 100. A CRI of 100 generally refers to a perfect black body, like a tungsten light source or the sun.”

https://www.studiobinder.com/blog/what-is-color-rendering-index
