LATEST POSTS

-

ZAppLink – a plugin that allows you to seamlessly integrate your favorite image editing software — such as Adobe Photoshop — into your ZBrush workflow

While in ZBrush, call up your image editing package and use it to modify the active ZBrush document or tool, then go straight back into ZBrush.

ZAppLink can work on different saved points of view for your model. What you paint in your image editor is then projected to the model’s PolyPaint or texture for more creative freedom.

With ZAppLink you can combine ZBrush’s powerful capabilities with all the painting power of the PSD-capable 2D editor of your choice, making it easy to create stunning textures.

ZAppLink features

- Send your document view to the PSD-capable editor of your choice for texture creation and modification: Photoshop, GIMP and more!

- Projections in orthogonal or perspective mode.

- Multiple view support: With a single click, send your front, back, left, right, top, bottom and two custom views on dedicated layers to your 2D editor. When your painting is done, automatically reproject all the views back into ZBrush!

- Create character sheets based on your saved views with a single click.

- ZAppLink works with PolyPaint, UV-based textures and canvas pixols.

-

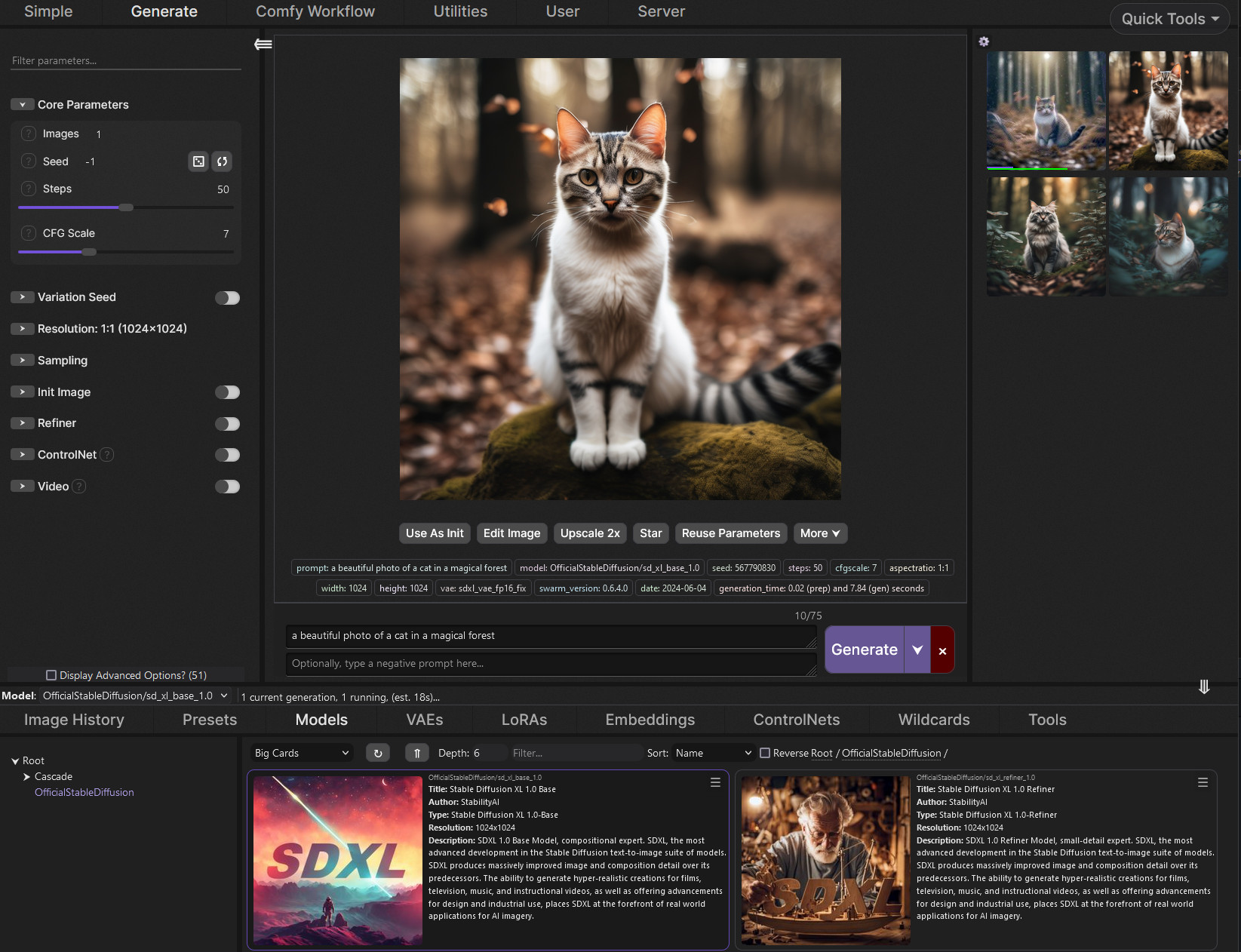

SwarmUI.net – A free, open source, modular AI image generation Web-User-Interface

https://github.com/mcmonkeyprojects/SwarmUI

A Modular AI Image Generation Web-User-Interface, with an emphasis on making powertools easily accessible, high performance, and extensibility. Supports AI image models (Stable Diffusion, Flux, etc.) and AI video models (LTX-V, Hunyuan Video, Cosmos, Wan, etc.), with plans to support e.g. audio and more in the future.

SwarmUI by default runs entirely locally on your own computer. It does not collect any data from you.

SwarmUI is 100% Free-and-Open-Source software, under the MIT License. You can do whatever you want with it.

-

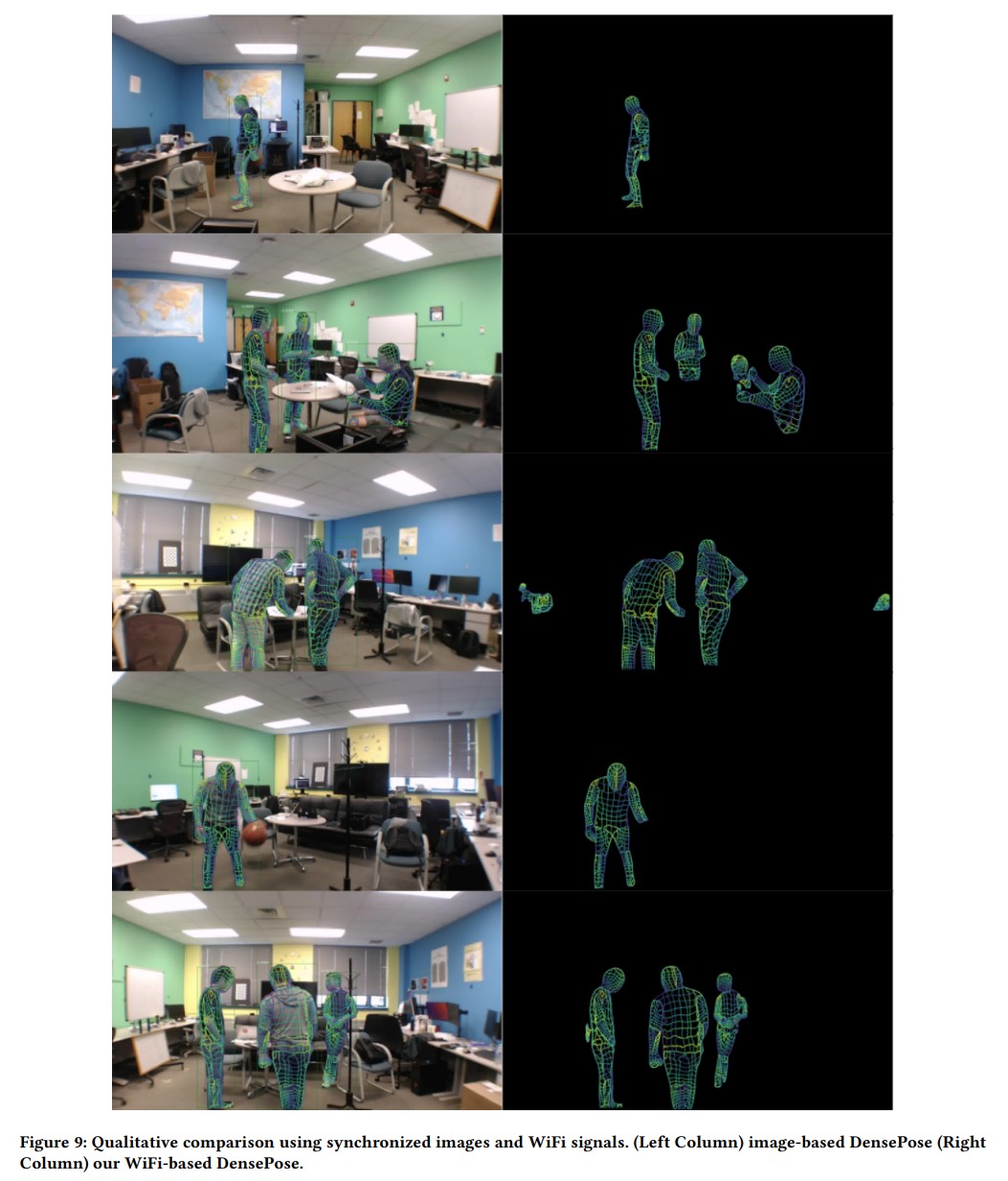

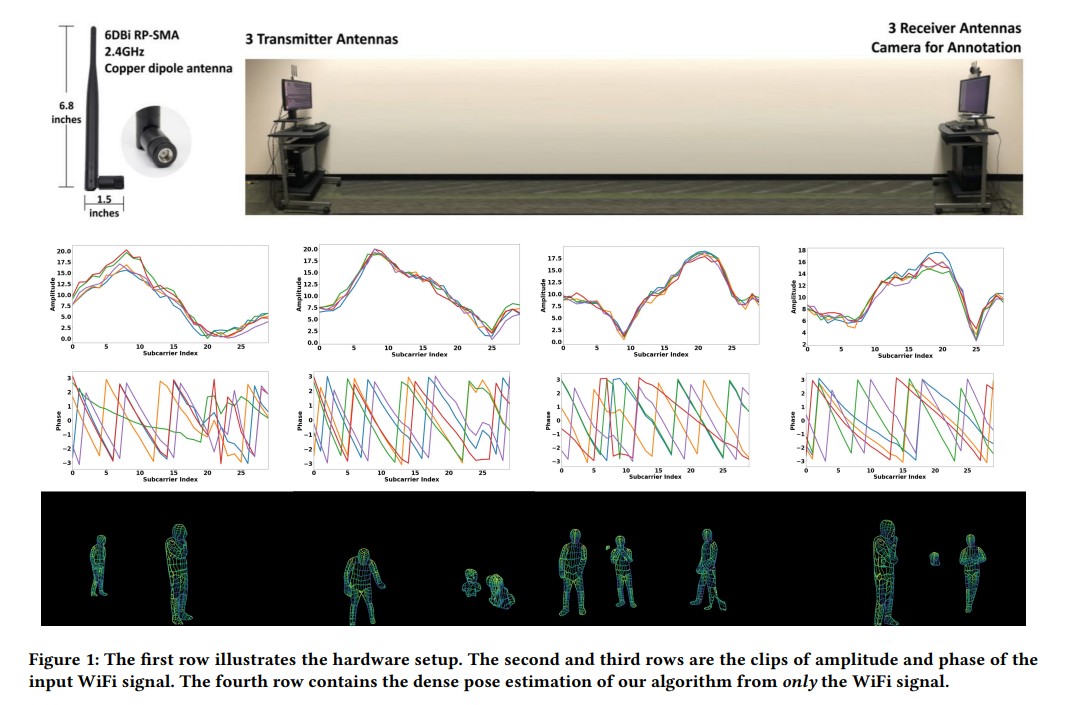

DensePose From WiFi using ML

https://arxiv.org/pdf/2301.00250

https://www.xrstager.com/en/ai-based-motion-detection-without-cameras-using-wifi

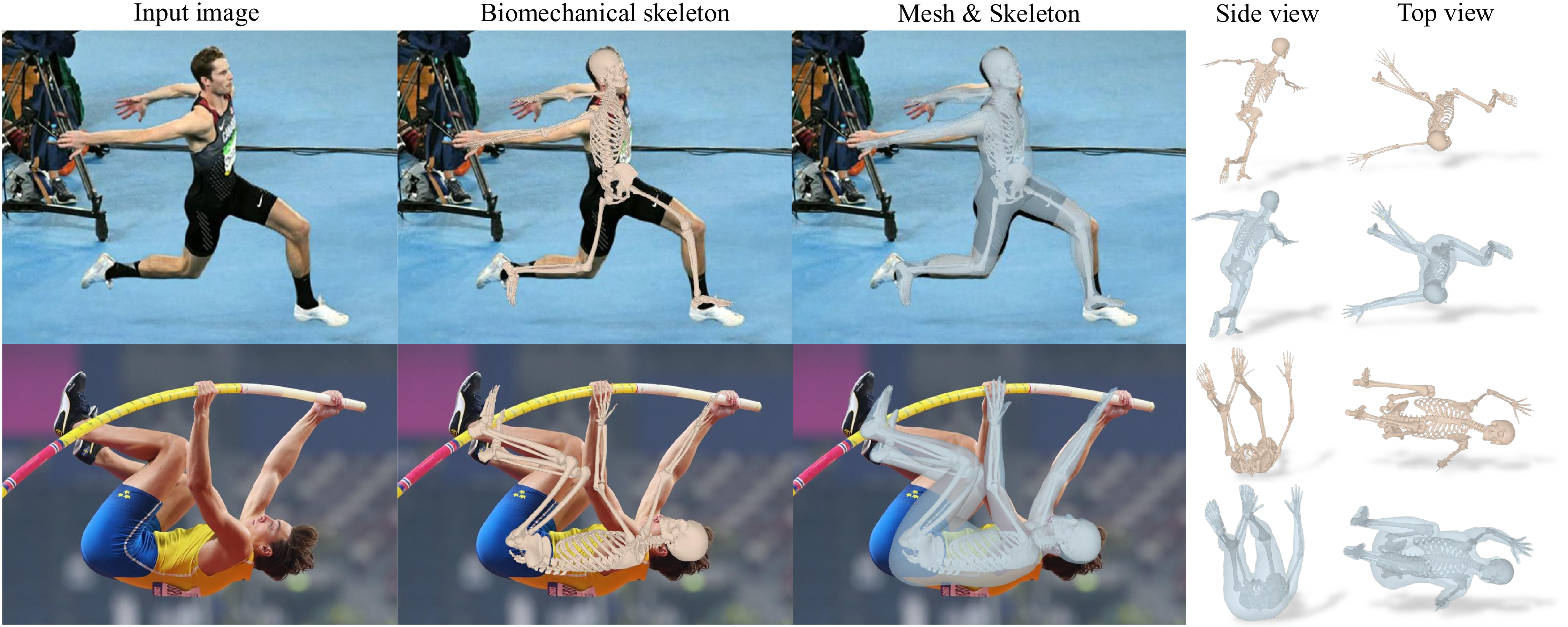

Advances in computer vision and machine learning techniques have led to significant development in 2D and 3D human pose estimation using RGB cameras, LiDAR, and radars. However, human pose estimation from images is adversely affected by common issues such as occlusion and lighting, which can significantly hinder performance in various scenarios.

Radar and LiDAR technologies, while useful, require specialized hardware that is both expensive and power-intensive. Moreover, deploying these sensors in non-public areas raises important privacy concerns, further limiting their practical applications.

To overcome these limitations, recent research has explored the use of WiFi antennas, which are one-dimensional sensors, for tasks like body segmentation and key-point body detection. Building on this idea, the current study expands the use of WiFi signals in combination with deep learning architectures—techniques typically used in computer vision—to estimate dense human pose correspondence.

In this work, a deep neural network was developed to map the phase and amplitude of WiFi signals to UV coordinates across 24 human regions. The results demonstrate that the model is capable of estimating the dense pose of multiple subjects with performance comparable to traditional image-based approaches, despite relying solely on WiFi signals. This breakthrough paves the way for developing low-cost, widely accessible, and privacy-preserving algorithms for human sensing.
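
To make "phase and amplitude of WiFi signals" concrete, here is a minimal sketch of how both quantities can be read off complex channel measurements. It assumes each measurement is available as a Python complex number; the function names and the unwrapping step are illustrative assumptions, not the paper's actual preprocessing pipeline.

```python
import cmath
import math


def amplitude_and_phase(samples):
    """Split complex channel samples into amplitude and phase (radians)."""
    amplitudes = [abs(h) for h in samples]
    phases = [cmath.phase(h) for h in samples]
    return amplitudes, phases


def unwrap(phases):
    """Remove 2*pi jumps between consecutive phase values.

    A common clean-up step for phase data; assumed here for illustration.
    """
    if not phases:
        return []
    out = [phases[0]]
    for prev, cur in zip(phases, phases[1:]):
        step = cur - prev
        # Fold the step into the range [-pi, pi] before accumulating it.
        step -= 2 * math.pi * round(step / (2 * math.pi))
        out.append(out[-1] + step)
    return out


if __name__ == "__main__":
    # Hypothetical measurements, made up for this example.
    samples = [3 + 4j, 1j, -1 + 0.1j, -1 - 0.1j]
    amps, phs = amplitude_and_phase(samples)
    print(amps)
    print(unwrap(phs))
```

A network like the one described would take arrays of such amplitude and phase values as input; the mapping to UV coordinates is learned and is not shown here.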

-

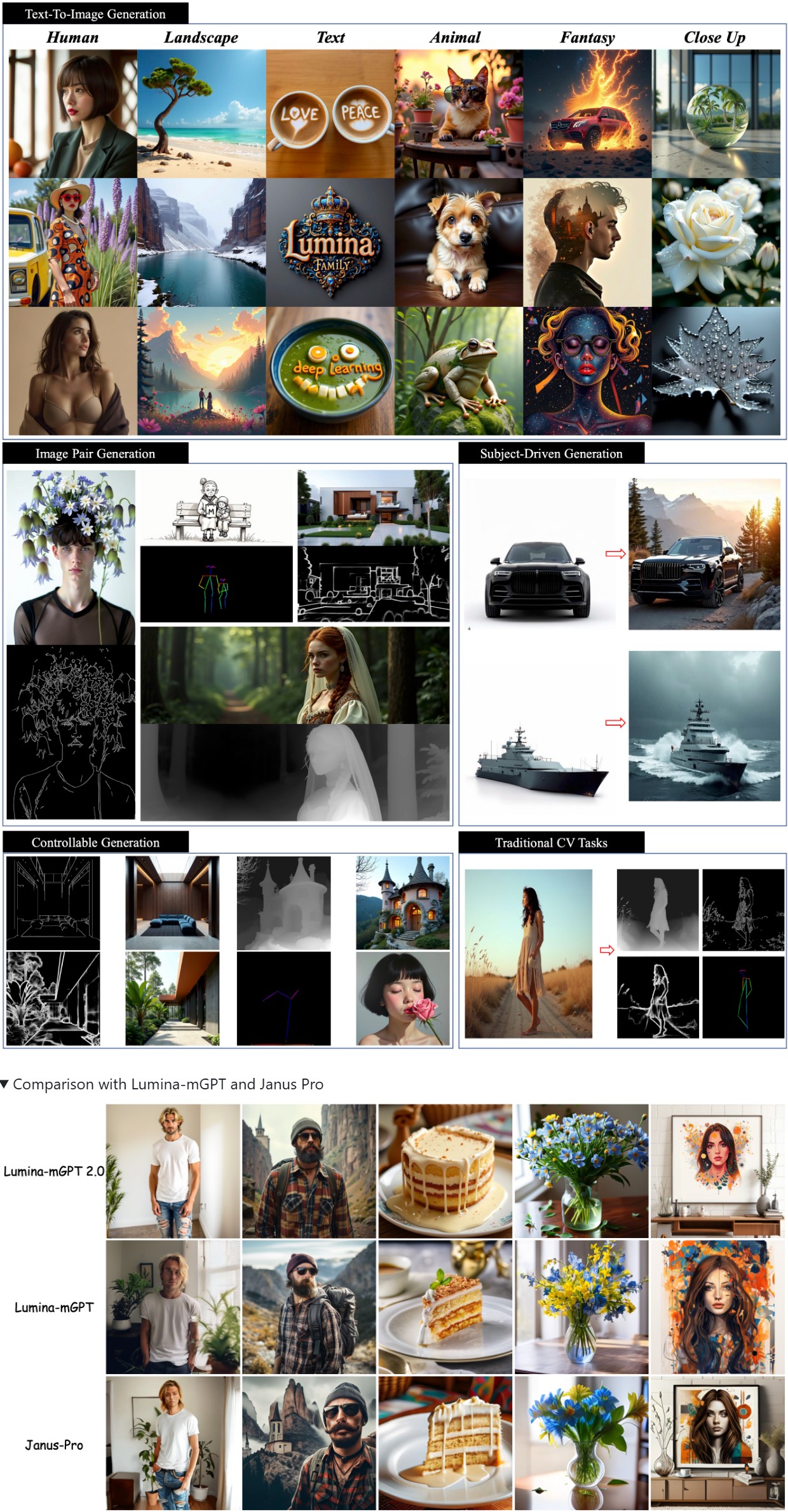

Lumina-mGPT 2.0 – Stand-alone Autoregressive Image Modeling

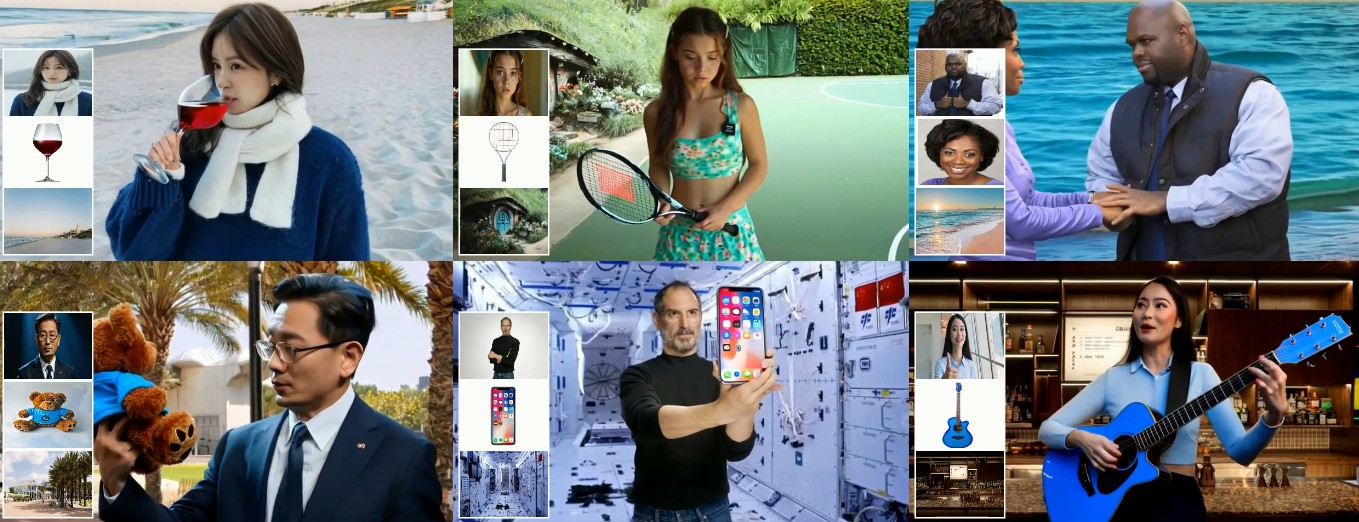

A stand-alone, decoder-only autoregressive model, trained from scratch, that unifies a broad spectrum of image generation tasks, including text-to-image generation, image pair generation, subject-driven generation, multi-turn image editing, controllable generation, and dense prediction.

https://github.com/Alpha-VLLM/Lumina-mGPT-2.0

-

Mamba and MicroMamba – Free, open source, general-purpose package managers for any kind of software and all operating systems

https://mamba.readthedocs.io/en/latest/user_guide/micromamba.html

https://mamba.readthedocs.io/en/latest/installation/micromamba-installation.html

https://micro.mamba.pm/api/micromamba/win-64/latest

https://prefix.dev/docs/mamba/overview

With mamba, it’s easy to set up software environments. A software environment is simply a set of different libraries, applications and their dependencies. The power of environments is that they can co-exist: you can easily have an environment called py27 for Python 2.7 and one called py310 for Python 3.10, so that several of your projects with different requirements each have a dedicated environment. This is similar to “containers” and images. However, mamba makes it easy to add, update or remove software from the environments.

Download the latest executable from https://micro.mamba.pm/api/micromamba/win-64/latest

You can install it, or simply run the executable as-is, to create a Python environment under Windows.
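
As a rough illustration of that step, the sketch below builds the `micromamba create` command for the two example environments mentioned above (py27 and py310) and, optionally, runs it. The executable path `micromamba.exe`, the `conda-forge` channel and the helper names are assumptions made for this example; adjust them to your setup.

```python
import subprocess
from pathlib import Path


def create_env_command(exe, env_name, python_version, channel="conda-forge"):
    """Build the argument list for `micromamba create`."""
    return [
        str(exe),
        "create",
        "--yes",                 # skip the confirmation prompt
        "--name", env_name,      # environment name, e.g. py310
        "--channel", channel,    # assumed channel; change if needed
        f"python={python_version}",
    ]


def create_env(exe, env_name, python_version, dry_run=True):
    """Return the command; execute it only when dry_run is False."""
    cmd = create_env_command(exe, env_name, python_version)
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    exe = Path("micromamba.exe")  # hypothetical location of the download
    for name, version in [("py27", "2.7"), ("py310", "3.10")]:
        # Dry run: only print the command that would be executed.
        print(" ".join(create_env(exe, name, version)))
```

With `dry_run=False` the command is actually executed, which requires the executable to exist at the given path and the requested Python version to be available on the chosen channel.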


FEATURED POSTS

-

AI Data Laundering: How Academic and Nonprofit Researchers Shield Tech Companies from Accountability

“Simon Willison created a Datasette browser to explore WebVid-10M, one of the two datasets used to train the video generation model, and quickly learned that all 10.7 million video clips were scraped from Shutterstock, watermarks and all.”

“In addition to the Shutterstock clips, Meta also used 10 million video clips from this 100M video dataset from Microsoft Research Asia. It’s not mentioned on their GitHub, but if you dig into the paper, you learn that every clip came from over 3 million YouTube videos.”

“It’s become standard practice for technology companies working with AI to commercially use datasets and models collected and trained by non-commercial research entities like universities or non-profits.”

“Like with the artists, photographers, and other creators found in the 2.3 billion images that trained Stable Diffusion, I can’t help but wonder how the creators of those 3 million YouTube videos feel about Meta using their work to train their new model.”

-

Composition – Cinematography Cheat Sheet

Where is our eye attracted first? Why?

Size. Focus. Lighting. Color.

Size. Mr. White (Harvey Keitel) on the right.

Focus. He’s one of the two objects in focus.

Lighting. Mr. White is large and in focus and Mr. Pink (Steve Buscemi) is highlighted by a shaft of light.

Color. Both are in black and white, but the red on Mr. White’s shirt now really stands out.


What type of lighting?