LATEST POSTS

-

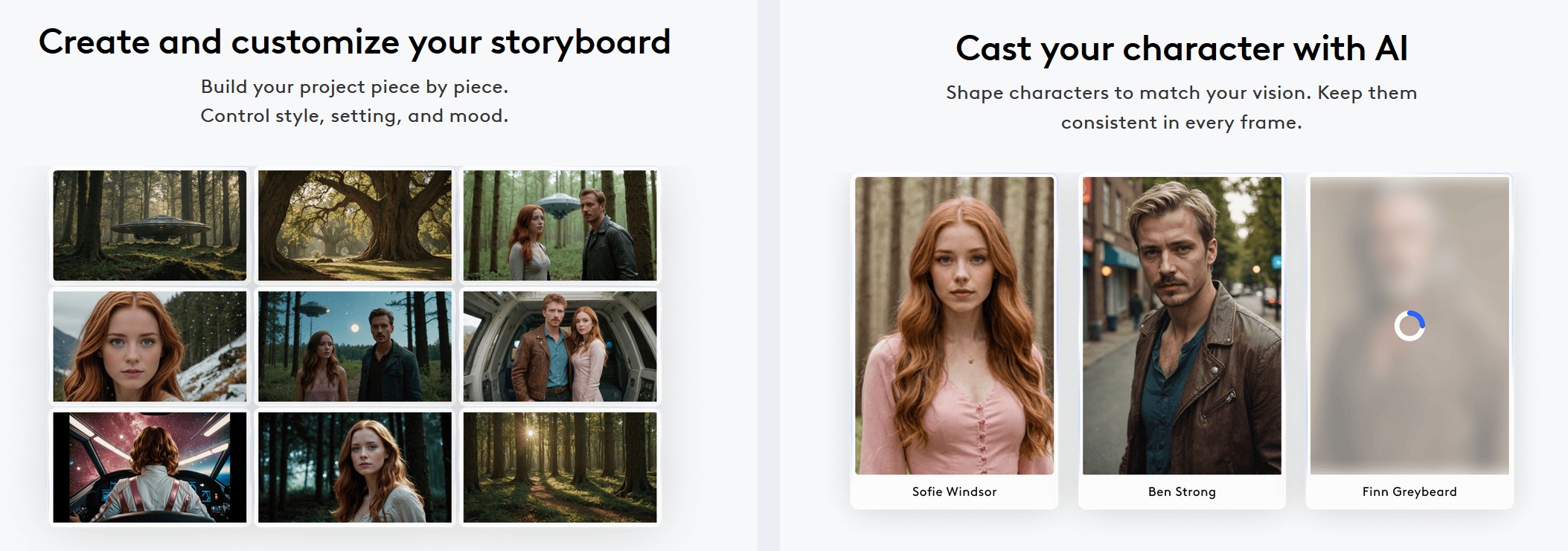

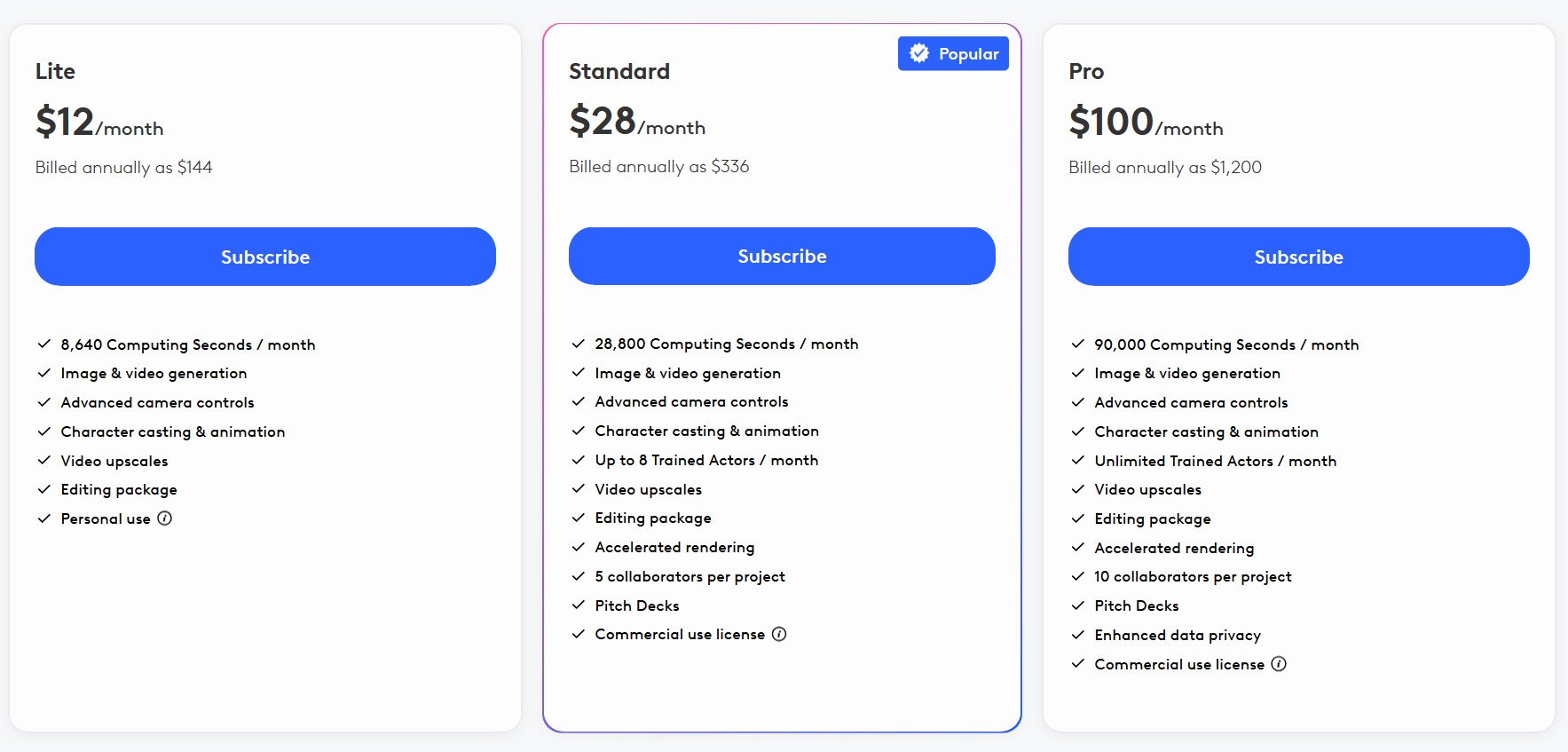

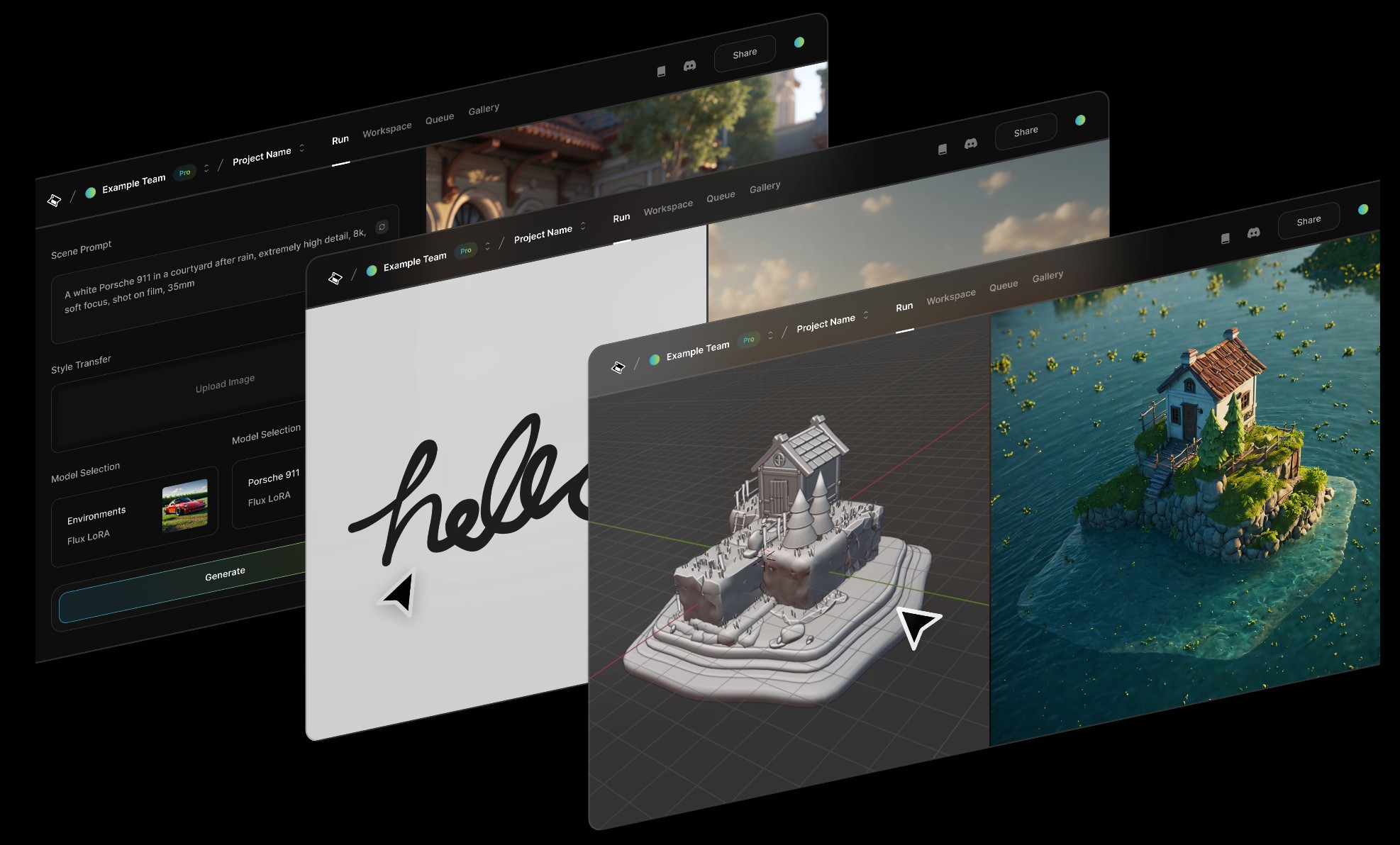

PlayBook3D – Creative controls for all media formats

Playbook3d.com is a diffusion-based render engine that reduces the time to final image with AI. It is accessible via web editor and API with support for scene segmentation and re-lighting, integration with production pipelines and frame-to-frame consistency for image, video, and real-time 3D formats.

-

AI and the Law – AI Creativity – Genius or Gimmick?

7:59-9:50 Justine Bateman:

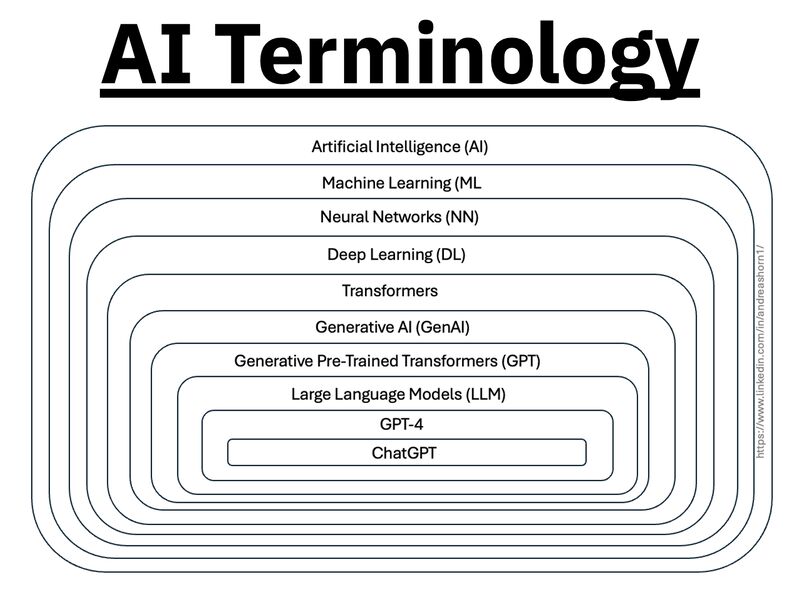

“I mean first I want to give people, help people have a little bit of a definition of what generative AI is—

think of it as like a blender and if you have a blender at home and you turn it on, what does it do? It depends on what I put into it, so it cannot function unless it’s fed things.

Then you turn on the blender and you give it a prompt, which is your little spoon, and you get a little spoonful—little Frankenstein spoonful—out of what you asked for.

So what is going into the blender? Every but a hundred years of film and television or many, many years of, you know, doctor’s reports or students’ essays or whatever it is.

In the film business, in particular, that’s what we call theft; it’s the biggest violation. And the term that continues to be used is “all we did.” I think the CTO of OpenAI—believe that’s her position; I forget her name—when she was asked in an interview recently what she had to say about the fact that they didn’t ask permission to take it in, she said, “Well, it was all publicly available.”

And I will say this: if you own a car (I know we’re in New York City, so it’s not going to be as applicable), but if I see a car in the street, it’s publicly available, but somehow it’s illegal for me to take it. That’s what we have the copyright office for, and I don’t know how well staffed they are to handle something like this, but this is the biggest copyright violation in the history of that office and the US government.”

-

Aze Alter – What If Humans and AI Unite? | AGE OF BEYOND

https://www.patreon.com/AzeAlter

Voices & Sound Effects: https://elevenlabs.io/

Video Created mainly with Luma: https://lumalabs.ai/

LUMA LABS

KLING

RUNWAY

ELEVEN LABS

MINIMAX

MIDJOURNEY

Music by Scott Buckley

-

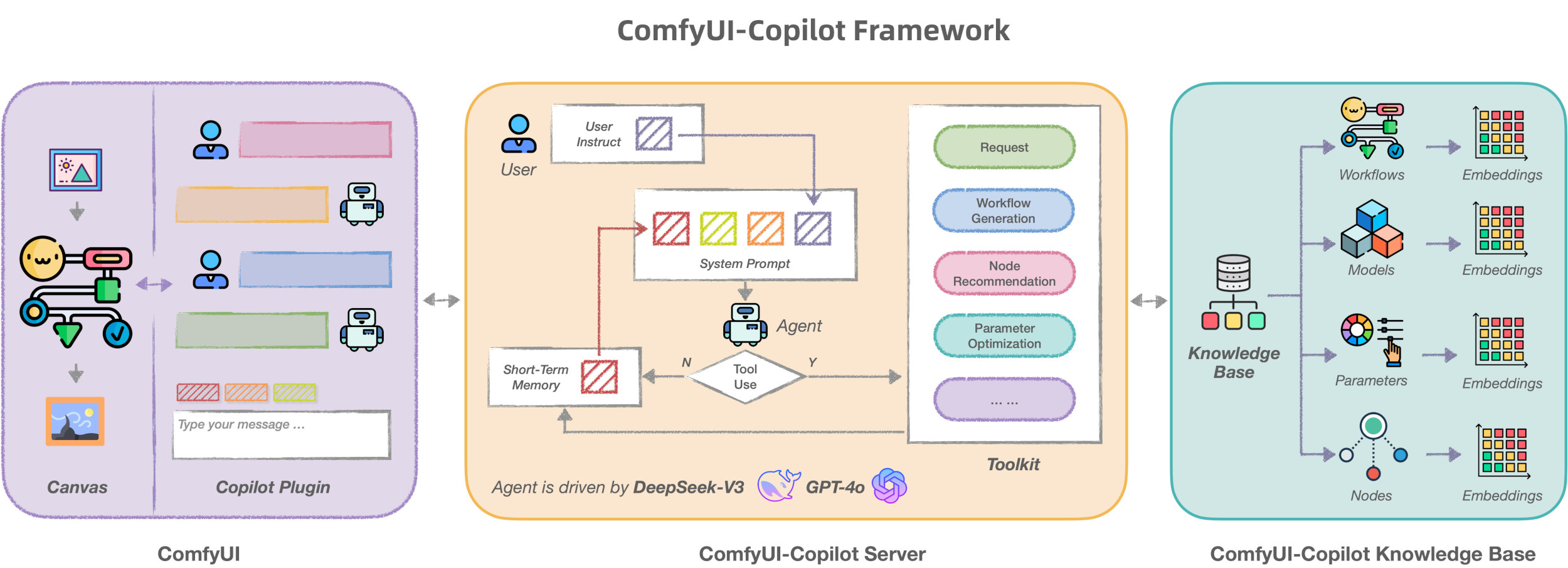

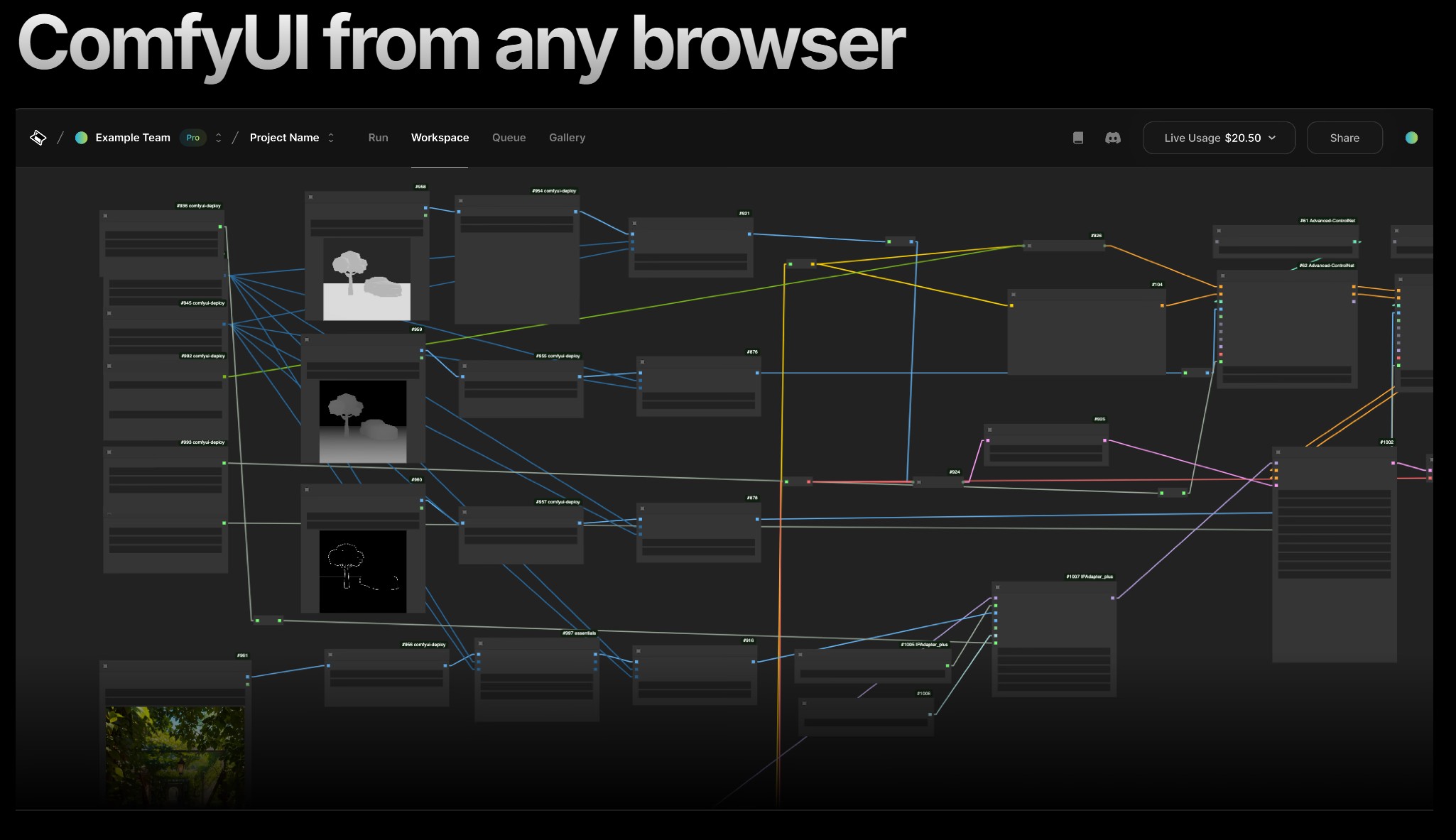

ComfyUI-Manager Joins Comfy-Org

https://blog.comfy.org/p/comfyui-manager-joins-comfy-org

On March 28, ComfyUI-Manager will be moving to the Comfy-Org GitHub organization as Comfy-Org/ComfyUI-Manager. This represents a natural evolution as they continue working to improve the custom node experience for all ComfyUI users.

What This Means For You

This change is primarily about improving support and development velocity. There are a few practical considerations:

- Automatic GitHub redirects will ensure all existing links, git commands, and references to the repository will continue to work seamlessly without any action needed

- For developers: Any existing PRs and issues will be transferred to the new repository location

- For users: ComfyUI-Manager will continue to function exactly as before—no action needed

- For workflow authors: Resources that reference ComfyUI-Manager will continue to work without interruption

-

AccVideo – Accelerating Video Diffusion Model with Synthetic Dataset

https://aejion.github.io/accvideo

https://github.com/aejion/AccVideo

https://huggingface.co/aejion/AccVideo

AccVideo is a novel, efficient distillation method that accelerates video diffusion models using a synthetic dataset. The method is reported to be 8.5x faster than HunyuanVideo.

-

Mastering Camera Shots and Angles: A Guide for Filmmakers

https://website.ltx.studio/blog/mastering-camera-shots-and-angles

1. Extreme Wide Shot

2. Wide Shot

3. Medium Shot

4. Close Up

5. Extreme Close Up

-

Bennett Waisbren – ChatGPT 4 video generation

1. Rankin/Bass – That nostalgic stop-motion look like Rudolph the Red-Nosed Reindeer. Cozy and janky.

2. Don Bluth – Lavish hand-drawn fantasy. Lush lighting, expressive eyes, dramatic weight.

3. Fleischer Studios – 1930s rubber-hose style, like Betty Boop and Popeye. Surreal, bouncy, jazz-age energy.

4. Pixar – Clean, subtle facial animation, warm lighting, and impeccable shot composition.

5. Toei Animation (Classic Era) – Foundation of mainstream anime. Big eyes, clean lines, iconic nostalgia.

6. Cow and Chicken / Cartoon Network Gross-Out – Elastic, grotesque, hyper-exaggerated. Ugly-cute characters, zoom-ins on feet and meat, lowbrow chaos.

7. Max Fleischer’s Superman – Retro-futurist noir from the ’40s, bold shadows and heroic lighting.

8. Sylvain Chomet – French surrealist like The Triplets of Belleville. Slender, elongated, moody weirdness.

FEATURED POSTS

-

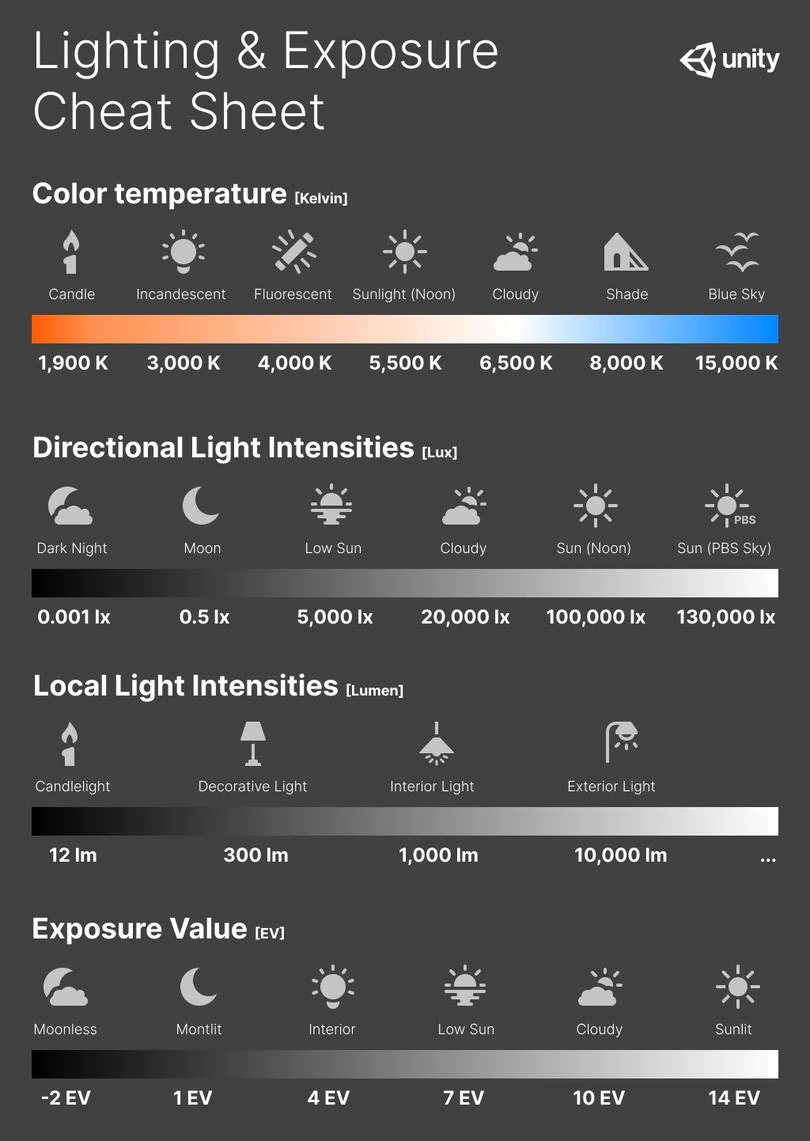

Photography basics: Exposure Value vs Photographic Exposure vs Il/Luminance vs Pixel luminance measurements

Also see: https://www.pixelsham.com/2015/05/16/how-aperture-shutter-speed-and-iso-affect-your-photos/

In photography, exposure value (EV) is a number that represents a combination of a camera’s shutter speed and f-number, such that all combinations that yield the same exposure have the same EV (for any fixed scene luminance).

The EV concept was developed in an attempt to simplify choosing among combinations of equivalent camera settings. Although all camera settings with the same EV nominally give the same exposure, they do not necessarily give the same picture. EV is also used to indicate an interval on the photographic exposure scale, with 1 EV corresponding to a standard power-of-2 exposure step, commonly referred to as a stop.

EV 0 corresponds to an exposure time of 1 sec and a relative aperture of f/1.0. If the EV is known, it can be used to select combinations of exposure time and f-number.

Note that EV is not the same as photographic exposure. Photographic exposure is defined as how much light hits the camera’s sensor; for a given scene it depends on the camera settings, mainly aperture and shutter speed. Exposure value (EV) is a number that represents the exposure setting of the camera.

Thus, strictly, EV is not a measure of luminance (indirect or reflected exposure) or illuminance (incident exposure); rather, an EV corresponds to a luminance (or illuminance) for which a camera with a given ISO speed would use the indicated EV to obtain the nominally correct exposure. Nonetheless, it is common practice among photographic equipment manufacturers to express luminance in EV for ISO 100 speed, as when specifying metering range or autofocus sensitivity.
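As a rough sketch of how a meter reading maps to an EV, the commonly quoted relations are EV = log2(L·S/K) for a luminance L in cd/m² and EV = log2(E·S/C) for an illuminance E in lux, where S is the ISO speed. The calibration constants below (K = 12.5 and C = 250) are typical published values and are assumed here for illustration only; manufacturers use slightly different ones. The function names are made up for this example.

```python
import math

# Assumed calibration constants; real meters vary by manufacturer.
K_REFLECTED = 12.5   # reflected-light (luminance) meter constant
C_INCIDENT = 250.0   # incident-light (illuminance) meter constant, flat receptor


def ev_from_luminance(luminance_cd_m2: float, iso: float = 100.0,
                      k: float = K_REFLECTED) -> float:
    """EV that a reflected-light meter would indicate for this luminance."""
    if luminance_cd_m2 <= 0 or iso <= 0:
        raise ValueError("luminance and ISO must be positive")
    return math.log2(luminance_cd_m2 * iso / k)


def ev_from_illuminance(illuminance_lux: float, iso: float = 100.0,
                        c: float = C_INCIDENT) -> float:
    """EV that an incident-light meter would indicate for this illuminance."""
    if illuminance_lux <= 0 or iso <= 0:
        raise ValueError("illuminance and ISO must be positive")
    return math.log2(illuminance_lux * iso / c)


if __name__ == "__main__":
    # With these constants, 0.125 cd/m² at ISO 100 sits at EV 0.
    print(ev_from_luminance(0.125))
    # Doubling the ISO speed raises the indicated EV by one stop.
    print(ev_from_luminance(100.0, iso=200.0) - ev_from_luminance(100.0, iso=100.0))
```

Under these assumptions, "EV at ISO 100" is just a logarithmic way of writing a luminance, which is why it shows up in metering-range and autofocus-sensitivity specifications.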

The exposure depends on two things: how much light gets through the lens to the camera’s sensor and for how long the sensor is exposed. The former is a function of the aperture value, while the latter is a function of the shutter speed. Exposure value is a number that represents this potential amount of light that could hit the sensor. It is important to understand that exposure value is a measure of how exposed the sensor is to light, not a measure of how much light actually hits the sensor. The exposure value is independent of how lit the scene is. For example, a given pair of aperture value and shutter speed represents the same exposure value whether the camera is used on a very bright day or on a dark night.

Each exposure value number represents all the possible shutter and aperture settings that result in the same exposure. Although the exposure value is the same for different combinations of aperture values and shutter speeds, the resulting photos can be very different: the aperture controls the depth of field, while the shutter speed controls how much motion is captured.

EV 0.0 is defined as the exposure when setting the aperture to f-number 1.0 and the shutter speed to 1 second. All other exposure values are relative to that number. Exposure values are on a base two logarithmic scale. This means that every single step of EV (plus or minus 1) represents the exposure (actual light that hits the sensor) being halved or doubled.

Formulas
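A minimal Python sketch of the definition above, assuming the standard relation EV = log2(N² / t), where N is the f-number and t is the exposure time in seconds. The function names are illustrative only and do not come from any particular library.

```python
import math


def exposure_value(f_number: float, shutter_seconds: float) -> float:
    """Exposure value from camera settings: EV = log2(N^2 / t)."""
    if f_number <= 0 or shutter_seconds <= 0:
        raise ValueError("f-number and shutter time must be positive")
    return math.log2(f_number ** 2 / shutter_seconds)


def relative_exposure(ev_from: float, ev_to: float) -> float:
    """Factor by which the light reaching the sensor changes when the
    settings move from ev_from to ev_to under the same scene lighting.
    Each +1 EV halves the light; each -1 EV doubles it."""
    return 2.0 ** (ev_from - ev_to)


if __name__ == "__main__":
    # f/1.0 at 1 s is the reference point, EV 0.
    print(exposure_value(1.0, 1.0))
    # f/2 at 1/4 s and f/4 at 1 s are equivalent settings with the same EV.
    print(exposure_value(2.0, 0.25), exposure_value(4.0, 1.0))
    # Going from EV 4 to EV 5 halves the light that reaches the sensor.
    print(relative_exposure(4.0, 5.0))
```

The two settings in the second example land on the same EV, but f/2 gives a shallower depth of field and 1/4 s freezes more motion than 1 s, which is the "same exposure, different picture" point made earlier.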

(more…)

-

Narcis Calin’s Galaxy Engine – A free, open source simulation software

In 2025 I decided to start learning how to code, so I installed Visual Studio and started looking into C++. After days of watching tutorials and guides about the basics of C++ and programming, I decided to make something physics-related. I started with a dot that fell to the ground, and then I wanted to simulate gravitational attraction, so I made 2 circles attracting each other. I thought it was really cool to see something I made with code actually work, so I kept building on top of that small, basic program.

And here we are after roughly 8 months of learning programming. This is Galaxy Engine, a simulation software I have been making ever since I started my learning journey. It can currently simulate gravity, dark matter, galaxies, the Big Bang, temperature, fluid dynamics, breakable solids, planetary interactions, etc. The program can run many tens of thousands of particles in real time on the CPU thanks to the Barnes-Hut algorithm, mixed with Morton curves.

It also includes its own PBR 2D path tracer with BVH optimizations. The path tracer can simulate a bunch of stuff like diffuse lighting, specular reflections, refraction, internal reflection, Fresnel, emission, dispersion, roughness, IOR, nested IOR and more! I tried to make the path tracer closer to traditional 3D render engines like V-Ray.

I honestly never imagined I would go this far with programming, and it has been an amazing learning experience so far. I think that mixing this knowledge with my 3D knowledge can unlock countless new possibilities. In case you are curious about Galaxy Engine, I made it completely free and open source so that anyone can build and compile it locally! You can find the source code on GitHub:

https://github.com/NarcisCalin/Galaxy-Engine