LATEST POSTS

-

Moondream Gaze Detection – Open source code

Gaze detection is useful for captioning videos, understanding social dynamics, and specific cases such as sports analytics or detecting when drivers or operators are distracted.

https://huggingface.co/spaces/moondream/gaze-demo

https://moondream.ai/blog/announcing-gaze-detection

-

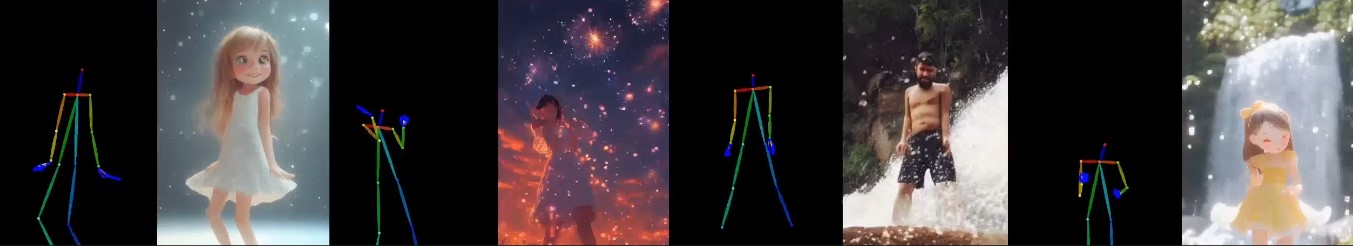

X-Dyna – Expressive Dynamic Human Image Animation

https://x-dyna.github.io/xdyna.github.io

A novel zero-shot, diffusion-based pipeline for animating a single human image using facial expressions and body movements derived from a driving video, that generates realistic, context-aware dynamics for both the subject and the surrounding environment.

-

Flex 1 Alpha – a pre-trained base 8 billion parameter rectified flow transformer

https://huggingface.co/ostris/Flex.1-alpha

Flex.1 started as the FLUX.1-schnell-training-adapter to make training LoRAs on FLUX.1-schnell possible.

-

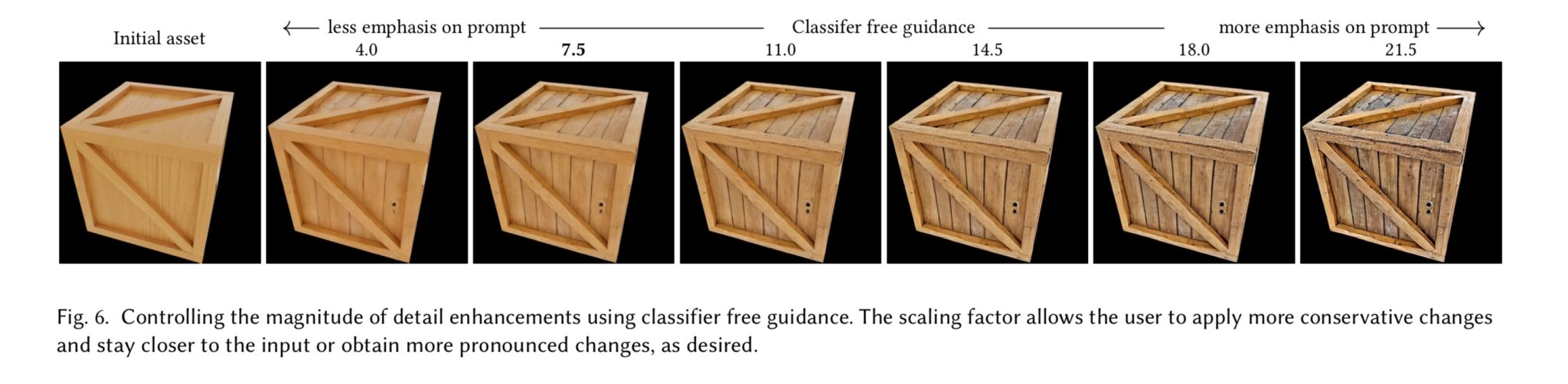

Generative Detail Enhancement for Physically Based Materials

https://arxiv.org/html/2502.13994v1

https://arxiv.org/pdf/2502.13994

A tool for enhancing the detail of physically based materials using an off-the-shelf diffusion model and inverse rendering.

-

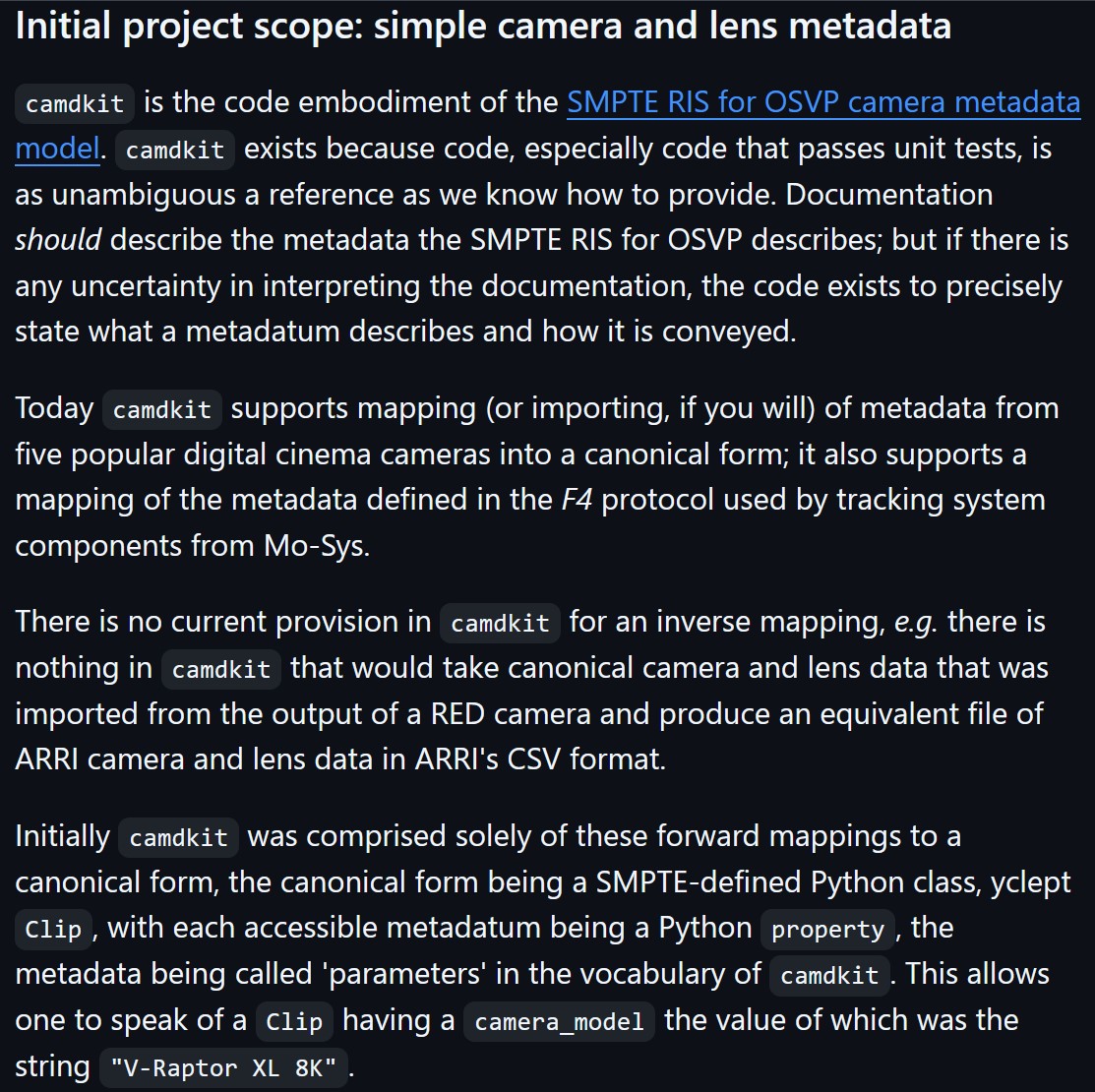

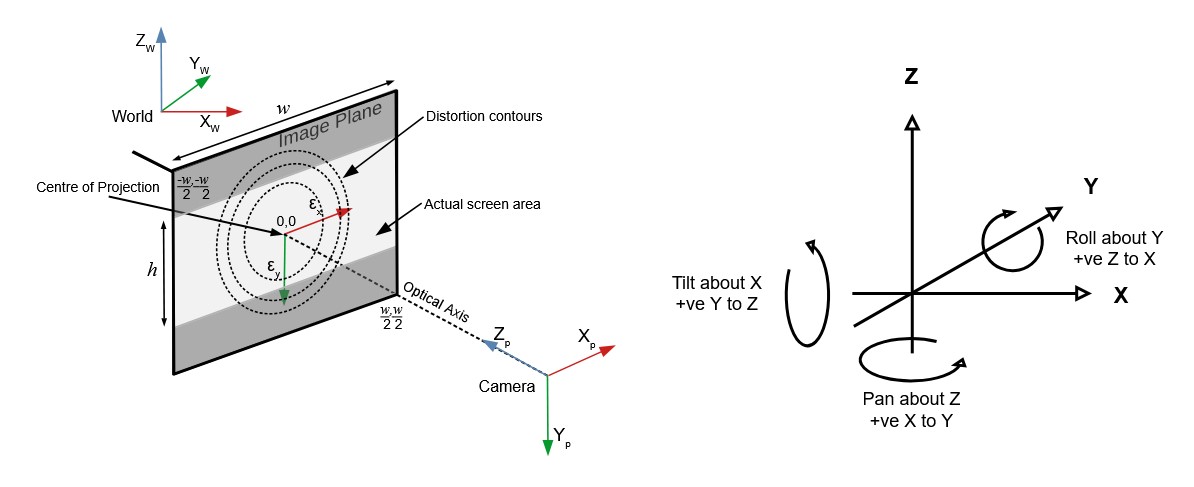

Camera Metadata Toolkit (camdkit) for Virtual Production

https://github.com/SMPTE/ris-osvp-metadata-camdkit

Today camdkit supports mapping (or importing, if you will) of metadata from five popular digital cinema cameras into a canonical form; it also supports a mapping of the metadata defined in the F4 protocol used by tracking system components from Mo-Sys.

-

OpenTrackIO – free and open-source protocol designed to improve interoperability in Virtual Production

OpenTrackIO defines the schema of JSON samples that contain a wide range of metadata about the device, its transform(s), and the associated camera and lens. The full schema is available for download from the project site.
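As a rough illustration of what consuming such a JSON sample could look like, here is a minimal Python sketch. The field names (`sampleId`, `transforms`, `translation`, `lens`, `focalLength`) are placeholders chosen for this example, not names taken from the published schema, so check them against the actual OpenTrackIO schema before relying on them.

```python
import json

# Hypothetical sample: every field name here is an assumption for illustration.
SAMPLE = """
{
  "sampleId": "example-0001",
  "transforms": [
    {
      "translation": {"x": 1.0, "y": 2.0, "z": 0.5},
      "rotation": {"pan": 90.0, "tilt": 0.0, "roll": 0.0}
    }
  ],
  "lens": {"focalLength": 35.0}
}
"""


def summarize(sample_text):
    """Parse one JSON sample and pull out a few values of interest."""
    sample = json.loads(sample_text)
    first = sample["transforms"][0]          # first transform in the chain
    t = first["translation"]
    return {
        "id": sample["sampleId"],
        "position": (t["x"], t["y"], t["z"]),
        # Lens data is treated as optional in this sketch.
        "focal_length": sample.get("lens", {}).get("focalLength"),
    }


if __name__ == "__main__":
    print(summarize(SAMPLE))
```

Because the samples are plain JSON, any language with a JSON parser can read them; validating against the real schema would be the natural next step.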

-

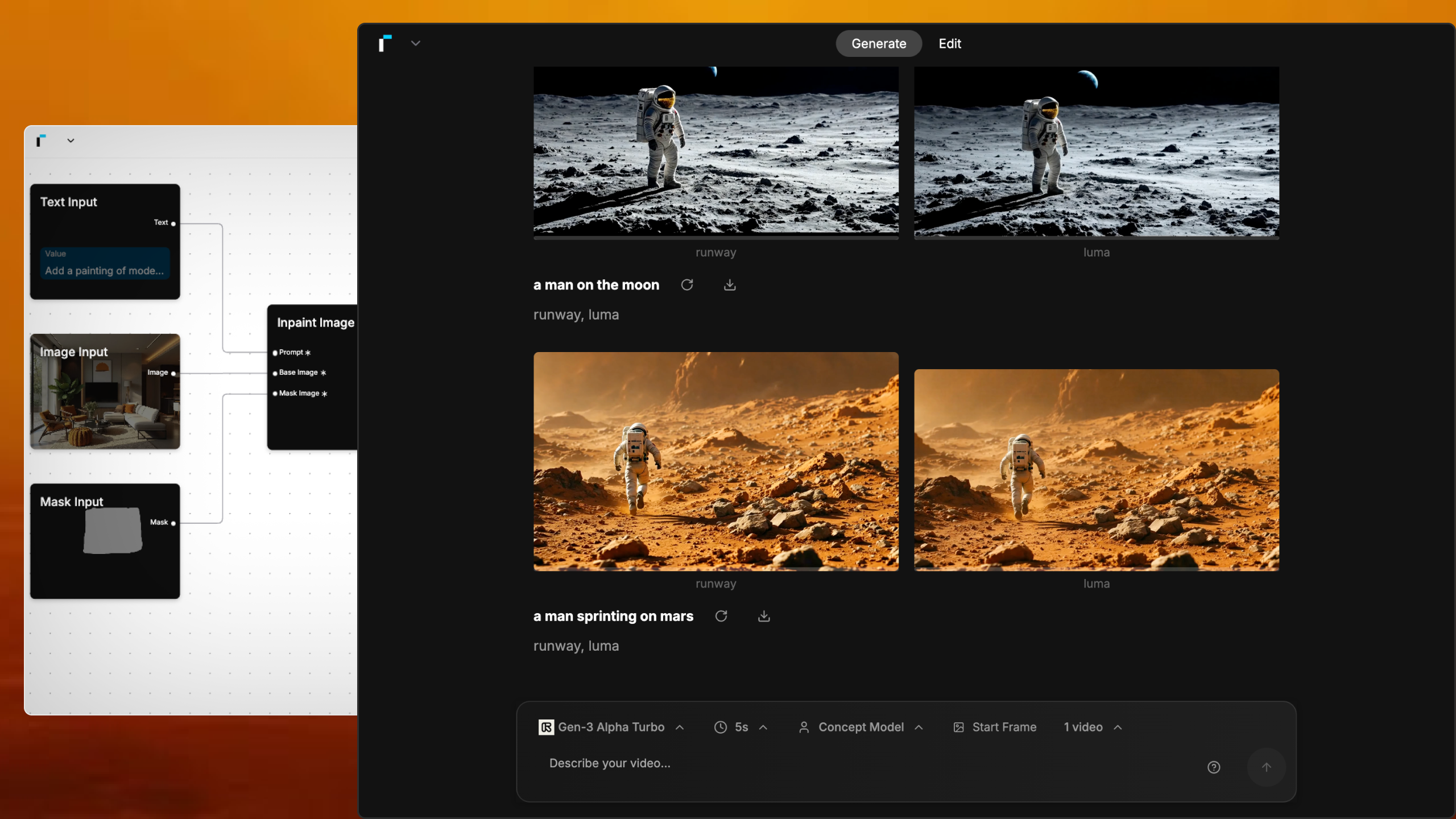

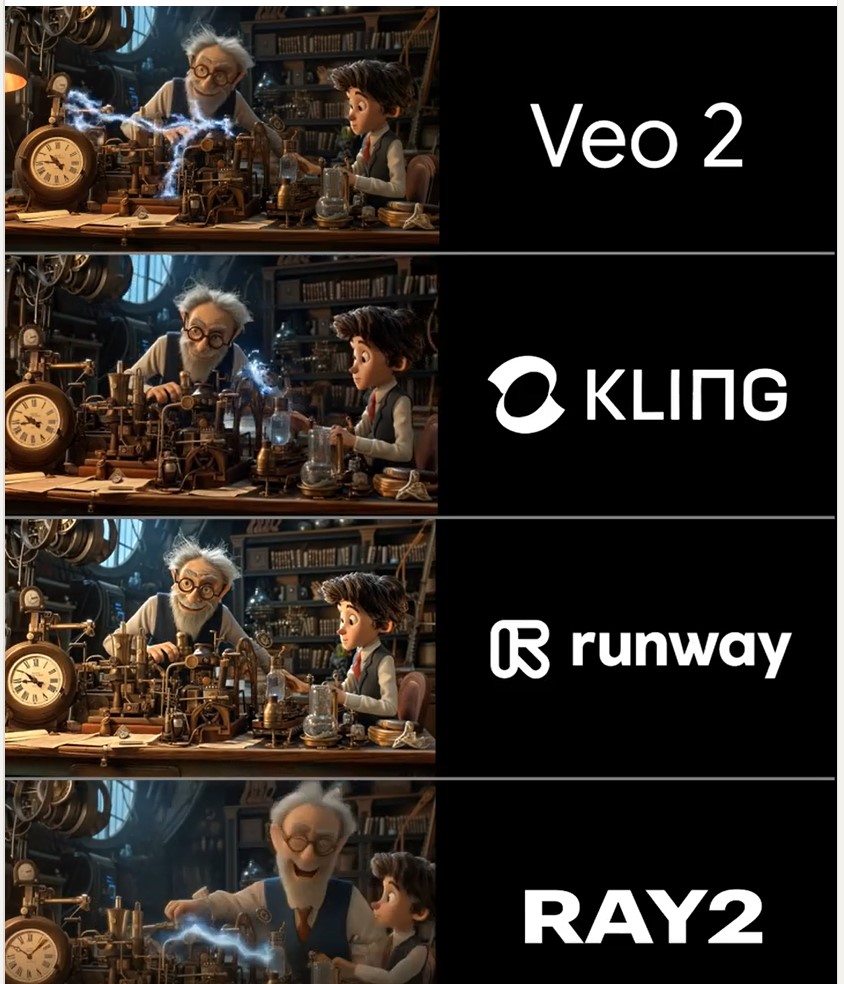

Martin Gent – Comparing current video AI models

https://www.linkedin.com/posts/martingent_imagineapp-veo2-kling-activity-7298979787962806272-n0Sn

🔹 𝗩𝗲𝗼 2 – After the legendary prompt adherence of Veo 2 T2V, I have to say I2V is a little disappointing, especially when it comes to camera moves. You often get those Sora-like jump-cuts too which can be annoying.

🔹 𝗞𝗹𝗶𝗻𝗴 1.6 Pro – Still the one to beat for I2V, both for image quality and prompt adherence. It’s also a lot cheaper than Veo 2. Generations can be slow, but are usually worth the wait.

🔹 𝗥𝘂𝗻𝘄𝗮𝘆 Gen 3 – Useful for certain shots, but overdue an update. The worst performer here by some margin. Bring on Gen 4!

🔹 𝗟𝘂𝗺𝗮 Ray 2 – I love the energy and inventiveness Ray 2 brings, but those came with some image quality issues. I want to test more with this model though for sure.

-

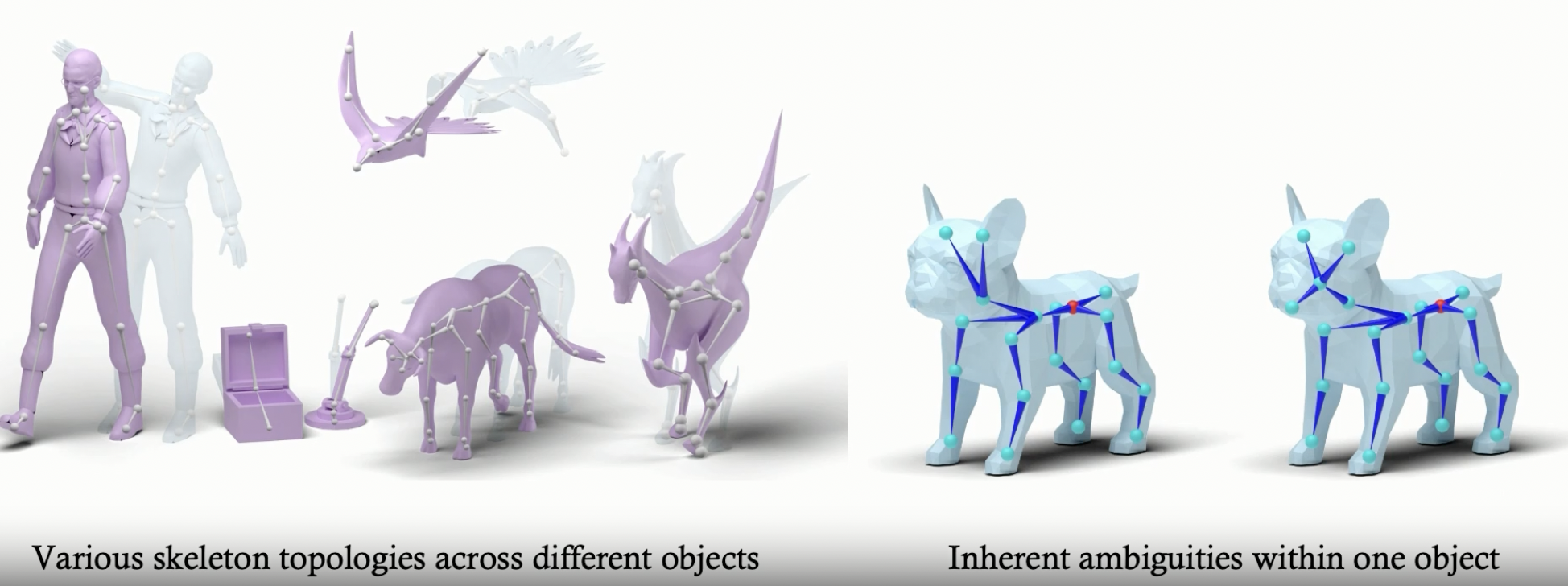

RigAnything – Template-Free Autoregressive Rigging for Diverse 3D Assets

https://www.liuisabella.com/RigAnything

RigAnything was developed through a collaboration between UC San Diego, Adobe Research, and Hillbot Inc. It addresses one of 3D animation’s most persistent challenges: automatic rigging.

- Template-Free Autoregressive Rigging. A transformer-based model that sequentially generates skeletons without predefined templates, enabling automatic rigging across diverse 3D assets through probabilistic joint prediction and skinning weight assignment.

- Support Arbitrary Input Pose. Generates high-quality skeletons for shapes in any pose through online joint pose augmentation during training, eliminating the common rest-pose requirement of existing methods and enabling broader real-world applications.

- Fast Rigging Speed. Achieves 20x faster performance than existing template-based methods, completing rigging in under 2 seconds per shape.

FEATURED POSTS

-

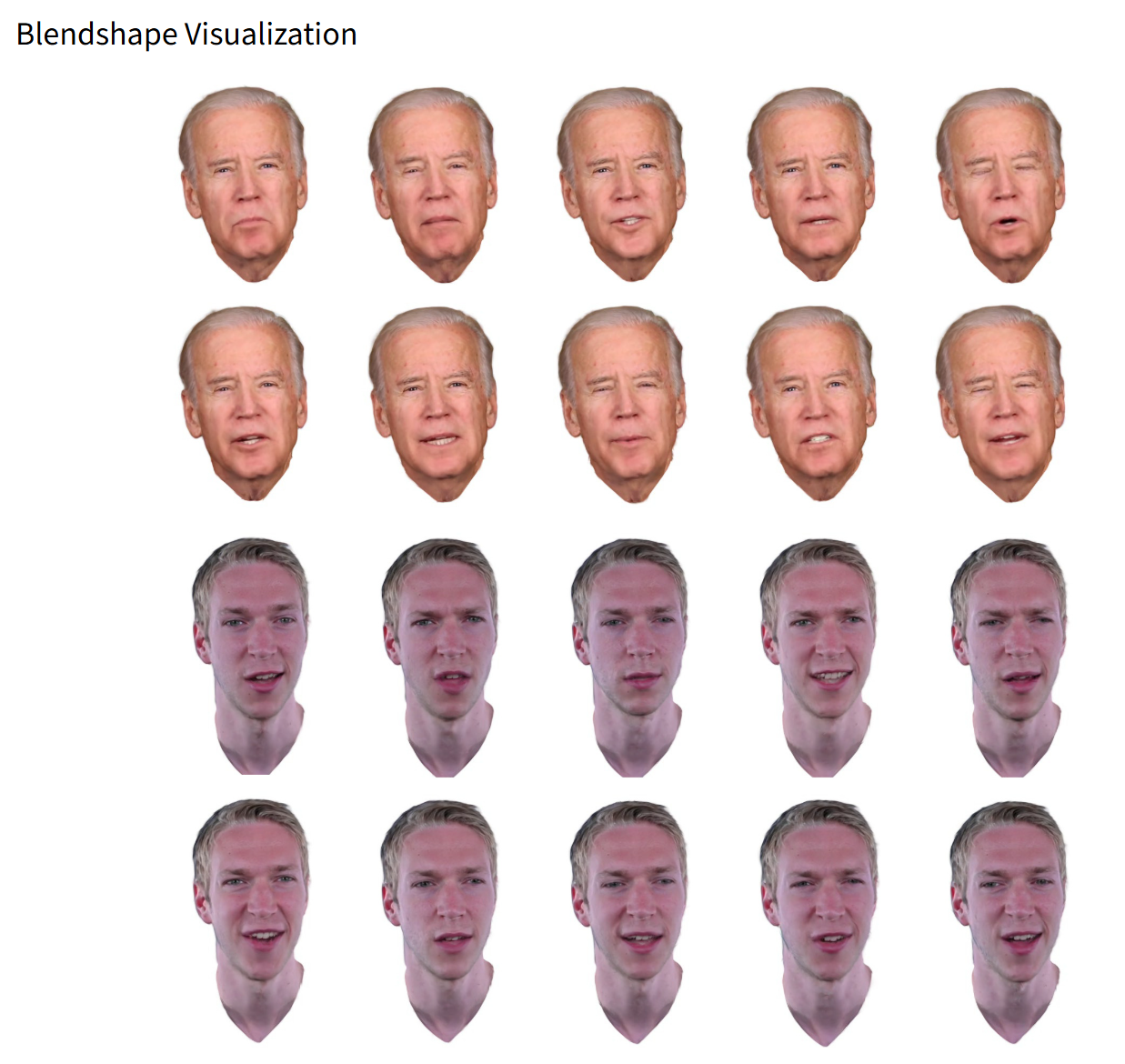

RGBAvatar – Reduced Gaussian Blendshapes for Online Modeling of Head Avatars

https://gapszju.github.io/RGBAvatar

A method for reconstructing photorealistic, animatable head avatars at speeds sufficient for on-the-fly reconstruction. Unlike prior approaches that utilize linear bases from 3D morphable models (3DMM) to model Gaussian blendshapes, our method maps tracked 3DMM parameters into reduced blendshape weights with an MLP, leading to a compact set of blendshape bases.

https://github.com/gapszju/RGBAvatar
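The core idea, an MLP that turns tracked 3DMM parameters into a small set of blendshape weights, can be sketched in a few lines of Python. This is not the authors' implementation: it assumes the common linear blendshape form (a base plus a weighted sum of bases), and the layer sizes and values are made up for the example.

```python
def mlp(x, layers):
    """Tiny MLP: `layers` is a list of (W, b) pairs, with ReLU between hidden layers."""
    for i, (W, b) in enumerate(layers):
        x = [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]
        if i < len(layers) - 1:              # no activation on the output layer
            x = [max(0.0, v) for v in x]
    return x


def blend(base, bases, weights):
    """Return base + sum_k weights[k] * bases[k], element-wise.

    `base` and each entry of `bases` stand in for flattened Gaussian attributes.
    """
    return [
        b + sum(w * B[i] for w, B in zip(weights, bases))
        for i, b in enumerate(base)
    ]


if __name__ == "__main__":
    # Hypothetical sizes: 2 tracked parameters mapped to 1 reduced blendshape weight.
    layers = [
        ([[1.0, 0.0], [0.0, -1.0]], [0.0, 0.0]),
        ([[1.0, 1.0]], [0.5]),
    ]
    weights = mlp([2.0, 3.0], layers)
    print(blend([1.0, 1.0], [[1.0, 0.0]], weights))
```

The point of the reduced weights is that the number of bases in `bases` can be much smaller than the dimension of the tracked parameters.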

-

Capturing textures albedo

Building a Portable PBR Texture Scanner by Stephane Lb

http://rtgfx.com/pbr-texture-scanner/

How To Split Specular And Diffuse In Real Images, by John Hable

http://filmicworlds.com/blog/how-to-split-specular-and-diffuse-in-real-images/

Capturing albedo using a Spectralon

https://www.activision.com/cdn/research/Real_World_Measurements_for_Call_of_Duty_Advanced_Warfare.pdf

Spectralon is a Teflon-based pressed powder that comes closest to being a pure Lambertian diffuse material that reflects 100% of all light. If we take an HDR photograph of the Spectralon alongside the material to be measured, we can derive the diffuse albedo of that material.

The process to capture diffuse reflectance is very similar to the one outlined by Hable.

1. We put a linear polarizing filter in front of the camera lens and a second linear polarizing filter in front of a modeling light or a flash such that the two filters are oriented perpendicular to each other, i.e. cross polarized.

2. We place Spectralon close to and parallel with the material we are capturing and take bracketed shots of the setup. Typically, we’ll take nine photographs, from -4EV to +4EV in 1EV increments.

3. We convert the bracketed shots to a linear HDR image. We found that many HDR packages do not produce an HDR image in which the pixel values are linear. PTGui is an example of a package which does generate a linear HDR image. At this point, because of the cross polarization, the image is one of surface diffuse response.

4. We open the file in Photoshop and normalize the image by color picking the Spectralon, filling a new layer with that color and setting that layer to “Divide”. This sets the Spectralon to 1 in the image. All other color values are relative to this so we can consider them as diffuse albedo.
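The arithmetic behind steps 2 and 4 can be sketched in plain Python. This is a minimal illustration, not the workflow from the paper: pixels are simple `(r, g, b)` tuples of linear values, and the function names are invented for this example.

```python
def exposure_scales(evs):
    """Relative exposure of each bracket: every +1 EV doubles the captured light."""
    return [2.0 ** ev for ev in evs]


def mean_color(pixels):
    """Average a list of linear (r, g, b) pixels, e.g. a patch picked on the Spectralon."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def divide_by_reference(pixels, reference):
    """Per-channel division, the same operation as a 'Divide' layer filled with `reference`."""
    return [tuple(p[c] / reference[c] for c in range(3)) for p in pixels]


if __name__ == "__main__":
    # Nine brackets from -4 EV to +4 EV span a 256:1 exposure range.
    print(exposure_scales(range(-4, 5)))

    spectralon_patch = [(0.8, 0.8, 0.8), (0.8, 0.8, 0.8)]   # made-up linear values
    material = [(0.2, 0.4, 0.1)]
    reference = mean_color(spectralon_patch)
    print(divide_by_reference(material, reference))
```

After the division the Spectralon patch itself evaluates to 1 in every channel, so the remaining values can be read directly as diffuse albedo, provided the input really is linear.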

-

SourceTree vs Github Desktop – Which one to use

Sourcetree and GitHub Desktop are both free, GUI-based Git clients aimed at simplifying version control for developers. While they share the same core purpose—making Git more accessible—they differ in features, UI design, integration options, and target audiences.

Installation & Setup

- Sourcetree

- Download: https://www.sourcetreeapp.com/

- Supported OS: Windows 10+, macOS 10.13+

- Prerequisites: Comes bundled with its own Git, or can be pointed to a system Git install.

- Initial Setup: Wizard guides SSH key generation, authentication with Bitbucket/GitHub/GitLab.

- GitHub Desktop

- Download: https://desktop.github.com/

- Supported OS: Windows 10+, macOS 10.15+

- Prerequisites: Bundled Git; seamless login with GitHub.com or GitHub Enterprise.

- Initial Setup: One-click sign-in with GitHub; auto-syncs repositories from your GitHub account.

Feature Comparison

| Feature | Sourcetree | GitHub Desktop |
| --- | --- | --- |
| Branch Visualization | Detailed graph view with drag-and-drop for rebasing/merging | Linear graph, simpler but less configurable |
| Staging & Commit | File-by-file staging, inline diff view | All-or-nothing staging, side-by-side diff |
| Interactive Rebase | Full support via UI | Basic support via command line only |
| Conflict Resolution | Built-in merge tool integration (DiffMerge, Beyond Compare) | Contextual conflict editor with choice panels |
| Submodule Management | Native submodule support | Limited; requires CLI |
| Custom Actions / Hooks | Define custom actions (e.g., launch scripts) | No UI for custom Git hooks |
| Git Flow / Hg Flow | Built-in support | None |
| Performance | Can lag on very large repos | Generally snappier on medium-sized repos |
| Memory Footprint | Higher RAM usage | Lightweight |
| Platform Integration | Atlassian Bitbucket, Jira | Deep GitHub.com / Enterprise integration |
| Learning Curve | Steeper for beginners | Beginner-friendly |

-

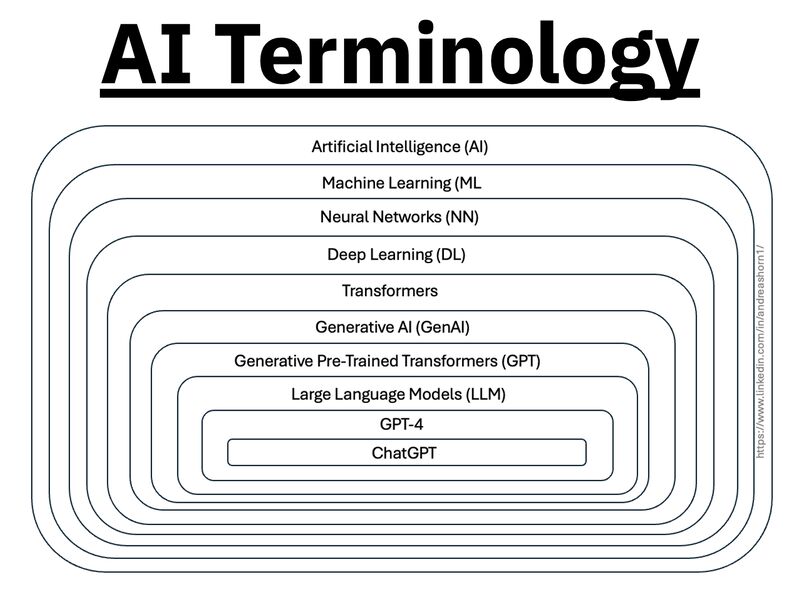

Types of AI Explained in a few Minutes – AI Glossary

1️⃣ 𝗔𝗿𝘁𝗶𝗳𝗶𝗰𝗶𝗮𝗹 𝗜𝗻𝘁𝗲𝗹𝗹𝗶𝗴𝗲𝗻𝗰𝗲 (𝗔𝗜) – The broadest category, covering automation, reasoning, and decision-making. Early AI was rule-based, but today, it’s mainly data-driven.

2️⃣ 𝗠𝗮𝗰𝗵𝗶𝗻𝗲 𝗟𝗲𝗮𝗿𝗻𝗶𝗻𝗴 (𝗠𝗟) – AI that learns patterns from data without explicit programming. Includes decision trees, clustering, and regression models.

3️⃣ 𝗡𝗲𝘂𝗿𝗮𝗹 𝗡𝗲𝘁𝘄𝗼𝗿𝗸𝘀 (𝗡𝗡) – A subset of ML, inspired by the human brain, designed for pattern recognition and feature extraction.

4️⃣ 𝗗𝗲𝗲𝗽 𝗟𝗲𝗮𝗿𝗻𝗶𝗻𝗴 (𝗗𝗟) – Multi-layered neural networks that drive many modern AI advances, enabling image recognition, speech processing, and more.

5️⃣ 𝗧𝗿𝗮𝗻𝘀𝗳𝗼𝗿𝗺𝗲𝗿𝘀 – A revolutionary deep learning architecture introduced by Google in 2017 that allows models to understand and generate language efficiently.

6️⃣ 𝗚𝗲𝗻𝗲𝗿𝗮𝘁𝗶𝘃𝗲 𝗔𝗜 (𝗚𝗲𝗻𝗔𝗜) – AI that doesn’t just analyze data—it creates. From text and images to music and code, this layer powers today’s most advanced AI models.

7️⃣ 𝗚𝗲𝗻𝗲𝗿𝗮𝘁𝗶𝘃𝗲 𝗣𝗿𝗲-𝗧𝗿𝗮𝗶𝗻𝗲𝗱 𝗧𝗿𝗮𝗻𝘀𝗳𝗼𝗿𝗺𝗲𝗿𝘀 (𝗚𝗣𝗧) – A specific subset of Generative AI that uses transformers for text generation.

8️⃣ 𝗟𝗮𝗿𝗴𝗲 𝗟𝗮𝗻𝗴𝘂𝗮𝗴𝗲 𝗠𝗼𝗱𝗲𝗹𝘀 (𝗟𝗟𝗠) – Massive AI models trained on extensive datasets to understand and generate human-like language.

9️⃣ 𝗚𝗣𝗧-4 – One of the most advanced LLMs, built on transformer architecture, trained on vast datasets to generate human-like responses.

🔟 𝗖𝗵𝗮𝘁𝗚𝗣𝗧 – A specific application of GPT-4, optimized for conversational AI and interactive use.