BREAKING NEWS

LATEST POSTS

-

Pyper – a flexible framework for concurrent and parallel data-processing in Python

Pyper is a flexible framework for concurrent and parallel data-processing, based on functional programming patterns.

https://github.com/pyper-dev/pyper

-

Jacob Bartlett – Apple is Killing Swift

https://blog.jacobstechtavern.com/p/apple-is-killing-swift

Jacob Bartlett argues that Swift, once envisioned as a simple and composable programming language by its creator Chris Lattner, has become overly complex due to Apple’s governance. Bartlett highlights that Swift now contains 217 reserved keywords, deviating from its original goal of simplicity. He contrasts Swift’s governance model, where Apple serves as the project lead and arbiter, with other languages like Python and Rust, which have more community-driven or balanced governance structures. Bartlett suggests that Apple’s control has led to Swift’s current state, moving away from Lattner’s initial vision.

-

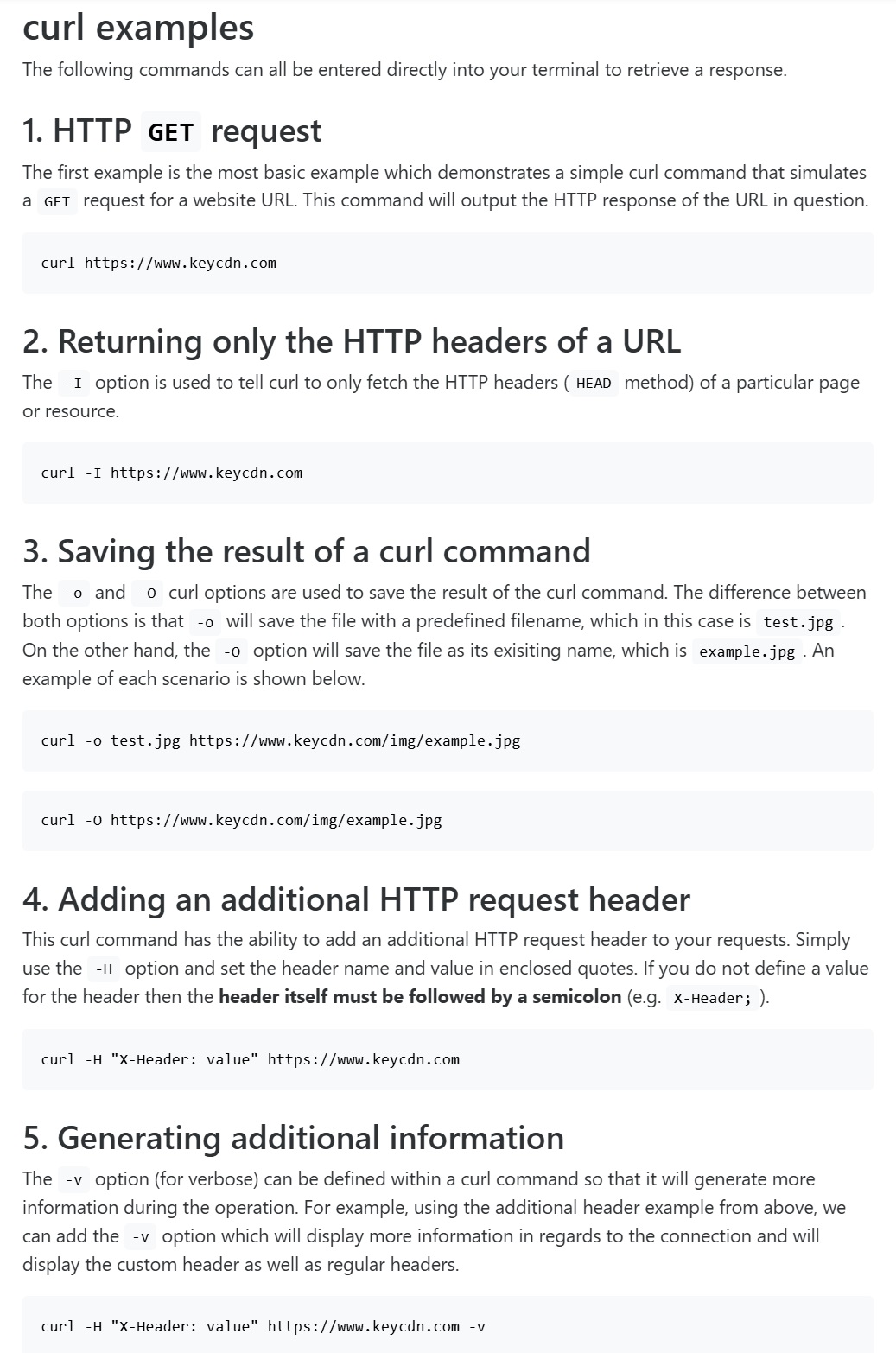

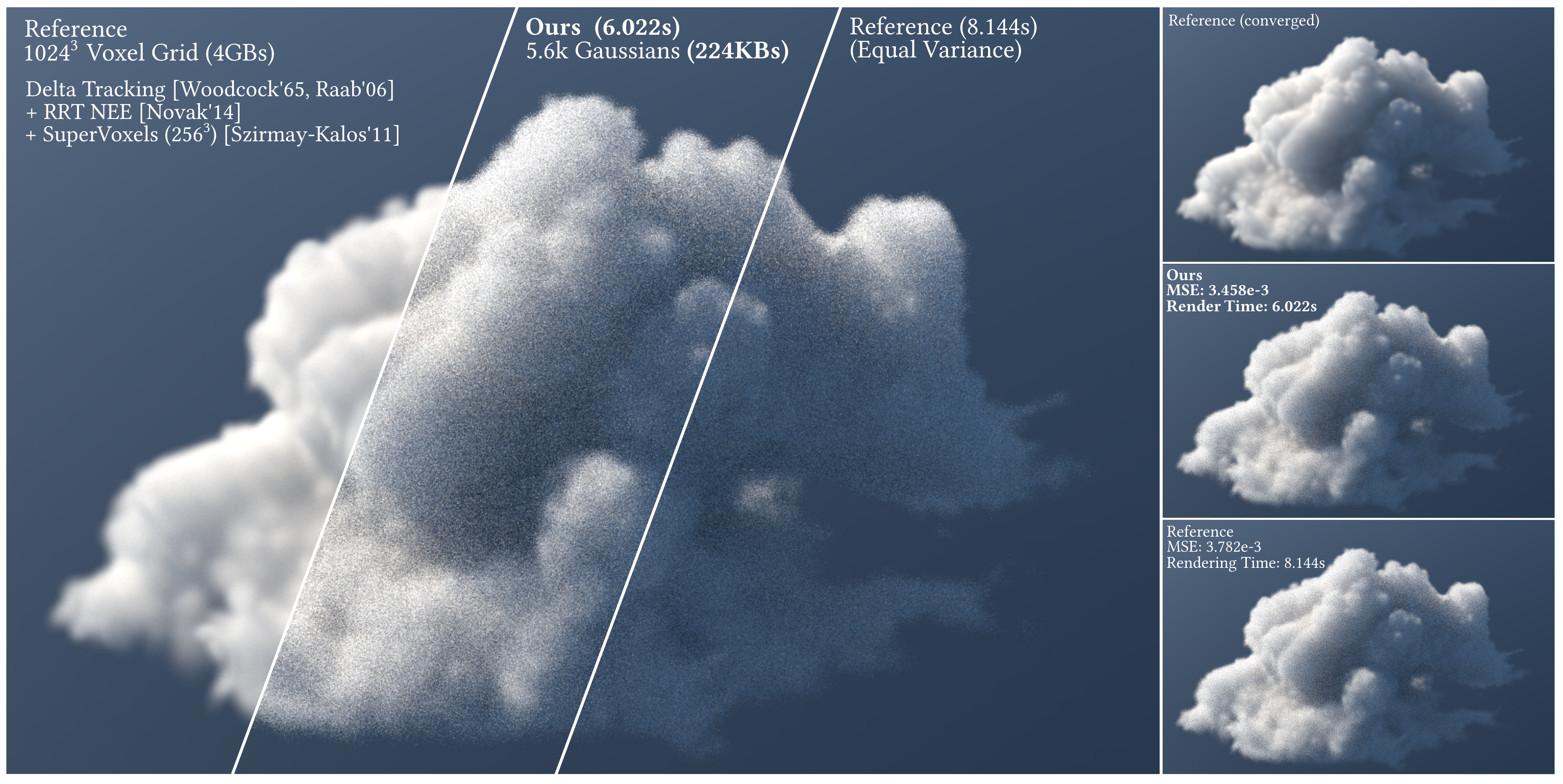

Don’t Splat your Gaussians – Volumetric Ray-Traced Primitives for Modeling and Rendering Scattering and Emissive Media

https://arcanous98.github.io/projectPages/gaussianVolumes.html

We propose a compact and efficient alternative to existing volumetric representations for rendering such as voxel grids.

-

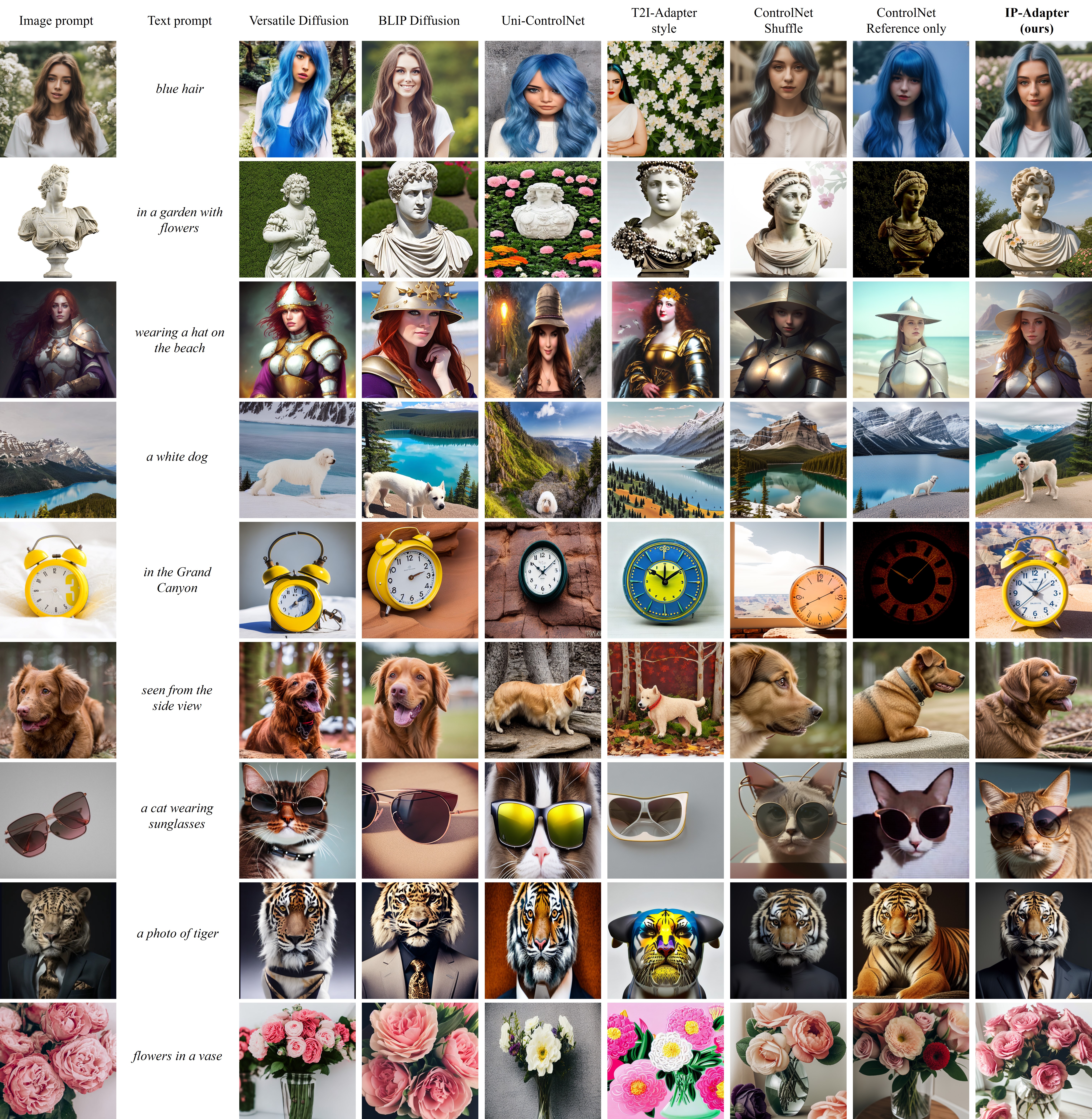

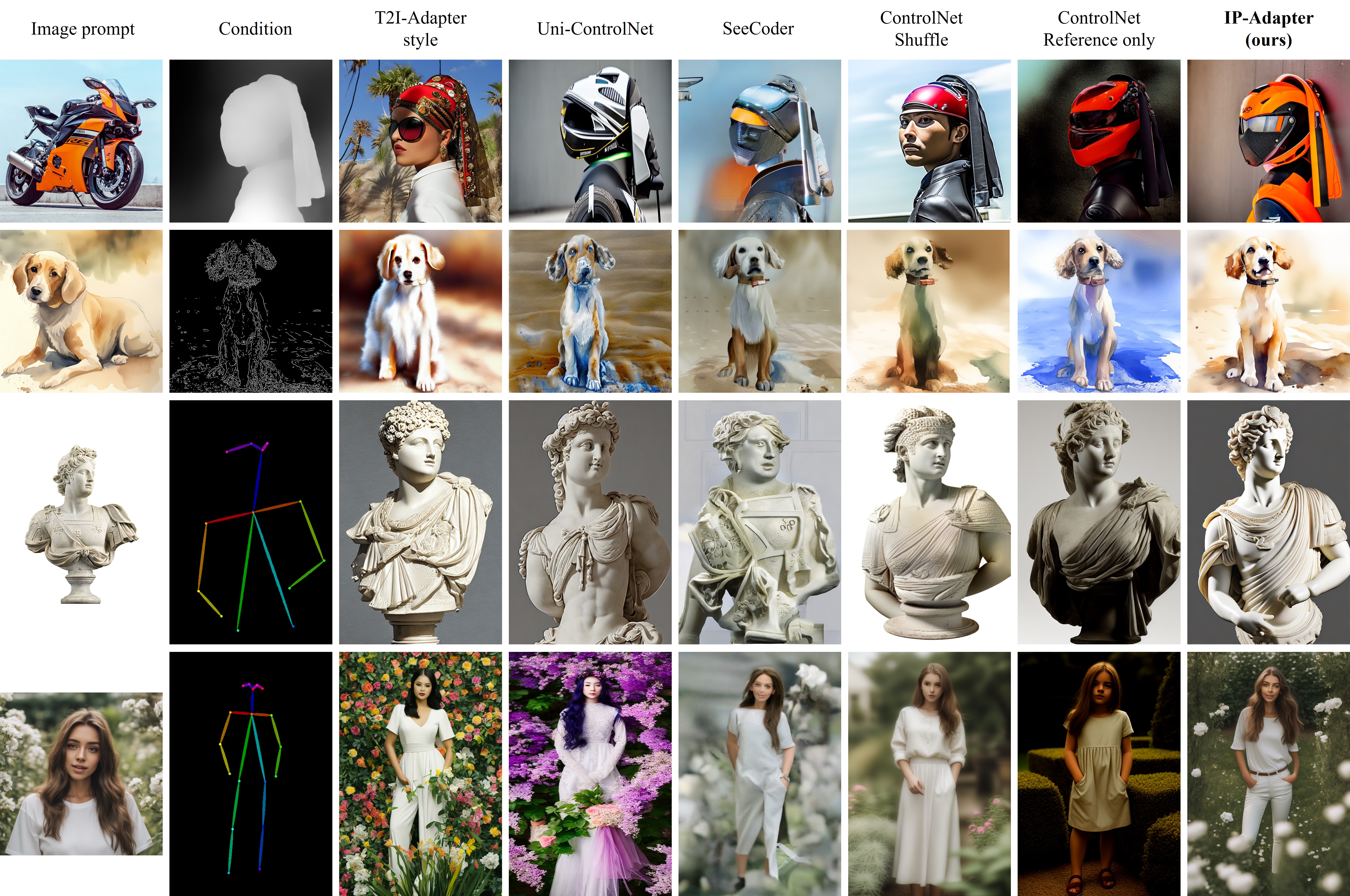

IPAdapter – Text Compatible Image Prompt Adapter for Text-to-Image Image-to-Image Diffusion Models and ComfyUI implementation

github.com/tencent-ailab/IP-Adapter

The IPAdapter are very powerful models for image-to-image conditioning. The subject or even just the style of the reference image(s) can be easily transferred to a generation. Think of it as a 1-image lora. They are an effective and lightweight adapter to achieve image prompt capability for the pre-trained text-to-image diffusion models. An IP-Adapter with only 22M parameters can achieve comparable or even better performance to a fine-tuned image prompt model.

Once the IP-Adapter is trained, it can be directly reusable on custom models fine-tuned from the same base model.The IP-Adapter is fully compatible with existing controllable tools, e.g., ControlNet and T2I-Adapter.

-

SPAR3D – Stable Point-Aware Reconstruction of 3D Objects from Single Images

SPAR3D is a fast single-image 3D reconstructor with intermediate point cloud generation, which allows for interactive user edits and achieves state-of-the-art performance.

https://github.com/Stability-AI/stable-point-aware-3d

https://stability.ai/news/stable-point-aware-3d?utm_source=x&utm_medium=social&utm_campaign=SPAR3D

-

MiniMax-01 goes open source

MiniMax is thrilled to announce the release of the MiniMax-01 series, featuring two groundbreaking models:

MiniMax-Text-01: A foundational language model.

MiniMax-VL-01: A visual multi-modal model.Both models are now open-source, paving the way for innovation and accessibility in AI development!

🔑 Key Innovations

1. Lightning Attention Architecture: Combines 7/8 Lightning Attention with 1/8 Softmax Attention, delivering unparalleled performance.

2. Massive Scale with MoE (Mixture of Experts): 456B parameters with 32 experts and 45.9B activated parameters.

3. 4M-Token Context Window: Processes up to 4 million tokens, 20–32x the capacity of leading models, redefining what’s possible in long-context AI applications.💡 Why MiniMax-01 Matters

1. Innovative Architecture for Top-Tier Performance

The MiniMax-01 series introduces the Lightning Attention mechanism, a bold alternative to traditional Transformer architectures, delivering unmatched efficiency and scalability.2. 4M Ultra-Long Context: Ushering in the AI Agent Era

With the ability to handle 4 million tokens, MiniMax-01 is designed to lead the next wave of agent-based applications, where extended context handling and sustained memory are critical.3. Unbeatable Cost-Effectiveness

Through proprietary architectural innovations and infrastructure optimization, we’re offering the most competitive pricing in the industry:

$0.2 per million input tokens

$1.1 per million output tokens🌟 Experience the Future of AI Today

We believe MiniMax-01 is poised to transform AI applications across industries. Whether you’re building next-gen AI agents, tackling ultra-long context tasks, or exploring new frontiers in AI, MiniMax-01 is here to empower your vision.✅ Try it now for free: hailuo.ai

📄 Read the technical paper: filecdn.minimax.chat/_Arxiv_MiniMax_01_Report.pdf

🌐 Learn more: minimaxi.com/en/news/minimax-01-series-2

💡API Platform: intl.minimaxi.com/

FEATURED POSTS

-

OpenAI may go bankrupt by 2024, AI bot costs company $700,000 every day

While OpenAI and ChatGPT opened up to a wild start and had a record-breaking number of sign-ups in its initial days, it has steadily seen its user base decline over the last couple of months. According to SimilarWeb, July 2023 saw its user base drop by 12 per cent compared to June – it went from 1.7 billion users to 1.5 billion users. Do note that this data only shows users who visited the ChatGPT website, and does not account for users who are using OpenAI’s APIs

-

Using a $50 Schneider Enlarging lens for negatives scanning for macro photography

https://www.closeuphotography.com/50-dollar-componon-vs-mitutoyo-objective

What if you could find a lens for less than $100 that could produce image quality as good as a microscope objective

-

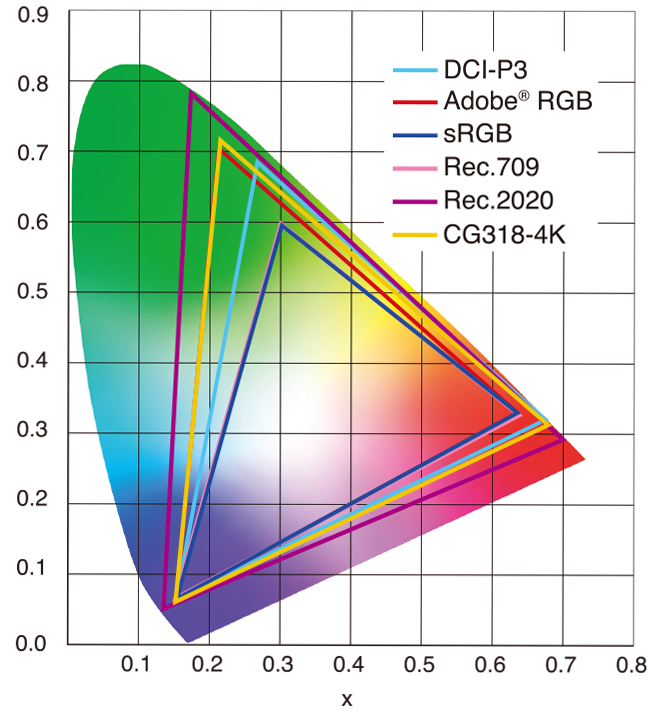

sRGB vs REC709 – An introduction and FFmpeg implementations

1. Basic Comparison

- What they are

- sRGB: A standard “web”/computer-display RGB color space defined by IEC 61966-2-1. It’s used for most monitors, cameras, printers, and the vast majority of images on the Internet.

- Rec. 709: An HD-video color space defined by ITU-R BT.709. It’s the go-to standard for HDTV broadcasts, Blu-ray discs, and professional video pipelines.

- Why they exist

- sRGB: Ensures consistent colors across different consumer devices (PCs, phones, webcams).

- Rec. 709: Ensures consistent colors across video production and playback chains (cameras → editing → broadcast → TV).

- What you’ll see

- On your desktop or phone, images tagged sRGB will look “right” without extra tweaking.

- On an HDTV or video-editing timeline, footage tagged Rec. 709 will display accurate contrast and hue on broadcast-grade monitors.

2. Digging Deeper

Feature sRGB Rec. 709 White point D65 (6504 K), same for both D65 (6504 K) Primaries (x,y) R: (0.640, 0.330) G: (0.300, 0.600) B: (0.150, 0.060) R: (0.640, 0.330) G: (0.300, 0.600) B: (0.150, 0.060) Gamut size Identical triangle on CIE 1931 chart Identical to sRGB Gamma / transfer Piecewise curve: approximate 2.2 with linear toe Pure power-law γ≈2.4 (often approximated as 2.2 in practice) Matrix coefficients N/A (pure RGB usage) Y = 0.2126 R + 0.7152 G + 0.0722 B (Rec. 709 matrix) Typical bit-depth 8-bit/channel (with 16-bit variants) 8-bit/channel (10-bit for professional video) Usage metadata Tagged as “sRGB” in image files (PNG, JPEG, etc.) Tagged as “bt709” in video containers (MP4, MOV) Color range Full-range RGB (0–255) Studio-range Y′CbCr (Y′ [16–235], Cb/Cr [16–240])

Why the Small Differences Matter

(more…) - What they are

-

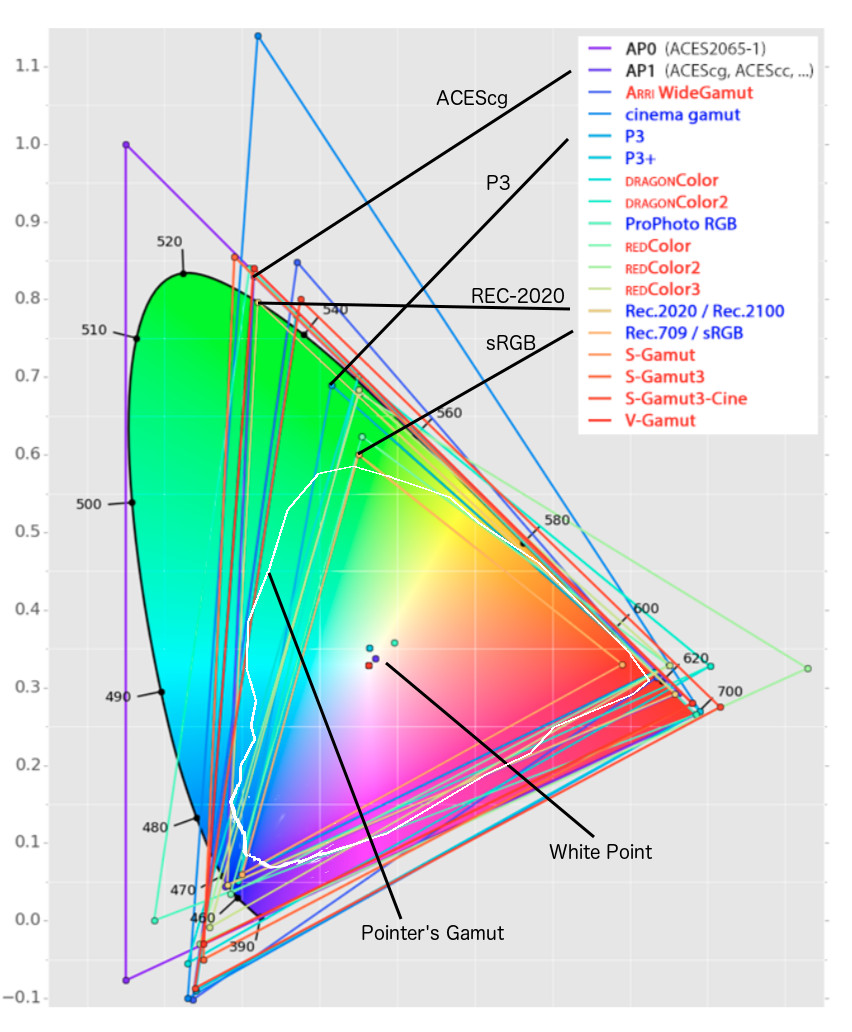

Rec-2020 – TVs new color gamut standard used by Dolby Vision?

https://www.hdrsoft.com/resources/dri.html#bit-depth

The dynamic range is a ratio between the maximum and minimum values of a physical measurement. Its definition depends on what the dynamic range refers to.

For a scene: Dynamic range is the ratio between the brightest and darkest parts of the scene.

For a camera: Dynamic range is the ratio of saturation to noise. More specifically, the ratio of the intensity that just saturates the camera to the intensity that just lifts the camera response one standard deviation above camera noise.

For a display: Dynamic range is the ratio between the maximum and minimum intensities emitted from the screen.

The Dynamic Range of real-world scenes can be quite high — ratios of 100,000:1 are common in the natural world. An HDR (High Dynamic Range) image stores pixel values that span the whole tonal range of real-world scenes. Therefore, an HDR image is encoded in a format that allows the largest range of values, e.g. floating-point values stored with 32 bits per color channel. Another characteristics of an HDR image is that it stores linear values. This means that the value of a pixel from an HDR image is proportional to the amount of light measured by the camera.

For TVs HDR is great, but it’s not the only new TV feature worth discussing.

(more…)