LATEST POSTS

-

OpenAI Backs Critterz, an AI-Made Animated Feature Film

https://www.wsj.com/tech/ai/openai-backs-ai-made-animated-feature-film-389f70b0

The film, called ‘Critterz,’ aims to debut at the Cannes Film Festival and will leverage the startup’s AI tools and resources.

“Critterz,” about forest creatures who go on an adventure after their village is disrupted by a stranger, is the brainchild of Chad Nelson, a creative specialist at OpenAI. Nelson started sketching out the characters three years ago while trying to make a short film with what was then OpenAI’s new DALL-E image-generation tool.

-

AI and the Law: Anthropic to Pay $1.5 Billion to Settle Book Piracy Class Action Lawsuit

https://variety.com/2025/digital/news/anthropic-class-action-settlement-billion-1236509571

The settlement amounts to about $3,000 per book and is believed to be the largest ever recovery in a U.S. copyright case, according to the plaintiffs’ attorneys.

-

Sir Peter Jackson’s Wētā FX records $140m loss in two years, amid staff layoffs

https://www.thepost.co.nz/business/360813799/weta-fx-posts-59m-loss-amid-industry-headwinds

Wētā FX, Sir Peter Jackson’s largest business, has posted a $59.3 million loss for the year to March 31, an improvement on an $83m loss the previous year.

-

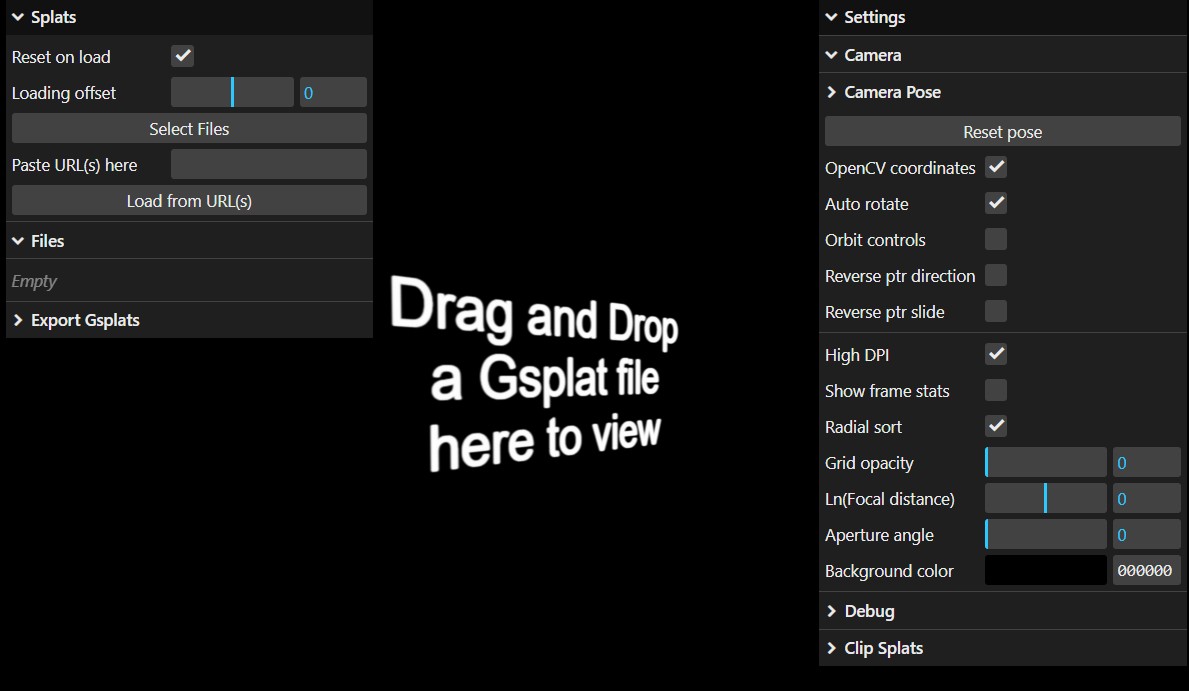

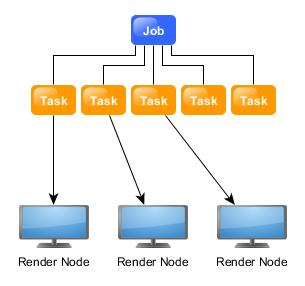

ComfyUI Thinkbox Deadline plugin

Submit ComfyUI workflows to a Thinkbox Deadline render farm.

Features

- Submit ComfyUI workflows directly to Deadline

- Batch rendering with seed variation

- Real-time progress monitoring via Deadline Monitor

- Configurable pools, groups, and priorities
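The "batch rendering with seed variation" feature can be pictured as cloning one API-format ComfyUI workflow per seed. The sketch below is not the plugin's code: `seed_variants` and `to_prompt_request` are hypothetical helpers. It relies on ComfyUI's API-format JSON (node id mapped to `class_type` and `inputs`), where `KSampler` nodes carry a `seed` input, and on ComfyUI's `/prompt` endpoint accepting a body of the form `{"prompt": workflow}`.

```python
import copy
import json


def seed_variants(workflow, seeds, class_types=("KSampler",)):
    """Return one deep copy of an API-format workflow per seed.

    Only nodes whose class_type is listed and which already expose a
    "seed" input are changed (KSamplerAdvanced uses "noise_seed" instead,
    so it is not touched by default).
    """
    variants = []
    for seed in seeds:
        wf = copy.deepcopy(workflow)  # never mutate the caller's workflow
        for node in wf.values():
            inputs = node.get("inputs", {})
            if node.get("class_type") in class_types and "seed" in inputs:
                inputs["seed"] = seed
        variants.append(wf)
    return variants


def to_prompt_request(workflow):
    """Serialize a workflow as the JSON body ComfyUI's /prompt endpoint expects."""
    return json.dumps({"prompt": workflow})


if __name__ == "__main__":
    # Hypothetical minimal workflow: one sampler plus a save node.
    wf = {
        "3": {"class_type": "KSampler", "inputs": {"seed": 0, "steps": 20}},
        "9": {"class_type": "SaveImage", "inputs": {"filename_prefix": "critter"}},
    }
    base_seed, count = 1000, 4
    for variant in seed_variants(wf, range(base_seed, base_seed + count)):
        print(to_prompt_request(variant))
```

On a farm, each variant would typically become one task, so the seeds render in parallel across workers.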

https://github.com/doubletwisted/ComfyUI-Deadline-Plugin

https://docs.thinkboxsoftware.com/products/deadline/latest/1_User%20Manual/manual/overview.html

Deadline 10 is a cross-platform render farm management tool for Windows, Linux, and macOS. It gives users control of their rendering resources and can be used on-premises, in the cloud, or both. It handles asset syncing to the cloud, manages data transfers, and supports tagging for cost tracking purposes.

Deadline 10’s Remote Connection Server allows communication over HTTPS, improving performance and scalability. Where supported, users can draw on usage-based licensing to supplement their existing fixed pool of software licenses when rendering through Deadline 10.
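Deadline jobs are commonly submitted as two plain-text `key=value` files, a job info file and a plugin info file, passed to `deadlinecommand`. The sketch below writes those files with the pool, group and priority settings the plugin exposes. Assumptions: the plugin name `ComfyUI` and every plugin info key are hypothetical; check the plugin's own documentation for the real names. `Plugin`, `Name`, `Pool`, `Group`, `Priority` and `Frames` are standard job info keys.

```python
import tempfile
from pathlib import Path


def write_job_files(out_dir, name, pool, group, priority, frames, plugin_info):
    """Write Deadline job info and plugin info files (one key=value per line)."""
    if not 0 <= priority <= 100:  # 100 is Deadline's default maximum priority
        raise ValueError("priority must be between 0 and 100")
    job_info = {
        "Plugin": "ComfyUI",  # hypothetical plugin name
        "Name": name,
        "Pool": pool,
        "Group": group,
        "Priority": str(priority),
        "Frames": frames,  # e.g. one frame per seed variant (assumption)
    }
    out = Path(out_dir)
    job_path = out / "job_info.job"
    plugin_path = out / "plugin_info.job"
    job_path.write_text("".join(f"{k}={v}\n" for k, v in job_info.items()))
    plugin_path.write_text("".join(f"{k}={v}\n" for k, v in plugin_info.items()))
    return job_path, plugin_path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        job, plugin = write_job_files(
            d, "critter_batch", "gpu", "comfyui", 75, "0-3",
            {"WorkflowFile": "workflow_api.json"},  # hypothetical plugin key
        )
        print(job.read_text())
        # Submission would then be:
        #   deadlinecommand job_info.job plugin_info.job
```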

-

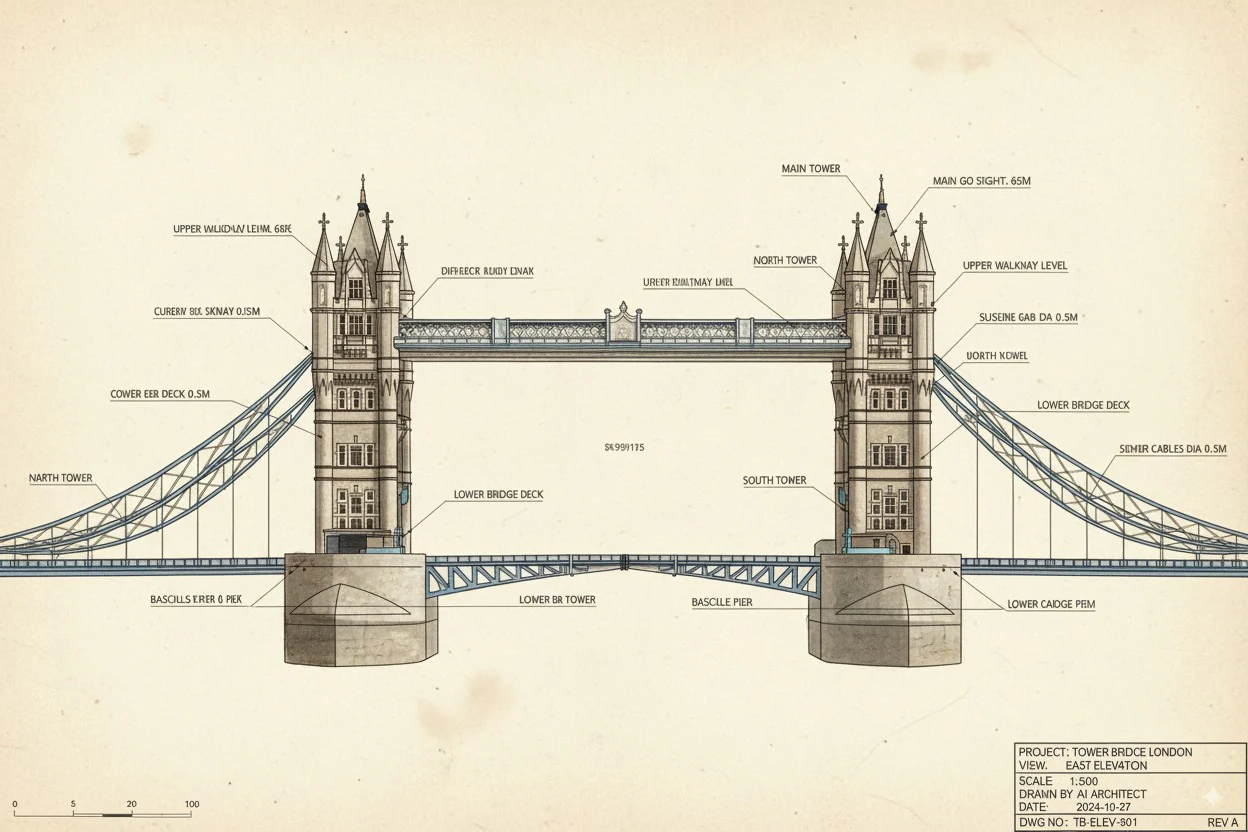

Google’s Nano Banana AI: Free Tool for 3D Architecture Models

https://landscapearchitecture.store/blogs/news/nano-banana-ai-free-tool-for-3d-architecture-models

How to Use Nano Banana AI for Architecture

- Go to Google AI Studio.

- Log in with your Google account and select Gemini 2.5 Flash Image (Nano Banana).

- Upload a photo — either from your laptop or a Google Street View screenshot.

- Paste this example prompt:

“Use the provided architectural photo as reference. Generate a high-fidelity 3D building model in the look of a 3D-printed architecture model.”

- Wait a few seconds, and an image of your 3D-printed-style architecture model will be ready.

Pro tip: If you want more accuracy, upload two images — a street photo for the facade and an aerial view for the roof/top.
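The same request can be scripted against the Gemini API's REST `generateContent` endpoint instead of the AI Studio UI. This is a sketch under assumptions: the model id `gemini-2.5-flash-image-preview` was the launch name and may have changed, and you need your own API key from AI Studio. Each uploaded photo becomes a base64 `inline_data` part, so the two-image pro tip is just two image parts. Generated images come back as `inlineData` parts in the response.

```python
import base64
import json
import urllib.request

# Assumption: verify the current image model id in the Gemini API docs.
MODEL = "gemini-2.5-flash-image-preview"
API_URL = "https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent"


def build_payload(prompt, images):
    """images: list of (mime_type, raw_bytes), e.g. facade photo + aerial view."""
    parts = [{"text": prompt}]
    for mime, data in images:
        parts.append({"inline_data": {
            "mime_type": mime,
            "data": base64.b64encode(data).decode("ascii"),
        }})
    return {"contents": [{"parts": parts}]}


def send(payload, api_key, model=MODEL):
    """POST the payload and return the decoded JSON response (needs network)."""
    req = urllib.request.Request(
        API_URL.format(model=model),
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def extract_images(response):
    """Collect raw image bytes from every inline-data part of the response."""
    images = []
    for cand in response.get("candidates", []):
        for part in cand.get("content", {}).get("parts", []):
            inline = part.get("inlineData") or part.get("inline_data")
            if inline:
                images.append(base64.b64decode(inline["data"]))
    return images
```

Usage would be `extract_images(send(build_payload(prompt, [("image/jpeg", facade), ("image/jpeg", aerial)]), key))`, then writing each returned byte string to a `.png` file.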

-

Blender 4.5 begins the switch from OpenGL to Vulkan

Blender is moving from OpenGL to Vulkan as its graphics backend. Blender 4.5 is the first release in which the Vulkan backend is officially supported rather than experimental, while OpenGL remains the default; the aim is better performance and groundwork for future features such as real-time ray tracing and global illumination. To enable Vulkan, go to Edit > Preferences > System, set the “Backend” option to “Vulkan,” and restart Blender. The change is expected to bring faster startup times, more responsive viewports, and more efficient handling of complex scenes by making better use of your CPU and GPU.
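To try Vulkan for a single session without changing your saved preferences, Blender's command line offers a `--gpu-backend` option (confirm with `blender --help` on your build). Below is a minimal launcher sketch, assuming a `blender` executable on your PATH; `blender_command` and `launch` are hypothetical helper names.

```python
import shutil
import subprocess

VALID_BACKENDS = {"vulkan", "opengl", "metal"}  # metal applies to macOS only


def blender_command(blend_file=None, backend="vulkan", blender="blender"):
    """Build an argv list that forces a GPU backend for one Blender session."""
    if backend not in VALID_BACKENDS:
        raise ValueError(f"unknown backend: {backend}")
    cmd = [blender, "--gpu-backend", backend]
    if blend_file:
        cmd.append(blend_file)
    return cmd


def launch(blend_file=None, backend="vulkan"):
    """Start Blender with the chosen backend; raises if Blender is not on PATH."""
    exe = shutil.which("blender")
    if exe is None:
        raise FileNotFoundError("blender executable not found on PATH")
    return subprocess.run(blender_command(blend_file, backend, blender=exe), check=False)
```

Running the same heavy scene once with `backend="opengl"` and once with `"vulkan"` is a simple way to check the promised viewport gains on your own hardware.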

Why the Switch to Vulkan?

- Modern Graphics API: Vulkan is a newer, lower-level, and more efficient API that provides developers with greater control over hardware, unlike the older, higher-level OpenGL.

- Performance Boost: The Vulkan backend is expected to improve performance in areas such as viewport rendering, material loading, and overall UI responsiveness, especially in complex scenes with many textures.

- Better Resource Utilization: Vulkan distributes work more effectively across the CPU and reduces driver overhead, allowing Blender to make better use of your computer’s power.

- Future-Proofing: The Vulkan backend paves the way for advanced features like real-time ray tracing and global illumination in future versions of Blender.

-

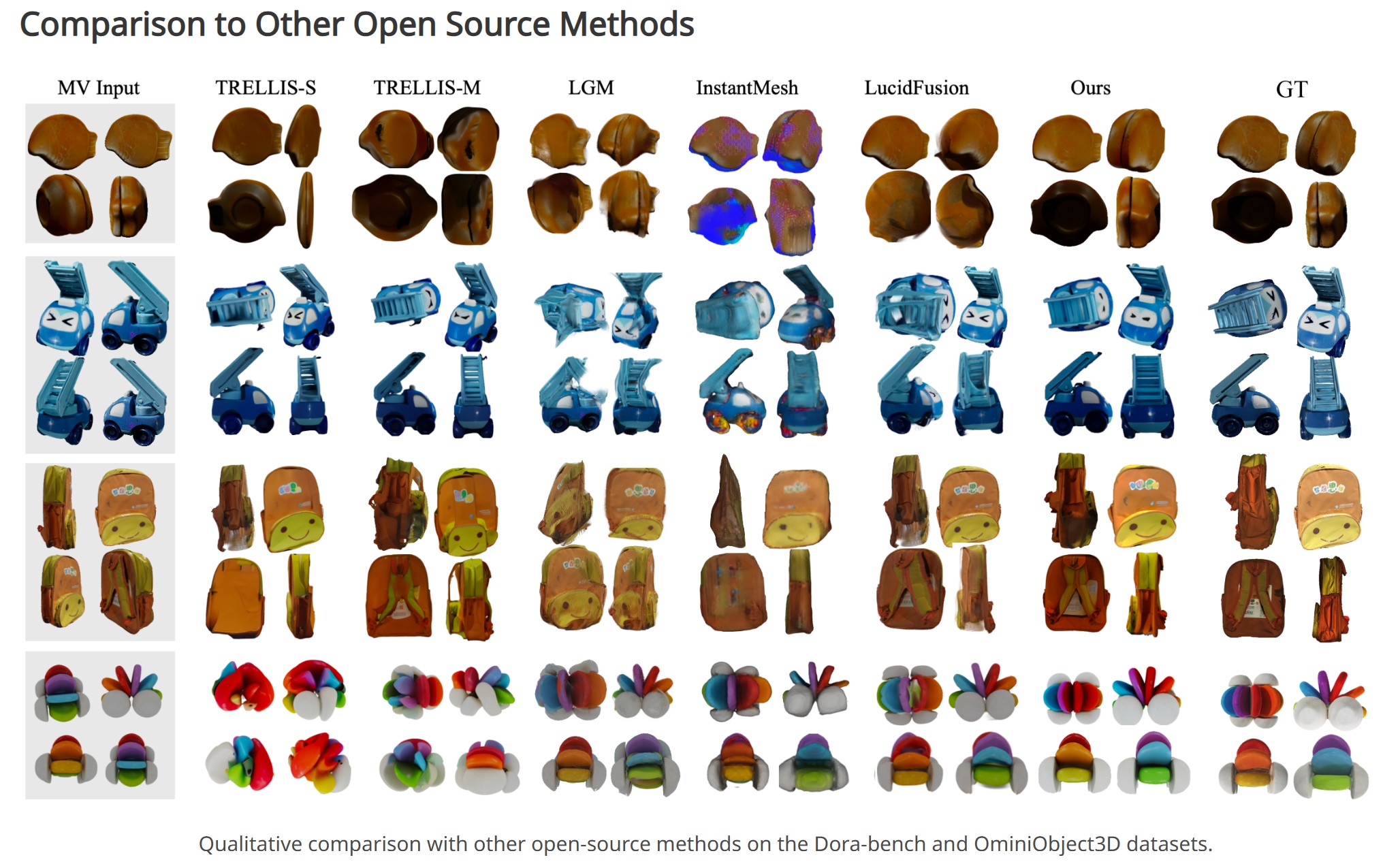

Diffuman4D – 4D Consistent Human View Synthesis from Sparse-View Videos with Spatio-Temporal Diffusion Models

Given sparse-view videos, Diffuman4D (1) generates 4D-consistent multi-view videos conditioned on these inputs, and (2) reconstructs a high-fidelity 4D Gaussian Splatting (4DGS) model of the human performance from both the input and the generated videos.

FEATURED POSTS

-

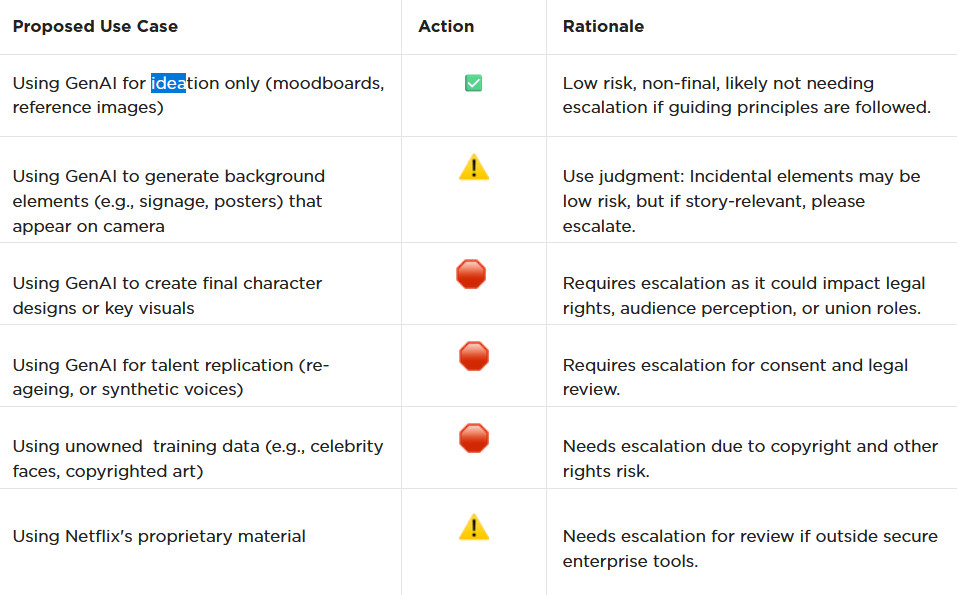

AI and the Law – Netflix: Using Generative AI in Content Production

https://www.cartoonbrew.com/business/netflix-generative-ai-use-guidelines-253300.html

- Temporary Use: AI-generated material can be used for ideation, visualization, and exploration—but is currently considered temporary and not part of final deliverables.

- Ownership & Rights: All outputs must be carefully reviewed to ensure rights, copyright, and usage are properly cleared before integrating into production.

- Transparency: Productions are expected to document and disclose how generative AI is used.

- Human Oversight: AI tools are meant to support creative teams, not replace them—final decision-making rests with human creators.

- Security & Compliance: Any use of AI tools must align with Netflix’s security protocols and protect confidential production material.

-

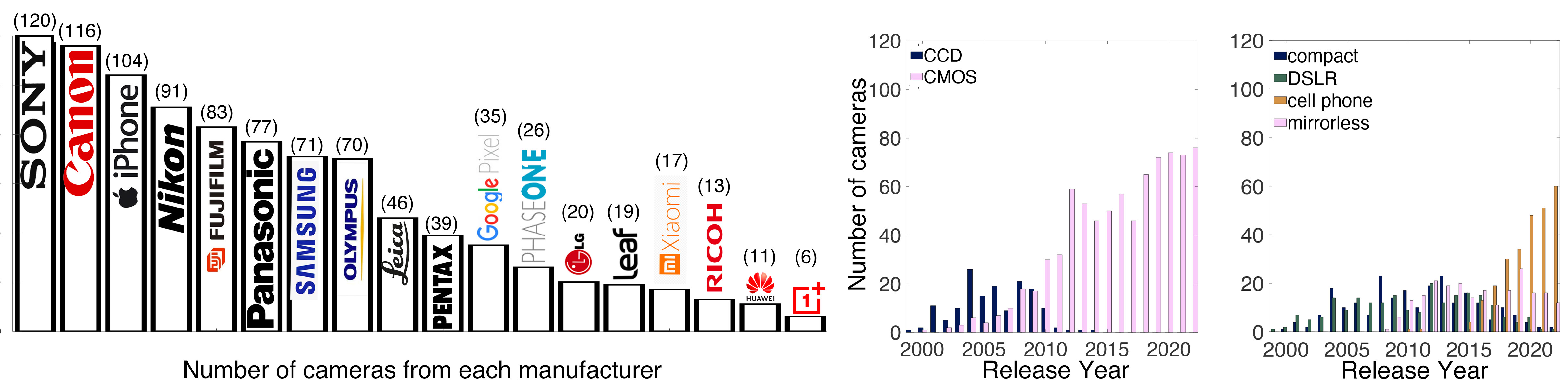

Photography Basics: Spectral Sensitivity Estimation Without a Camera

https://color-lab-eilat.github.io/Spectral-sensitivity-estimation-web/

A number of problems in computer vision and related fields would be mitigated if camera spectral sensitivities were known. As consumer cameras are not designed for high-precision visual tasks, manufacturers do not disclose spectral sensitivities. Their estimation requires a costly optical setup, which has led researchers to develop numerous indirect methods that aim to lower cost and complexity by using color targets. However, the use of color targets gives rise to new complications that make the estimation more difficult, and consequently, there currently exists no simple, low-cost, robust go-to method for spectral sensitivity estimation that non-specialized research labs can adopt. Furthermore, even if not limited by hardware or cost, researchers frequently work with imagery from multiple cameras that they do not have in their possession.

To provide a practical solution to this problem, we propose a framework for spectral sensitivity estimation that not only does not require any hardware (including a color target), but also does not require physical access to the camera itself. Similar to other work, we formulate an optimization problem that minimizes a two-term objective function: a camera-specific term from a system of equations, and a universal term that bounds the solution space.

Unlike other work, we use publicly available high-quality calibration data to construct both terms. We use the colorimetric mapping matrices provided by the Adobe DNG Converter to formulate the camera-specific system of equations, and constrain the solutions using an autoencoder trained on a database of ground-truth curves. On average, we achieve reconstruction errors as low as those that can arise due to manufacturing imperfections between two copies of the same camera. We provide predicted sensitivities for more than 1,000 cameras that the Adobe DNG Converter currently supports, and discuss which tasks can become trivial when camera responses are available.
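The two-term objective described above (a camera-specific data term plus a term that bounds the solution space) can be illustrated with regularized least squares for one color channel. This is a sketch with a swapped-in technique, not the authors' method: the learned autoencoder prior is replaced by a simple second-difference smoothness penalty (Tikhonov regularization), and `A`, `b` stand in for the system of equations the paper builds from DNG color matrices. Minimizing $\|As-b\|^2 + \lambda\|Ds\|^2$ is the same as solving the stacked system $[A;\sqrt{\lambda}D]\,s \approx [b;0]$.

```python
import numpy as np


def second_difference(n):
    """(n-2) x n matrix D with (D s)[i] = s[i] - 2*s[i+1] + s[i+2]."""
    return np.diff(np.eye(n), n=2, axis=0)


def estimate_sensitivity(A, b, lam=1e-3):
    """Minimize ||A s - b||^2 + lam * ||D s||^2 for one channel's curve s.

    A:   (m, n) camera-specific constraint matrix (placeholder here)
    b:   (m,) right-hand side
    lam: weight of the smoothness term standing in for the learned prior
    """
    n = A.shape[1]
    D = second_difference(n)
    A_aug = np.vstack([A, np.sqrt(lam) * D])
    b_aug = np.concatenate([b, np.zeros(D.shape[0])])
    s, *_ = np.linalg.lstsq(A_aug, b_aug, rcond=None)
    return s


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    wl = np.arange(400, 701, 10)                      # 31 wavelength samples (nm)
    s_true = np.exp(-0.5 * ((wl - 550) / 40.0) ** 2)  # synthetic "green" curve
    A = rng.random((10, wl.size))                     # underdetermined: 10 < 31
    s_hat = estimate_sensitivity(A, A @ s_true)
    print(np.abs(s_hat - s_true).max())
```

With fewer equations than wavelength samples the data term alone has infinitely many solutions; the second term is what picks a plausible curve, which is the role the paper assigns to its autoencoder.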

-

StudioBinder.com – CRI color rendering index

www.studiobinder.com/blog/what-is-color-rendering-index

“The Color Rendering Index is a measurement of how faithfully a light source reveals the colors of whatever it illuminates. It describes the ability of a light source to reveal the color of an object, as compared to the color a natural light source would provide. The highest possible CRI is 100. A CRI of 100 generally refers to a perfect black body, like a tungsten light source or the sun.”
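Behind the single number, the CIE general index Ra averages eight special indices. Each index is $R_i = 100 - 4.6\,\Delta E_i$, where $\Delta E_i$ is the color shift of test color sample $i$ under the test lamp versus the reference illuminant, measured in CIE 1964 U\*V\*W\* space. A minimal sketch of that arithmetic, assuming the eight $\Delta E_i$ values have already been computed from measured spectra:

```python
def special_cri(delta_e):
    """Special color rendering index R_i for one test color sample."""
    return 100.0 - 4.6 * delta_e


def general_cri(delta_es):
    """General CRI (Ra): mean of R_1..R_8 for the eight standard samples."""
    if len(delta_es) != 8:
        raise ValueError("Ra uses exactly the eight standard test colors")
    return sum(special_cri(d) for d in delta_es) / 8


print(general_cri([0.0] * 8))  # no color shift on any sample -> 100.0
```

This is why 100 is the ceiling: it means zero color shift on every sample relative to the reference, not an absolute measure of quality. Saturated reds (R9) are not part of the Ra average.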

www.pixelsham.com/2021/04/28/types-of-film-lights-and-their-efficiency