LATEST POSTS

-

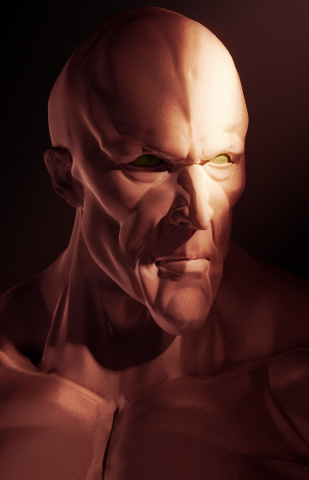

3D Lighting Tutorial by Amaan Akram

http://www.amaanakram.com/lightingT/part1.htm

The goals of lighting in 3D computer graphics are more or less the same as those of real world lighting.

Lighting serves the basic function of bringing out or pushing back the shapes of objects visible from the camera’s view.

It gives a two-dimensional image on the monitor the illusion of a third dimension: depth. But it does not stop there. It gives an image its personality, its character. A scene lit in different ways can give a feeling of happiness, of sorrow, of fear, and so on, and it can do so in dramatic or subtle ways. Along with personality and character, lighting fills a scene with emotion that is directly transmitted to the viewer.

Trying to simulate a real environment in an artificial one can be a daunting task. But even if you make your 3D rendering look absolutely photo-realistic, it doesn’t guarantee that the image carries enough emotion to elicit a “wow” from the people viewing it.

Making 3D renderings photo-realistic can be hard. Putting deep emotions in them can be even harder. However, if you plan out your lighting strategy for the mood and emotion that you want your rendering to express, you make the process easier for yourself.

Each light source can be broken down into four distinct components and analyzed accordingly.

· Intensity

· Direction

· Color

· Size

The overall thrust of this writing is to produce photo-realistic images by applying good lighting techniques.
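As a rough illustration of the four components listed above, a light can be modeled as a small record with one field per component. The sketch below is not taken from the tutorial: the `Light` class, the `lambert` helper, and the choice of Lambert's cosine law for the diffuse term are assumptions made only to show how intensity, direction, and color combine at a surface point. Size is stored but unused here, since it mainly affects shadow softness.

```python
from dataclasses import dataclass
from typing import Tuple
import math

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Light:
    """Hypothetical light record: one field per component named in the text."""
    intensity: float  # scalar brightness, arbitrary units
    direction: Vec3   # direction in which the light travels
    color: Vec3       # linear RGB, each channel in [0, 1]
    size: float       # emitter radius; larger sizes give softer shadows (unused below)


def lambert(light: Light, normal: Vec3) -> Vec3:
    """Diffuse RGB contribution on a surface with the given unit normal.

    Uses Lambert's cosine law: brightness scales with the cosine of the
    angle between the surface normal and the direction toward the light.
    """
    lx, ly, lz = light.direction
    length = math.sqrt(lx * lx + ly * ly + lz * lz)
    # The vector from the surface toward the light is the reverse of the
    # direction in which the light travels.
    to_light = (-lx / length, -ly / length, -lz / length)
    cos_theta = max(0.0, sum(n * l for n, l in zip(normal, to_light)))
    return tuple(light.intensity * c * cos_theta for c in light.color)


# A warm key light shining straight down the -Z axis onto a surface facing +Z.
key = Light(intensity=2.0, direction=(0.0, 0.0, -1.0),
            color=(1.0, 0.5, 0.25), size=0.1)
lit = lambert(key, (0.0, 0.0, 1.0))
```

Changing only `direction` or `color` in this sketch changes the result independently of the other fields, which is the sense in which the components can be analyzed separately.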

-

Insect eyes

https://www.boredpanda.com/20-incredible-eye-macros/

The structure of the eye, as in many other insects, is termed a compound eye and is one of the most precise and ordered patterns in biology.

FEATURED POSTS

-

Alberto Taiuti – World Models, the restyle and control AI technology that could displace 3D

https://substack.com/inbox/post/153106976

https://techcrunch.com/2024/12/14/what-are-ai-world-models-and-why-do-they-matter/

A world model is a model that can generate the next frame of a 3D scene based on the previous frame(s) and user input. It is trained on video data and runs in real time.
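The "next frame from previous frames plus user input" idea can be sketched as a simple autoregressive loop. Everything named here is hypothetical: `rollout`, `toy_model`, the `context` window size, and the string actions are illustrative stand-ins, and `toy_model` is a hand-written rule rather than a trained network. The point is only the shape of the loop, where each generated frame is fed back in as history for the next step.

```python
from collections import deque
from typing import Callable, List, Sequence

Frame = List[List[float]]  # tiny grayscale image: rows of pixel values
Model = Callable[[Sequence[Frame], str], Frame]


def rollout(model: Model, first_frame: Frame,
            actions: Sequence[str], context: int = 4) -> List[Frame]:
    """Generate one new frame per user action, feeding outputs back as input."""
    history = deque([first_frame], maxlen=context)  # keeps only recent frames
    frames = [first_frame]
    for action in actions:
        next_frame = model(list(history), action)
        history.append(next_frame)  # the prediction becomes part of the context
        frames.append(next_frame)
    return frames


def toy_model(history: Sequence[Frame], action: str) -> Frame:
    """Stand-in for a trained network: shifts the last frame by one pixel."""
    last = history[-1]
    if action == "right":
        return [row[-1:] + row[:-1] for row in last]
    if action == "left":
        return [row[1:] + row[:1] for row in last]
    return [row[:] for row in last]  # unknown action: frame is unchanged


# A single bright pixel moved right twice, then left once.
frames = rollout(toy_model, [[1.0, 0.0, 0.0]], ["right", "right", "left"])
```

In a real system the model call would be a neural network inference step that has to finish within the frame budget, which is what "running in real time" refers to.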

World models enable AI systems to simulate and reason about their environments, pushing forward autonomous decision-making and real-world problem-solving.

The key insight is that by training on video data, these models learn not just how to generate images, but also:

- the physics of our world (objects fall down, water flows, etc.)

- how objects look from different angles (that chair should look the same as you walk around it)

- how things move and interact (a ball bouncing off a wall, a character walking on sand)

- basic spatial understanding (you can’t walk through walls)

Some companies, like World Labs, are taking a hybrid approach: using World Models to generate static 3D representations that can then be rendered using traditional 3D engines (in this case, Gaussian Splatting). This gives you the best of both worlds: the creative power of AI generation with the multiview consistency and performance of traditional rendering.