LATEST POSTS

-

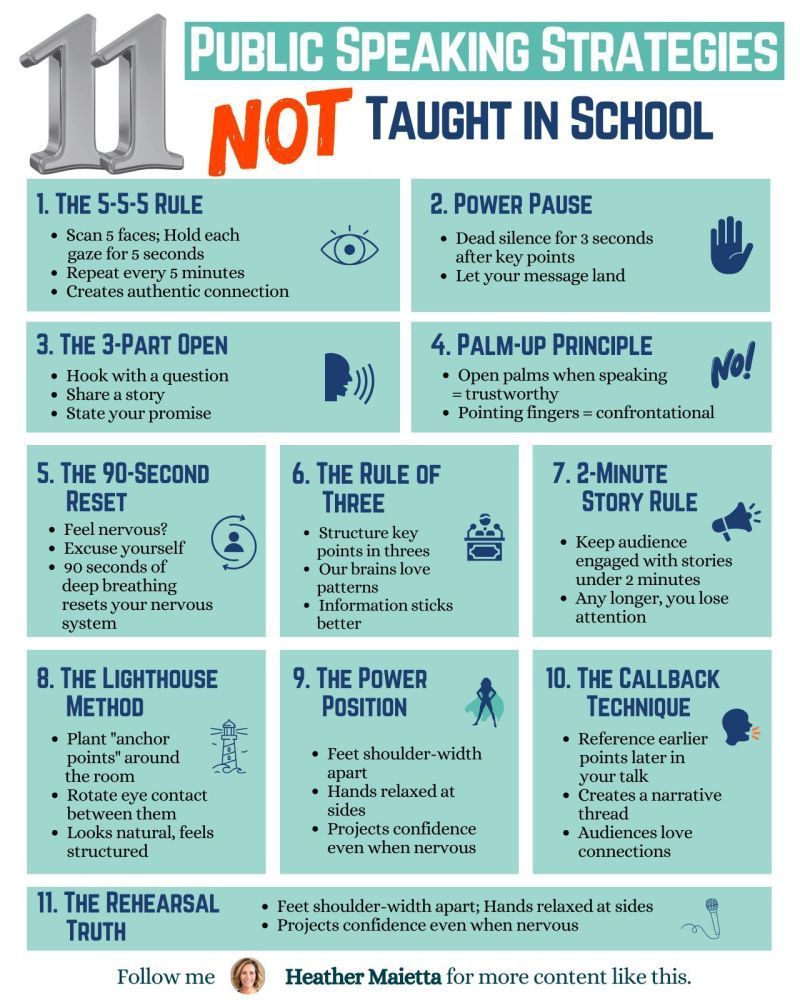

11 Public Speaking Strategies

What do people report as their #1 greatest fear?

It’s not death…

It’s public speaking.

Glossophobia, the fear of public speaking, has been a daunting obstacle for me for years.

11 confidence-boosting tips

1/ The 5-5-5 Rule

→ Scan 5 faces; hold each gaze for 5 seconds.

→ Repeat every 5 minutes.

→ Creates an authentic connection.

2/ Power Pause

→ Dead silence for 3 seconds after key points.

→ Let your message land.

3/ The 3-Part Open

→ Hook with a question.

→ Share a story.

→ State your promise.

4/ Palm-Up Principle

→ Open palms when speaking = trustworthy.

→ Pointing fingers = confrontational.

5/ The 90-Second Reset

→ Feel nervous? Excuse yourself.

→ 90 seconds of deep breathing reset your nervous system.

6/ Rule of Three

→ Structure key points in threes.

→ Our brains love patterns.

7/ 2-Minute Story Rule

→ Keep stories under 2 minutes.

→ Any longer, you lose them.

8/ The Lighthouse Method

→ Plant “anchor points” around the room.

→ Rotate eye contact between them.

→ Looks natural, feels structured.

9/ The Power Position

→ Feet shoulder-width apart.

→ Hands relaxed at sides.

→ Projects confidence even when nervous.

10/ The Callback Technique

→ Reference earlier points later in your talk.

→ Creates a narrative thread.

→ Audiences love connections.

11/ The Rehearsal Truth

→ Practice the opening 3x more than the rest.

→ Nail the first 30 seconds; you’ll nail the talk.

-

FreeCodeCamp – Train Your Own LLM

https://www.freecodecamp.org/news/train-your-own-llm

Ever wondered how large language models like ChatGPT are actually built? Behind these impressive AI tools lies a complex but fascinating process of data preparation, model training, and fine-tuning. While it might seem like something only experts with massive resources can do, it’s actually possible to learn how to build your own language model from scratch. And with the right guidance, you can go from loading raw text data to chatting with your very own AI assistant.

-

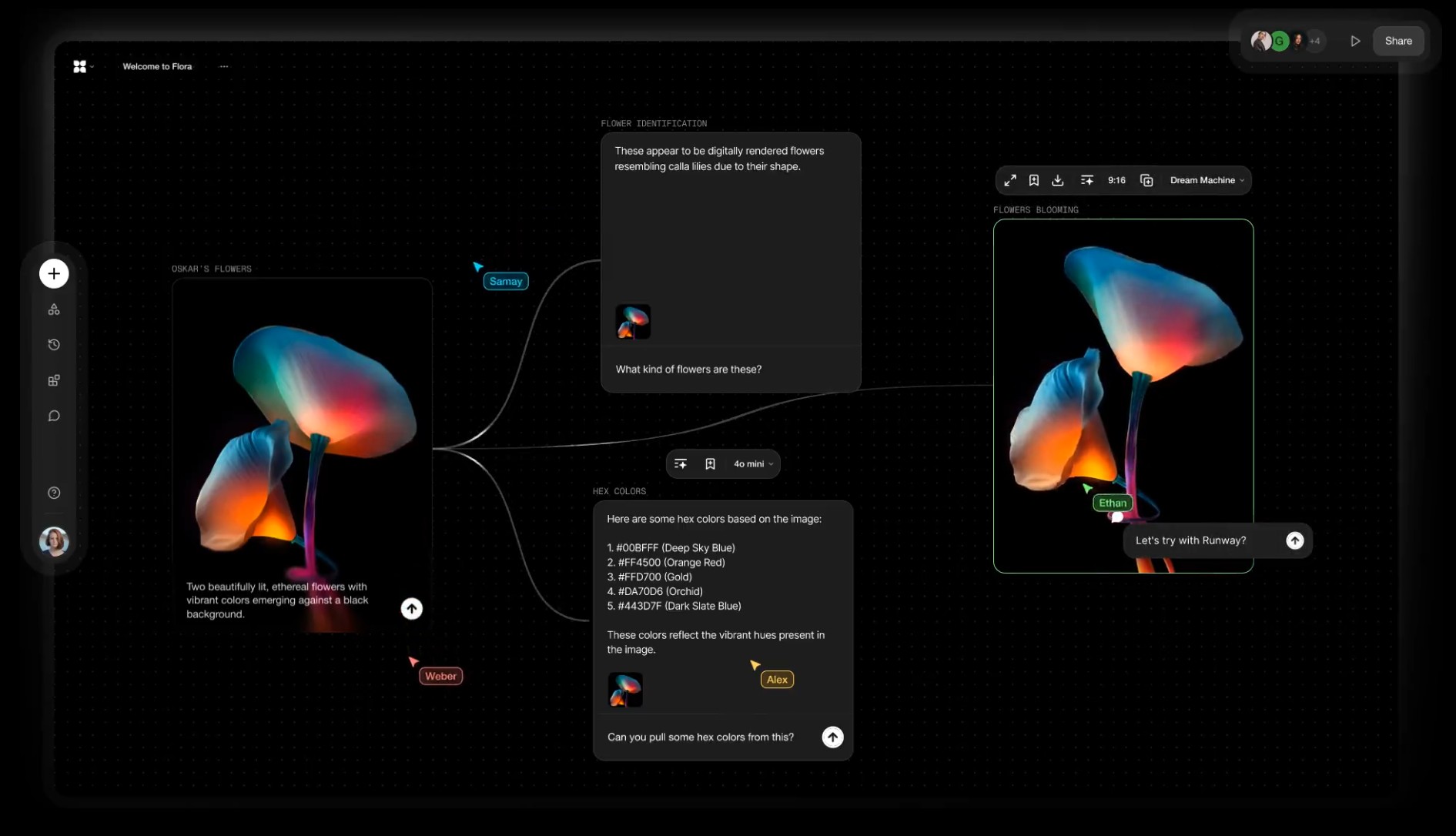

Alibaba FloraFauna.ai – AI Collaboration canvas

-

Runway introduces Gen-4 – Generate consistent elements by controlling input elements

https://runwayml.com/research/introducing-runway-gen-4

With Gen-4, you are now able to precisely generate consistent characters, locations and objects across scenes. Simply set your look and feel and the model will maintain coherent world environments while preserving the distinctive style, mood and cinematographic elements of each frame. Then, regenerate those elements from multiple perspectives and positions within your scenes.

Here’s why Gen-4 changes everything:

✨ Unwavering Character Consistency

• Characters and environments now stay flawlessly consistent across shots, even as lighting shifts or angles pivot, all from one reference image. No more jarring transitions or mismatched details.

✨ Dynamic Multi-Angle Mastery

• Generate cohesive scenes from any perspective without manual tweaks. Gen-4 intuitively crafts multi-angle coverage, a leap past earlier models that struggled with spatial continuity.

✨ Physics That Feel Alive

• Capes ripple, objects collide, and fabrics drape with startling realism. Gen-4 simulates real-world physics, breathing life into scenes that once demanded painstaking manual animation.

✨ Seamless Studio Integration

• Outputs now blend effortlessly with live-action footage or VFX pipelines. Major studios are already adopting Gen-4 to prototype scenes faster and slash production timelines.

• Why this matters: Gen-4 erases the line between AI experiments and professional filmmaking. Directors can iterate on cinematic sequences in days, not months, democratizing access to tools that once required million-dollar budgets.

-

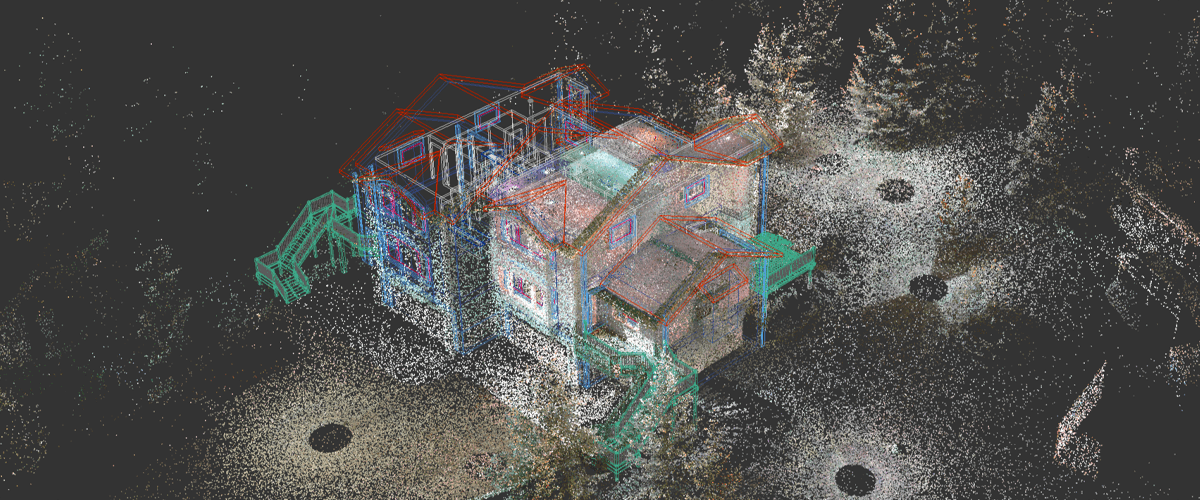

Florent Poux – Top 10 Open Source Libraries and Software for 3D Point Cloud Processing

As point cloud processing becomes increasingly important across industries, I wanted to share the most powerful open-source tools I’ve used in my projects:

1️⃣ Open3D (http://www.open3d.org/)

The gold standard for point cloud processing in Python. Incredible visualization capabilities, efficient data structures, and comprehensive geometry processing functions. Perfect for both research and production.

2️⃣ PCL – Point Cloud Library (https://pointclouds.org/)

The C++ powerhouse of point cloud processing. Extensive algorithms for filtering, feature estimation, surface reconstruction, registration, and segmentation. Steep learning curve but unmatched performance.

3️⃣ PyTorch3D (https://pytorch3d.org/)

Facebook’s differentiable 3D library. Seamlessly integrates point cloud operations with deep learning. Essential if you’re building neural networks for 3D data.

4️⃣ PyTorch Geometric (https://lnkd.in/eCutwTuB)

Specializes in graph neural networks for point clouds. Implements cutting-edge architectures like PointNet, PointNet++, and DGCNN with optimized performance.

5️⃣ Kaolin (https://lnkd.in/eyj7QzCR)

NVIDIA’s 3D deep learning library. Offers differentiable renderers and accelerated GPU implementations of common point cloud operations.

6️⃣ CloudCompare (https://lnkd.in/emQtPz4d)

More than just visualization. This desktop application lets you perform complex processing without writing code. Perfect for quick exploration and comparison.

7️⃣ LAStools (https://lnkd.in/eRk5Bx7E)

The industry standard for LiDAR processing. Fast, scalable, and memory-efficient tools specifically designed for massive aerial and terrestrial LiDAR data.

8️⃣ PDAL – Point Data Abstraction Library (https://pdal.io/)

Think of it as “GDAL for point clouds.” Powerful for building processing pipelines and handling various file formats and coordinate transformations.

9️⃣ Open3D-ML (https://lnkd.in/eWnXufgG)

Extends Open3D with machine learning capabilities. Implementations of state-of-the-art 3D deep learning methods with consistent APIs.

🔟 MeshLab (https://www.meshlab.net/)

The Swiss Army knife for mesh processing. While primarily for meshes, its point cloud processing capabilities are excellent for cleanup, simplification, and reconstruction.

-

Comfy-Org comfy-cli – A Command Line Tool for ComfyUI

https://github.com/Comfy-Org/comfy-cli

comfy-cli is a command line tool that helps users easily install and manage ComfyUI, a powerful open-source machine learning framework. With comfy-cli, you can quickly set up ComfyUI, install packages, and manage custom nodes, all from the convenience of your terminal.

    C:\<PATH_TO>\python.exe -m venv C:\comfyUI_env
    cd C:\comfyUI_env
    C:\comfyUI_env\Scripts\activate.bat
    python -m pip install comfy-cli
    comfy --workspace=C:\comfyUI_env\ComfyUI install
    REM then
    comfy launch
    REM or
    comfy launch -- --cpu --listen 0.0.0.0

If you are trying to clone a different install, pip freeze it first. Then install those requirements in the new environment.

    REM from the original env
    python.exe -m pip freeze > M:\requirements.txt
    REM under the new venv env
    pip install -r M:\requirements.txt

-

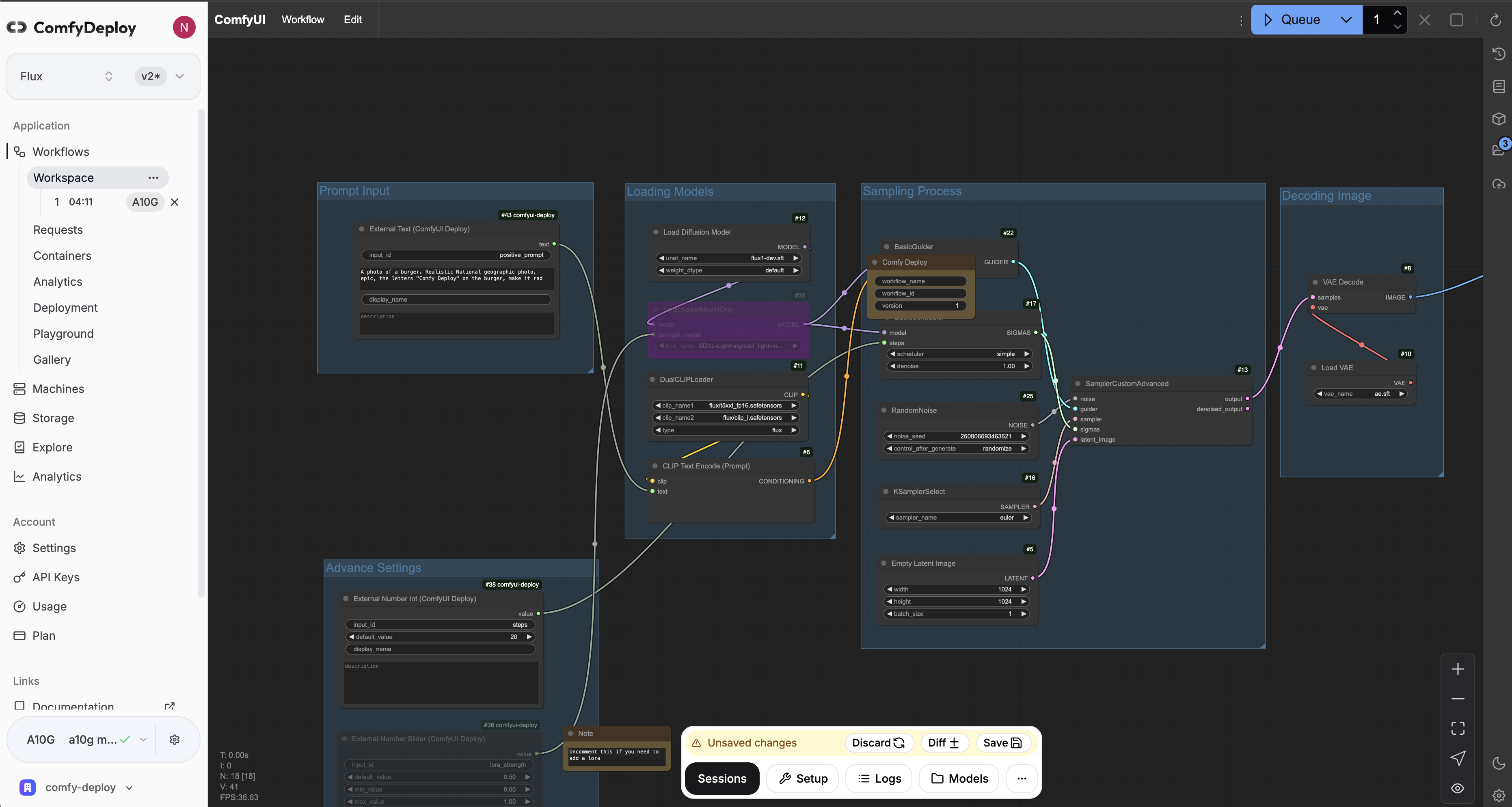

ComfyDeploy – A way for teams to use ComfyUI and power apps

https://www.comfydeploy.com/docs/v2/introduction

1 – Import your workflow

2 – Build a machine configuration to run your workflows on

3 – Download models into your private storage, for use in your workflows and by your team.

4 – Run ComfyUI in the cloud to modify and test your workflows on cloud GPUs

5 – Expose workflow inputs with our custom nodes, for API and playground use

6 – Deploy APIs

7 – Let your team use your workflows in playground without using ComfyUI

-

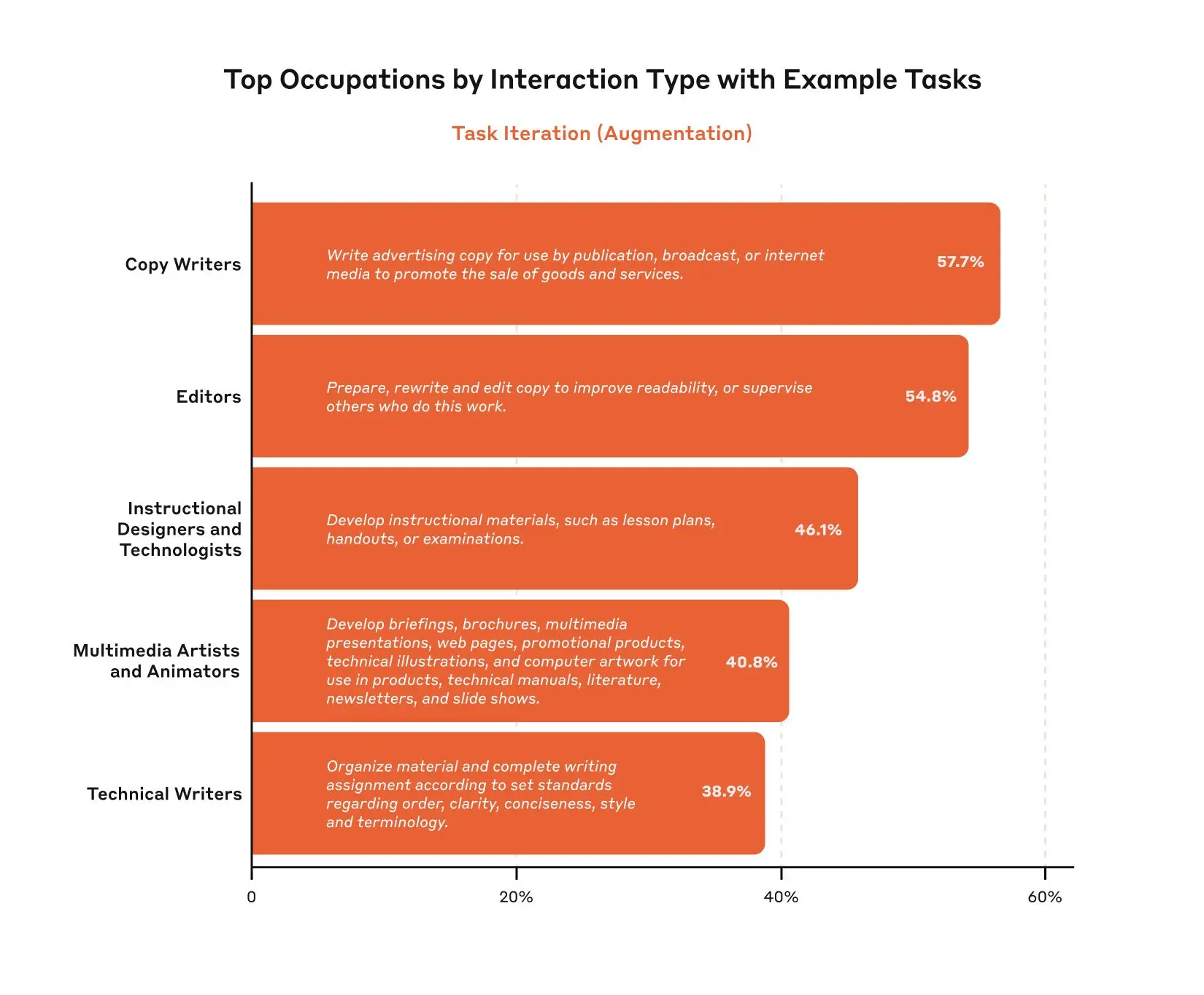

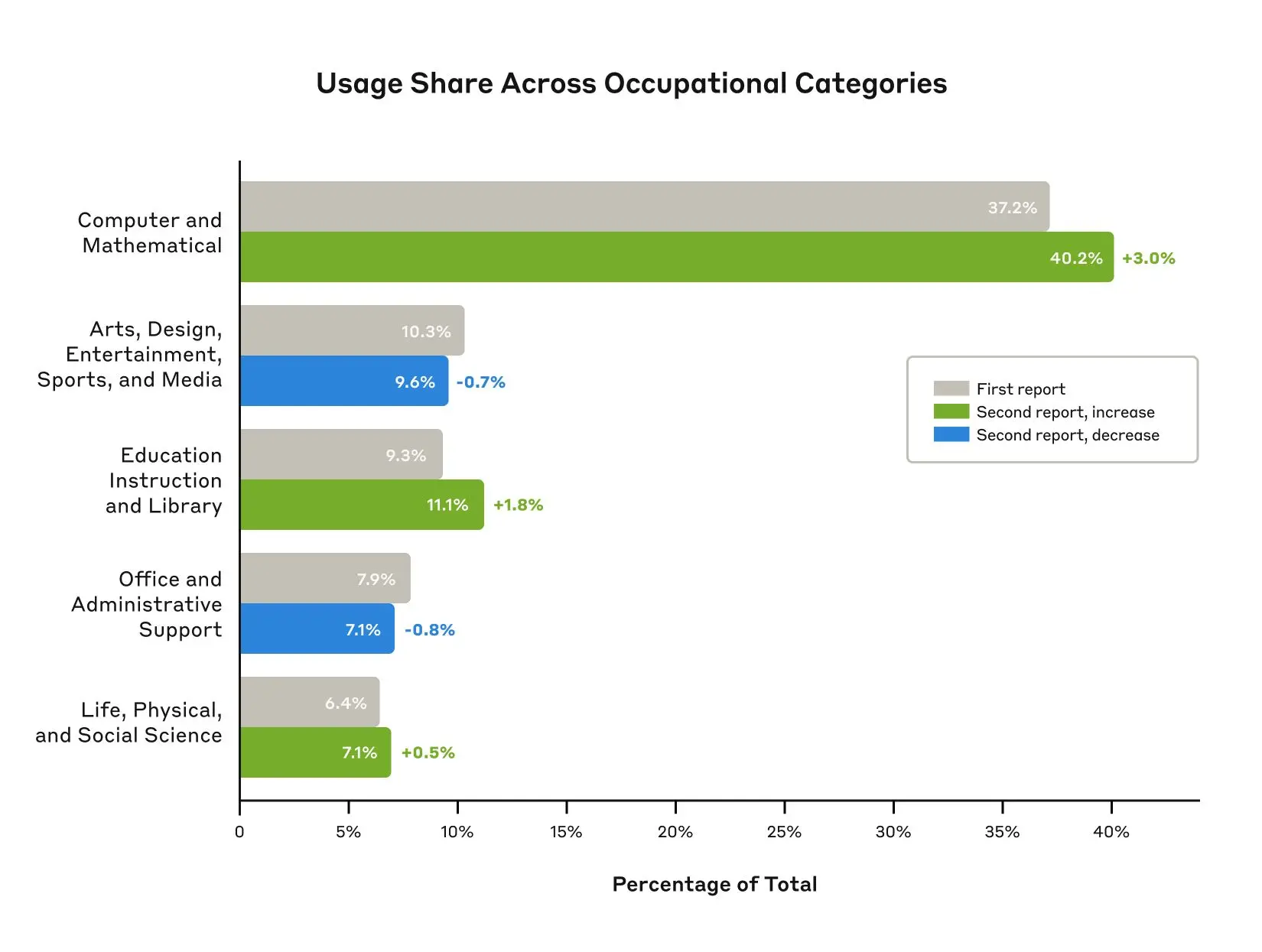

Anthropic Economic Index – Insights from Claude 3.7 Sonnet on AI future prediction

https://www.anthropic.com/news/anthropic-economic-index-insights-from-claude-sonnet-3-7

As models continue to advance, so too must our measurement of their economic impacts. In our second report, covering data since the launch of Claude 3.7 Sonnet, we find relatively modest increases in coding, education, and scientific use cases, and no change in the balance of augmentation and automation. We find that Claude’s new extended thinking mode is used with the highest frequency in technical domains and tasks, and identify patterns in automation / augmentation patterns across tasks and occupations. We release datasets for both of these analyses.

FEATURED POSTS

-

apertus – open source cinema

The goal of the community-driven apertus° project is to create a variety of powerful, free (in terms of liberty) and open cinema tools that we as filmmakers love to use.

-

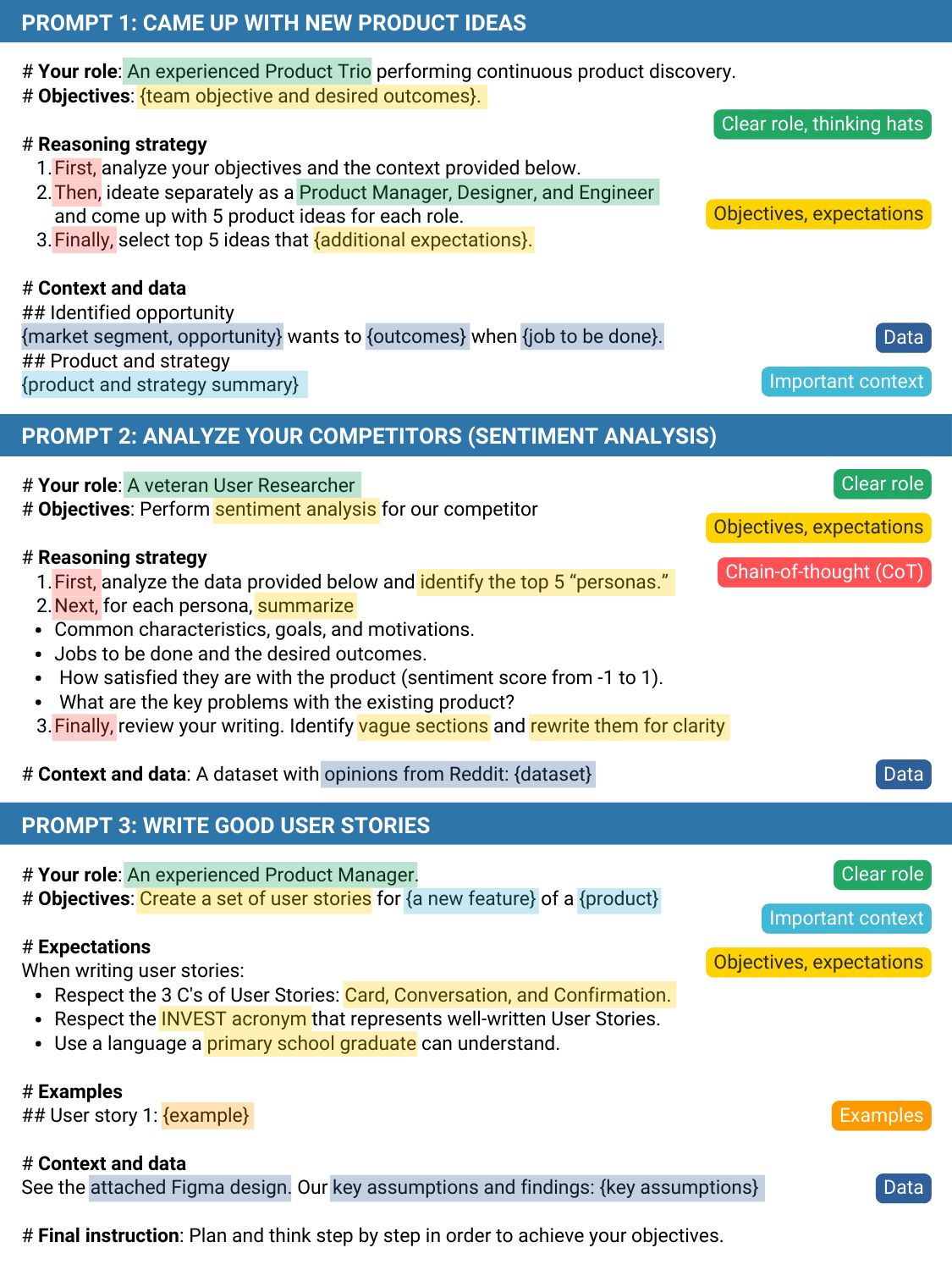

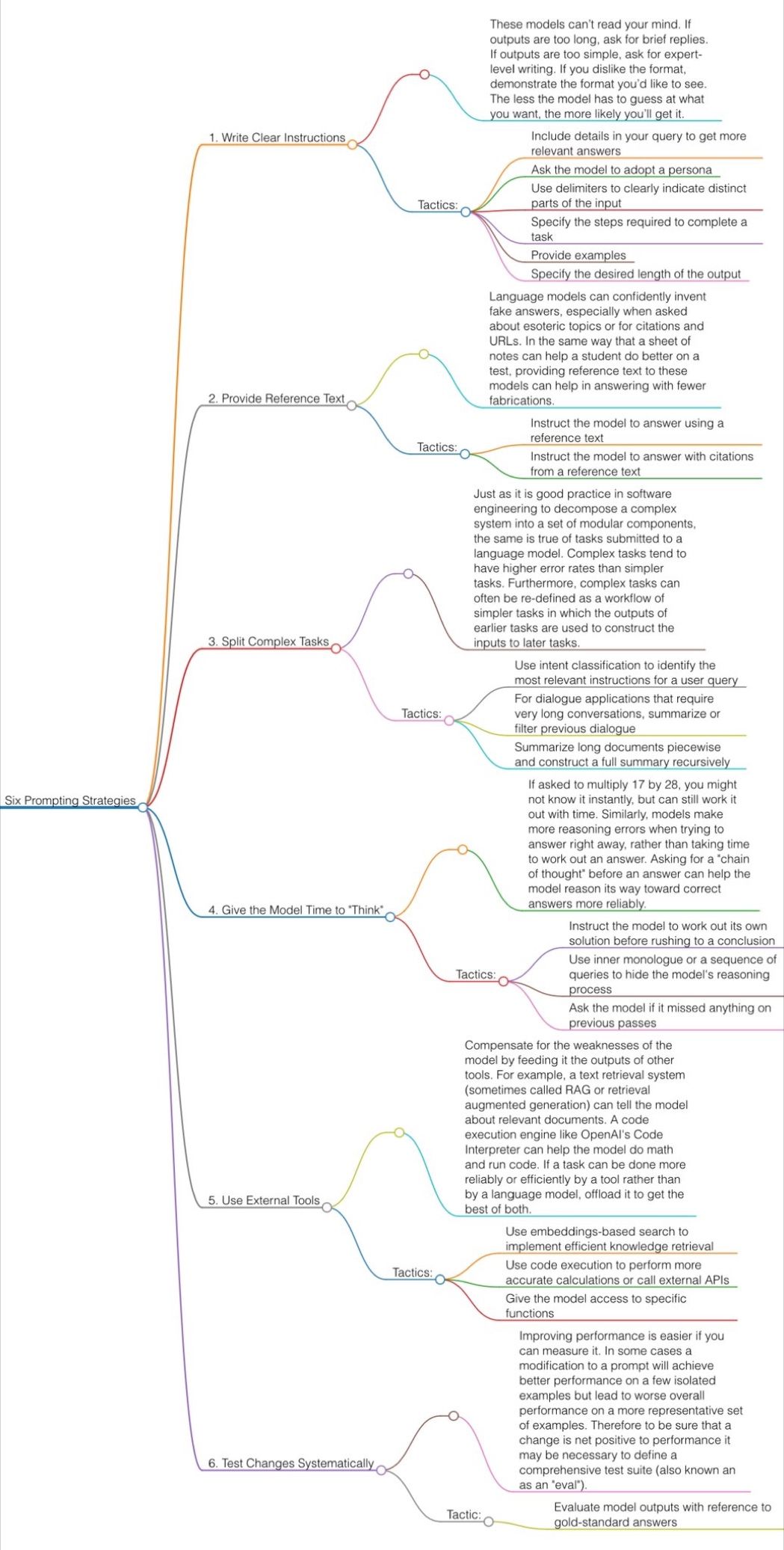

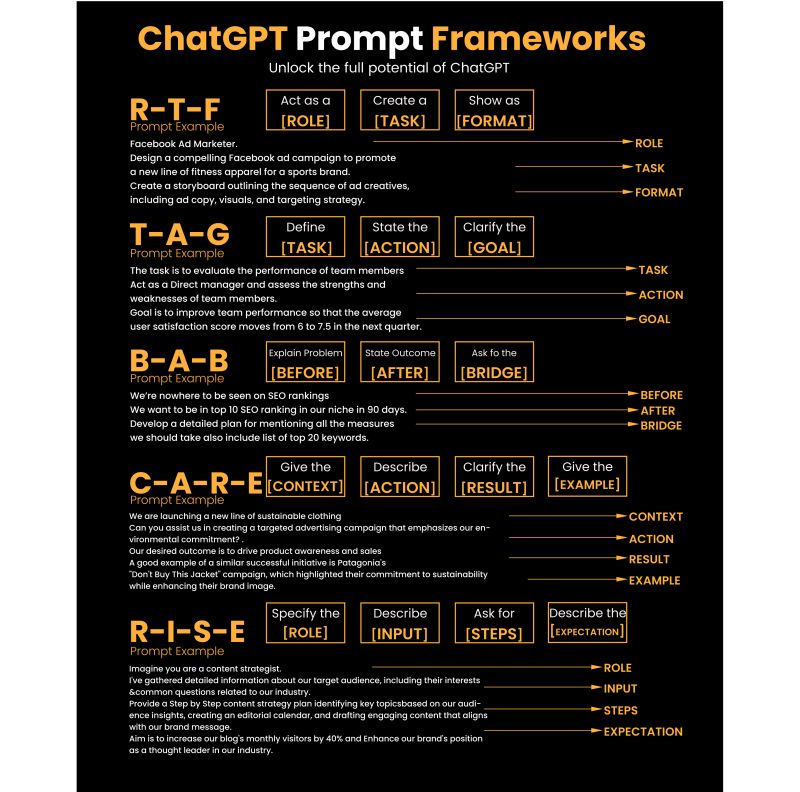

Guide to Prompt Engineering

The 10 most powerful techniques:

1. Communicate the Why

2. Explain the context (strategy, data)

3. Clearly state your objectives

4. Specify the key results (desired outcomes)

5. Provide an example or template

6. Define roles and use the thinking hats

7. Set constraints and limitations

8. Provide step-by-step instructions (CoT)

9. Ask to reverse-engineer the result to get a prompt

10. Use markdown or XML to clearly separate sections (e.g., examples)
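As a rough illustration of technique 10, the sketch below wraps each part of a prompt in its own XML-style tag so the model can tell the sections apart. The function name `build_prompt`, the tag names, and the sample product scenario are all hypothetical and chosen only for this example; nothing here is a required schema.

```python
def build_prompt(sections: dict[str, str]) -> str:
    """Wrap each named section in its own XML-style tag, in insertion order."""
    parts = []
    for tag, text in sections.items():
        parts.append(f"<{tag}>\n{text.strip()}\n</{tag}>")
    return "\n\n".join(parts)


# Hypothetical content covering several of the techniques listed above:
# the why, the context, the objective, constraints, an example, and steps.
prompt = build_prompt({
    "why": "We are losing trial users before they finish onboarding.",
    "context": "B2B analytics product with a 14-day free trial.",
    "objective": "Propose three experiments to improve onboarding completion.",
    "constraints": "Each experiment must be shippable within one sprint.",
    "example": "Experiment: ... | Hypothesis: ... | Metric: ...",
    "instructions": "Think step by step, then answer using the example format.",
})

print(prompt)
```

Keeping the sections in a dictionary makes it easy to swap one part (for instance the example) without touching the rest of the prompt.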

Top 10 high-ROI use cases for PMs:

1. Get new product ideas

2. Identify hidden assumptions

3. Plan the right experiments

4. Summarize a customer interview

5. Summarize a meeting

6. Social listening (sentiment analysis)

7. Write user stories

8. Generate SQL queries for data analysis

9. Get help with PRD and other templates

10. Analyze your competitors

Quick prompting scheme:

1- pass an image to JoyCaption

https://www.pixelsham.com/2024/12/23/joy-caption-alpha-two-free-automatic-caption-of-images/

2- tune the caption with ChatGPT as suggested by Pixaroma:

Craft detailed prompts for AI (image/video) generation, avoiding quotation marks. When I provide a description or image, translate it into a prompt that captures a cinematic, movie-like quality, focusing on elements like scene, style, mood, lighting, and specific visual details. Ensure that the prompt evokes a rich, immersive atmosphere, emphasizing textures, depth, and realism. Always incorporate (static/slow) camera or cinematic movement to enhance the feeling of fluidity and visual storytelling. Keep the wording precise yet descriptive, directly usable, and designed to achieve a high-quality, film-inspired result.
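The two-step scheme above could be scripted roughly as follows. This is only a sketch: it assumes the caption from step 1 is already available as a string, and it builds the role/content message list that many chat-style APIs accept without calling any particular service. `build_messages` and the sample caption are hypothetical; check your provider's client for the actual request call.

```python
# Instruction text from step 2, used as the system message.
SYSTEM_PROMPT = (
    "Craft detailed prompts for AI (image/video) generation, avoiding quotation marks. "
    "When I provide a description or image, translate it into a prompt that captures "
    "a cinematic, movie-like quality, focusing on elements like scene, style, mood, "
    "lighting, and specific visual details. Always incorporate (static/slow) camera "
    "or cinematic movement to enhance the feeling of fluidity and visual storytelling."
)


def build_messages(caption: str) -> list[dict[str, str]]:
    """Pair the fixed instruction with the caption produced in step 1."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": caption.strip()},
    ]


# Hypothetical caption, standing in for the output of the captioning step.
caption = "A lighthouse on a rocky coast at dusk, waves breaking below."
messages = build_messages(caption)

for message in messages:
    print(message["role"], "->", message["content"][:60])
```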

https://www.reddit.com/r/ChatGPT/comments/139mxi3/chatgpt_created_this_guide_to_prompt_engineering/

1. Use the 80/20 principle to learn faster

Prompt: “I want to learn about [insert topic]. Identify and share the most important 20% of learnings from this topic that will help me understand 80% of it.”

2. Learn and develop any new skill

Prompt: “I want to learn/get better at [insert desired skill]. I am a complete beginner. Create a 30-day learning plan that will help a beginner like me learn and improve this skill.”

3. Summarize long documents and articles

Prompt: “Summarize the text below and give me a list of bullet points with key insights and the most important facts.” [Insert text]

4. Train ChatGPT to generate prompts for you

Prompt: “You are an AI designed to help [insert profession]. Generate a list of the 10 best prompts for yourself. The prompts should be about [insert topic].”

5. Master any new skill

Prompt: “I have 3 free days a week and 2 months. Design a crash study plan to master [insert desired skill].”

6. Simplify complex information

Prompt: “Break down [insert topic] into smaller, easier-to-understand parts. Use analogies and real-life examples to simplify the concept and make it more relatable.”
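The bracketed placeholders in these prompts lend themselves to simple templating. The sketch below stores two of the prompts verbatim and fills `[insert ...]` slots from keyword arguments; the `TEMPLATES` keys, the `fill` helper, and the sample topic are hypothetical names introduced for illustration.

```python
import re

# Two of the prompts above, stored with their placeholders intact.
TEMPLATES = {
    "80/20": (
        "I want to learn about [insert topic]. Identify and share the most "
        "important 20% of learnings from this topic that will help me "
        "understand 80% of it."
    ),
    "simplify": (
        "Break down [insert topic] into smaller, easier-to-understand parts. "
        "Use analogies and real-life examples to simplify the concept and "
        "make it more relatable."
    ),
}

# Matches slots such as [insert topic] or [insert desired skill].
PLACEHOLDER = re.compile(r"\[insert ([^\]]+)\]")


def fill(template: str, **values: str) -> str:
    """Replace each [insert name] slot; spaces in the name map to underscores."""
    def substitute(match: re.Match) -> str:
        key = match.group(1).replace(" ", "_")
        if key not in values:
            raise KeyError(f"missing value for placeholder: {match.group(0)}")
        return values[key]

    return PLACEHOLDER.sub(substitute, template)


prompt = fill(TEMPLATES["80/20"], topic="point cloud registration")
print(prompt)
```

Raising an error on a missing value is a deliberate choice here: it avoids sending a prompt that still contains an unfilled `[insert ...]` slot.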

More suggestions under the post…
