LATEST POSTS

-

Village Roadshow production studio files for bankruptcy

Village Roadshow (prod company/financier: Wonka, the Matrix series, and Ocean’s 11) has filed for bankruptcy.

It’s a rough indicator of where we are in 2025 when one of the last independent production companies working with the studios goes under.

Here’s their balance sheet:

$400 M in library value of 100+ films (89 of which they co-own with Warner Bros.)

$500 M – $1 B total debt

$1.4 M in debt to WGA, whose members were told to stop working with Roadshow in December

$794 K owed to Bryan Cranston’s prod company

$250 K owed to Sony Pictures TV

$300 K/month overhead

The crowning expense that brought down this 36-year-old production company is the $18 M in (unpaid) legal fees from a lengthy and currently unresolved arbitration with their long-time partner Warner Bros., with whom they’ve had a co-financing arrangement since the late 90s.

Roadshow sued after Warner Bros. released The Matrix Resurrections (2021) in theaters and on HBO Max simultaneously, a move that led Roadshow to withhold its portion of the $190 M production costs.

Due to mounting financial pressures, Village Roadshow’s CEO, Steve Mosko, a veteran film and TV exec, left the company in January.

Now, this all falls on the shoulders of Jim Moore, CEO of Vine, an equity firm that owns Village Roadshow, as well as Luc Besson’s prod company EuropaCorp.

-

Google Gemini Robotics

For safety considerations, Google mentions a “layered, holistic approach” that maintains traditional robot safety measures like collision avoidance and force limitations. The company describes developing a “Robot Constitution” framework inspired by Isaac Asimov’s Three Laws of Robotics and releasing a dataset unsurprisingly called “ASIMOV” to help researchers evaluate safety implications of robotic actions.

This new ASIMOV dataset represents Google’s attempt to create standardized ways to assess robot safety beyond physical harm prevention. The dataset appears designed to help researchers test how well AI models understand the potential consequences of actions a robot might take in various scenarios. According to Google’s announcement, the dataset will “help researchers to rigorously measure the safety implications of robotic actions in real-world scenarios.”

-

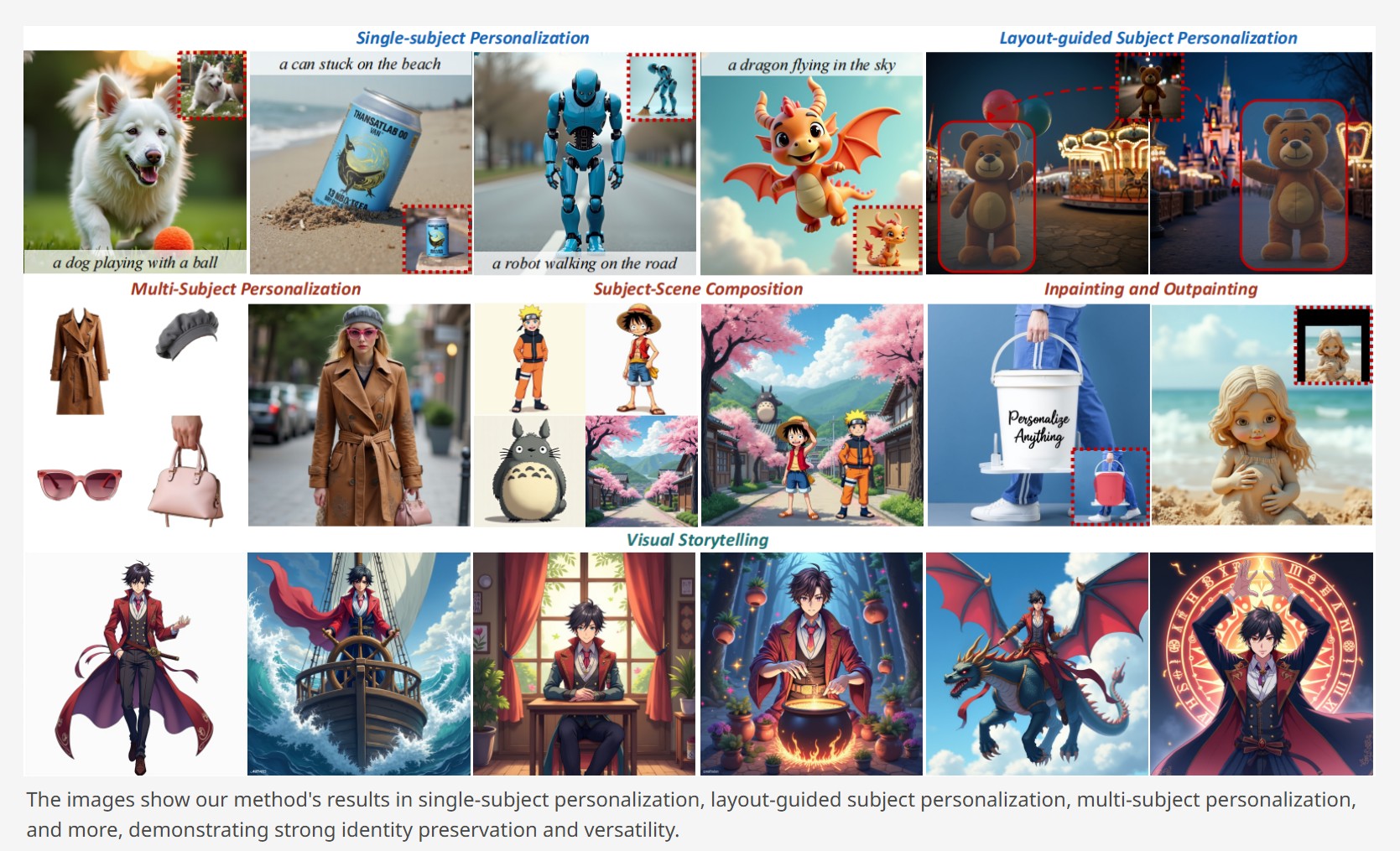

Personalize Anything – For Free with Diffusion Transformer

https://fenghora.github.io/Personalize-Anything-Page

Customize any subject with advanced DiT without additional fine-tuning.

-

Google’s new Gemini 2.0 Flash AI model is extremely proficient at removing watermarks from images

-

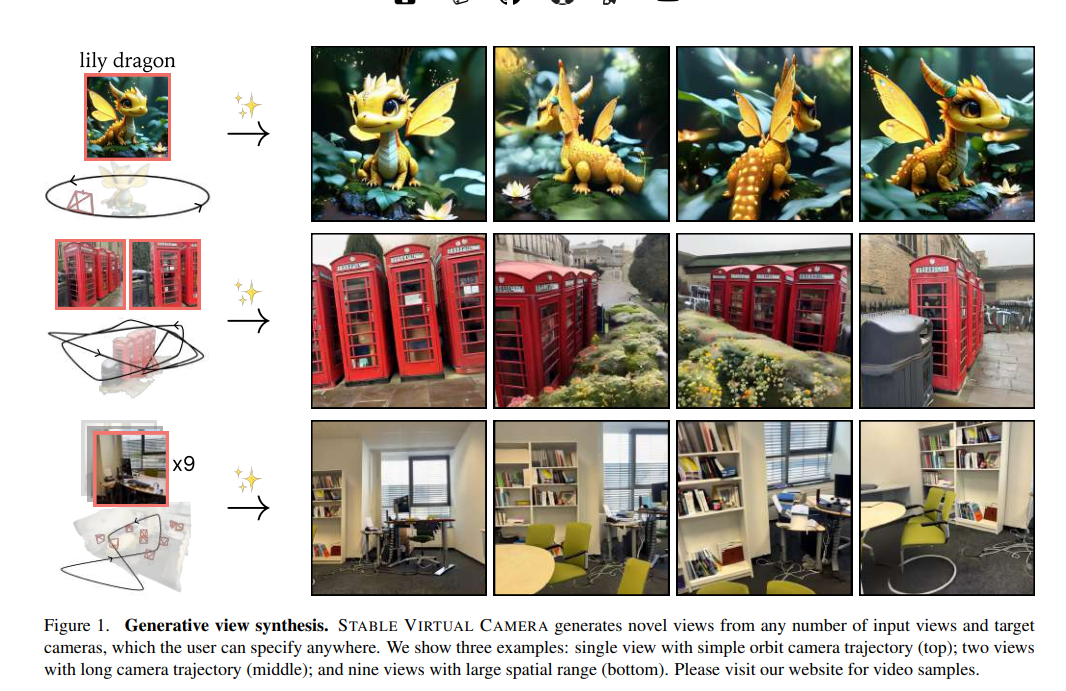

Stability.ai – Introducing Stable Virtual Camera: Multi-View Video Generation with 3D Camera Control

Capabilities

Stable Virtual Camera offers advanced capabilities for generating 3D videos, including:

- Dynamic Camera Control: Supports user-defined camera trajectories as well as multiple dynamic camera paths, including: 360°, Lemniscate (∞ shaped path), Spiral, Dolly Zoom In, Dolly Zoom Out, Zoom In, Zoom Out, Move Forward, Move Backward, Pan Up, Pan Down, Pan Left, Pan Right, and Roll.

- Flexible Inputs: Generates 3D videos from just one input image or up to 32.

- Multiple Aspect Ratios: Capable of producing videos in square (1:1), portrait (9:16), landscape (16:9), and other custom aspect ratios without additional training.

- Long Video Generation: Ensures 3D consistency in videos up to 1,000 frames, enabling seamless

Model limitations

In its initial version, Stable Virtual Camera may produce lower-quality results in certain scenarios. Input images featuring humans, animals, or dynamic textures like water often lead to degraded outputs. Additionally, highly ambiguous scenes, complex camera paths that intersect objects or surfaces, and irregularly shaped objects can cause flickering artifacts, especially when target viewpoints differ significantly from the input images.

FEATURED POSTS

-

Photography basics: Why Use a (MacBeth) Color Chart?

Start here: https://www.pixelsham.com/2013/05/09/gretagmacbeth-color-checker-numeric-values/

https://www.studiobinder.com/blog/what-is-a-color-checker-tool/

In Lightroom

In Final Cut

In Nuke

Note: In Foundry’s Nuke, the software will map 18% gray to whatever your center f/stop is set to in the viewer settings (f/8 by default… change that to EV by following the instructions below).

You can experiment with this by attaching an Exposure node to a Constant set to 0.18, setting your viewer read-out to Spotmeter, and adjusting the stops in the node up and down. You will see that a full stop up or down gives you the next value on the aperture scale (f/8, f/11, f/16, etc.). One stop doubles or halves the amount of light that hits the filmback/CCD, so everything works in powers of 2.

So starting with 0.18 in your Constant, you will see that raising it by a stop gives you 0.36 as a floating point number (in linear space), while your f-stop will be f/11, and so on. If you set your center f-stop to 0 (see below), you will get a relative read-out in EVs, where EV 0 again equals 18% constant gray.
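The stop arithmetic described here can be sketched in a few lines of Python. This is a minimal illustration and not Nuke's API: the function names and the `center_fstop` parameter are assumptions made for this example. It computes exact f-numbers (for instance 11.31), which the conventional aperture scale rounds to f/11.

```python
import math

MIDDLE_GRAY = 0.18  # 18% gray in linear space


def linear_after_stops(value, stops):
    """Scale a linear value by a number of stops (each stop doubles or halves the light)."""
    return value * 2.0 ** stops


def spot_fstop(value, center_fstop=8.0):
    """Hypothetical helper: f-number read-out for a linear value,
    assuming 18% gray maps to center_fstop (f/8 in the text above)."""
    stops = math.log2(value / MIDDLE_GRAY)
    # The f-number grows by a factor of sqrt(2) per stop.
    return center_fstop * math.sqrt(2.0) ** stops


def relative_ev(value):
    """Hypothetical helper: relative EV read-out, where EV 0 equals 18% gray."""
    return math.log2(value / MIDDLE_GRAY)


# Walk a few stops around middle gray and print the three read-outs side by side.
for stops in (-1, 0, 1, 2):
    v = linear_after_stops(MIDDLE_GRAY, stops)
    print(f"{stops:+d} stop: linear={v:.3f}  f/{spot_fstop(v):.1f}  EV={relative_ev(v):+.1f}")
```

Because both read-outs are derived from the same base-2 logarithm, switching the center f-stop to 0 only changes how the number is displayed, not the underlying pixel values.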

In other words, setting the center f-stop to 0 means that in a neutral plate, the middle gray in the Macbeth chart will equal exposure value 0. EV 0 corresponds to an exposure time of 1 sec and an aperture of f/1.0.
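As a quick check of that definition, here is a minimal sketch of the standard photographic exposure value formula, EV = log2(N² / t), where N is the f-number and t the exposure time in seconds. The function name is an assumption made for this example; it is not part of Nuke.

```python
import math


def exposure_value(f_number, shutter_seconds):
    """EV = log2(N^2 / t), with N the f-number and t the exposure time in seconds."""
    return math.log2(f_number ** 2 / shutter_seconds)


# f/1.0 at 1 second is the reference point: EV 0.
print(exposure_value(1.0, 1.0))

# f/16 at 1/125 s, a typical bright-daylight exposure, lands close to EV 15.
print(round(exposure_value(16.0, 1.0 / 125.0), 2))
```

Each full stop of aperture or shutter speed moves the result by exactly 1 EV, which is why the EV read-out lines up with the powers-of-2 behavior of linear pixel values.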

With the center f-stop at 0, the sun usually reads around EV 12-17 and the sky around EV 1-4, depending on cloud coverage.

To switch the SpotMeter in Foundry’s Nuke to return the EV of an image, click on the main viewport and press s to open the viewer’s properties. Set the center f-stop to 0 there, and the SpotMeter read-out in the viewport will change from aperture f-stops to EV.

-

Cinematographers Blueprint 300dpi poster

The 300dpi digital poster is now available to all PixelSham.com subscribers.

If you have already subscribed and would like a copy, please send me a note through the contact page.