
LATEST POSTS


Erik Winquist – The Definitive Weta Digital Guide to IBL HDRI capture

www.fxguide.com/fxfeatured/the-definitive-weta-digital-guide-to-ibl

Notes:

- Camera type: full frame with exposure bracketing and an 8mm circular fisheye lens.

- Bracketing: 7 exposures at 2-stop increments.

- Tripod: one that supports locked offsets of 120 degrees.

- Camera angle: should point up 7.5 degrees for better sky or upper dome coverage.

- Camera focus: set to manual and locked with tape.

- Start shooting facing the sun, both with and without the ND3 filter; the other angles do not require the ND3 filter.

- Document the shoot with a slate (measure the distance to the slate, and record day, location, camera info, camera temperature, and camera position).
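The bracketing and rotation notes above can be turned into a quick shot plan. The following is a minimal sketch, not part of the Weta guide: it assumes the brackets are made by varying shutter speed around a hypothetical base exposure of 1/60 s, and it assumes "ND3" means a filter with optical density 3.0 (an ND8, 3-stop filter would give a different result).

```python
import math

def bracket_shutter_times(base_time_s, count=7, step_stops=2):
    """Shutter times centred on base_time_s, spaced step_stops apart.

    Assumes an odd bracket count and that exposure is varied by
    shutter speed only.
    """
    half = (count - 1) // 2
    return [base_time_s * 2.0 ** (step_stops * i) for i in range(-half, half + 1)]

def bracket_span_stops(count=7, step_stops=2):
    """Stops between the darkest and brightest frame of one bracket."""
    return (count - 1) * step_stops

def yaw_positions(offset_deg=120):
    """Tripod yaw angles needed to cover a full turn at a fixed offset."""
    return list(range(0, 360, offset_deg))

def nd_stops(optical_density):
    """Stops of light cut by an ND filter of the given optical density."""
    return optical_density * math.log2(10)

times = bracket_shutter_times(1 / 60)   # 1/60 s is a hypothetical base exposure
for t in times:
    print(f"{t:.6f} s")
print("bracket span:", bracket_span_stops(), "stops")
print("yaw positions:", yaw_positions())
print("ND 3.0 is roughly", round(nd_stops(3.0), 2), "stops")
```

Under these assumptions, one bracket spans 12 stops, three tripod positions cover the full turn, and the filtered sun-facing bracket shifts the captured range by roughly another 10 stops.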

NOTE: The goal is to clean the individual brackets as much as possible before, or at, merge time.

This means:

- keeping original shooting metadata

- de-fringing

- removing aberration (through camera lens data or automatically)

- working at 32 bit

- in ACEScg (or ACES) wherever possible


Open Source OpenVDB Version 9.0.0 Available Now and Introduces GPU Support

First introduced in 2012, OpenVDB is now widely used in simulation tools such as Houdini, EmberGen, and Blender, and in feature film production for creating realistic volumetric images. The format, however, has lacked GPU support and has not been practical for games because of the considerable file sizes (on average at least a few gigabytes) and the computational effort required to render 3D volumes.

Volumetric data has numerous important applications in computer graphics and VFX production. It’s used for volume rendering, fluid simulation, fracture simulation, modeling with implicit surfaces, etc. However, this data is not so easy to work with. In most cases volumetric data is represented on spatially uniform, regular 3D grids. Although dense regular grids are convenient for several reasons, they have one major drawback – their memory footprint grows cubically with respect to grid resolution.
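The cubic growth is easy to make concrete. This is a small illustrative calculation, assuming a cubic grid that stores one 4-byte float per voxel (the voxel size is an assumption, not a figure from the article):

```python
def dense_grid_bytes(resolution, bytes_per_voxel=4):
    """Memory needed for a dense cubic grid with `resolution` voxels per axis."""
    return resolution ** 3 * bytes_per_voxel

for n in (256, 512, 1024, 2048):
    gib = dense_grid_bytes(n) / 2 ** 30
    print(f"{n}^3 voxels: {gib:.3f} GiB")
```

Each doubling of the resolution multiplies the footprint by eight, so a grid that fits comfortably at 512 voxels per axis needs 64 times more memory at 2048.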

The OpenVDB format, developed by DreamWorks Animation, partially solves this issue by storing voxel data in a tree-like data structure that allows the creation of sparse volumes. The beauty of this system is that it completely ignores empty cells, which drastically decreases memory and disk usage while making the rendering of volumes much faster.
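The idea of skipping empty cells can be illustrated with a toy block-sparse grid. This sketch is not the OpenVDB data structure or its API: it uses a single-level dictionary of fixed-size blocks rather than a multi-level tree, and the `SparseGrid` class and its method names are hypothetical.

```python
class SparseGrid:
    """Toy sparse voxel grid: storage is allocated only for touched blocks."""

    def __init__(self, background=0.0, block_dim=8):
        self.background = background   # value reported for empty space
        self.block_dim = block_dim     # voxels per block edge (assumed 8)
        self.blocks = {}               # (bx, by, bz) -> flat list of values

    def _locate(self, x, y, z):
        d = self.block_dim
        key = (x // d, y // d, z // d)
        offset = ((x % d) * d + (y % d)) * d + (z % d)
        return key, offset

    def set(self, x, y, z, value):
        key, offset = self._locate(x, y, z)
        block = self.blocks.get(key)
        if block is None:
            # Allocate a block the first time one of its voxels is written.
            block = [self.background] * self.block_dim ** 3
            self.blocks[key] = block
        block[offset] = value

    def get(self, x, y, z):
        key, offset = self._locate(x, y, z)
        block = self.blocks.get(key)
        return self.background if block is None else block[offset]

    def allocated_voxels(self):
        return len(self.blocks) * self.block_dim ** 3


# Fill a small 16^3 region far from the origin of a notionally huge volume.
grid = SparseGrid()
for x in range(500, 516):
    for y in range(500, 516):
        for z in range(500, 516):
            grid.set(x, y, z, 1.0)

print("blocks allocated:", len(grid.blocks))
print("voxels stored:", grid.allocated_voxels())
print("dense 1024^3 voxels:", 1024 ** 3)
```

Only the blocks that overlap the filled region consume memory; every other coordinate simply returns the background value.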

www.aswf.io/blog/project-update-openvdb-version-9-0-0-available-now-introduces-gpu-support/

github.com/AcademySoftwareFoundation/openvdb/releases/tag/v9.0.0

FEATURED POSTS


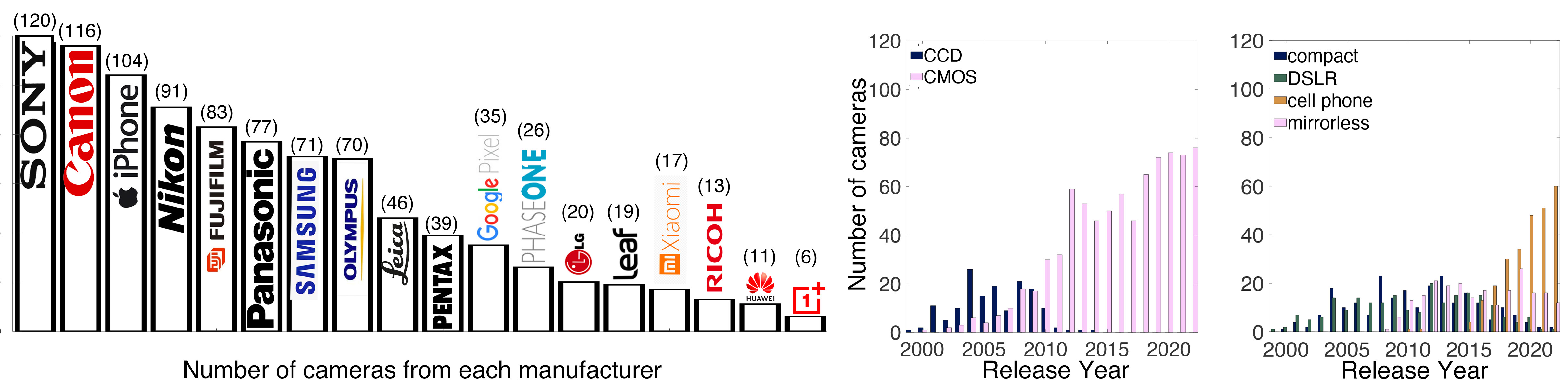

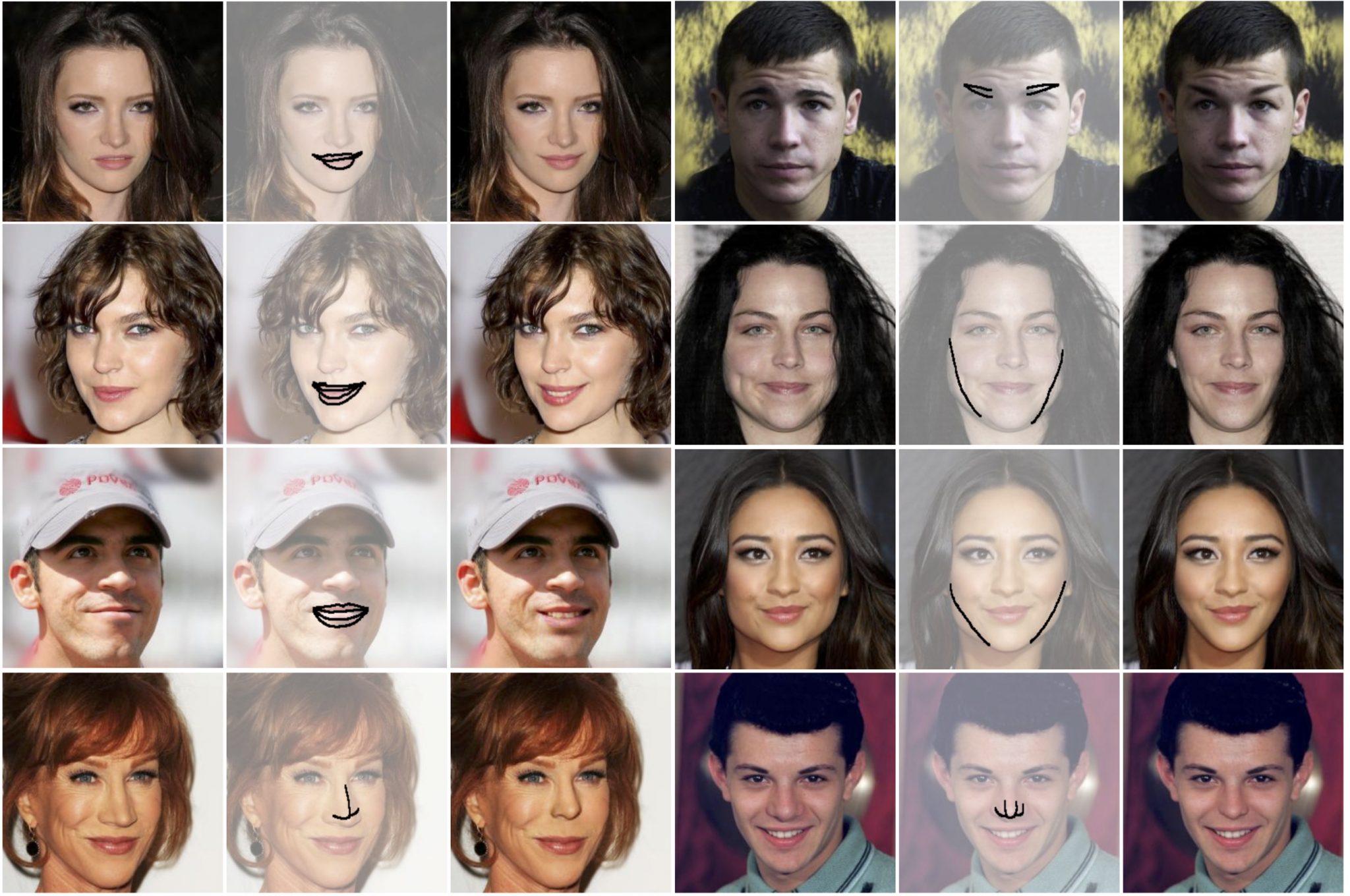

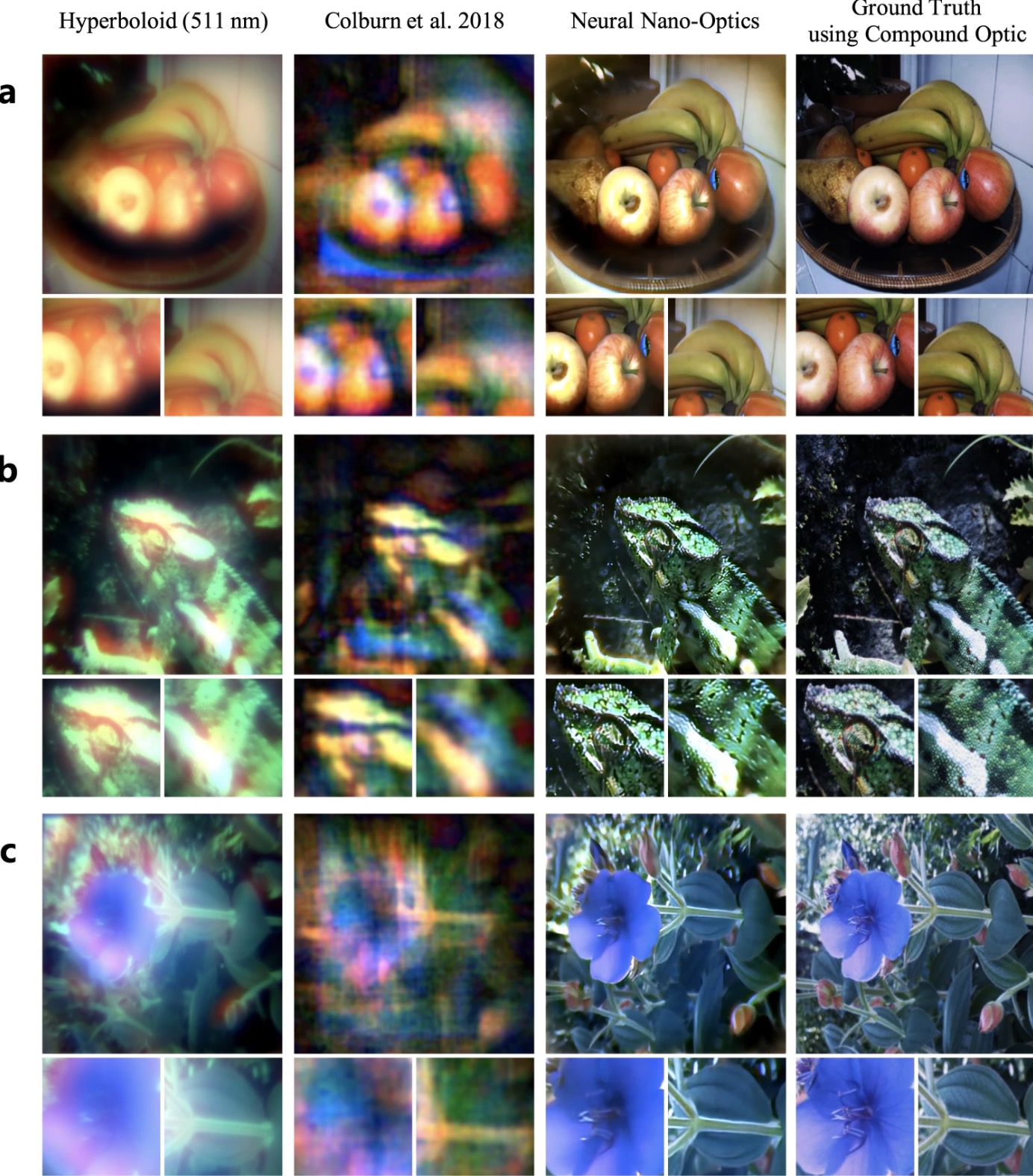

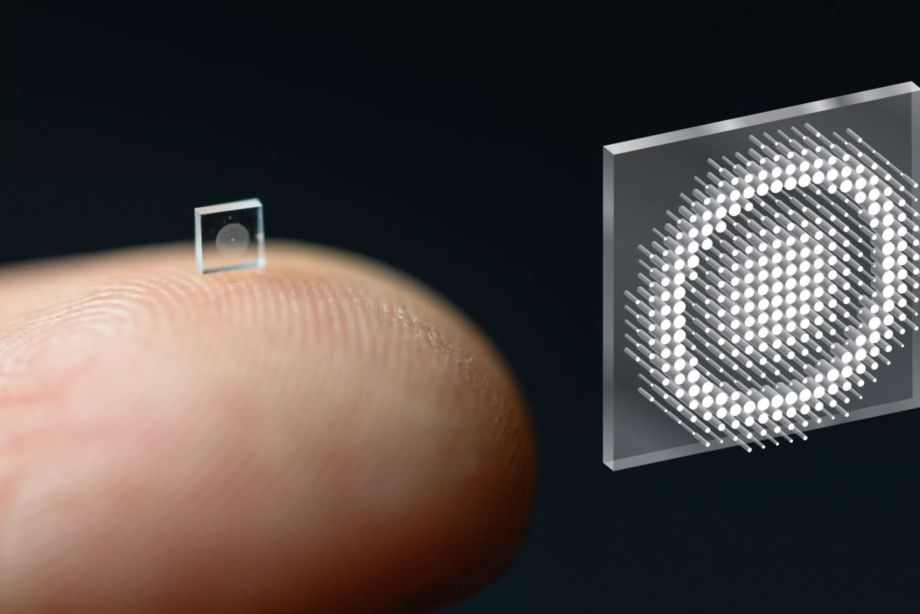

Photography Basics: Spectral Sensitivity Estimation Without a Camera

https://color-lab-eilat.github.io/Spectral-sensitivity-estimation-web/

A number of problems in computer vision and related fields would be mitigated if camera spectral sensitivities were known. As consumer cameras are not designed for high-precision visual tasks, manufacturers do not disclose spectral sensitivities. Their estimation requires a costly optical setup, which has prompted researchers to develop numerous indirect methods that aim to lower cost and complexity by using color targets. However, the use of color targets gives rise to new complications that make the estimation more difficult, and consequently, there is currently no simple, low-cost, robust go-to method for spectral sensitivity estimation that non-specialized research labs can adopt. Furthermore, even if not limited by hardware or cost, researchers frequently work with imagery from multiple cameras that they do not have in their possession.

To provide a practical solution to this problem, we propose a framework for spectral sensitivity estimation that not only does not require any hardware (including a color target), but also does not require physical access to the camera itself. Similar to other work, we formulate an optimization problem that minimizes a two-term objective function: a camera-specific term from a system of equations, and a universal term that bounds the solution space.

Unlike other work, we utilize publicly available high-quality calibration data to construct both terms. We use the colorimetric mapping matrices provided by the Adobe DNG Converter to formulate the camera-specific system of equations, and constrain the solutions using an autoencoder trained on a database of ground-truth curves. On average, we achieve reconstruction errors as low as those that can arise due to manufacturing imperfections between two copies of the same camera. We provide predicted sensitivities for more than 1,000 cameras that the Adobe DNG Converter currently supports, and discuss which tasks can become trivial when camera responses are available.
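One plausible way to write a two-term objective of this kind is shown below. The notation is an assumption for illustration and is not taken from the paper, whose exact formulation may differ: $s$ is the vector of sampled sensitivity curves, $A s = b$ stands for the camera-specific linear system built from the DNG matrices, $E$ and $D$ are the encoder and decoder of the trained autoencoder, and $\lambda$ is a hypothetical weight balancing the two terms.

$$
\hat{s} = \arg\min_{s} \; \lVert A s - b \rVert_2^2 \; + \; \lambda \, \lVert s - D(E(s)) \rVert_2^2
$$

The first term asks the curves to agree with the camera-specific equations, while the second penalizes curves that the autoencoder cannot reproduce, which keeps the solution close to the space of plausible ground-truth sensitivities.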