BREAKING NEWS

LATEST POSTS

-

Pyper – a flexible framework for concurrent and parallel data-processing in Python

Pyper is a flexible framework for concurrent and parallel data-processing, based on functional programming patterns.

https://github.com/pyper-dev/pyper

-

Jacob Bartlett – Apple is Killing Swift

https://blog.jacobstechtavern.com/p/apple-is-killing-swift

Jacob Bartlett argues that Swift, once envisioned as a simple and composable programming language by its creator Chris Lattner, has become overly complex due to Apple’s governance. Bartlett highlights that Swift now contains 217 reserved keywords, deviating from its original goal of simplicity. He contrasts Swift’s governance model, where Apple serves as the project lead and arbiter, with other languages like Python and Rust, which have more community-driven or balanced governance structures. Bartlett suggests that Apple’s control has led to Swift’s current state, moving away from Lattner’s initial vision.

-

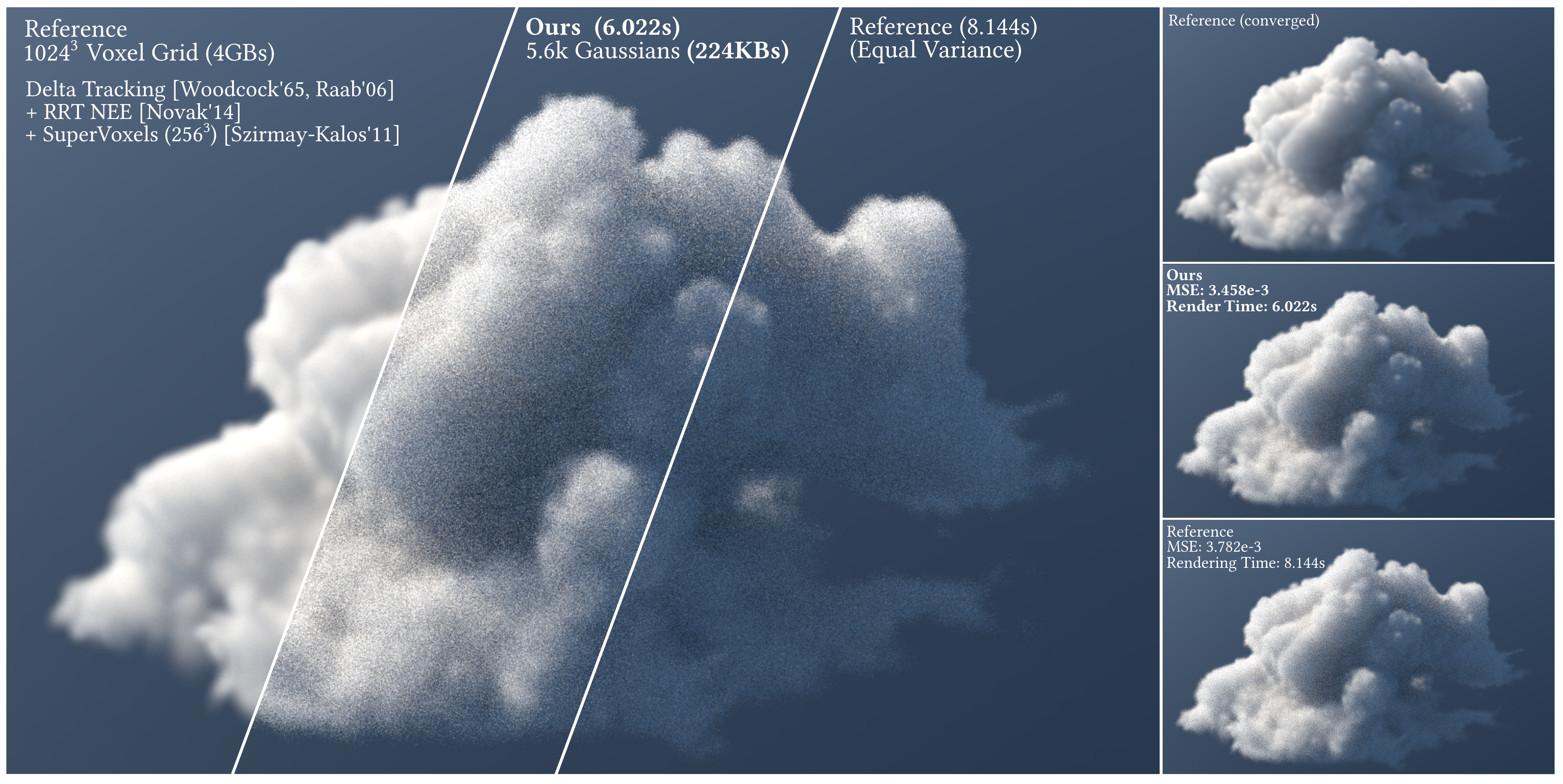

Don’t Splat your Gaussians – Volumetric Ray-Traced Primitives for Modeling and Rendering Scattering and Emissive Media

https://arcanous98.github.io/projectPages/gaussianVolumes.html

We propose a compact and efficient alternative to existing volumetric representations for rendering such as voxel grids.

-

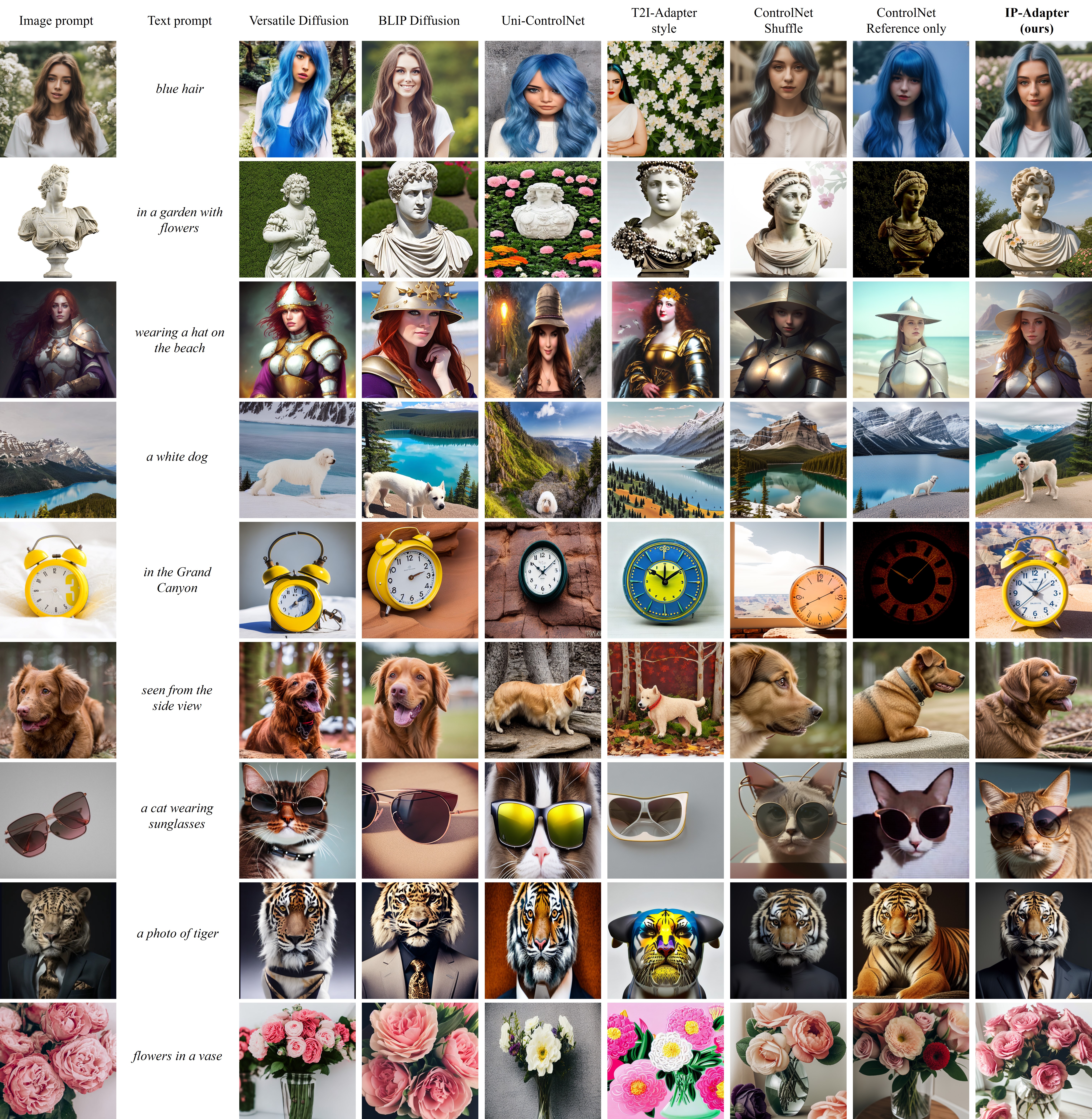

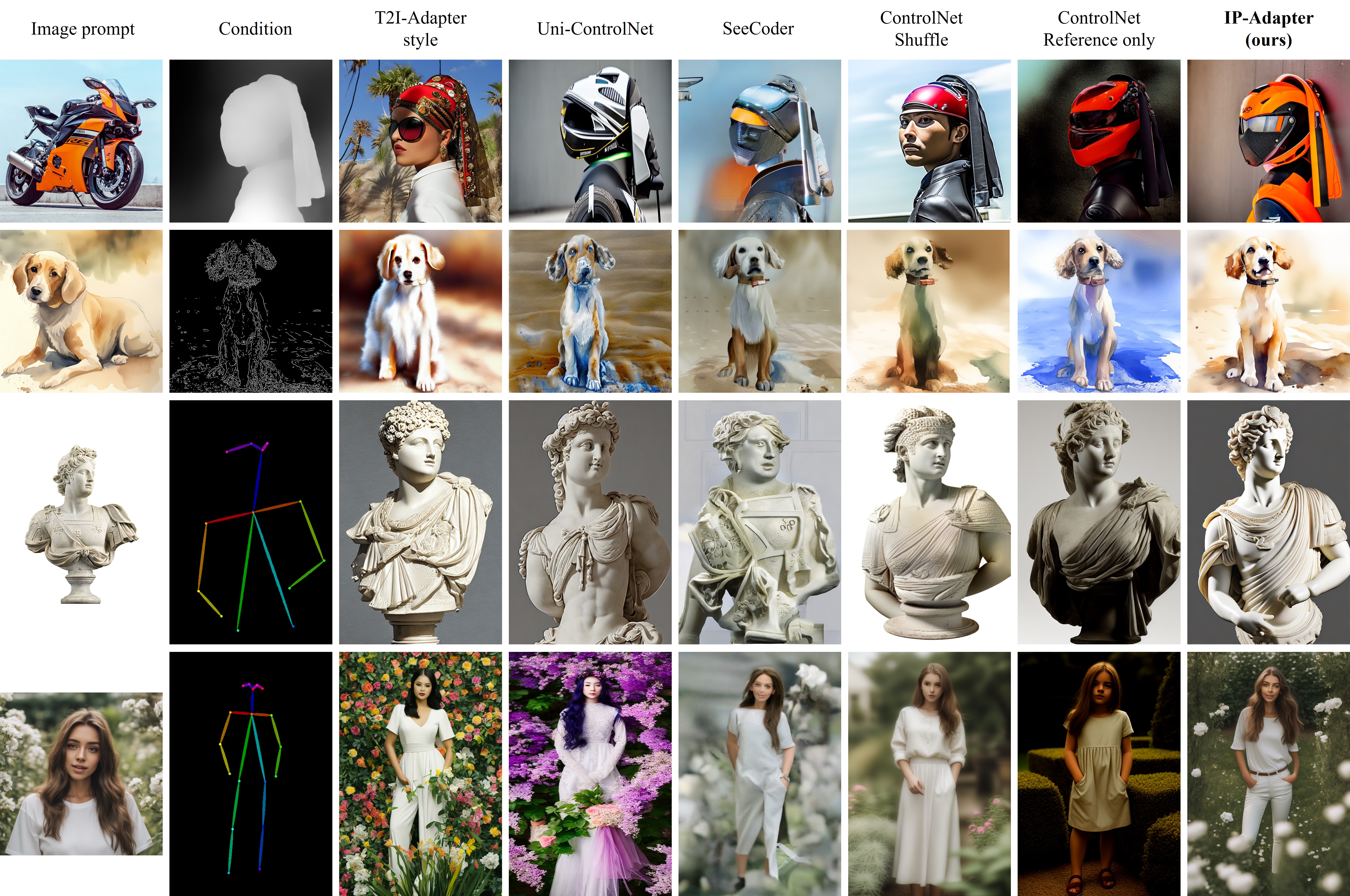

IPAdapter – Text Compatible Image Prompt Adapter for Text-to-Image Image-to-Image Diffusion Models and ComfyUI implementation

github.com/tencent-ailab/IP-Adapter

The IPAdapter are very powerful models for image-to-image conditioning. The subject or even just the style of the reference image(s) can be easily transferred to a generation. Think of it as a 1-image lora. They are an effective and lightweight adapter to achieve image prompt capability for the pre-trained text-to-image diffusion models. An IP-Adapter with only 22M parameters can achieve comparable or even better performance to a fine-tuned image prompt model.

Once the IP-Adapter is trained, it can be directly reusable on custom models fine-tuned from the same base model.The IP-Adapter is fully compatible with existing controllable tools, e.g., ControlNet and T2I-Adapter.

-

SPAR3D – Stable Point-Aware Reconstruction of 3D Objects from Single Images

SPAR3D is a fast single-image 3D reconstructor with intermediate point cloud generation, which allows for interactive user edits and achieves state-of-the-art performance.

https://github.com/Stability-AI/stable-point-aware-3d

https://stability.ai/news/stable-point-aware-3d?utm_source=x&utm_medium=social&utm_campaign=SPAR3D

-

MiniMax-01 goes open source

MiniMax is thrilled to announce the release of the MiniMax-01 series, featuring two groundbreaking models:

MiniMax-Text-01: A foundational language model.

MiniMax-VL-01: A visual multi-modal model.Both models are now open-source, paving the way for innovation and accessibility in AI development!

🔑 Key Innovations

1. Lightning Attention Architecture: Combines 7/8 Lightning Attention with 1/8 Softmax Attention, delivering unparalleled performance.

2. Massive Scale with MoE (Mixture of Experts): 456B parameters with 32 experts and 45.9B activated parameters.

3. 4M-Token Context Window: Processes up to 4 million tokens, 20–32x the capacity of leading models, redefining what’s possible in long-context AI applications.💡 Why MiniMax-01 Matters

1. Innovative Architecture for Top-Tier Performance

The MiniMax-01 series introduces the Lightning Attention mechanism, a bold alternative to traditional Transformer architectures, delivering unmatched efficiency and scalability.2. 4M Ultra-Long Context: Ushering in the AI Agent Era

With the ability to handle 4 million tokens, MiniMax-01 is designed to lead the next wave of agent-based applications, where extended context handling and sustained memory are critical.3. Unbeatable Cost-Effectiveness

Through proprietary architectural innovations and infrastructure optimization, we’re offering the most competitive pricing in the industry:

$0.2 per million input tokens

$1.1 per million output tokens🌟 Experience the Future of AI Today

We believe MiniMax-01 is poised to transform AI applications across industries. Whether you’re building next-gen AI agents, tackling ultra-long context tasks, or exploring new frontiers in AI, MiniMax-01 is here to empower your vision.✅ Try it now for free: hailuo.ai

📄 Read the technical paper: filecdn.minimax.chat/_Arxiv_MiniMax_01_Report.pdf

🌐 Learn more: minimaxi.com/en/news/minimax-01-series-2

💡API Platform: intl.minimaxi.com/

FEATURED POSTS

-

AnimationXpress.com interviews Daniele Tosti for TheCgCareer.com channel

You’ve been in the VFX Industry for over a decade. Tell us about your journey.

It all started with my older brother giving me a Commodore64 personal computer as a gift back in the late 80′. I realised then I could create something directly from my imagination using this new digital media format. And, eventually, make a living in the process.

That led me to start my professional career in 1990. From live TV to games to animation. All the way to live action VFX in the recent years.I really never stopped to crave to create art since those early days. And I have been incredibly fortunate to work with really great talent along the way, which made my journey so much more effective.

What inspired you to pursue VFX as a career?

An incredible combination of opportunities, really. The opportunity to express myself as an artist and earn money in the process. The opportunity to learn about how the world around us works and how best solve problems. The opportunity to share my time with other talented people with similar passions. The opportunity to grow and adapt to new challenges. The opportunity to develop something that was never done before. A perfect storm of creativity that fed my continuous curiosity about life and genuinely drove my inspiration.

Tell us about the projects you’ve particularly enjoyed working on in your career

(more…)

-

ComfyDock – The Easiest (Free) Way to Safely Run ComfyUI Sessions in a Boxed Container

https://www.reddit.com/r/comfyui/comments/1j2x4qv/comfydock_the_easiest_free_way_to_run_comfyui_in/

ComfyDock is a tool that allows you to easily manage your ComfyUI environments via Docker.

Common Challenges with ComfyUI

- Custom Node Installation Issues: Installing new custom nodes can inadvertently change settings across the whole installation, potentially breaking the environment.

- Workflow Compatibility: Workflows are often tested with specific custom nodes and ComfyUI versions. Running these workflows on different setups can lead to errors and frustration.

- Security Risks: Installing custom nodes directly on your host machine increases the risk of malicious code execution.

How ComfyDock Helps

- Environment Duplication: Easily duplicate your current environment before installing custom nodes. If something breaks, revert to the original environment effortlessly.

- Deployment and Sharing: Workflow developers can commit their environments to a Docker image, which can be shared with others and run on cloud GPUs to ensure compatibility.

- Enhanced Security: Containers help to isolate the environment, reducing the risk of malicious code impacting your host machine.

-

Photography basics: Why Use a (MacBeth) Color Chart?

Start here: https://www.pixelsham.com/2013/05/09/gretagmacbeth-color-checker-numeric-values/

https://www.studiobinder.com/blog/what-is-a-color-checker-tool/

In LightRoom

in Final Cut

in Nuke

Note: In Foundry’s Nuke, the software will map 18% gray to whatever your center f/stop is set to in the viewer settings (f/8 by default… change that to EV by following the instructions below).

You can experiment with this by attaching an Exposure node to a Constant set to 0.18, setting your viewer read-out to Spotmeter, and adjusting the stops in the node up and down. You will see that a full stop up or down will give you the respective next value on the aperture scale (f8, f11, f16 etc.).One stop doubles or halves the amount or light that hits the filmback/ccd, so everything works in powers of 2.

So starting with 0.18 in your constant, you will see that raising it by a stop will give you .36 as a floating point number (in linear space), while your f/stop will be f/11 and so on.If you set your center stop to 0 (see below) you will get a relative readout in EVs, where EV 0 again equals 18% constant gray.

In other words. Setting the center f-stop to 0 means that in a neutral plate, the middle gray in the macbeth chart will equal to exposure value 0. EV 0 corresponds to an exposure time of 1 sec and an aperture of f/1.0.

This will set the sun usually around EV12-17 and the sky EV1-4 , depending on cloud coverage.

To switch Foundry’s Nuke’s SpotMeter to return the EV of an image, click on the main viewport, and then press s, this opens the viewer’s properties. Now set the center f-stop to 0 in there. And the SpotMeter in the viewport will change from aperture and fstops to EV.