LATEST POSTS

-

OpenAI Backs Critterz, an AI-Made Animated Feature Film

https://www.wsj.com/tech/ai/openai-backs-ai-made-animated-feature-film-389f70b0

Film, called ‘Critterz,’ aims to debut at Cannes Film Festival and will leverage startup’s AI tools and resources.

“Critterz,” about forest creatures who go on an adventure after their village is disrupted by a stranger, is the brainchild of Chad Nelson, a creative specialist at OpenAI. Nelson started sketching out the characters three years ago while trying to make a short film with what was then OpenAI’s new DALL-E image-generation tool.

-

AI and the Law: Anthropic to Pay $1.5 Billion to Settle Book Piracy Class Action Lawsuit

https://variety.com/2025/digital/news/anthropic-class-action-settlement-billion-1236509571

The settlement amounts to about $3,000 per book and is believed to be the largest ever recovery in a U.S. copyright case, according to the plaintiffs’ attorneys.

-

Sir Peter Jackson’s Wētā FX records $140m loss in two years, amid staff layoffs

https://www.thepost.co.nz/business/360813799/weta-fx-posts-59m-loss-amid-industry-headwinds

Wētā FX, Sir Peter Jackson’s largest business, has posted a $59.3 million loss for the year to March 31, an improvement on an $83m loss last year.

-

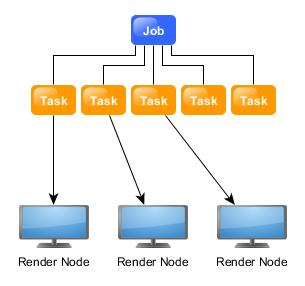

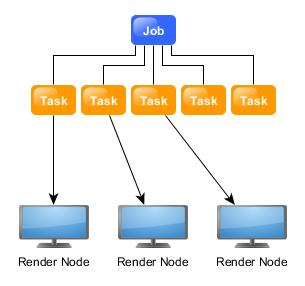

ComfyUI Thinkbox Deadline plugin

Submit ComfyUI workflows to Thinkbox Deadline render farm.

Features

- Submit ComfyUI workflows directly to Deadline

- Batch rendering with seed variation

- Real-time progress monitoring via Deadline Monitor

- Configurable pools, groups, and priorities

https://github.com/doubletwisted/ComfyUI-Deadline-Plugin

https://docs.thinkboxsoftware.com/products/deadline/latest/1_User%20Manual/manual/overview.html

Deadline 10 is a cross-platform render farm management tool for Windows, Linux, and macOS. It gives users control of their rendering resources and can be used on-premises, in the cloud, or both. It handles asset syncing to the cloud, manages data transfers, and supports tagging for cost tracking purposes.

Deadline 10’s Remote Connection Server allows for communication over HTTPS, improving performance and scalability. Where supported, users can use usage-based licensing to supplement their existing fixed pool of software licenses when rendering through Deadline 10.
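The "batch rendering with seed variation" feature listed above can be illustrated with a minimal sketch. It is not the plugin's actual code: it assumes a workflow exported in ComfyUI's API format (a dictionary of node IDs, each with an `inputs` mapping), and the helper name `vary_seeds` is hypothetical.

```python
import copy


def vary_seeds(workflow, count, base_seed=0):
    """Return `count` deep copies of a workflow, each with a different seed.

    Assumption: sampler nodes store their seed as a literal integer under
    inputs["seed"]; linked inputs (lists such as ["4", 0]) are left alone.
    """
    variants = []
    for i in range(count):
        variant = copy.deepcopy(workflow)
        for node in variant.values():
            inputs = node.get("inputs", {})
            if isinstance(inputs.get("seed"), int):
                inputs["seed"] = base_seed + i
        variants.append(variant)
    return variants


# Tiny stand-in workflow with one sampler node and one output node.
workflow = {
    "3": {"class_type": "KSampler", "inputs": {"seed": 0, "steps": 20}},
    "9": {"class_type": "SaveImage", "inputs": {"images": ["8", 0]}},
}

jobs = vary_seeds(workflow, count=3, base_seed=100)
print([job["3"]["inputs"]["seed"] for job in jobs])  # [100, 101, 102]
```

Each variant could then be submitted as its own render task, so the farm produces one image per seed.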

-

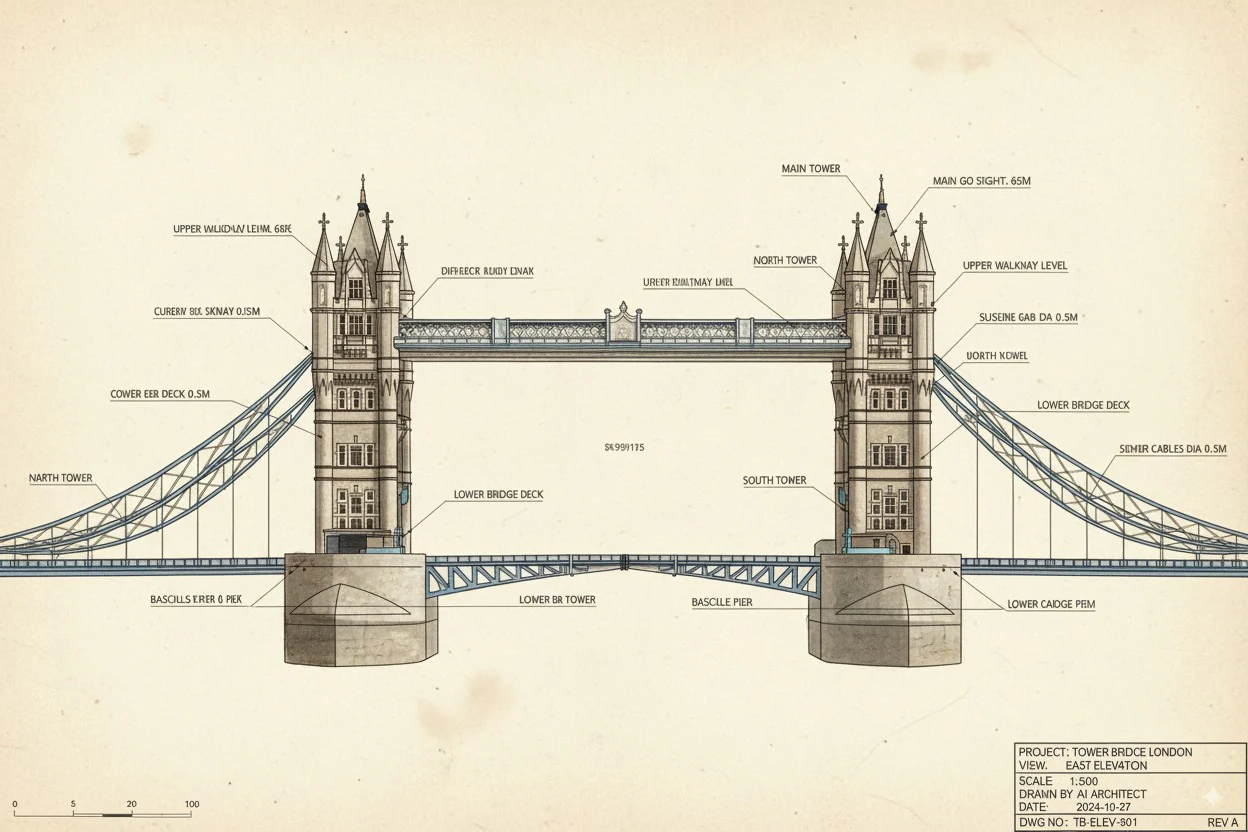

Google’s Nano Banana AI: Free Tool for 3D Architecture Models

https://landscapearchitecture.store/blogs/news/nano-banana-ai-free-tool-for-3d-architecture-models

How to Use Nano Banana AI for Architecture

- Go to Google AI Studio.

- Log in with your Gmail and select Gemini 2.5 (Nano Banana).

- Upload a photo — either from your laptop or a Google Street View screenshot.

- Paste this example prompt:

“Use the provided architectural photo as reference. Generate a high-fidelity 3D building model in the look of a 3D-printed architecture model.”

- Wait a few seconds, and your 3D architecture model will be ready.

Pro tip: If you want more accuracy, upload two images — a street photo for the facade and an aerial view for the roof/top.

-

Blender 4.5 switches from OpenGL to Vulkan support

Blender is moving from OpenGL to Vulkan as its graphics backend, with Blender 4.5 marking a significant step, to achieve better performance and prepare for future features such as real-time ray tracing and global illumination. To enable Vulkan, go to Edit > Preferences > System, set the “Backend” option to “Vulkan,” then restart Blender. The reported benefits include faster startup times, improved viewport responsiveness, and more efficient handling of complex scenes through better use of your CPU and GPU resources.

Why the Switch to Vulkan?

- Modern Graphics API: Vulkan is a newer, lower-level, and more efficient API that provides developers with greater control over hardware, unlike the older, higher-level OpenGL.

- Performance Boost: This change significantly improves performance in various areas, such as viewport rendering, material loading, and overall UI responsiveness, especially in complex scenes with many textures.

- Better Resource Utilization: Vulkan distributes work more effectively across the CPU and reduces driver overhead, allowing Blender to make better use of your computer’s power.

- Future-Proofing: The Vulkan backend paves the way for advanced features like real-time ray tracing and global illumination in future versions of Blender.
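The preference change described above can also be scripted. The sketch below is an assumption-laden illustration: it presumes the setting is exposed in Blender's Python API as `bpy.context.preferences.system.gpu_backend` with a `'VULKAN'` option, and the helper name `request_vulkan` is hypothetical. A stand-in object is used so the snippet also runs outside Blender.

```python
from types import SimpleNamespace


def request_vulkan(system_prefs):
    """Switch the backend preference to Vulkan if the option is present.

    Returns True when the value was changed, False otherwise.
    Assumption: the object exposes a `gpu_backend` attribute.
    """
    current = getattr(system_prefs, "gpu_backend", None)
    if current is None or current == "VULKAN":
        return False
    system_prefs.gpu_backend = "VULKAN"
    return True


# Inside Blender one would pass the real preferences object instead:
#   import bpy
#   request_vulkan(bpy.context.preferences.system)
# Outside Blender, a stand-in shows the behaviour.
fake_prefs = SimpleNamespace(gpu_backend="OPENGL")
print(request_vulkan(fake_prefs), fake_prefs.gpu_backend)  # True VULKAN
```

As with the manual route, Blender still needs a restart before the new backend takes effect.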

-

Diffuman4D – 4D Consistent Human View Synthesis from Sparse-View Videos with Spatio-Temporal Diffusion Models

Given sparse-view videos, Diffuman4D (1) generates 4D-consistent multi-view videos conditioned on these inputs, and (2) reconstructs a high-fidelity 4DGS model of the human performance using both the input and the generated videos.

FEATURED POSTS

-

ComfyDock – The Easiest (Free) Way to Safely Run ComfyUI Sessions in a Boxed Container

https://www.reddit.com/r/comfyui/comments/1j2x4qv/comfydock_the_easiest_free_way_to_run_comfyui_in/

ComfyDock is a tool that allows you to easily manage your ComfyUI environments via Docker.

Common Challenges with ComfyUI

- Custom Node Installation Issues: Installing new custom nodes can inadvertently change settings across the whole installation, potentially breaking the environment.

- Workflow Compatibility: Workflows are often tested with specific custom nodes and ComfyUI versions. Running these workflows on different setups can lead to errors and frustration.

- Security Risks: Installing custom nodes directly on your host machine increases the risk of malicious code execution.

How ComfyDock Helps

- Environment Duplication: Easily duplicate your current environment before installing custom nodes. If something breaks, revert to the original environment effortlessly.

- Deployment and Sharing: Workflow developers can commit their environments to a Docker image, which can be shared with others and run on cloud GPUs to ensure compatibility.

- Enhanced Security: Containers help to isolate the environment, reducing the risk of malicious code impacting your host machine.

-

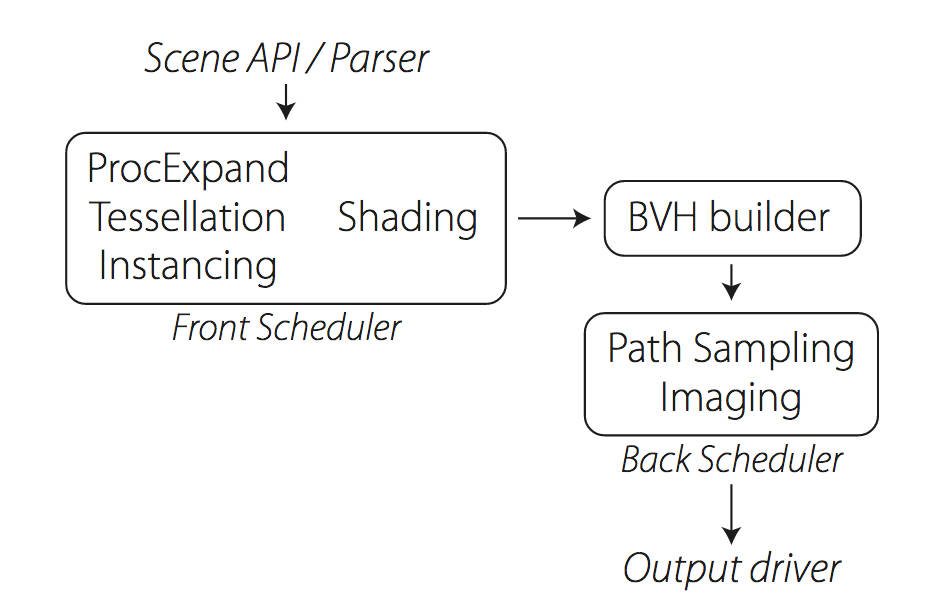

Weta Digital – Manuka Raytracer and Gazebo GPU renderers – pipeline

https://jo.dreggn.org/home/2018_manuka.pdf

http://www.fxguide.com/featured/manuka-weta-digitals-new-renderer/

The Manuka rendering architecture has been designed in the spirit of the classic reyes rendering architecture. In its core, reyes is based on stochastic rasterisation of micropolygons, facilitating depth of field, motion blur, high geometric complexity, and programmable shading.

Over the years, however, the expectations have risen substantially when it comes to image quality. Computing pictures which are indistinguishable from real footage requires accurate simulation of light transport, which is most often performed using some variant of Monte Carlo path tracing. Unfortunately this paradigm requires random memory accesses to the whole scene and does not lend itself well to a rasterisation approach at all.

Monte Carlo path tracing is commonly implemented with a paradigm often called shade-on-hit, in which the renderer alternates tracing rays with running shaders on the various ray hits. The shaders take the role of generating the inputs of the local material structure, which is then used by path sampling logic to evaluate contributions and to inform what further rays to cast through the scene.

Manuka is both a uni-directional and bidirectional path tracer and encompasses multiple importance sampling (MIS). Interestingly, and importantly for production character skin work, it is the first major production renderer to incorporate spectral MIS in the form of a new ‘Hero Spectral Sampling’ technique, which was recently published at Eurographics Symposium on Rendering 2014.
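To make the multiple importance sampling (MIS) mention concrete, here is a minimal sketch of the balance heuristic, one standard way of weighting samples from several strategies. The text does not say which heuristic Manuka uses, so this is an assumption for illustration, and the function names are hypothetical.

```python
def balance_heuristic(pdfs, index):
    """MIS weight for strategy `index`, given every strategy's pdf at the sample."""
    total = sum(pdfs)
    return pdfs[index] / total if total > 0.0 else 0.0


def mis_contribution(f_value, pdfs, index):
    """Weighted contribution w * f / p of one sample drawn from strategy `index`."""
    p = pdfs[index]
    if p <= 0.0:
        return 0.0
    return balance_heuristic(pdfs, index) * f_value / p


# Two strategies (for example light sampling and BSDF sampling) evaluated
# at the same sample point, with made-up pdf values.
pdfs = [0.5, 1.5]
print(balance_heuristic(pdfs, 0), balance_heuristic(pdfs, 1))  # 0.25 0.75
print(mis_contribution(2.0, pdfs, 1))  # 1.0
```

The weights across strategies sum to one, so combining the strategies does not bias the estimate.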

Manuka proposes a shade-before-hit paradigm instead, minimising I/O strain (and some memory costs) on the system. It leverages locality of reference by running pattern generation shaders before light transport simulation by path sampling is executed, “compressing” any bvh structure as needed and thereby also limiting duplication of source data.

The difference from reyes is that instead of baking colors into the geometry, Manuka bakes surface closures. This means that light transport is still calculated with path tracing, but all texture lookups etc. are done up-front and baked into the geometry. The main drawback of this method is that geometry has to be tessellated to its highest, stable topology before shading can be evaluated properly, hence the high cost to first pixel. Even a basic four-vertex square becomes a much more complex model with this approach.

Manuka uses the RenderMan Shading Language (rsl) for programmable shading [Pixar Animation Studios 2015], but it does not invoke rsl shaders when intersecting a ray with a surface (often called shade-on-hit). Instead, all the input geometry is pre-tessellated and pre-shaded in the front end of the renderer.

This way, we can efficiently order shading computations to support near-optimal texture locality, vectorisation, and parallelism. This system avoids repeated evaluation of shaders at the same surface point, and presents a minimal amount of memory to be accessed during light transport time. An added benefit is that the acceleration structure for ray tracing (a bounding volume hierarchy, bvh) is built once on the final tessellated geometry, which allows us to ray trace more efficiently than multi-level bvhs and avoids costly caching of on-demand tessellated micropolygons and the associated scheduling issues.

For the shading reasons above, the studio's approach to AOVs is to combine complex shading with ray paths in the render itself rather than pass a multi-pass render to compositing.
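The difference between shade-on-hit and shade-before-hit can be shown with a toy sketch that only counts shader calls. It is not Manuka's implementation: the "shader", the vertex IDs and the hit list are all made up for illustration.

```python
def shade(point, counter):
    """Stand-in for an expensive shader: counts calls, returns a fake BSDF input."""
    counter["calls"] += 1
    return {"albedo": 0.1 * point}


def shade_on_hit(hits, counter):
    # The shader runs at every ray hit, even for a point seen before.
    return [shade(p, counter) for p in hits]


def shade_before_hit(vertices, hits, counter):
    # The shader runs once per vertex up front; hits only read baked results.
    baked = {v: shade(v, counter) for v in vertices}
    return [baked[p] for p in hits]


vertices = [0, 1, 2, 3]          # pre-tessellated surface points
hits = [2, 2, 0, 3, 2, 0, 3, 3]  # points reached by rays during light transport

on_hit_counter = {"calls": 0}
before_counter = {"calls": 0}
result_a = shade_on_hit(hits, on_hit_counter)
result_b = shade_before_hit(vertices, hits, before_counter)

print(on_hit_counter["calls"], before_counter["calls"])  # 8 4
print(result_a == result_b)  # True
```

Vertex 1 is never hit yet is still shaded in the second variant, which mirrors the up-front cost to first pixel described earlier.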

For the spectral rendering component, the light transport stage is fully spectral, using a continuously sampled wavelength which is traced with each path and used to apply the spectral camera sensitivity of the sensor. This makes it possible to faithfully support any degree of observer metamerism in the camera footage the renders are intended to match, as well as complex materials which require wavelength-dependent phenomena such as diffraction, dispersion, interference, iridescence, or chromatic extinction and Rayleigh scattering in participating media.
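As a rough illustration of sampling wavelengths per path, the sketch below follows the general idea behind hero wavelength sampling as commonly described: one "hero" wavelength is chosen at random and the remaining ones are placed at equal offsets, wrapping around the visible range. The range limits, the number of wavelengths and the function name are assumptions, not details taken from Manuka.

```python
LAMBDA_MIN = 380.0  # nm, assumed lower end of the visible range
LAMBDA_MAX = 780.0  # nm, assumed upper end of the visible range


def hero_wavelengths(u, count=4, lo=LAMBDA_MIN, hi=LAMBDA_MAX):
    """Map a uniform random number u in [0, 1) to `count` wavelengths.

    The first entry is the hero wavelength; the others are rotated by equal
    fractions of the range and wrapped back into [lo, hi).
    """
    span = hi - lo
    hero_offset = u * span
    return [lo + (hero_offset + j * span / count) % span for j in range(count)]


print(hero_wavelengths(0.5))  # [580.0, 680.0, 380.0, 480.0]
```

In a renderer, `u` would come from the path's random number stream, and every wavelength in the list would be carried along the same path.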

As opposed to the original reyes paper, we use bilinear interpolation of these bsdf inputs later when evaluating bsdfs per path vertex during light transport. This improves temporal stability of geometry which moves very slowly with respect to the pixel raster.
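The bilinear interpolation mentioned above can be sketched for a single scalar input on one quad. This is a generic illustration with made-up corner values, not code from the renderer; the parameter names are hypothetical.

```python
def lerp(a, b, t):
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


def bilinear(v00, v10, v01, v11, u, v):
    """Interpolate a per-vertex value across a quad at parametric position (u, v).

    v00 and v10 are the values along the v = 0 edge, v01 and v11 along v = 1.
    """
    bottom = lerp(v00, v10, u)
    top = lerp(v01, v11, u)
    return lerp(bottom, top, v)


# A pre-shaded BSDF input (say, roughness) stored at the four corners of a
# micropolygon, looked up at the centre of the quad.
print(bilinear(0.0, 1.0, 2.0, 3.0, 0.5, 0.5))  # 1.5
```

Because the value varies smoothly across the quad, a hit point that drifts slowly over the surface sees a gradual change rather than a jump from one constant-shaded micropolygon to the next.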

In terms of the pipeline, everything rendered at Weta was already completely interwoven with their deep data pipeline, and Manuka was very much written with deep data in mind. Here, Manuka does not so much extend the deep capabilities as fully match the extremely complex and powerful setup Weta Digital already enjoys with RenderMan. For example, an ape in a scene can be selected, its ID is available, and a NUKE artist can then paint in 3D on, say, a hand and part of the way up the neutral-posed ape.

We called our system Manuka, as a respectful nod to reyes: we had heard a story from a former ILM employee about how reyes got its name from how fond the early Pixar people were of their lunches at Point Reyes, and decided to name our system after our surrounding natural environment, too. Manuka is a kind of tea tree very common in New Zealand which has very many very small leaves, in analogy to micropolygons in a tree structure for ray tracing. It also happens to be the case that Weta Digital’s main site is on Manuka Street.