
LATEST POSTS

-

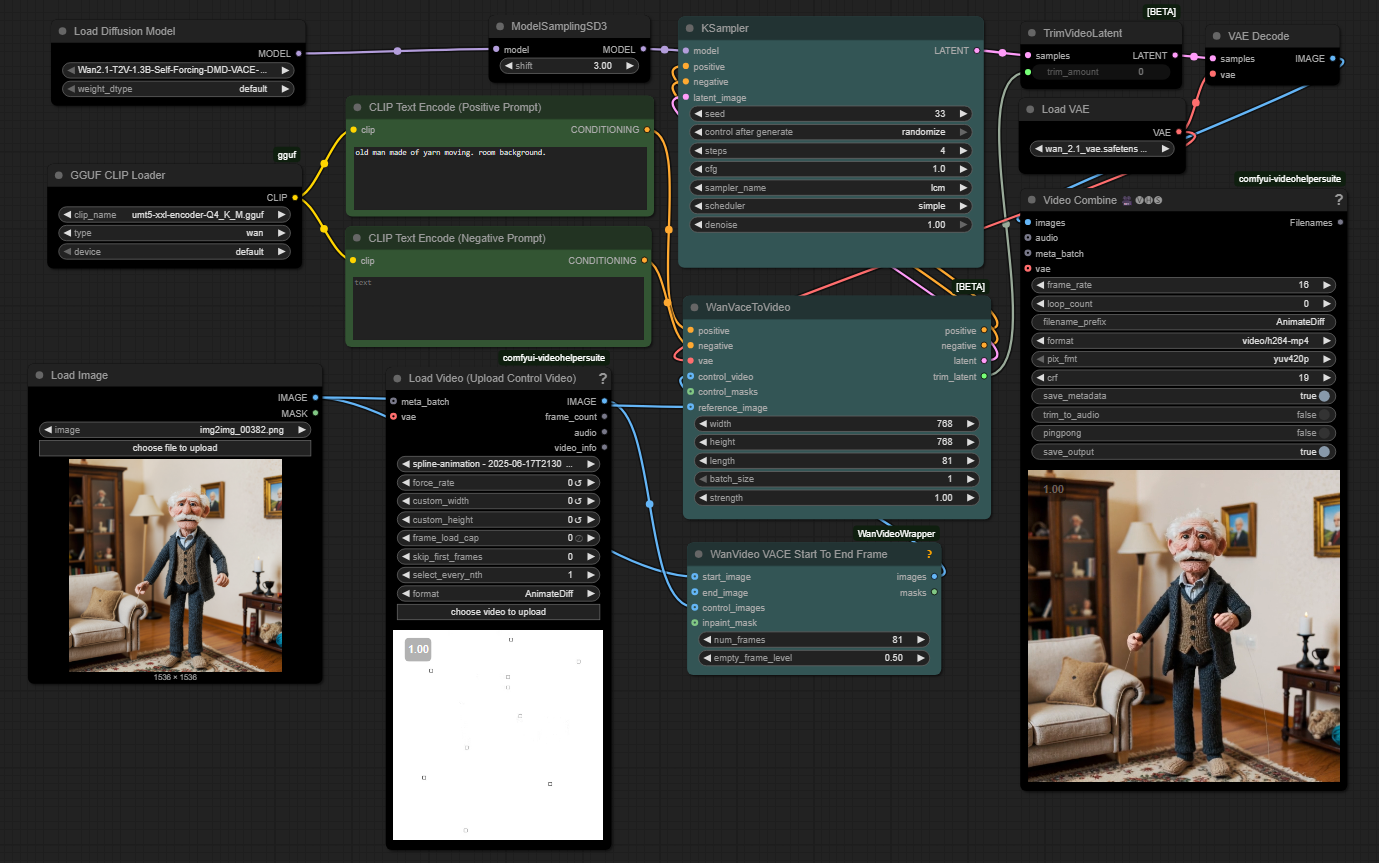

WhatDreamsCost Spline-Path-Control – Create motion controls for ComfyUI

https://github.com/WhatDreamsCost/Spline-Path-Control

https://whatdreamscost.github.io/Spline-Path-Control/

https://github.com/WhatDreamsCost/Spline-Path-Control/tree/main/example_workflows

Spline Path Control is a simple tool designed to make it easy to create motion controls. It allows you to create and animate shapes that follow splines, and then export the result as a .webm video file.

This project was created to simplify the process of generating control videos for tools like VACE. Use it to control the motion of anything (camera movement, objects, humans, etc.), all without extra prompting.

- Multi-Spline Editing: Create multiple, independent spline paths

- Easy To Use Controls: Quickly edit splines and points

- Full Control of Splines and Shapes:

- Start Frame: Set a delay before a spline’s animation begins.

- Duration: Control the speed of the shape along its path.

- Easing: Apply Linear, Ease-in, Ease-out, and Ease-in-out functions for smooth acceleration and deceleration.

- Tension: Adjust the “curviness” of the spline path.

- Shape Customization: Change the shape (circle, square, triangle), size, fill color, and border.

- Reference Images: Drag and drop or upload a background image to trace paths over an existing image.

- WebM Export: Export your animation with a white background, perfect for use as a control video in VACE.
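To illustrate how the Start Frame, Duration, and Easing settings listed above might interact, here is a minimal Python sketch. It is not taken from the Spline Path Control source: the function names, the quadratic easing formulas, and the `progress` helper are all assumptions chosen for illustration, and the tool itself may compute these differently.

```python
# Hypothetical sketch: maps a frame number to a 0..1 position along a path.
# None of these names come from Spline Path Control itself.

def linear(t):
    return t

def ease_in(t):
    # Starts slowly, then accelerates (quadratic, assumed).
    return t * t

def ease_out(t):
    # Starts quickly, then decelerates (quadratic, assumed).
    return 1 - (1 - t) ** 2

def ease_in_out(t):
    # Accelerates through the first half, decelerates through the second.
    if t < 0.5:
        return 2 * t * t
    return 1 - 2 * (1 - t) ** 2

EASINGS = {
    "linear": linear,
    "ease_in": ease_in,
    "ease_out": ease_out,
    "ease_in_out": ease_in_out,
}

def progress(frame, start_frame, duration, easing="linear"):
    """Return how far along its path a shape is at `frame`, from 0.0 to 1.0.

    The shape waits at the start of the path until `start_frame`, then takes
    `duration` frames to reach the end, so a shorter duration means faster motion.
    """
    if duration <= 0:
        raise ValueError("duration must be positive")
    t = (frame - start_frame) / duration
    t = min(max(t, 0.0), 1.0)  # Clamp before and after the animation window.
    return EASINGS[easing](t)

if __name__ == "__main__":
    for frame in (0, 10, 15, 20, 30):
        print(frame, progress(frame, start_frame=10, duration=20, easing="ease_in_out"))
```

The returned value would then be used to look up a point on the spline, so the easing changes only the timing of the motion, not the shape of the path.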

-

MiniMax-Remover – Taming Bad Noise Helps Video Object Removal Rotoscoping

https://github.com/zibojia/MiniMax-Remover

MiniMax-Remover is a fast and effective video object remover based on minimax optimization. It operates in two stages: the first stage trains a remover using a simplified DiT architecture, while the second stage distills a robust remover with CFG removal and fewer inference steps.

FEATURED POSTS

-

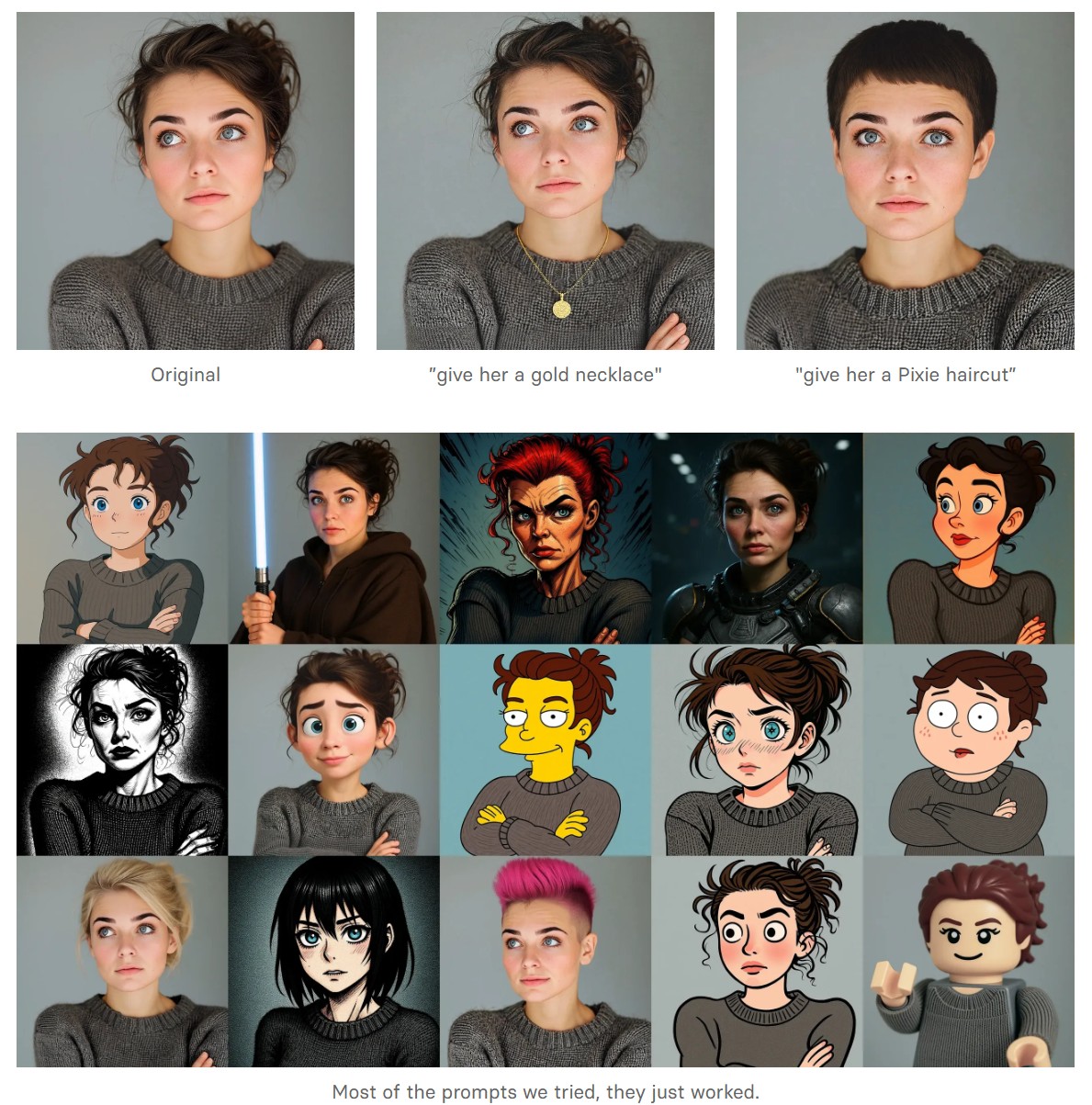

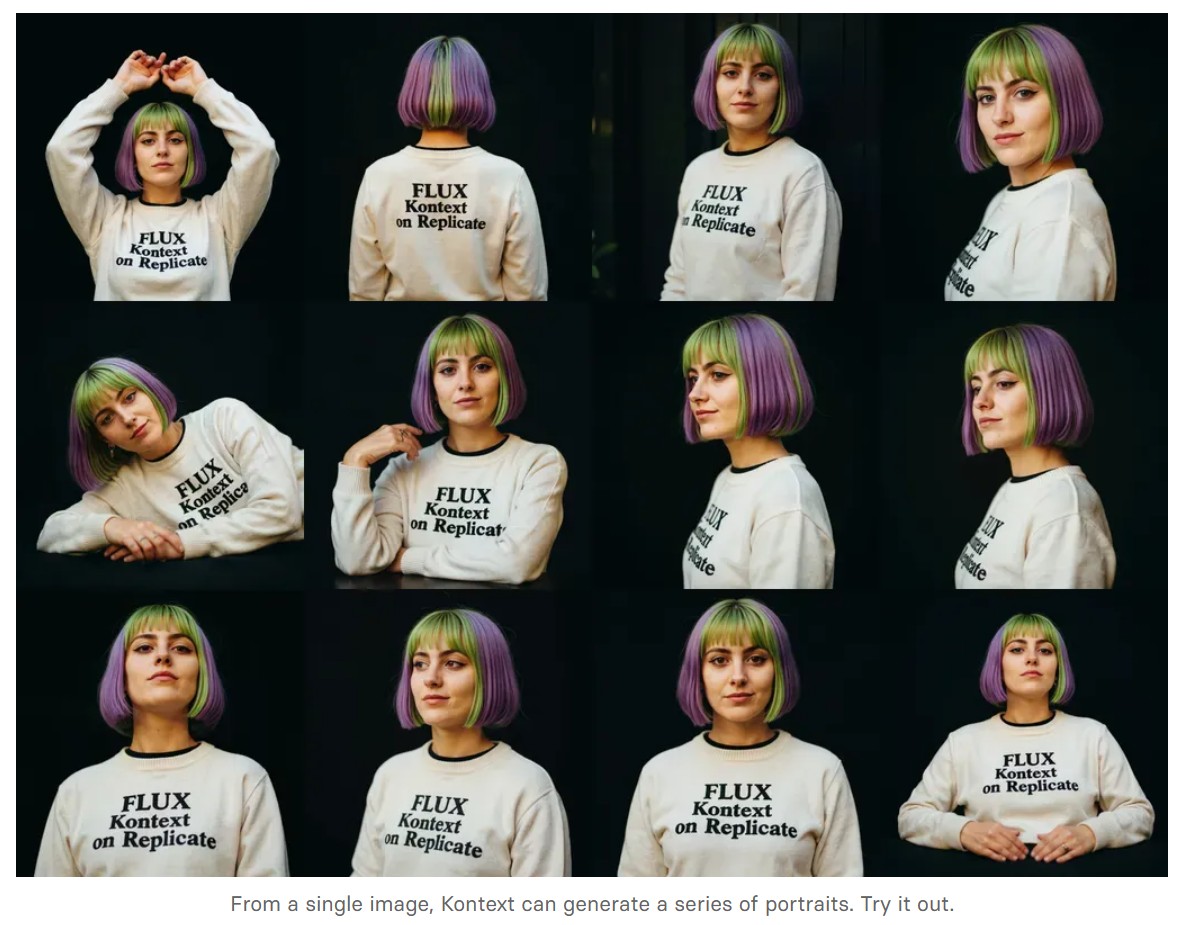

Black Forest Labs released FLUX.1 Kontext

https://replicate.com/blog/flux-kontext

https://replicate.com/black-forest-labs/flux-kontext-pro

There are three models. Two are available now, and a third, open-weight version is coming soon:

- FLUX.1 Kontext [pro]: State-of-the-art performance for image editing. High-quality outputs, great prompt following, and consistent results.

- FLUX.1 Kontext [max]: A premium model that brings maximum performance, improved prompt adherence, and high-quality typography generation without compromise on speed.

- Coming soon: FLUX.1 Kontext [dev]: An open-weight, guidance-distilled version of Kontext.

We’re so excited about what Kontext can do that we’ve created a collection of models on Replicate to give you ideas:

- Multi-image kontext: Combine two images into one.

- Portrait series: Generate a series of portraits from a single image

- Change haircut: Change a person’s hair style and color

- Iconic locations: Put yourself in front of famous landmarks

- Professional headshot: Generate a professional headshot from any image

-

Ross Pettit on The Agile Manager – How tech firms came to prioritize cash flow over talent (and artists)

For years, tech firms were fighting a war for talent. Now they are waging war on talent.

This shift has led to a weakening of the social contract between employees and employers, with culture and employee values being sidelined in favor of financial discipline and free cash flow.

The operating environment has changed from a high tolerance for failure (where cheap capital and willing spenders accepted slipped dates and feature lag) to a very low – if not zero – tolerance for failure (fiscal discipline is in vogue again).

While preventing and containing mistakes staves off shocks to the income statement, it doesn’t fundamentally reduce costs. Years of payroll bloat – aggressive hiring, aggressive comp packages to attract and retain people – make labor the biggest cost in tech.

…Of course, companies can reduce their labor force through natural attrition. Other labor policy changes – return to office mandates, contraction of fringe benefits, reduction of job promotions, suspension of bonuses and comp freezes – encourage more people to exit voluntarily. It’s cheaper to let somebody self-select out than it is to lay them off.

…Employees recruited in more recent years from outside the ranks of tech were given the expectation that we’ll teach you what you need to know, we want you to join because we value what you bring to the table. That is no longer applicable. Runway for individual growth is very short in zero-tolerance-for-failure operating conditions. Job preservation, at least in the short term for this cohort, comes from completing corporate training and acquiring professional certifications. Training through community or experience is not in the cards.

…The ability to perform competently in multiple roles, the extra-curriculars, the self-directed enrichment, the ex-company leadership – all these things make no matter. The calculus is what you got paid versus how you performed on objective criteria relative to your cohort. Nothing more.

…Here is where the change in the social contract is perhaps the most blatant. In the “destination employer” years, the employee invested in the community and its values, and the employer rewarded the loyalty of its employees through things like runway for growth (stretch roles and sponsored work innovation) and tolerance for error (valuing demonstrable learning over perfection in execution). No longer.

…

http://www.rosspettit.com/2024/08/for-years-tech-was-fighting-war-for.html

-

About color: What is a LUT

http://www.lightillusion.com/luts.html

https://www.shutterstock.com/blog/how-use-luts-color-grading

A LUT (Lookup Table) is essentially the modifier between two images, the original image and the displayed image, based on a mathematical formula. In effect, LUTs are conversion matrices of varying complexity. There are different types of LUTs: viewing, transform, calibration, 1D, and 3D.
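As a rough illustration of the idea, the Python sketch below builds a simple 1D LUT and applies it to a few 8-bit pixel values. The gamma formula and the function names `build_gamma_lut` and `apply_lut` are assumptions picked for the example, not something described in the linked articles. A 3D LUT works on RGB triplets rather than single channel values and is not covered here.

```python
# Hypothetical 1D LUT example: precompute the output for every possible
# 8-bit input once, then convert pixels with a plain table lookup.

def build_gamma_lut(gamma, size=256):
    """Build a 1D LUT that applies a gamma curve (an assumed example formula)."""
    max_value = size - 1
    return [
        round(((i / max_value) ** (1.0 / gamma)) * max_value)
        for i in range(size)
    ]

def apply_lut(pixels, lut):
    """Map each original pixel value to its displayed value via the table."""
    return [lut[p] for p in pixels]

if __name__ == "__main__":
    lut = build_gamma_lut(2.2)
    original = [0, 32, 64, 128, 255]
    displayed = apply_lut(original, lut)
    print(list(zip(original, displayed)))
```

Once the table is built, applying it costs one lookup per pixel no matter how complex the formula that generated it.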

-

VQGAN + CLIP AI made Music Video for the song Canvas by Resonate

“In this video, I utilized artificial intelligence to generate an animated music video for the song Canvas by Resonate. This tool allows anyone to generate beautiful images using only text as the input. My question was, what if I used song lyrics as input to the AI, can I make perfect music-synchronized videos automatically with the push of a button? Let me know how you think the AI did in this visual interpretation of the song.

After getting caught up in the excitement around DALL·E2 (latest and greatest AI system, it’s INSANE), I searched for any way I could use similar image generation for music synchronization. Since DALL·E2 is not available to the public yet, my search led me to VQGAN + CLIP (Vector Quantized Generative Adversarial Network and Contrastive Language–Image Pre-training), before settling more specifically on Disco Diffusion V5.2 Turbo. If you don’t know what any of these words or acronyms mean, don’t worry, I was just as confused when I first started learning about this technology. I believe we’re reaching a turning point where entire industries are about to shift in reaction to this new process (which is essentially magic!).

DoodleChaos”