
LATEST POSTS

-

HumanDiT – Pose-Guided Diffusion Transformer for Long-form Human Motion Video Generation

https://agnjason.github.io/HumanDiT-page

By inputting a single character image and template pose video, our method can generate vocal avatar videos featuring not only pose-accurate rendering but also realistic body shapes.

-

DynVFX – Augmenting Real Videos with Dynamic Content

Given an input video and a simple user-provided text instruction describing the desired content, our method synthesizes dynamic objects or complex scene effects that naturally interact with the existing scene over time. The position, appearance, and motion of the new content are seamlessly integrated into the original footage while accounting for camera motion, occlusions, and interactions with other dynamic objects in the scene, resulting in a cohesive and realistic output video.

https://dynvfx.github.io/sm/index.html

-

ByteDance OmniHuman-1

https://omnihuman-lab.github.io

ByteDance researchers propose OmniHuman, an end-to-end, multimodality-conditioned human video generation framework that generates human videos from a single human image and motion signals (e.g., audio only, video only, or a combination of audio and video). OmniHuman introduces a mixed training strategy for multimodal motion conditioning, which lets the model benefit from scaling up data across mixed conditions. This addresses a limitation of previous end-to-end approaches, which were held back by the scarcity of high-quality data. The authors report that OmniHuman significantly outperforms existing methods, generating highly realistic human videos from weak input signals, especially audio. It accepts input images of any aspect ratio, whether portraits, half-body, or full-body shots, and delivers lifelike, high-quality results across a variety of scenarios.
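
The general idea of training one model on mixed combinations of conditions can be illustrated with random condition dropout: for each training sample, every available motion condition is independently kept or masked, so the model sees many combinations of signals. This is a generic sketch, not OmniHuman's published recipe; the condition names and keep probabilities are hypothetical.

```python
import random
from typing import Dict, Optional

# Hypothetical per-condition keep probabilities, for illustration only.
# These are NOT OmniHuman's published training ratios.
KEEP_PROB: Dict[str, float] = {
    "text": 1.0,   # always kept in this sketch
    "audio": 0.5,  # kept in roughly half of the training steps
    "pose": 0.25,  # kept least often in this sketch
}


def mix_conditions(sample: Dict[str, object],
                   keep_prob: Dict[str, float],
                   rng: random.Random) -> Dict[str, Optional[object]]:
    """Return a copy of `sample` in which each condition is independently
    kept with probability keep_prob[name] and otherwise replaced by None,
    i.e. treated as absent for this training step."""
    mixed: Dict[str, Optional[object]] = {}
    for name, value in sample.items():
        p = keep_prob.get(name, 1.0)  # conditions without an entry are always kept
        mixed[name] = value if rng.random() < p else None
    return mixed


if __name__ == "__main__":
    rng = random.Random(0)
    sample = {"text": "a person talking", "audio": "<audio features>", "pose": "<pose sequence>"}
    for _ in range(3):
        print(mix_conditions(sample, KEEP_PROB, rng))
```

Giving each condition its own probability, rather than one global dropout rate, is what lets a training schedule decide how often each signal is present.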

-

Conda – an open-source package and environment management system for installing multiple versions of software packages and their dependencies into separate virtual environments

https://anaconda.org/anaconda/conda

https://docs.conda.io/projects/conda/en/latest/user-guide/getting-started.html
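
As a sketch, assuming a hypothetical environment named `demo-env` with packages from the community `conda-forge` channel, the snippet below renders a minimal `environment.yml` file. Saving its output and running `conda env create -f environment.yml` (or `micromamba create -f environment.yml`) would create that environment. The YAML is written by hand to avoid a PyYAML dependency, so it only handles plain names that need no quoting.

```python
from typing import List


def environment_yml(name: str, channels: List[str], dependencies: List[str]) -> str:
    """Render a minimal conda-style environment.yml as text.

    Hand-written YAML: only suitable for simple names and version pins
    that need no quoting or escaping."""
    lines = [f"name: {name}", "channels:"]
    lines += [f"  - {ch}" for ch in channels]
    lines.append("dependencies:")
    lines += [f"  - {dep}" for dep in dependencies]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical environment name and packages, for illustration only.
    print(environment_yml("demo-env", ["conda-forge"], ["python=3.11", "numpy"]), end="")
```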

NOTE: The company behind it, Anaconda, recently changed its terms of service, and this service now incurs costs for organizations above a certain size.

Use micromamba instead.

-

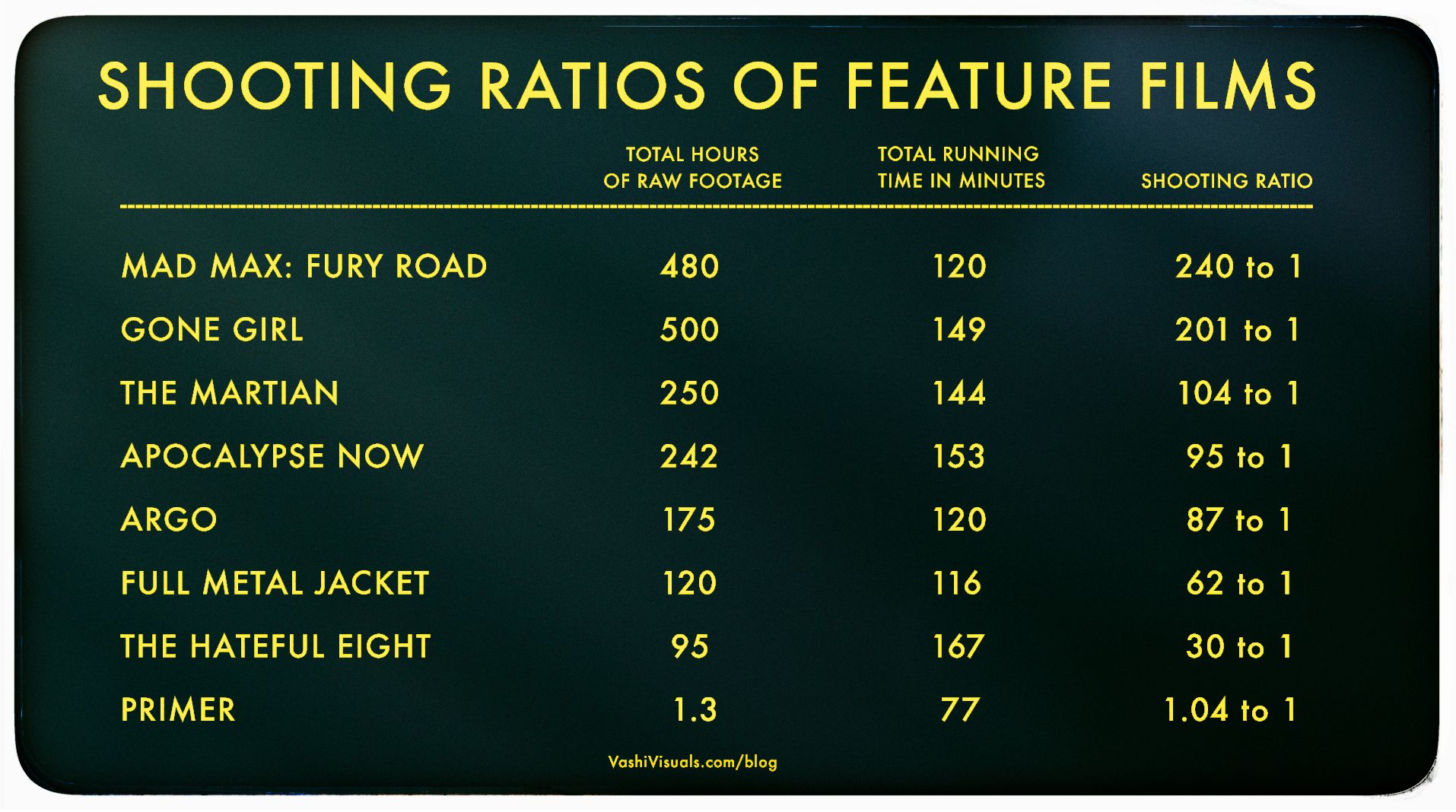

Vashi Nedomansky – Shooting ratios of feature films

In the Golden Age of Hollywood (1930-1959), a 10:1 shooting ratio was the norm—a 90-minute film meant about 15 hours of footage. Directors like Alfred Hitchcock famously kept it tight with a 3:1 ratio, giving studios little wiggle room in the edit.

Fast forward to today: the digital era has sent shooting ratios skyrocketing. Affordable cameras roll endlessly, capturing multiple takes, resets, and everything in between. Gone are the disciplined “Action to Cut” days of film.

https://en.wikipedia.org/wiki/Shooting_ratio
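
The footage figures above follow from simple arithmetic: a ratio of R:1 means R minutes shot for every minute in the final cut. A minimal helper (the function name is illustrative) reproduces the 15-hour figure and, assuming the same 90-minute runtime, what a 3:1 ratio would imply.

```python
def footage_hours(runtime_minutes: float, shooting_ratio: float) -> float:
    """Hours of raw footage implied by an R:1 shooting ratio,
    i.e. R minutes shot per minute of finished film."""
    return runtime_minutes * shooting_ratio / 60.0


if __name__ == "__main__":
    print(footage_hours(90, 10))  # Golden Age norm: 15.0
    print(footage_hours(90, 3))   # 3:1 ratio for the same runtime: 4.5
```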

FEATURED POSTS

-

GaiaNet – Install and run your own local and decentralized free AI agent service

https://github.com/GaiaNet-AI/gaianet-node

GaiaNet is a decentralized computing infrastructure that enables individuals and businesses to create, deploy, scale, and monetize their own AI agents, reflecting their styles, values, knowledge, and expertise. Each GaiaNet node provides:

- a web-based chatbot UI.

- an OpenAI-compatible API. See how to use a GaiaNet node as a drop-in OpenAI replacement in your favorite AI agent app.
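
Because the node exposes an OpenAI-compatible API, a client only needs to point a standard chat completions request at the node's base URL. The sketch below builds such a request with the Python standard library without sending it; the base URL and model name are hypothetical placeholders to replace with your own node's values.

```python
import json
import urllib.request
from typing import Dict, List

# Hypothetical values: replace with your node's base URL and a model it serves.
BASE_URL = "http://localhost:8080/v1"
MODEL = "example-model"


def build_chat_request(base_url: str, model: str,
                       messages: List[Dict[str, str]]) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request(BASE_URL, MODEL, [{"role": "user", "content": "Hello!"}])
    # Sending it requires a running node:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp)["choices"][0]["message"]["content"])
    print(req.get_method(), req.full_url)
```

Keeping the base URL as a parameter is what makes the swap "drop-in": the same request shape works against any OpenAI-compatible server.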

-

Micael Widell – Insect Macro with Diffuser Photography Basics in 10 Minutes (or more)

- Manual mode

- ISO 200

- Aperture f/8

- Shutter speed 1/200 s

- Overhead flash in manual mode at 1/16 power

- Flash diffuser