LATEST POSTS

-

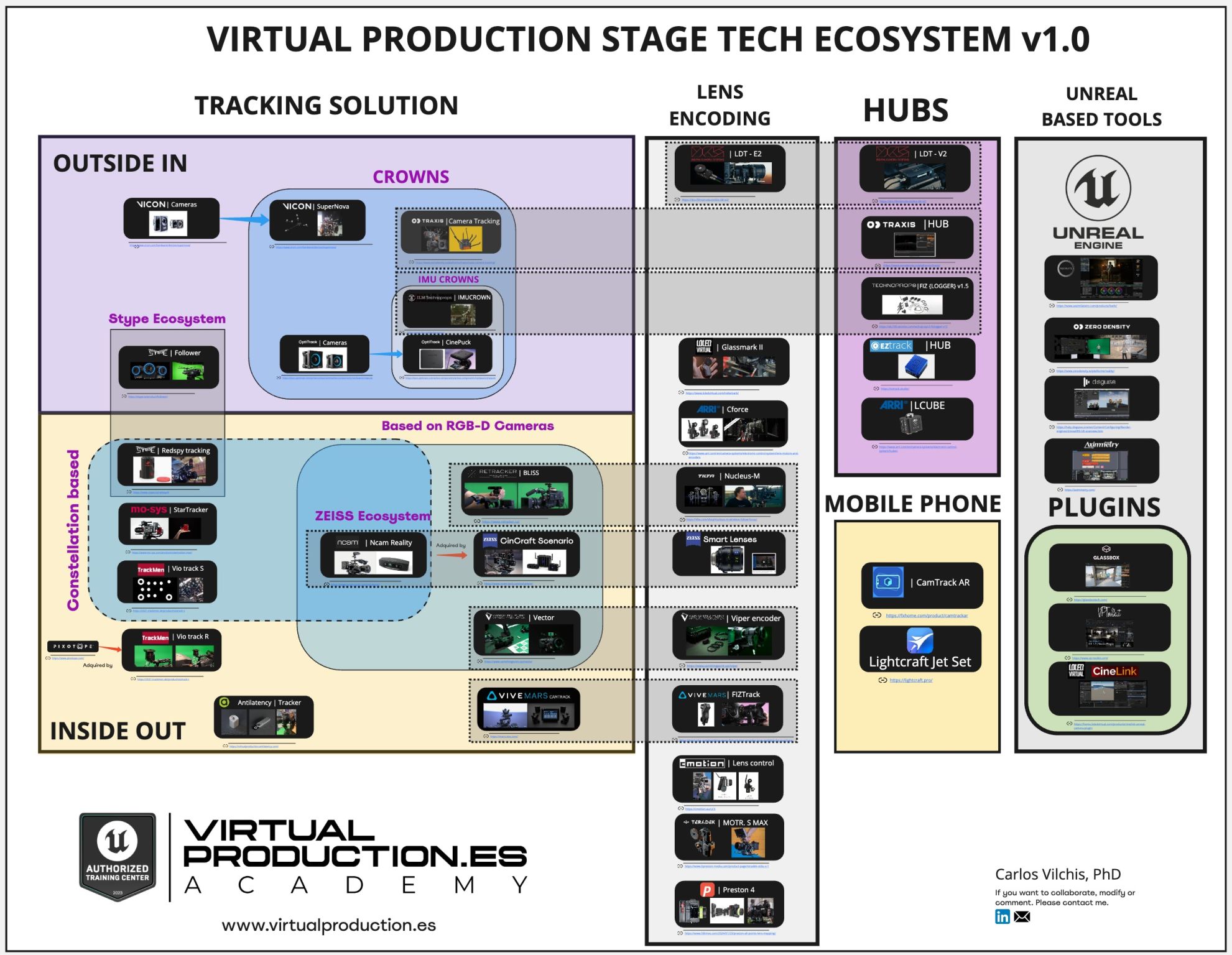

Carlos Vilchi – Virtual Production Stage Tech scheme v1.0

Carlos Vilchi has compiled an overview of the technology related to Stage Tech, including:

- All the tracking technology that exists today (inside-out and outside-in)

- All lens-encoding vendors and their compatibility

- Tools, plugins and hubs

- The smaller ecosystems among Vicon, ZEISS Cinematography, ILM Technoprops, OptiTrack, stYpe, Antilatency, Ncam Technologies Ltd, Mo-Sys Engineering Ltd, EZtrack®, ARRI, DCS – Digital Camera Systems, Zero Density, Disguise, Aximmetry Technologies, HTC VIVE, Lightcraft Technology and more!

A local copy is included in the post.

-

Ben McEwan – Deconstructing Despill Algorithms

Despilling is arguably the most important step to get right when pulling a key. A great despill can often hide imperfections in your alpha channel and spare you tedious manual paint work on the edges.

benmcewan.com/blog/2018/05/20/understanding-despill-algorithms/
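The linked article deconstructs how despill algorithms work. As a minimal illustration of one widely used family (not necessarily the article's exact formulations), a green despill clamps the green channel to a limit derived from red and blue, and whatever green sits above that limit is treated as spill. The sketch below assumes float RGB values and a green screen; the function name `despill_green` and the limiter names are illustrative only.

```python
def despill_green(r, g, b, limiter="average"):
    """Suppress green spill on one float RGB pixel.

    Returns (r, g_despilled, b, spill), where spill is the amount of green removed.
    """
    if limiter == "average":
        limit = (r + b) / 2.0   # green may not exceed the mean of red and blue
    elif limiter == "max":
        limit = max(r, b)       # gentlest: green may not exceed the larger of red/blue
    elif limiter == "min":
        limit = min(r, b)       # strongest: green may not exceed the smaller of red/blue
    else:
        raise ValueError(f"unknown limiter: {limiter!r}")
    spill = max(g - limit, 0.0)  # only green above the limit counts as spill
    return r, g - spill, b, spill


# A greenish edge pixel; values chosen so the arithmetic is exact in binary floats.
for mode in ("average", "max", "min"):
    print(mode, despill_green(0.5, 0.875, 0.25, mode))
```

Pixels whose green is already at or below the limit pass through unchanged. A blue-screen version swaps the roles of the green and blue channels, and a common refinement (not shown here) adds the returned spill back as neutral brightness so despilled edges do not darken.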

-

Genex – Generative World Explorer

https://generative-world-explorer.github.io

Planning with partial observation is a central challenge in embodied AI. Most prior works have tackled this challenge by developing agents that physically explore their environment to update their beliefs about the world state. However, humans can imagine unseen parts of the world through mental exploration and revise their beliefs with imagined observations. Such updated beliefs can allow them to make more informed decisions at the current step, without having to physically explore the world first. To achieve this human-like ability, we introduce the Generative World Explorer (Genex), a video generation model that allows an agent to mentally explore a large-scale 3D world (e.g., urban scenes) and acquire imagined observations to update its belief about the world.

-

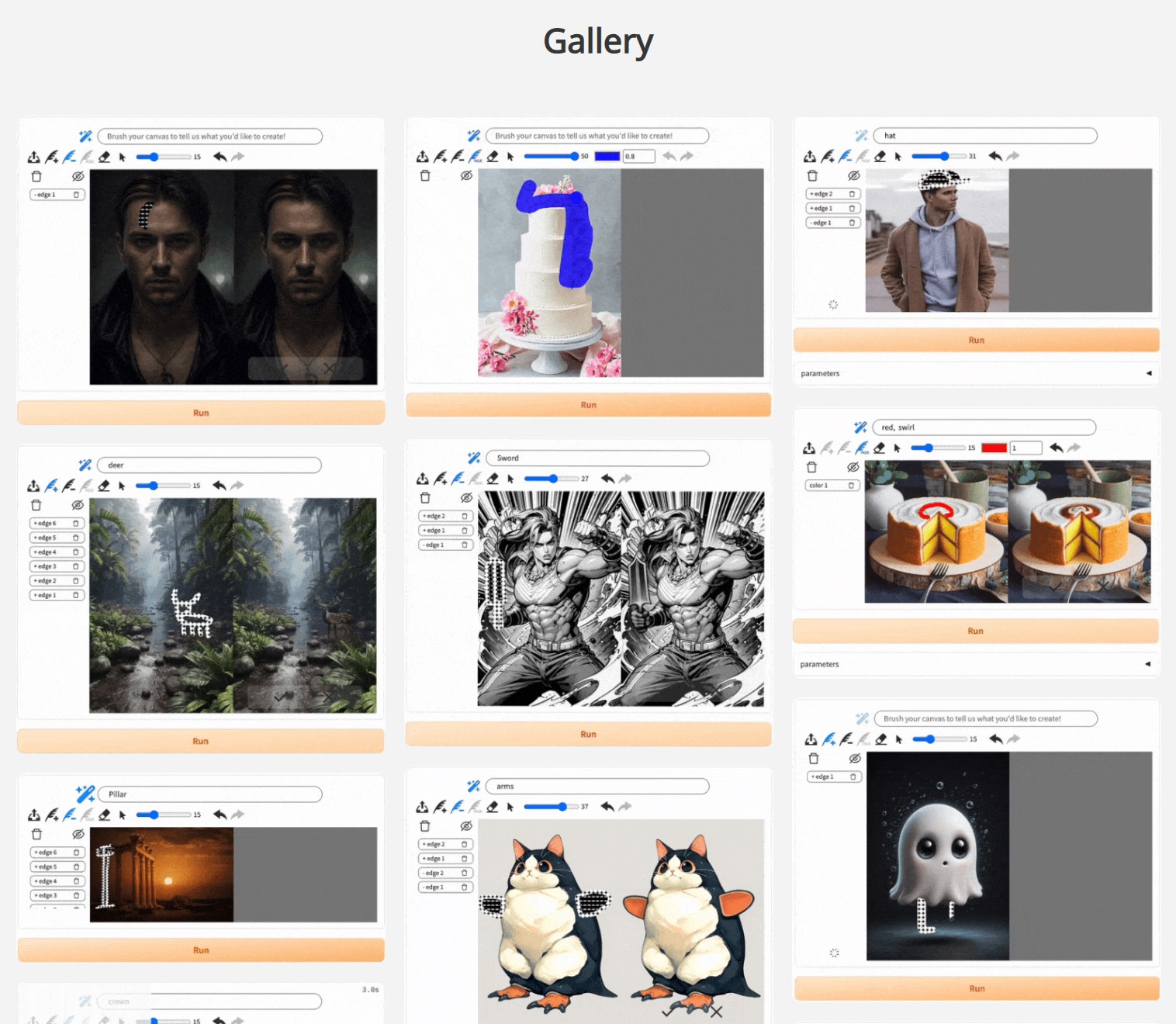

KeenTools 2024.3 – FaceTracker for Blender Stable

FaceTracker for Blender is:

– Markerless facial mocap: capture facial performance and head motion with matching geometry

– Custom face mesh generation: create digital doubles using snapshots of video frames (available with FaceBundle)

– 3D texture mapping: beauty work, (de)ageing, relighting

– 3D compositing: add digital make-up, dynamic VFX, hair and more

– (NEW) Animation retargeting: convert facial animation to ARKit blendshapes or a Rigify rig in one click

https://keentools.io/products/facetracker-for-blender

FEATURED POSTS

-

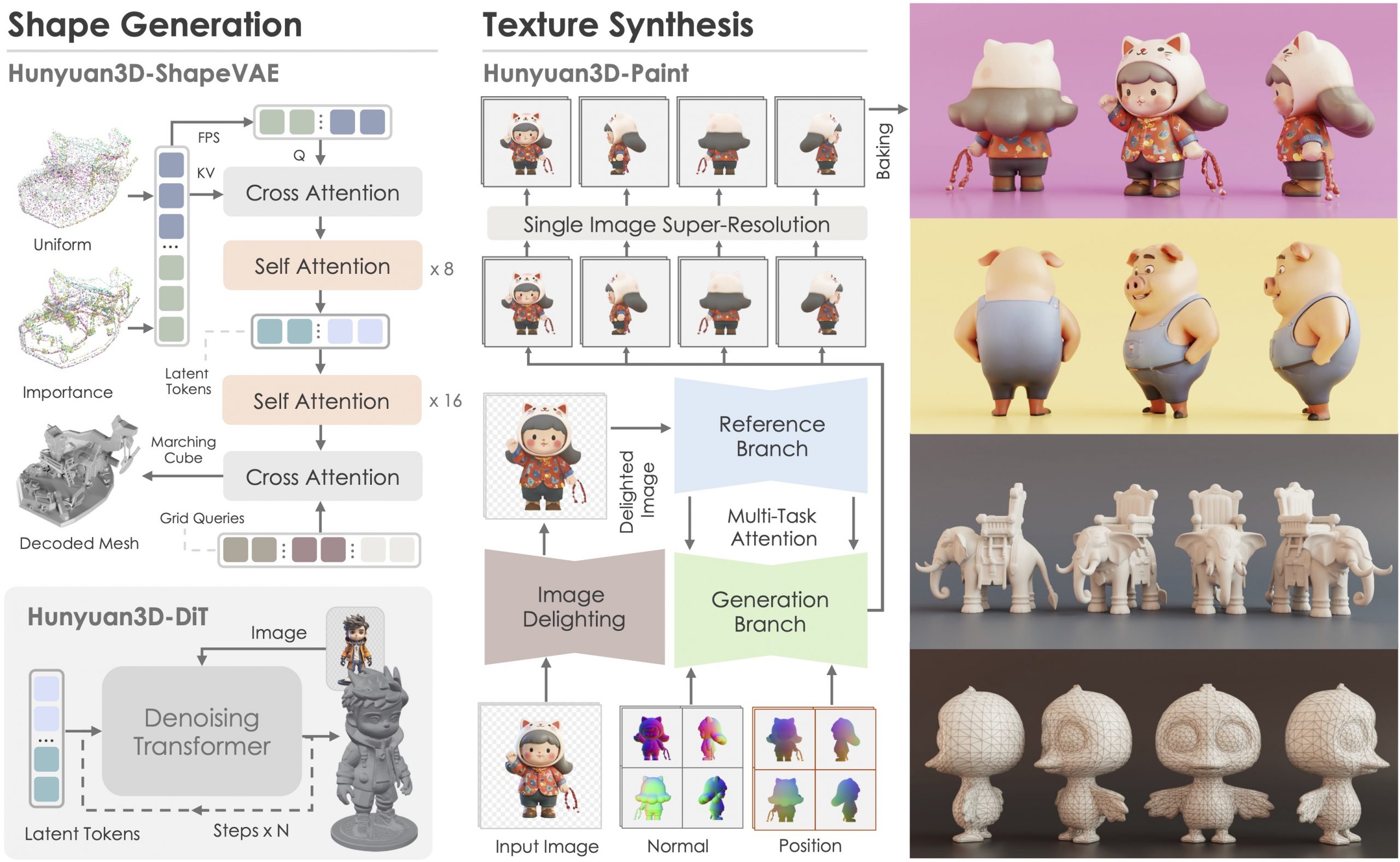

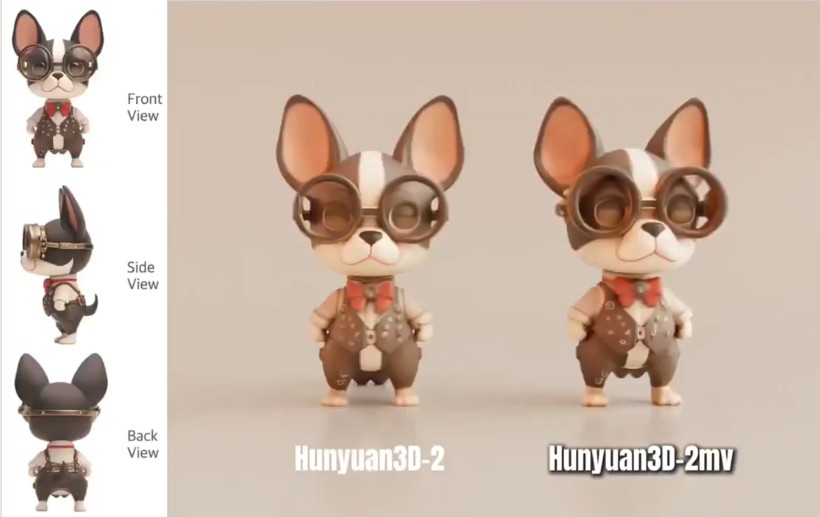

Tencent Hunyuan3D 2.1 goes Open Source and adds MV (Multi-view) and MV Mini

https://huggingface.co/tencent/Hunyuan3D-2mv

https://huggingface.co/tencent/Hunyuan3D-2mini

https://github.com/Tencent/Hunyuan3D-2

Tencent just made Hunyuan3D 2.1 open-source.

Tencent bills it as the first fully open-source, production-ready PBR 3D generative model with cinema-grade quality.

https://github.com/Tencent-Hunyuan/Hunyuan3D-2.1

What makes it special?

• Advanced PBR material synthesis produces realistic materials such as leather and bronze that respond convincingly to lighting.

• Complete access to model weights, training/inference code, data pipelines.

• Optimized to run on accessible hardware.

• Built for real-world applications with professional-grade output quality.

They’re making it accessible to everyone:

• Complete open-source ecosystem with full documentation.

• Ready-to-use model weights and training infrastructure.

• Live demo available for instant testing.

• Comprehensive GitHub repository with implementation details.

-

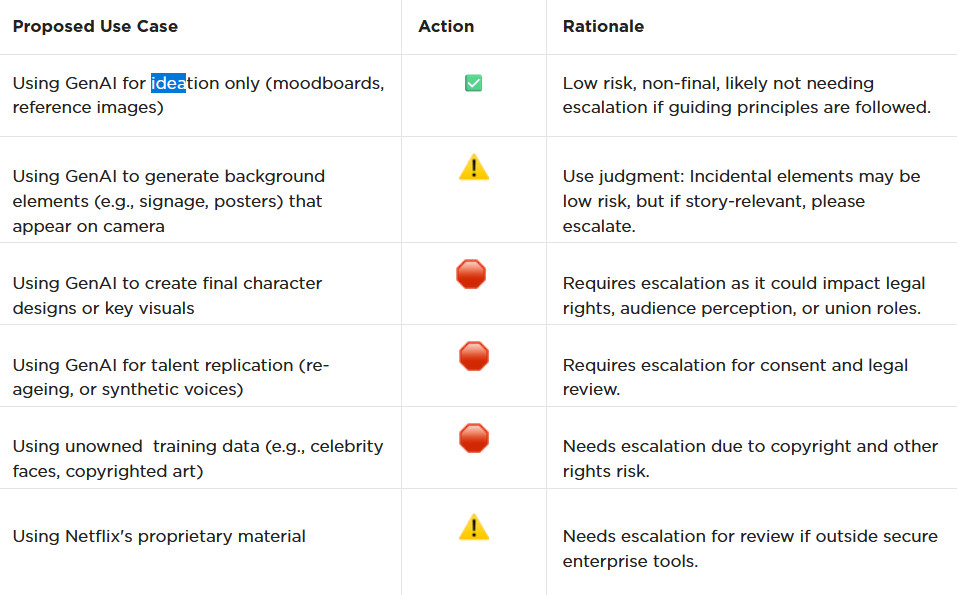

AI and the Law – Netflix: Using Generative AI in Content Production

https://www.cartoonbrew.com/business/netflix-generative-ai-use-guidelines-253300.html

- Temporary Use: AI-generated material can be used for ideation, visualization, and exploration—but is currently considered temporary and not part of final deliverables.

- Ownership & Rights: All outputs must be carefully reviewed to ensure rights, copyright, and usage are properly cleared before integrating into production.

- Transparency: Productions are expected to document and disclose how generative AI is used.

- Human Oversight: AI tools are meant to support creative teams, not replace them—final decision-making rests with human creators.

- Security & Compliance: Any use of AI tools must align with Netflix’s security protocols and protect confidential production material.

-

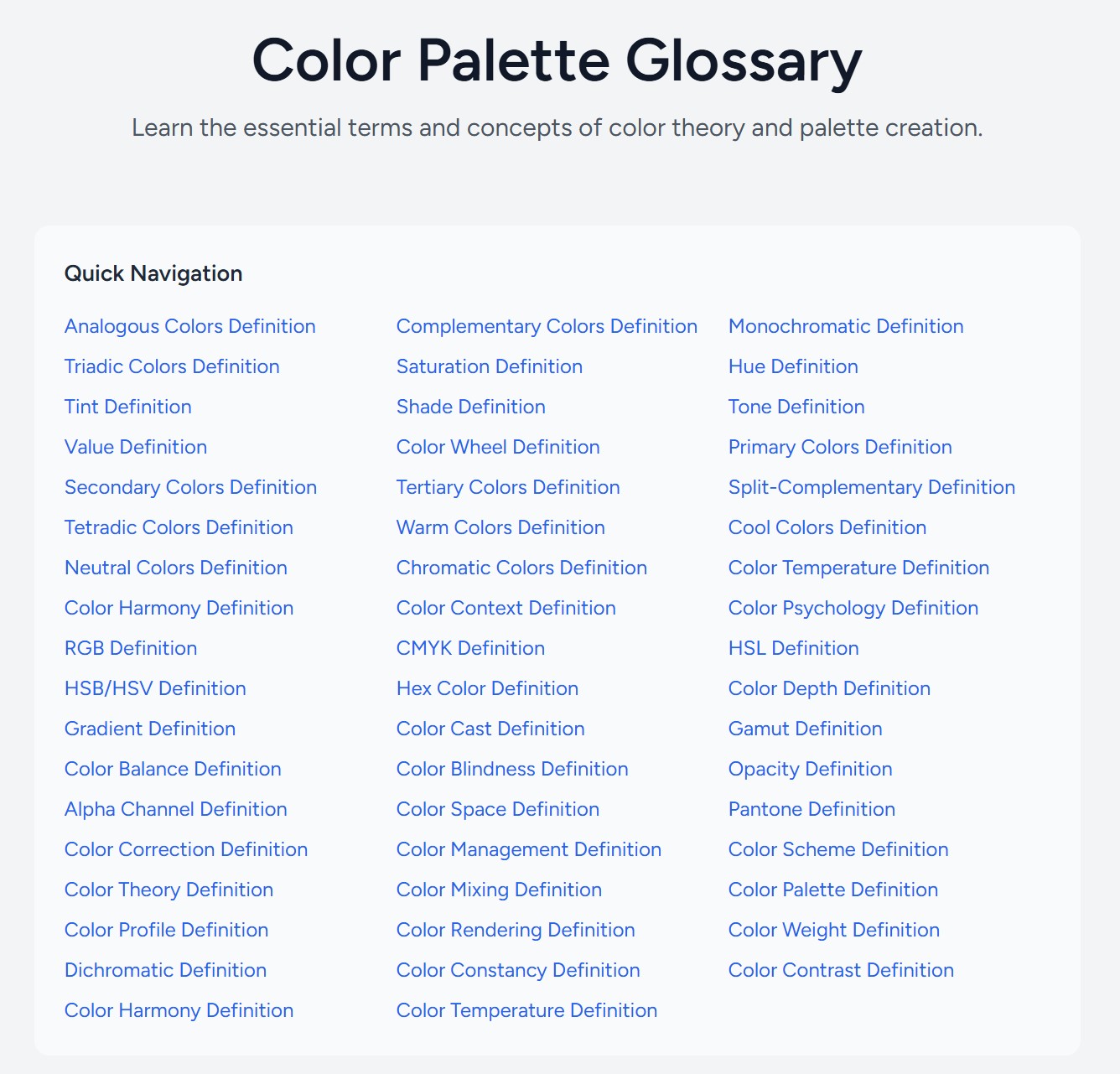

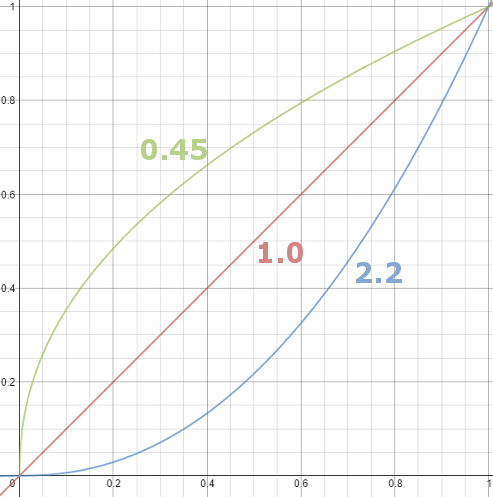

Gamma correction

http://www.normankoren.com/makingfineprints1A.html#Gammabox

https://en.wikipedia.org/wiki/Gamma_correction

http://www.photoscientia.co.uk/Gamma.htm

https://www.w3.org/Graphics/Color/sRGB.html

http://www.eizoglobal.com/library/basics/lcd_display_gamma/index.html

https://forum.reallusion.com/PrintTopic308094.aspx

In essence, gamma is the relationship between the numerical value of a pixel and its brightness as it appears on the screen. More generally, gamma is just a way of defining such (typically power-law) relationships.
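To make the value-to-brightness relationship concrete, here is a small sketch of the sRGB transfer function (the colour space covered by the W3C link above), next to the pure power law often quoted as "gamma 2.2". It assumes values normalised to [0, 1] and uses the IEC 61966-2-1 constants; the older W3C document uses slightly different breakpoints. The function names are illustrative.

```python
def srgb_encode(linear):
    """Linear-light value in [0, 1] -> sRGB-encoded value (piecewise sRGB curve)."""
    if linear <= 0.0031308:
        return 12.92 * linear                   # short linear segment near black
    return 1.055 * linear ** (1 / 2.4) - 0.055  # offset power-law segment


def srgb_decode(encoded):
    """sRGB-encoded value in [0, 1] -> linear-light value (inverse of srgb_encode)."""
    if encoded <= 0.04045:
        return encoded / 12.92
    return ((encoded + 0.055) / 1.055) ** 2.4


def power_encode(linear, gamma=2.2):
    """Pure power-law approximation: encoded = linear ** (1 / gamma)."""
    return linear ** (1 / gamma)


for v in (0.0, 0.01, 0.18, 0.5, 1.0):
    print(f"linear {v:4.2f} -> sRGB {srgb_encode(v):.4f}  pure 2.2 {power_encode(v):.4f}")
```

Both curves lift dark and mid values (18% grey encodes to roughly 0.46), which is why stored pixel values are not proportional to light; the sRGB linear toe mainly avoids the infinite slope of a pure power law at black.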

Three main types:

– Image gamma: encoded in the image data

– Display gamma: applied by the display hardware and/or at viewing time

– System (or viewing) gamma: the net effect of all gammas in the chain when you look at the final image. In theory, this should flatten back to a gamma of 1.0 (a linear response).
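Because each stage is a power law, the exponents multiply: (L^a)^b = L^(a·b). A minimal sketch, assuming an image encoded with gamma 1/2.2 and simple power-law displays (real displays and viewing conditions are messier), shows why a matched chain flattens back to 1.0 and what a mismatch does. The helper names are hypothetical.

```python
import math


def system_gamma(*stage_gammas):
    # Chained power laws combine by multiplying exponents: (L ** a) ** b == L ** (a * b)
    return math.prod(stage_gammas)


def view(linear, image_gamma=1 / 2.2, display_gamma=2.2):
    encoded = linear ** image_gamma       # image gamma: applied when the file is encoded
    displayed = encoded ** display_gamma  # display gamma: applied by the monitor
    return displayed


matched = system_gamma(1 / 2.2, 2.2)
print(f"2.2 display: system gamma {matched:.3f}, 0.18 -> {view(0.18):.3f}")

mismatched = system_gamma(1 / 2.2, 2.4)
print(f"2.4 display: system gamma {mismatched:.3f}, 0.18 -> {view(0.18, display_gamma=2.4):.3f}")
```

With matching image and display gammas, 18% grey comes back as 18% grey; showing the same file on a gamma 2.4 display gives a system gamma above 1.0, which darkens the midtones.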
