LATEST POSTS

-

Bella – Fast Spectral Rendering

Bella works in spectral space, allowing effects such as BSDF wavelength dependency, diffraction, or atmosphere to be modeled far more accurately than in color space.

https://superrendersfarm.com/blog/uncategorized/bella-a-new-spectral-physically-based-renderer/
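Here is one concrete case of "BSDF wavelength dependency": dispersion in glass. In this minimal sketch, which is not Bella's code, the index of refraction is evaluated per wavelength with Cauchy's empirical formula n(λ) = A + B/λ². The BK7 coefficients are commonly quoted values and are assumed here for illustration. The resulting index then goes into Snell's law and the normal-incidence Fresnel term. A spectral renderer can evaluate this at any sampled wavelength, whereas a renderer working in RGB has only three channels with which to approximate the curve.

```python
import math

# Assumed Cauchy coefficients for BK7 crown glass; B is in micrometres squared.
CAUCHY_A = 1.5046
CAUCHY_B = 0.00420


def ior(wavelength_nm: float) -> float:
    """Index of refraction from Cauchy's two-term formula n = A + B / lambda^2."""
    lam_um = wavelength_nm / 1000.0  # convert nm to micrometres to match B
    return CAUCHY_A + CAUCHY_B / lam_um ** 2


def refraction_angle_deg(incidence_deg: float, n: float) -> float:
    """Snell's law for light entering the glass from air (n_air taken as 1)."""
    return math.degrees(math.asin(math.sin(math.radians(incidence_deg)) / n))


def fresnel_normal(n: float) -> float:
    """Fresnel reflectance at normal incidence, ((n - 1) / (n + 1))^2."""
    return ((n - 1.0) / (n + 1.0)) ** 2


if __name__ == "__main__":
    # Sample a few visible wavelengths, as a spectral renderer might along a path.
    for wl in (400, 550, 700):
        n = ior(wl)
        print(f"{wl} nm: n={n:.4f}, "
              f"refracted at 45 deg -> {refraction_angle_deg(45, n):.3f} deg, "
              f"R0={fresnel_normal(n):.5f}")
```

Blue light gets a higher index than red light, so it bends more. Splitting white light into a rainbow therefore falls out of the spectral approach directly.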

-

Scans Factory – Unreal 5.4 demo – Rome Walkthrough

-

PlanCraft – An assumptions based project schedule generator

https://www.hasielhassan.com/PlanCraft/#about

It helps you create an Open Schedule Format (OSF) JSON file for your projects.

-

Elon Musk finally admits Tesla’s HW3 might not support full self-driving

When asked about Tesla achieving its promised unsupervised self-driving on HW3 vehicles, the CEO said:

We are not 100% sure. HW4 has several times the capability of HW3. It’s easier to get things to work on HW4 and it takes a lot of efforts to squeeze that into HW3. There is some chance that HW3 does not achieve the safety level that allows for unsupervised FSD.

-

NoPoSplat – Surprisingly Simple 3D Gaussian Splats from Sparse Unposed Images

A feed-forward model capable of reconstructing 3D scenes parameterized by 3D Gaussians from unposed sparse multi-view images.

-

Apple reaches deal to acquire Pixelmator

https://9to5mac.com/2024/11/01/apple-reaches-deal-to-acquire-pixelmator

Pixelmator has signed an agreement to be acquired by Apple, subject to regulatory approval. There will be no material changes to the Pixelmator Pro, Pixelmator for iOS, and Photomator apps at this time.

https://www.pixelmator.com/pro/

-

Linus Torvalds on GenAI

Linus Torvalds, the creator and maintainer of the Linux kernel, talks about modern developments.

-

Niantic SPZ – open source, compressed Gaussian splat file format

https://scaniverse.com/news/spz-gaussian-splat-open-source-file-format

https://github.com/nianticlabs/spz

• Slashes file sizes by 90% (250 MB → 25 MB) with virtually zero quality loss

• Lightning-fast uploads/downloads, especially on mobile

• Dramatically reduced memory footprint

• Enables real-time processing right on your phone

Tech breakthrough:

• Smart compression of position, rotation, color & scale data

• Column-based organization for maximum efficiency

• Innovative fixed-point quantization & log encoding

https://www.8thwall.com/products/niantic-studio
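The fixed-point quantization, log encoding and column-based organization listed above can be illustrated with a small sketch. It is not the SPZ format. The 24-bit position width, the 12 fractional bits, the log-scale range and all function names are hypothetical choices made for illustration. The real layout is in the nianticlabs/spz repository.

```python
import math

# Hypothetical parameters, not taken from the SPZ specification.
FRACTIONAL_BITS = 12           # positions: signed 24-bit fixed point, 12 fractional bits
POS_MIN, POS_MAX = -(1 << 23), (1 << 23) - 1
LOG_MIN, LOG_MAX = -10.0, 6.0  # range of ln(scale) mapped onto one byte


def quantize_position(x: float) -> int:
    """Round a coordinate to the nearest 1/4096 and clamp to the signed 24-bit range."""
    q = round(x * (1 << FRACTIONAL_BITS))
    return max(POS_MIN, min(POS_MAX, q))


def dequantize_position(q: int) -> float:
    return q / (1 << FRACTIONAL_BITS)


def encode_log_scale(s: float) -> int:
    """Store ln(s) in 8 bits, so precision is relative rather than absolute."""
    t = (math.log(s) - LOG_MIN) / (LOG_MAX - LOG_MIN)
    return max(0, min(255, round(t * 255)))


def decode_log_scale(b: int) -> float:
    return math.exp(LOG_MIN + b / 255 * (LOG_MAX - LOG_MIN))


def pack_columns(splats):
    """Column layout: every position first, then every scale, instead of per-splat records."""
    positions, scales = bytearray(), bytearray()
    for xyz, sxyz in splats:
        for c in xyz:
            # Two's-complement 24-bit value written as 3 little-endian bytes.
            positions += (quantize_position(c) & 0xFFFFFF).to_bytes(3, "little")
        scales += bytes(encode_log_scale(s) for s in sxyz)
    return bytes(positions), bytes(scales)


def unpack_positions(buf: bytes):
    """Inverse of the position column: sign-extend each 3-byte value."""
    out = []
    for i in range(0, len(buf), 3):
        q = int.from_bytes(buf[i:i + 3], "little")
        if q >= 1 << 23:
            q -= 1 << 24
        out.append(dequantize_position(q))
    return out


if __name__ == "__main__":
    splats = [((0.5, -1.25, 3.1), (0.02, 0.05, 0.01)),
              ((-2.0, 0.0, 7.75), (0.3, 0.3, 0.1))]
    pos_col, scale_col = pack_columns(splats)
    # 12 bytes per splat here versus 24 bytes for six float32 values.
    print(len(pos_col) + len(scale_col), "bytes for", len(splats), "splats")
    print(unpack_positions(pos_col))
    print([round(decode_log_scale(b), 4) for b in scale_col])
```

The log encoding spends its 256 levels evenly across orders of magnitude. As a result, a tiny splat and a large one both keep roughly the same relative precision, a few percent with these assumed parameters.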

FEATURED POSTS

-

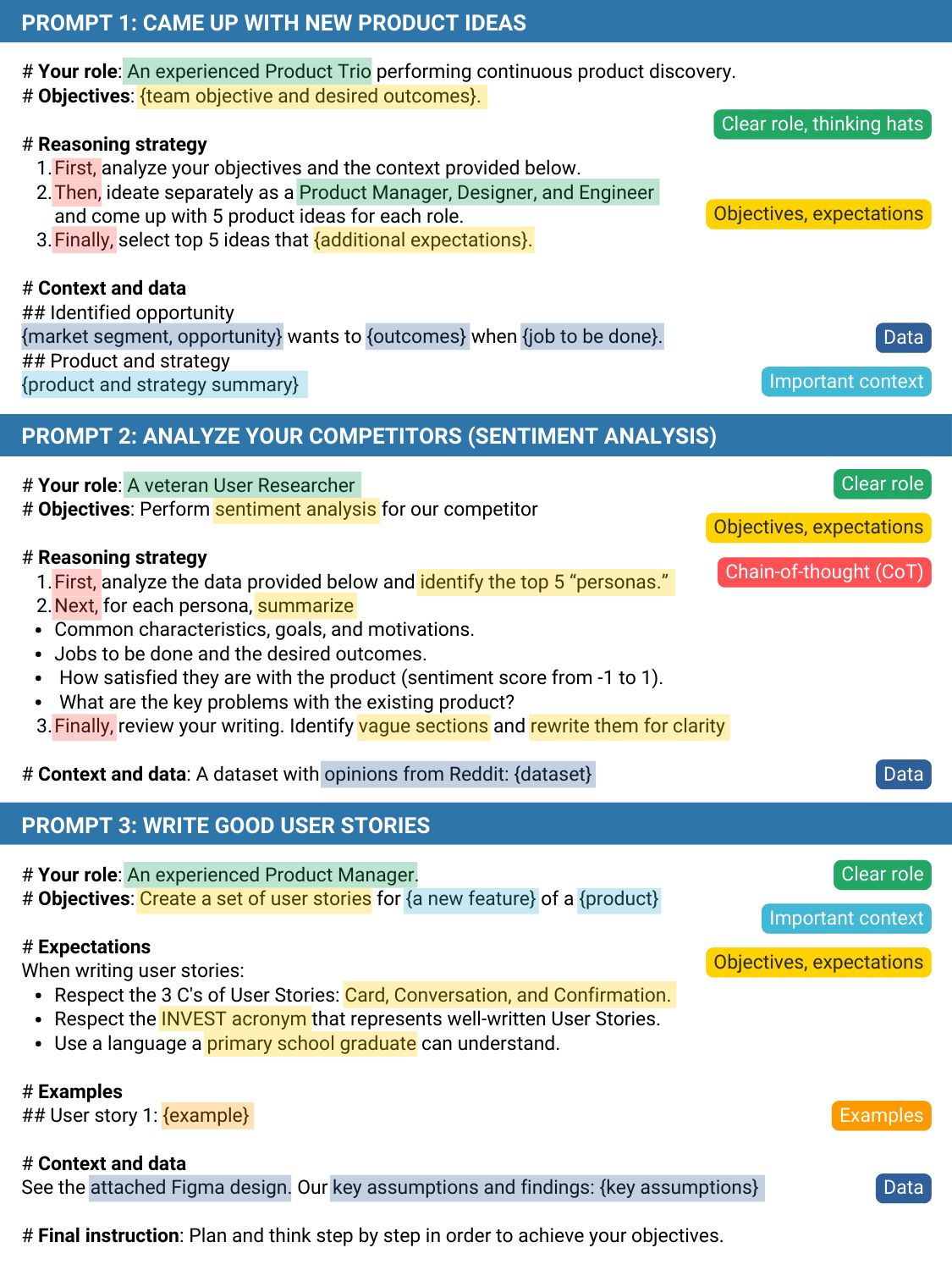

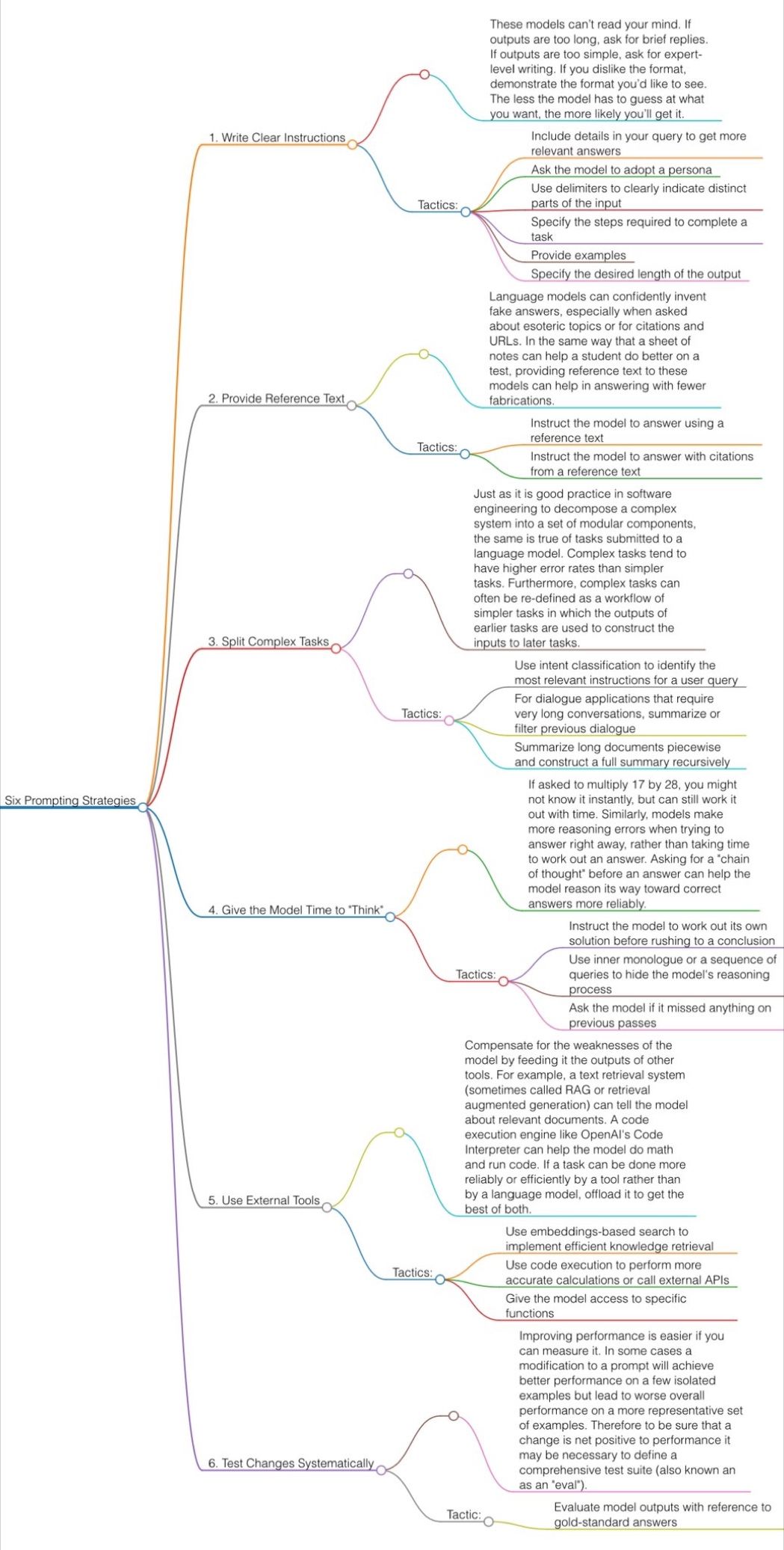

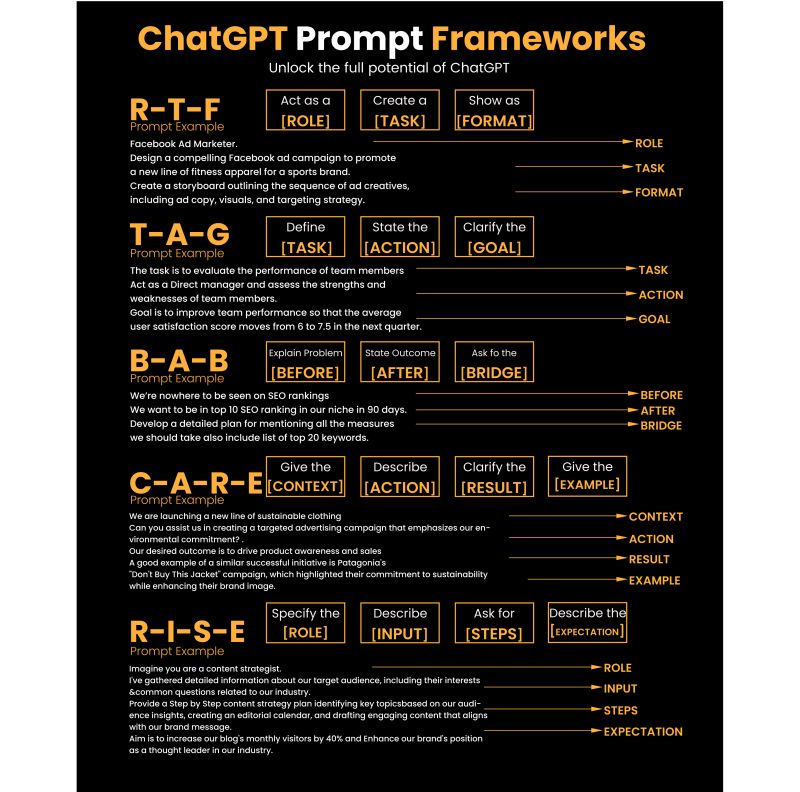

Guide to Prompt Engineering

The 10 most powerful techniques:

1. Communicate the Why

2. Explain the context (strategy, data)

3. Clearly state your objectives

4. Specify the key results (desired outcomes)

5. Provide an example or template

6. Define roles and use the thinking hats

7. Set constraints and limitations

8. Provide step-by-step instructions (CoT)

9. Ask to reverse-engineer the result to get a prompt

10. Use markdown or XML to clearly separate sections (e.g., examples)
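Several of these techniques (the why, the context, the objective, an example, constraints, and XML separation) combine naturally into one structured prompt. Each section is wrapped in its own XML-style tag so the model can tell instructions apart from examples. The sketch below is minimal and assumes nothing about any particular model API. The build_prompt helper and the tag names are hypothetical, and the bracketed placeholders follow the [insert …] style used further down this post.

```python
from xml.sax.saxutils import escape


def build_prompt(sections: dict) -> str:
    """Wrap each non-empty section in <tag>...</tag>, in insertion order.

    Angle brackets and ampersands inside the text are escaped so that
    user content cannot be mistaken for a section boundary.
    """
    parts = []
    for tag, text in sections.items():
        if text:
            parts.append(f"<{tag}>\n{escape(text.strip())}\n</{tag}>")
    return "\n\n".join(parts)


if __name__ == "__main__":
    prompt = build_prompt({
        "why": "[insert the reason this task matters]",
        "context": "[insert strategy and data]",
        "objective": "[insert the desired outcome]",
        "constraints": "[insert limits such as length or tone]",
        "example": "[insert an example or template]",
    })
    print(prompt)
```

Empty sections are skipped, so the same helper works whether or not an example or constraints are supplied.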

Top 10 high-ROI use cases for PMs:

1. Get new product ideas

2. Identify hidden assumptions

3. Plan the right experiments

4. Summarize a customer interview

5. Summarize a meeting

6. Social listening (sentiment analysis)

7. Write user stories

8. Generate SQL queries for data analysis

9. Get help with PRD and other templates

10. Analyze your competitors

Quick prompting scheme:

1- Pass an image to JoyCaption

https://www.pixelsham.com/2024/12/23/joy-caption-alpha-two-free-automatic-caption-of-images/

2- Tune the caption with ChatGPT, as suggested by Pixaroma:

Craft detailed prompts for AI (image/video) generation, avoiding quotation marks. When I provide a description or image, translate it into a prompt that captures a cinematic, movie-like quality, focusing on elements like scene, style, mood, lighting, and specific visual details. Ensure that the prompt evokes a rich, immersive atmosphere, emphasizing textures, depth, and realism. Always incorporate (static/slow) camera or cinematic movement to enhance the feeling of fluidity and visual storytelling. Keep the wording precise yet descriptive, directly usable, and designed to achieve a high-quality, film-inspired result.

https://www.reddit.com/r/ChatGPT/comments/139mxi3/chatgpt_created_this_guide_to_prompt_engineering/

1. Use the 80/20 principle to learn faster

Prompt: “I want to learn about [insert topic]. Identify and share the most important 20% of learnings from this topic that will help me understand 80% of it.”

2. Learn and develop any new skill

Prompt: “I want to learn/get better at [insert desired skill]. I am a complete beginner. Create a 30-day learning plan that will help a beginner like me learn and improve this skill.”

3. Summarize long documents and articles

Prompt: “Summarize the text below and give me a list of bullet points with key insights and the most important facts.” [Insert text]

4. Train ChatGPT to generate prompts for you

Prompt: “You are an AI designed to help [insert profession]. Generate a list of the 10 best prompts for yourself. The prompts should be about [insert topic].”

5. Master any new skill

Prompt: “I have 3 free days a week and 2 months. Design a crash study plan to master [insert desired skill].”

6. Simplify complex information

Prompt: “Break down [insert topic] into smaller, easier-to-understand parts. Use analogies and real-life examples to simplify the concept and make it more relatable.”

More suggestions under the post…


-

Björn Ottosson – How software gets color wrong

https://bottosson.github.io/posts/colorwrong/

Most software around us today is decent at accurately displaying colors. Processing of colors is another story, unfortunately, and is often done badly.

To understand what the problem is, let’s start with an example of three ways of blending green and magenta:

- Perceptual blend – A smooth transition using a model designed to mimic human perception of color. The blending is done so that the perceived brightness and color vary smoothly and evenly.

- Linear blend – A model for blending color based on how light behaves physically. This type of blending occurs naturally in many ways, for example when colors are blended together by focus blur in a camera or when viewing a pattern of two colors at a distance.

- sRGB blend – This is how colors would normally be blended in computer software, using sRGB to represent the colors.
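The difference between the sRGB blend and the linear blend comes down to where the interpolation happens. This minimal sketch uses the standard sRGB transfer function (IEC 61966-2-1). The sRGB blend interpolates the encoded values directly. The linear blend decodes them to linear light, interpolates, and then re-encodes. The function names are illustrative.

```python
def srgb_to_linear(c: float) -> float:
    """Decode one sRGB channel in [0, 1] to linear light."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c: float) -> float:
    """Encode one linear-light channel (clamped to [0, 1]) back to sRGB."""
    c = min(max(c, 0.0), 1.0)
    return 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055


def lerp(a: float, b: float, t: float) -> float:
    return a + (b - a) * t


def srgb_blend(c1, c2, t):
    """What most software does: interpolate the encoded sRGB values."""
    return tuple(lerp(a, b, t) for a, b in zip(c1, c2))


def linear_blend(c1, c2, t):
    """Physically motivated: interpolate in linear light, then re-encode."""
    return tuple(linear_to_srgb(lerp(srgb_to_linear(a), srgb_to_linear(b), t))
                 for a, b in zip(c1, c2))


if __name__ == "__main__":
    green, magenta = (0.0, 1.0, 0.0), (1.0, 0.0, 1.0)
    for t in (0.0, 0.25, 0.5, 0.75, 1.0):
        s = srgb_blend(green, magenta, t)
        lin = linear_blend(green, magenta, t)
        print(t, [round(x, 3) for x in s], [round(x, 3) for x in lin])
```

For green and magenta at t = 0.5, the sRGB blend gives 0.5 in every channel, while the linear blend gives roughly 0.735. The sRGB midpoint is therefore noticeably darker than the physically based one.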

Let’s look at some more examples of color blending to see how these problems surface in practice. The examples use strong colors, since that makes the differences more pronounced, and they use the same three blending methods as the first example.

Instead of making it as easy as possible to work with color, most software makes it unnecessarily hard by doing image processing with representations not designed for it. Approximating the physical behavior of light with linear RGB models is one easy thing to do, but more work is needed to create image representations tailored to image processing and human perception.
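The perceptual blend is assumed here to use Oklab, a perceptual color space Ottosson published separately, and its matrices are those from his Oklab write-up. This minimal sketch decodes sRGB to linear light, converts to Oklab, interpolates L, a and b, and converts back. Out-of-gamut results are simply clamped, which is a simplification rather than proper gamut mapping.

```python
import math


def srgb_to_linear(c):
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c):
    c = min(max(c, 0.0), 1.0)  # crude gamut clamp
    return 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055


def cbrt(x):
    """Sign-preserving cube root."""
    return math.copysign(abs(x) ** (1 / 3), x)


def linear_srgb_to_oklab(r, g, b):
    # Linear sRGB -> approximate cone responses (LMS).
    l = 0.4122214708 * r + 0.5363325363 * g + 0.0514459929 * b
    m = 0.2119034982 * r + 0.6806995451 * g + 0.1073969566 * b
    s = 0.0883024619 * r + 0.2817188376 * g + 0.6299787005 * b
    l_, m_, s_ = cbrt(l), cbrt(m), cbrt(s)
    # Nonlinear LMS -> lightness L and opponent axes a, b.
    return (0.2104542553 * l_ + 0.7936177850 * m_ - 0.0040720468 * s_,
            1.9779984951 * l_ - 2.4285922050 * m_ + 0.4505937099 * s_,
            0.0259040371 * l_ + 0.7827717662 * m_ - 0.8086757660 * s_)


def oklab_to_linear_srgb(L, a, b):
    l_ = L + 0.3963377774 * a + 0.2158037573 * b
    m_ = L - 0.1055613458 * a - 0.0638541728 * b
    s_ = L - 0.0894841775 * a - 1.2914855480 * b
    l, m, s = l_ ** 3, m_ ** 3, s_ ** 3
    return (4.0767416621 * l - 3.3077115913 * m + 0.2309699292 * s,
            -1.2684380046 * l + 2.6097574011 * m - 0.3413193965 * s,
            -0.0041960863 * l - 0.7034186147 * m + 1.7076147010 * s)


def perceptual_blend(c1, c2, t):
    """Interpolate two sRGB colors in Oklab and return an sRGB color."""
    lab1 = linear_srgb_to_oklab(*(srgb_to_linear(c) for c in c1))
    lab2 = linear_srgb_to_oklab(*(srgb_to_linear(c) for c in c2))
    lab = tuple(x + (y - x) * t for x, y in zip(lab1, lab2))
    return tuple(linear_to_srgb(c) for c in oklab_to_linear_srgb(*lab))


if __name__ == "__main__":
    green, magenta = (0.0, 1.0, 0.0), (1.0, 0.0, 1.0)
    for t in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(t, [round(x, 3) for x in perceptual_blend(green, magenta, t)])
```

Interpolation happens on L, a and b directly, so lightness changes linearly in this perceptual space between the two endpoints.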

Also see: