LATEST POSTS

-

DepthCrafter – Generating Consistent Long Depth Sequences for Open-world Videos

https://depthcrafter.github.io/

We innovate DepthCrafter, a novel video depth estimation approach, by leveraging video diffusion models. It can generate temporally consistent long depth sequences with fine-grained details for open-world videos, without requiring additional information such as camera poses or optical flow.

-

ByteDance Loopy: Taming Audio-Driven Portrait Avatar with Long-Term Motion Dependency

https://loopyavatar.github.io/

Loopy supports various visual and audio styles. It can generate vivid motion details from audio alone, such as non-speech movements like sighing, emotion-driven eyebrow and eye movements, and natural head movements.

-

Ross Pettit on The Agile Manager – How tech firms came to prioritize cash flow over talent (and artists)

For years, tech firms were fighting a war for talent. Now they are waging war on talent.

This shift has led to a weakening of the social contract between employees and employers, with culture and employee values being sidelined in favor of financial discipline and free cash flow.

The operating environment has changed from a high tolerance for failure (where cheap capital and willing spenders accepted slipped dates and feature lag) to a very low – if not zero – tolerance for failure (fiscal discipline is in vogue again).

While preventing and containing mistakes staves off shocks to the income statement, it doesn’t fundamentally reduce costs. Years of payroll bloat – aggressive hiring, aggressive comp packages to attract and retain people – make labor the biggest cost in tech.

…Of course, companies can reduce their labor force through natural attrition. Other labor policy changes – return to office mandates, contraction of fringe benefits, reduction of job promotions, suspension of bonuses and comp freezes – encourage more people to exit voluntarily. It’s cheaper to let somebody self-select out than it is to lay them off.

…Employees recruited in more recent years from outside the ranks of tech were given the expectation that we’ll teach you what you need to know, we want you to join because we value what you bring to the table. That is no longer applicable. Runway for individual growth is very short in zero-tolerance-for-failure operating conditions. Job preservation, at least in the short term for this cohort, comes from completing corporate training and acquiring professional certifications. Training through community or experience is not in the cards.

…The ability to perform competently in multiple roles, the extra-curriculars, the self-directed enrichment, the ex-company leadership – all these things make no matter. The calculus is what you got paid versus how you performed on objective criteria relative to your cohort. Nothing more.

…Here is where the change in the social contract is perhaps the most blatant. In the “destination employer” years, the employee invested in the community and its values, and the employer rewarded the loyalty of its employees through things like runway for growth (stretch roles and sponsored work innovation) and tolerance for error (valuing demonstrable learning over perfection in execution). No longer.

http://www.rosspettit.com/2024/08/for-years-tech-was-fighting-war-for.html

-

2DGS – 2D Gaussian Splatting for Geometrically Accurate Radiance Fields

A 2D Gaussian Splats technique for extracting cleaner 3D geometry from 3DGS

https://github.com/hbb1/2d-gaussian-splatting

https://surfsplatting.github.io/

https://colab.research.google.com/drive/1qoclD7HJ3-o0O1R8cvV3PxLhoDCMsH8W

3D Gaussian Splatting (3DGS) has recently revolutionized radiance field reconstruction, achieving high quality novel view synthesis and fast rendering speed without baking. However, 3DGS fails to accurately represent surfaces due to the multi-view inconsistent nature of 3D Gaussians. We present 2D Gaussian Splatting (2DGS), a novel approach to model and reconstruct geometrically accurate radiance fields from multi-view images. Our key idea is to collapse the 3D volume into a set of 2D oriented planar Gaussian disks. Unlike 3D Gaussians, 2D Gaussians provide view-consistent geometry while modeling surfaces intrinsically. To accurately recover thin surfaces and achieve stable optimization, we introduce a perspective-accurate 2D splatting process utilizing ray-splat intersection and rasterization. Additionally, we incorporate depth distortion and normal consistency terms to further enhance the quality of the reconstructions. We demonstrate that our differentiable renderer allows for noise-free and detailed geometry reconstruction while maintaining competitive appearance quality, fast training speed, and real-time rendering.

-

Kiosk – Library Tool for 3D Artists

Kiosk streamlines resource management with tailored filtering, customizable organization, and seamless integration into Maya, Houdini, Blender and Cinema4D. Maintain one library for them all!

https://fabianstrube.gumroad.com/l/kiosk-library

-

USD cookbook examples and python stubs

This repository is a collection of simple USD projects. Each project shows off a single feature or group of USD features.

https://github.com/ColinKennedy/USD-Cookbook

These stubs are designed to be used with a type checker like mypy to provide static type checking of Python code, as well as to provide analysis and completion in IDEs like PyCharm and VSCode (with Pylance).

-

Top 3D Printing Website Resources

The Holy Grail – https://github.com/ad-si/awesome-3d-printing

- Thingiverse – https://www.thingiverse.com/

- Makerworld – https://makerworld.com/

- Printables – https://www.printables.com/

- Cults – https://cults3d.com/

- CG Trader – https://www.cgtrader.com/3d-print-models

- Sketchfab – https://sketchfab.com/store/3d-models/stl

- 3D Export – https://3dexport.com/

- MyMiniFactory – https://www.myminifactory.com/

- Thangs – https://thangs.com/

- Yeggi – https://www.yeggi.com/

- FAB365 – https://fab365.net/

- Gambody – https://www.gambody.com/

- All3DP News – https://all3dp.com/

- TCT Magazine – https://www.tctmagazine.com/topics/3D-printing-news/

- 3DPrint.com – https://3dprint.com/

- NASA 3D Models – https://nasa3d.arc.nasa.gov/models/printable

FEATURED POSTS

-

Photography basics: Solid Angle measures

http://www.calculator.org/property.aspx?name=solid+angle

A solid angle is a measure of how large an object appears to an observer looking from a given point, which makes it a natural measure for objects in the sky. It is useful for returning the apparent size of the Sun and the Moon and, in perspective, how much they contribute to lighting. Solid angle can be represented as an ‘angular diameter’ as well.

http://en.wikipedia.org/wiki/Solid_angle

http://www.mathsisfun.com/geometry/steradian.html

A solid angle is expressed in a dimensionless unit called a steradian (symbol: sr). In terms of the total celestial sphere, and before atmospheric scattering, the Sun and the Moon subtend fractional areas of 0.000546% (Sun) and 0.000531% (Moon).

http://en.wikipedia.org/wiki/Solid_angle#Sun_and_Moon

On Earth, the Sun is likely closer to 0.00011 sr after atmospheric scattering. The Sun as perceived from Earth has an angular diameter of about 0.53 degrees, which corresponds to a solid angle of about 0.000067 sr.

http://www.numericana.com/answer/angles.htm

The mean angular diameter of the full moon is 2θ = 0.52° (it varies with time around that average, by about 0.009°). This translates into a solid angle of 0.0000647 sr, which means that the whole night sky covers a solid angle roughly one hundred thousand times greater than the full moon.
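The conversion from angular diameter to solid angle quoted above can be checked with a short sketch. It assumes the Sun and the Moon are treated as circular discs and uses the spherical-cap formula Ω = 2π(1 − cos θ), where θ is half the angular diameter; the function names are illustrative only and do not come from the linked pages.

```python
import math


def solid_angle_sr(angular_diameter_deg: float) -> float:
    """Solid angle in steradians of a disc seen under the given angular diameter."""
    half_angle = math.radians(angular_diameter_deg) / 2.0
    # Area of a spherical cap on the unit sphere with this half-angle.
    return 2.0 * math.pi * (1.0 - math.cos(half_angle))


def sky_fraction(solid_angle: float) -> float:
    """Fraction of the full celestial sphere (4*pi sr) covered by a solid angle."""
    return solid_angle / (4.0 * math.pi)


moon = solid_angle_sr(0.52)  # mean angular diameter of the full moon
sun = solid_angle_sr(0.53)   # angular diameter of the sun quoted above

print(f"Moon: {moon:.7f} sr, {sky_fraction(moon) * 100:.6f}% of the sphere")
print(f"Sun:  {sun:.7f} sr, {sky_fraction(sun) * 100:.6f}% of the sphere")
# The visible sky is a hemisphere, i.e. 2*pi sr.
print(f"Hemisphere / Moon: {2.0 * math.pi / moon:,.0f}")
```

For angles this small, the result is practically identical to the flat-disc approximation π·θ², so either form works for lighting estimates.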

More info

http://lcogt.net/spacebook/using-angles-describe-positions-and-apparent-sizes-objects

http://amazing-space.stsci.edu/glossary/def.php.s=topic_astronomy

Angular Size

The apparent size of an object as seen by an observer; expressed in units of degrees (of arc), arc minutes, or arc seconds. The moon, as viewed from the Earth, has an angular diameter of one-half a degree.

The angle covered by the diameter of the full moon is about 31 arcmin or 1/2°, so astronomers would say the Moon’s angular diameter is 31 arcmin, or the Moon subtends an angle of 31 arcmin.

-

Embedding frame ranges into Quicktime movies with FFmpeg

QuickTime (.mov) files are fundamentally time-based, not frame-based, and so don’t have a built-in, uniform “first frame/last frame” field you can set as numeric frame IDs. Instead, tools like Shotgun Create rely on the timecode track and the movie’s duration to infer frame numbers. If you want Shotgun to pick up a non-default frame range (e.g. start at 1001, end at 1064), you must bake in an SMPTE timecode that corresponds to your desired start frame, and ensure the movie’s duration matches your clip length.

How Shotgun Reads Frame Ranges

- Default start frame is 1. If no timecode metadata is present, Shotgun assumes the movie begins at frame 1.

- Timecode ⇒ frame number. Shotgun Create “honors the timecodes of media sources,” mapping the embedded TC to frame IDs. For example, a 24 fps QuickTime tagged with a start timecode of 00:00:41:17 will be interpreted as beginning on frame 1001 (1001 ÷ 24 fps ≈ 41.71 s).

Embedding a Start Timecode

QuickTime uses a tmcd (timecode) track. You can bake in an SMPTE track via FFmpeg’s -timecode flag or via Compressor/encoder settings:

- Compute your start TC.

- Desired start frame = 1001

- Frame 1001 at 24 fps ⇒ 1001 ÷ 24 ≈ 41.708 s ⇒ TC 00:00:41:17

- FFmpeg example:

    ffmpeg -i input.mov \
      -c copy \
      -timecode 00:00:41:17 \
      output.mov

This adds a timecode track beginning at 00:00:41:17, which Shotgun maps to frame 1001.
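The start timecode can also be derived programmatically instead of by hand. The sketch below assumes an integer frame rate and non-drop-frame timecode; `frame_to_timecode` and `timecode_to_frame` are hypothetical helper names, not part of FFmpeg or Shotgun.

```python
def frame_to_timecode(frame: int, fps: int = 24) -> str:
    """Convert an absolute frame number to non-drop-frame HH:MM:SS:FF."""
    if frame < 0 or fps <= 0:
        raise ValueError("frame must be >= 0 and fps must be > 0")
    total_seconds, ff = divmod(frame, fps)
    total_minutes, ss = divmod(total_seconds, 60)
    hh, mm = divmod(total_minutes, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def timecode_to_frame(timecode: str, fps: int = 24) -> int:
    """Inverse of frame_to_timecode, for checking the round trip."""
    hh, mm, ss, ff = (int(part) for part in timecode.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


print(frame_to_timecode(1001, 24))           # start frame used in this post
print(timecode_to_frame("00:00:41:17", 24))  # back to the frame number
```

Fractional rates such as 23.976 or 29.97 fps need drop-frame handling or a rational frame rate, which this sketch deliberately leaves out.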

Ensuring the Correct End Frame

Shotgun infers the last frame from the movie’s duration. To end on frame 1064:

- Frame count = 1064 – 1001 + 1 = 64 frames

- Duration = 64 ÷ 24 fps ≈ 2.667 s

FFmpeg trim example:

    ffmpeg -i input.mov \
      -c copy \
      -timecode 00:00:41:17 \
      -t 00:00:02.667 \
      output_trimmed.mov

This results in a 64-frame clip (1001→1064) at 24 fps.
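For a pipeline script, the timecode and duration arithmetic can be wrapped into one helper that assembles the same FFmpeg arguments. This is a minimal sketch under the same assumptions (integer frame rate, non-drop-frame timecode); `build_ffmpeg_args` is a hypothetical name, and the function only builds the argument list without running FFmpeg.

```python
import shlex


def build_ffmpeg_args(src: str, dst: str, first_frame: int,
                      last_frame: int, fps: int = 24) -> list:
    """Build FFmpeg arguments that stamp a start timecode and trim to a frame range."""
    if last_frame < first_frame:
        raise ValueError("last_frame must be >= first_frame")
    # Start timecode derived from the first frame number.
    total_seconds, ff = divmod(first_frame, fps)
    total_minutes, ss = divmod(total_seconds, 60)
    hh, mm = divmod(total_minutes, 60)
    timecode = f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"
    # The frame range is inclusive on both ends.
    frame_count = last_frame - first_frame + 1
    duration = frame_count / fps
    return [
        "ffmpeg", "-i", src,
        "-c", "copy",
        "-timecode", timecode,
        "-t", f"{duration:.3f}",  # duration in seconds
        dst,
    ]


args = build_ffmpeg_args("input.mov", "output_trimmed.mov", 1001, 1064, 24)
print(shlex.join(args))
```

The list can be passed to `subprocess.run(args, check=True)` once FFmpeg is on the PATH. Note that with `-c copy` the cut may only land on packet boundaries, so re-encoding may be needed for frame-exact trims.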

-

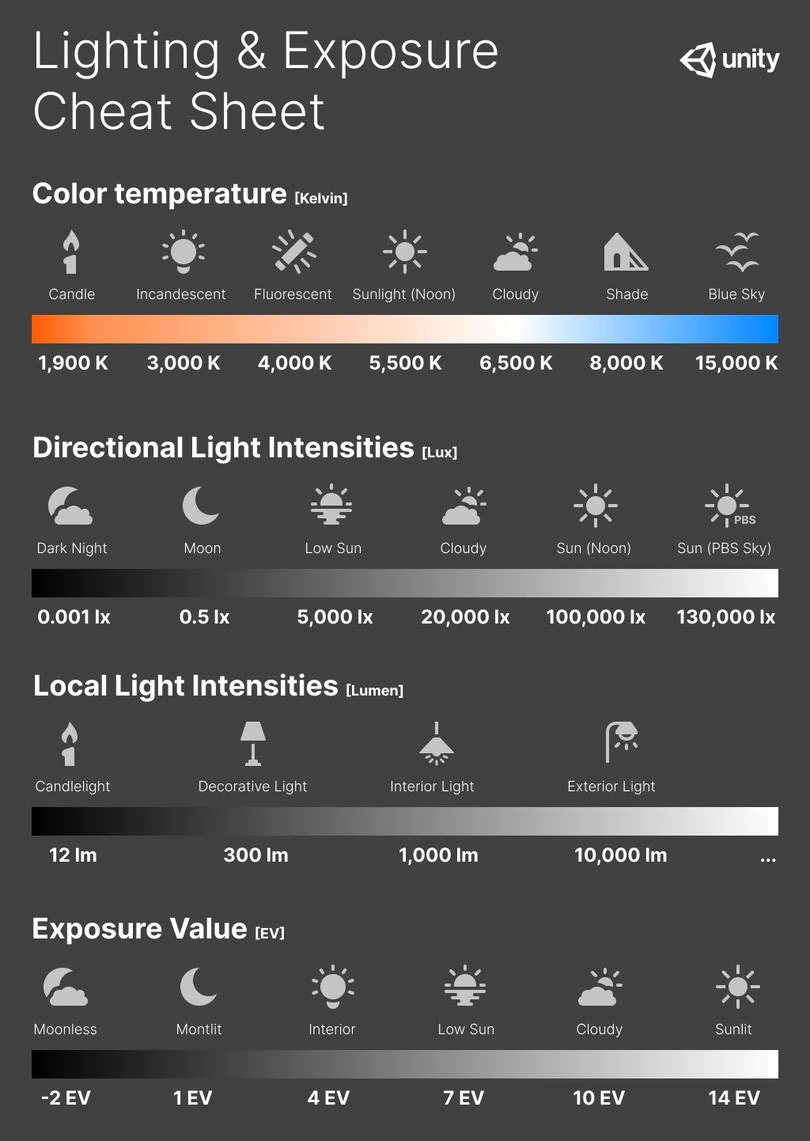

Photography basics: Exposure Value vs Photographic Exposure vs Il/Luminance vs Pixel luminance measurements

Also see: https://www.pixelsham.com/2015/05/16/how-aperture-shutter-speed-and-iso-affect-your-photos/

In photography, exposure value (EV) is a number that represents a combination of a camera’s shutter speed and f-number, such that all combinations that yield the same exposure have the same EV (for any fixed scene luminance).

The EV concept was developed in an attempt to simplify choosing among combinations of equivalent camera settings. Although all camera settings with the same EV nominally give the same exposure, they do not necessarily give the same picture. EV is also used to indicate an interval on the photographic exposure scale, with 1 EV corresponding to a standard power-of-2 exposure step, commonly referred to as a stop.

EV 0 corresponds to an exposure time of 1 sec and a relative aperture of f/1.0. If the EV is known, it can be used to select combinations of exposure time and f-number.

Note that EV is not the same as photographic exposure. Photographic exposure is defined as how much light hits the camera’s sensor; it depends on the camera settings, mainly aperture and shutter speed. Exposure value (EV) is a number that represents the exposure setting of the camera.

Thus, strictly, EV is not a measure of luminance (indirect or reflected exposure) or illuminance (incident exposure); rather, an EV corresponds to a luminance (or illuminance) for which a camera with a given ISO speed would use the indicated EV to obtain the nominally correct exposure. Nonetheless, it is common practice among photographic equipment manufacturers to express luminance in EV for ISO 100 speed, as when specifying metering range or autofocus sensitivity.

The exposure depends on two things: how much light gets through the lens to the camera’s sensor and for how long the sensor is exposed. The former is a function of the aperture value while the latter is a function of the shutter speed. Exposure value is a number that represents this potential amount of light that could hit the sensor. It is important to understand that exposure value is a measure of how exposed the sensor is to light and not a measure of how much light actually hits the sensor. The exposure value is independent of how lit the scene is. For example, a given pair of aperture value and shutter speed represents the same exposure value whether the camera is used on a very bright day or on a dark night.

Each exposure value number represents all the possible shutter and aperture settings that result in the same exposure. Although the exposure value is the same for different combinations of aperture values and shutter speeds, the resulting photo can be very different (the aperture controls the depth of field while the shutter speed controls how much motion is captured).
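To make the idea of equivalent settings concrete, the sketch below lists shutter times that keep the same EV across several apertures. It assumes the standard definition EV = log2(N² / t), with N the f-number and t the shutter time in seconds, rearranged to t = N² / 2^EV; the helper name is illustrative only.

```python
def shutter_time_for_ev(ev: float, f_number: float) -> float:
    """Shutter time in seconds that yields the given EV at the given f-number."""
    return f_number ** 2 / 2.0 ** ev


# All of these aperture/shutter pairs share EV 10.
for f_number in (2.0, 2.8, 4.0, 5.6, 8.0):
    seconds = shutter_time_for_ev(10, f_number)
    print(f"f/{f_number:g} -> 1/{1.0 / seconds:.0f} s")
```

Marked f-numbers such as 2.8 and 5.6 are rounded values, so the computed shutter times land near, not exactly on, the familiar 1/125 and 1/30 steps.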

EV 0.0 is defined as the exposure when setting the aperture to f-number 1.0 and the shutter speed to 1 second. All other exposure values are relative to that number. Exposure values are on a base-two logarithmic scale. This means that every single step of EV – plus or minus 1 – represents the exposure (actual light that hits the sensor) being halved or doubled.

Formulas
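The definition above matches the commonly used relation EV = log2(N² / t), where N is the f-number and t is the shutter time in seconds. The following is a minimal sketch of that relation and of the halving/doubling rule; the function names are hypothetical, and the ratio assumes the same scene and ISO for both settings.

```python
import math


def exposure_value(f_number: float, shutter_seconds: float) -> float:
    """EV from camera settings: log2(N^2 / t)."""
    return math.log2(f_number ** 2 / shutter_seconds)


def relative_exposure(ev_from: float, ev_to: float) -> float:
    """Factor by which light on the sensor changes when moving from ev_from to ev_to."""
    return 2.0 ** (ev_from - ev_to)


print(exposure_value(1.0, 1.0))         # reference setting: f/1.0 at 1 s
print(exposure_value(4.0, 1.0 / 64.0))  # f/4 at 1/64 s
print(relative_exposure(10, 11))        # +1 EV lets in half the light
print(relative_exposure(10, 9))         # -1 EV lets in twice the light
```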
