BREAKING NEWS

LATEST POSTS

-

Anne-Laure Le Cunff – The Curse of Knowledge

The Curse of Knowledge is a cognitive bias that occurs when someone incorrectly assumes that others share the same background knowledge, or at least enough of it, to understand them.

You can avoid the negative effects of the curse of knowledge by constantly questioning your assumptions about how much your audience actually knows.

- Get to know your audience

- Simplify your language

- Use storytelling

- Show, don’t tell

- Engage in active teaching

-

AI image generators could be their own demise due to inbreeding

A paper by computer scientists Matyas Bohacek and Hany Farid with the catchy title ‘Nepotistically Trained Generative-AI Models Collapse’ shows that training AI image generators on AI images quite quickly leads to a deterioration in the quality of output. Farid likened the phenomenon to inbreeding. “If a species inbreeds with their own offspring and doesn’t diversify their gene pool, it can lead to a collapse of the species,” he said.

https://www.creativebloq.com/ai/ai-art/research-shows-ai-image-generators-could-be-their-own-demise

https://arxiv.org/pdf/2311.12202

-

Public Work – A search engine for free public domain content

Explore 100,000+ copyright-free images from The MET, New York Public Library, and other sources.

-

The most expensive software bug in human history

How a manually misconfigured and untested server kept running old test code during a live trading session, leading the bot to make $8.65 billion in unintended stock trades in just 28 minutes.

-

Ben Meer – Techniques for Staying Calm in Stressful Situations

https://benmeer.com/newsletter/staying-calm/

Stress is your body’s way of signaling that something important is happening.

- Slow Down

- Breathe

- Write

- Focus on Brain Health

- Zoom Out

- Reframe Negative Words

FEATURED POSTS

-

Capturing textures albedo

Building a Portable PBR Texture Scanner by Stephane Lb

http://rtgfx.com/pbr-texture-scanner/

How To Split Specular And Diffuse In Real Images, by John Hable

http://filmicworlds.com/blog/how-to-split-specular-and-diffuse-in-real-images/

Capturing albedo using a Spectralon

https://www.activision.com/cdn/research/Real_World_Measurements_for_Call_of_Duty_Advanced_Warfare.pdf

Spectralon is a teflon-based pressed powder that comes closest to being a pure Lambertian diffuse material that reflects 100% of all light. If we take an HDR photograph of the Spectralon alongside the material to be measured, we can derive the diffuse albedo of that material.

The process to capture diffuse reflectance is very similar to the one outlined by Hable.

1. We put a linear polarizing filter in front of the camera lens and a second linear polarizing filter in front of a modeling light or a flash such that the two filters are oriented perpendicular to each other, i.e. cross polarized.

2. We place Spectralon close to and parallel with the material we are capturing and take bracketed shots of the setup. Typically, we’ll take nine photographs, from -4EV to +4EV in 1EV increments.

3. We convert the bracketed shots to a linear HDR image. We found that many HDR packages do not produce an HDR image in which the pixel values are linear. PTGui is an example of a package which does generate a linear HDR image. At this point, because of the cross polarization, the image is one of surface diffuse response.

4. We open the file in Photoshop and normalize the image by color picking the Spectralon, filling a new layer with that color and setting that layer to “Divide”. This sets the Spectralon to 1 in the image. All other color values are relative to this so we can consider them as diffuse albedo.
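The “Divide” step in point 4 amounts to a per-channel division by the Spectralon color. A minimal sketch in plain Python, assuming the HDR pixels are already linear RGB triples; the helper names `mean_color` and `normalize_by_reference` are hypothetical, and a real pipeline would more likely run the same arithmetic on a full image array:

```python
def mean_color(pixels):
    """Average a list of linear RGB triples, e.g. pixels sampled on the Spectralon."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def normalize_by_reference(pixels, reference):
    """Divide every pixel by the reference color, channel by channel.

    After this step the reference itself maps to (1, 1, 1) and every other
    value can be read as diffuse albedo relative to the Spectralon.
    """
    return [tuple(p[c] / reference[c] for c in range(3)) for p in pixels]


# Illustrative values only, not measured data.
spectralon_samples = [(0.8, 0.8, 0.8), (0.8, 0.8, 0.8)]
material_samples = [(0.2, 0.4, 0.08)]

reference = mean_color(spectralon_samples)
albedo = normalize_by_reference(material_samples, reference)
print(albedo)
```

The division only gives a meaningful albedo if the input is linear, which is why step 3 insists on a linear HDR merge.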

-

What is the resolution and view coverage of the human eye? And what is the best TV viewing distance?

https://www.discovery.com/science/mexapixels-in-human-eye

About 576 megapixels for the entire field of view.

Consider a view in front of you that is 90 degrees by 90 degrees, like looking through an open window at a scene. The number of pixels would be:

90 degrees * 60 arc-minutes/degree * 1/0.3 * 90 * 60 * 1/0.3 = 324,000,000 pixels (324 megapixels).

At any one moment, you actually do not perceive that many pixels, but your eye moves around the scene to see all the detail you want. But the human eye really sees a larger field of view, close to 180 degrees. Let’s be conservative and use 120 degrees for the field of view. Then we would see:

120 * 120 * 60 * 60 / (0.3 * 0.3) = 576 megapixels.
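The two estimates above follow the same arithmetic, which can be sketched as a small function. This assumes the 0.3 in the formulas is the angular size of one “pixel” in arc-minutes; the function name `eye_megapixels` is hypothetical:

```python
def eye_megapixels(fov_h_deg, fov_v_deg, arcmin_per_pixel=0.3):
    """Estimate the pixel count for a field of view, in megapixels.

    Each degree is 60 arc-minutes, and each pixel is assumed to cover
    `arcmin_per_pixel` arc-minutes along each axis.
    """
    pixels_h = fov_h_deg * 60 / arcmin_per_pixel
    pixels_v = fov_v_deg * 60 / arcmin_per_pixel
    return pixels_h * pixels_v / 1e6


print(eye_megapixels(90, 90))    # about 324 megapixels
print(eye_megapixels(120, 120))  # about 576 megapixels
```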

Or.

7 megapixels for the 2 degree focus arc… + 1 megapixel for the rest.

https://clarkvision.com/articles/eye-resolution.html

Details in the post

-

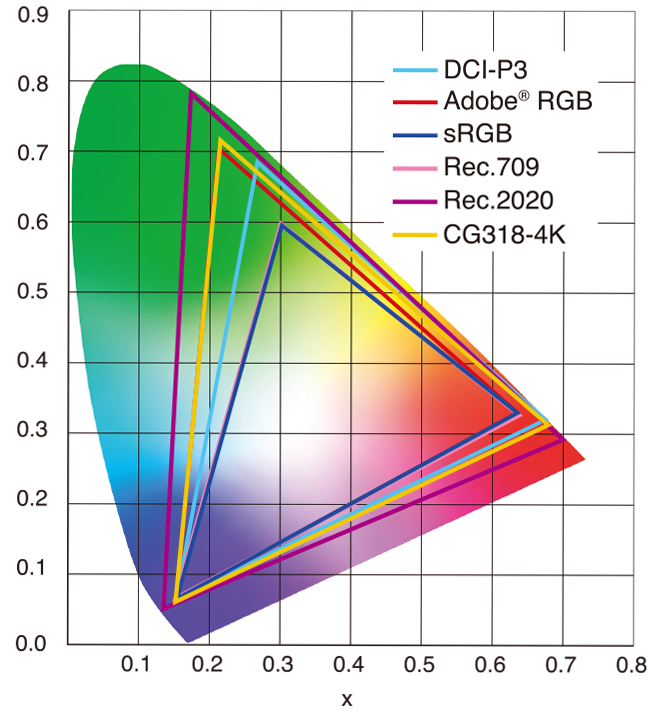

sRGB vs REC709 – An introduction and FFmpeg implementations

1. Basic Comparison

- What they are

  - sRGB: A standard “web”/computer-display RGB color space defined by IEC 61966-2-1. It’s used for most monitors, cameras, printers, and the vast majority of images on the Internet.

  - Rec. 709: An HD-video color space defined by ITU-R BT.709. It’s the go-to standard for HDTV broadcasts, Blu-ray discs, and professional video pipelines.

- Why they exist

  - sRGB: Ensures consistent colors across different consumer devices (PCs, phones, webcams).

  - Rec. 709: Ensures consistent colors across video production and playback chains (cameras → editing → broadcast → TV).

- What you’ll see

  - On your desktop or phone, images tagged sRGB will look “right” without extra tweaking.

  - On an HDTV or video-editing timeline, footage tagged Rec. 709 will display accurate contrast and hue on broadcast-grade monitors.

2. Digging Deeper

| Feature | sRGB | Rec. 709 |
|---|---|---|
| White point | D65 (6504 K) | D65 (6504 K), same as sRGB |
| Primaries (x, y) | R: (0.640, 0.330), G: (0.300, 0.600), B: (0.150, 0.060) | R: (0.640, 0.330), G: (0.300, 0.600), B: (0.150, 0.060) |
| Gamut size | Identical triangle on CIE 1931 chart | Identical to sRGB |
| Gamma / transfer | Piecewise curve: approximately 2.2 with linear toe | Display gamma ≈ 2.4 as a pure power law per BT.1886 (often approximated as 2.2 in practice); the BT.709 camera encoding curve is itself piecewise with a linear toe |
| Matrix coefficients | N/A (pure RGB usage) | Y = 0.2126 R + 0.7152 G + 0.0722 B (Rec. 709 matrix) |
| Typical bit depth | 8-bit/channel (with 16-bit variants) | 8-bit/channel (10-bit for professional video) |
| Usage metadata | Tagged as “sRGB” in image files (PNG, JPEG, etc.) | Tagged as “bt709” in video containers (MP4, MOV) |
| Color range | Full-range RGB (0–255) | Studio-range Y′CbCr (Y′ [16–235], Cb/Cr [16–240]) |
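To make the transfer, matrix, and range entries concrete, here is a minimal Python sketch. The sRGB constants are the standard IEC 61966-2-1 values; the function names are hypothetical, and the studio-range helper covers only 8-bit luma, not chroma:

```python
def srgb_to_linear(v):
    """Decode an sRGB value in [0, 1]: linear toe, then a 2.4 power segment."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def linear_to_srgb(l):
    """Encode linear light in [0, 1] back to sRGB (inverse of srgb_to_linear)."""
    if l <= 0.0031308:
        return l * 12.92
    return 1.055 * l ** (1 / 2.4) - 0.055


def rec709_luma(r, g, b):
    """Weighted sum with the Rec. 709 coefficients.

    Applied to gamma-encoded R'G'B' this gives luma Y'; applied to linear
    RGB it gives relative luminance Y.
    """
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def full_to_studio_luma_8bit(y):
    """Map full-range luma in [0, 1] to the 8-bit studio range 16..235."""
    return round(16 + 219 * y)


print(srgb_to_linear(0.5))  # mid-grey code value is much darker in linear light
print(full_to_studio_luma_8bit(rec709_luma(1.0, 1.0, 1.0)))
```

The combined sRGB curve is close to a 2.2 power law overall, even though the power segment itself uses an exponent of 2.4.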

Why the Small Differences Matter

-

FXGuide – ACES 2.0 with ILM’s Alex Fry

https://draftdocs.acescentral.com/background/whats-new/

ACES 2.0 is the second major release of the components that make up the ACES system. The most significant change is a new suite of rendering transforms whose design was informed by collected feedback and requests from users of ACES 1. The changes aim to improve the appearance of perceived artifacts and to complete previously unfinished components of the system, resulting in a more complete, robust, and consistent product.

Highlights of the key changes in ACES 2.0 are as follows:

- New output transforms, including:

  - A less aggressive tone scale

  - More intuitive controls to create custom outputs to non-standard displays

  - Robust gamut mapping to improve perceptual uniformity

  - Improved performance of the inverse transforms

- Enhanced AMF specification

- An updated specification for ACES Transform IDs

- OpenEXR compression recommendations

- Enhanced tools for generating Input Transforms and recommended procedures for characterizing prosumer cameras

- Look Transform Library

- Expanded documentation

Rendering Transform

The most substantial change in ACES 2.0 is a complete redesign of the rendering transform.

ACES 2.0 was built as a unified system, rather than through piecemeal additions. Different deliverable outputs “match” better and making outputs to display setups other than the provided presets is intended to be user-driven. The rendering transforms are less likely to produce undesirable artifacts “out of the box”, which means less time can be spent fixing problematic images and more time making pictures look the way you want.

Key design goals

- Improve consistency of tone scale and provide an easy to use parameter to allow for outputs between preset dynamic ranges

- Minimize hue skews across exposure range in a region of same hue

- Unify for structural consistency across transform type

- Easy to use parameters to create outputs other than the presets

- Robust gamut mapping to improve harsh clipping artifacts

- Fill extents of output code value cube (where appropriate and expected)

- Invertible – not necessarily reversible, but Output > ACES > Output round-trip should be possible

- Accomplish all of the above while maintaining an acceptable “out-of-the box” rendering

-

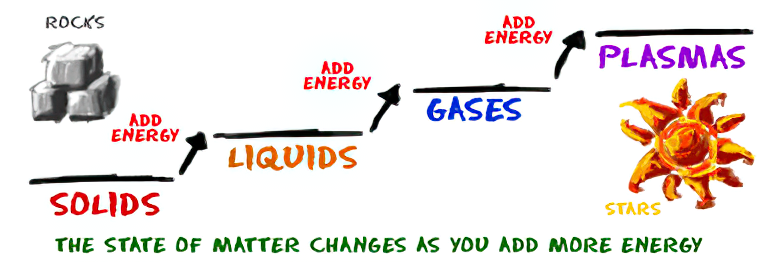

How are Energy and Matter the Same?

www.turnerpublishing.com/blog/detail/everything-is-energy-everything-is-one-everything-is-possible/

www.universetoday.com/116615/how-are-energy-and-matter-the-same/

As Einstein showed us, light and matter are just aspects of the same thing. Matter is just frozen light. And light is matter on the move. Albert Einstein’s most famous equation says that energy and matter are two sides of the same coin. How does one become the other?

Relativity requires that the faster an object moves, the more mass it appears to have. This means that somehow part of the energy of the object’s motion appears to transform into mass. Hence the origin of Einstein’s equation. How does that happen? We don’t really know. We only know that it does.
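The equation referred to here is E = mc². As a rough numeric illustration, the sketch below computes the rest energy of a mass and the Lorentz factor by which total energy grows with speed. The function names are hypothetical and the formulas are the standard special-relativity ones, not taken from the linked articles:

```python
import math

C = 299_792_458.0  # speed of light in m/s (exact by definition)


def rest_energy(mass_kg):
    """Energy equivalent of a mass at rest, E = m * c^2, in joules."""
    return mass_kg * C ** 2


def lorentz_factor(speed_m_s):
    """Factor by which total energy grows for an object moving at this speed."""
    return 1.0 / math.sqrt(1.0 - (speed_m_s / C) ** 2)


def total_energy(mass_kg, speed_m_s):
    """Total energy of a moving object: Lorentz factor times rest energy."""
    return lorentz_factor(speed_m_s) * rest_energy(mass_kg)


print(rest_energy(0.001))        # one gram is on the order of 9e13 joules
print(lorentz_factor(0.6 * C))   # about 1.25 at 60% of light speed
```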

Matter is 99.999999999999 percent empty space. Not only do atoms and solid matter consist mainly of empty space; the same is true of outer space.

Quantum theory researchers discovered the answer: Not only do particles consist of energy, but so does the space between. This is the so-called zero-point energy. Therefore it is true: Everything consists of energy.

Energy is the basis of material reality. Every type of particle is conceived of as a quantum vibration in a field: Electrons are vibrations in electron fields, protons vibrate in a proton field, and so on. Everything is energy, and everything is connected to everything else through fields.