
LATEST POSTS


Kelly Boesch – Static and Toward The Light

https://www.kellyboeschdesign.com

I was working on an album cover last night and got some really cool images in Midjourney, so I made a video out of them. Animated using Pika. Song made using Suno. The full version is on my Bandcamp; it's called Static.

https://www.linkedin.com/posts/kellyboesch_midjourney-keyframes-ai-activity-7359244714853736450-Wvcr

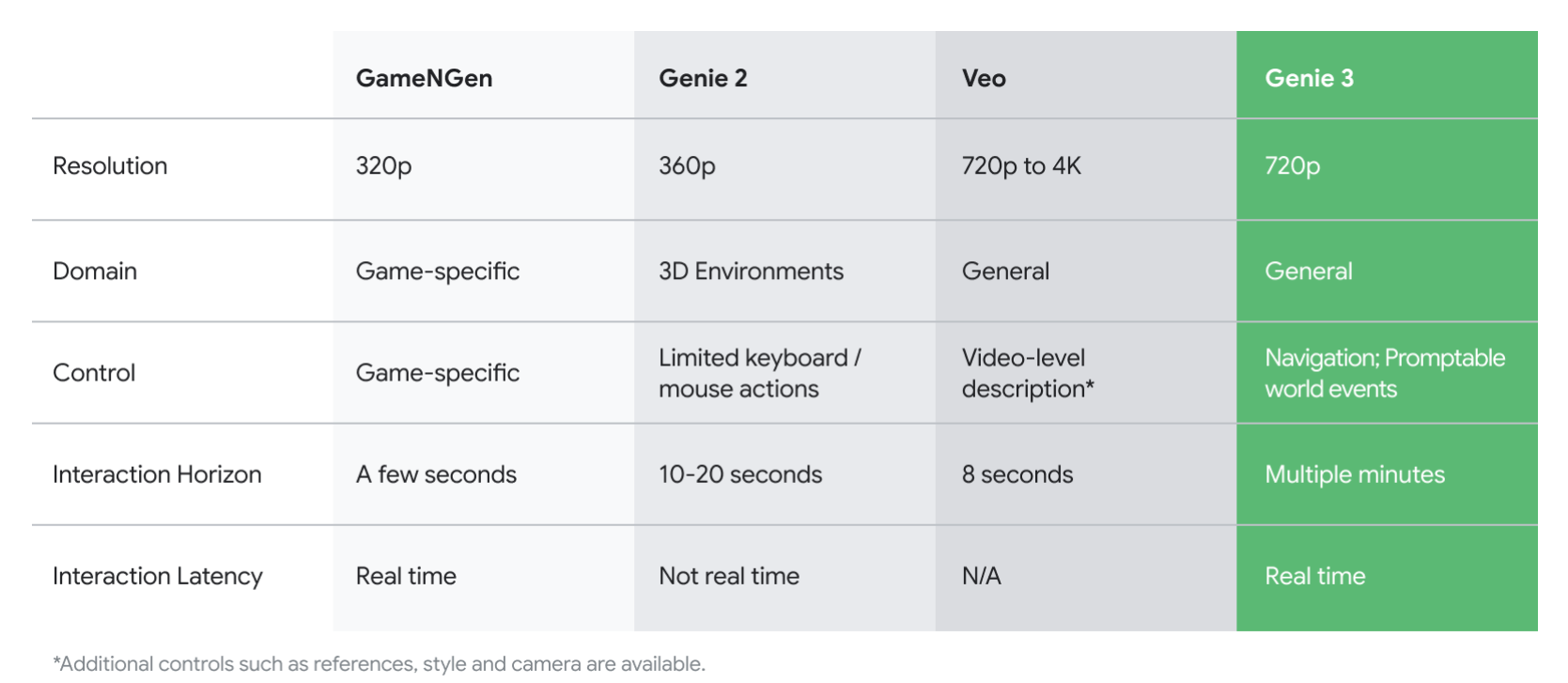

sRGB vs Rec. 709 – An introduction and FFmpeg implementations

1. Basic Comparison

- What they are

- sRGB: A standard “web”/computer-display RGB color space defined by IEC 61966-2-1. It’s used for most monitors, cameras, printers, and the vast majority of images on the Internet.

- Rec. 709: An HD-video color space defined by ITU-R BT.709. It’s the go-to standard for HDTV broadcasts, Blu-ray discs, and professional video pipelines.

- Why they exist

- sRGB: Ensures consistent colors across different consumer devices (PCs, phones, webcams).

- Rec. 709: Ensures consistent colors across video production and playback chains (cameras → editing → broadcast → TV).

- What you’ll see

- On your desktop or phone, images tagged sRGB will look “right” without extra tweaking.

- On an HDTV or video-editing timeline, footage tagged Rec. 709 will display accurate contrast and hue on broadcast-grade monitors.
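A tag is only metadata, so it has to be written when the video is encoded. As a hedged sketch (assuming an FFmpeg build with libx264; the file names are hypothetical), the Python snippet below builds an FFmpeg command that writes Rec. 709 primaries, transfer characteristic, matrix and limited range into an H.264 stream. These flags label the stream rather than convert it, so they only make sense for footage that is already Rec. 709.

```python
"""Build an FFmpeg command that tags an H.264 encode as Rec. 709 (sketch)."""
from __future__ import annotations

import shlex


def bt709_tag_command(src: str, dst: str) -> list[str]:
    """Re-encode src with libx264 and write Rec. 709 color metadata into dst.

    The color flags only label the stream (primaries, transfer, matrix, range);
    they do not convert pixel values, so use them only on footage that is
    already Rec. 709.
    """
    return [
        "ffmpeg",
        "-i", src,
        "-c:v", "libx264",
        "-color_primaries", "bt709",  # BT.709 primaries (same as sRGB)
        "-color_trc", "bt709",        # BT.709 transfer characteristic
        "-colorspace", "bt709",       # BT.709 Y'CbCr matrix coefficients
        "-color_range", "tv",         # studio/limited range (Y' 16-235)
        "-c:a", "copy",               # pass audio through untouched
        dst,
    ]


if __name__ == "__main__":
    cmd = bt709_tag_command("input.mov", "output_bt709.mp4")  # hypothetical file names
    print(shlex.join(cmd))
    # To run it for real: subprocess.run(cmd, check=True)
```

Building the arguments as a list (instead of one shell string) avoids quoting problems with file names that contain spaces.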

2. Digging Deeper

| Feature | sRGB | Rec. 709 |
|---|---|---|
| White point | D65 (6504 K) | D65 (6504 K), same as sRGB |
| Primaries (x, y) | R: (0.640, 0.330), G: (0.300, 0.600), B: (0.150, 0.060) | R: (0.640, 0.330), G: (0.300, 0.600), B: (0.150, 0.060) |
| Gamut size | Triangle on the CIE 1931 chart | Identical to sRGB |
| Transfer function | Piecewise: linear toe near black, then a 2.4 power with offset; overall approximates γ 2.2 | Camera OETF is piecewise: linear toe near black, then a 0.45 power with offset. The reference display EOTF (ITU-R BT.1886) is essentially a pure power law, γ = 2.4 |
| Matrix coefficients | N/A (pure RGB usage) | Y′ = 0.2126 R′ + 0.7152 G′ + 0.0722 B′ (Rec. 709 luma) |
| Typical bit depth | 8-bit/channel (with 16-bit variants) | 8-bit/channel (10-bit for professional video) |
| Usage metadata | Tagged as “sRGB” in image files (PNG, JPEG, etc.) | Tagged as “bt709” in video containers (MP4, MOV) |
| Color range | Full-range RGB (0–255) | Studio-range Y′CbCr (Y′ 16–235, Cb/Cr 16–240) |
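To make the transfer-function, matrix and range rows concrete, here is a minimal Python sketch using the published constants (IEC 61966-2-1 for sRGB, ITU-R BT.709 and BT.1886 for Rec. 709). It is illustrative only: it works on single normalized values, assumes a zero display black level for BT.1886, and ignores 10-bit code values and chroma subsampling. The function names are hypothetical and not part of FFmpeg or any library.

```python
"""Rec. 709 vs sRGB transfer functions and BT.709 Y'CbCr encoding (sketch)."""
from __future__ import annotations


def srgb_encode(linear: float) -> float:
    """sRGB (IEC 61966-2-1): linear light in [0, 1] -> encoded value in [0, 1]."""
    if linear <= 0.0031308:
        return 12.92 * linear                      # linear toe near black
    return 1.055 * linear ** (1 / 2.4) - 0.055     # offset 2.4 power segment


def srgb_decode(encoded: float) -> float:
    """Inverse of srgb_encode: encoded value in [0, 1] -> linear light."""
    if encoded <= 0.04045:
        return encoded / 12.92
    return ((encoded + 0.055) / 1.055) ** 2.4


def bt709_oetf(linear: float) -> float:
    """BT.709 camera OETF: scene-linear light in [0, 1] -> encoded value in [0, 1]."""
    if linear < 0.018:
        return 4.5 * linear                        # linear toe near black
    return 1.099 * linear ** 0.45 - 0.099          # offset 0.45 power segment


def bt1886_eotf(encoded: float) -> float:
    """BT.1886 display EOTF, assuming a zero black level: a pure 2.4 power law."""
    return encoded ** 2.4


def bt709_rgb_to_ycbcr(r: float, g: float, b: float) -> tuple[float, float, float]:
    """Gamma-encoded R'G'B' in [0, 1] -> Y' in [0, 1], Cb/Cr in [-0.5, 0.5]."""
    y = 0.2126 * r + 0.7152 * g + 0.0722 * b      # Rec. 709 luma coefficients
    cb = (b - y) / 1.8556                          # 1.8556 = 2 * (1 - 0.0722)
    cr = (r - y) / 1.5748                          # 1.5748 = 2 * (1 - 0.2126)
    return y, cb, cr


def to_studio_range_8bit(y: float, cb: float, cr: float) -> tuple[int, int, int]:
    """Quantize to 8-bit studio range: Y' 16-235, Cb/Cr 16-240 (128 = neutral)."""
    return round(16 + 219 * y), round(128 + 224 * cb), round(128 + 224 * cr)


if __name__ == "__main__":
    mid_grey = 0.18  # 18% grey, linear light
    print(f"sRGB encode: {srgb_encode(mid_grey):.3f}")   # 0.461
    print(f"BT.709 OETF: {bt709_oetf(mid_grey):.3f}")    # 0.409
    for name, rgb in {"white": (1, 1, 1), "black": (0, 0, 0), "blue": (0, 0, 1)}.items():
        print(name, to_studio_range_8bit(*bt709_rgb_to_ycbcr(*rgb)))
```

The same 18% grey lands at different code values under the two curves, which is one reason a file decoded with the wrong transfer function shows shifted midtones. White maps to (235, 128, 128) and black to (16, 128, 128), matching the studio-range row of the table.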

Why the Small Differences Matter


Narcis Calin’s Galaxy Engine – A free, open-source simulation software

In 2025 I decided to start learning how to code, so I installed Visual Studio and started looking into C++. After days of watching tutorials and guides on the basics of C++ and programming, I decided to make something physics-related. I started with a dot that fell to the ground; then I wanted to simulate gravitational attraction, so I made two circles attracting each other. It was really cool to see something I had made with code actually work, so I kept building on top of that small, basic program.

And here we are after roughly 8 months of learning programming. This is Galaxy Engine, the simulation software I have been making ever since I started my learning journey. It can currently simulate gravity, dark matter, galaxies, the Big Bang, temperature, fluid dynamics, breakable solids, planetary interactions, and more. The program can run many tens of thousands of particles in real time on the CPU thanks to the Barnes-Hut algorithm combined with Morton (Z-order) curves.

It also includes its own 2D PBR path tracer with BVH acceleration. The path tracer can simulate diffuse lighting, specular reflections, refraction, internal reflection, Fresnel effects, emission, dispersion, roughness, IOR, nested IOR and more! I tried to make the path tracer behave more like traditional 3D render engines such as V-Ray.

I honestly never imagined I would get this far with programming, and it has been an amazing learning experience so far. I think combining this knowledge with my 3D knowledge can unlock countless new possibilities. In case you are curious about Galaxy Engine, I made it completely free and open source so that anyone can build and compile it locally! You can find the source code on GitHub:

https://github.com/NarcisCalin/Galaxy-Engine
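Galaxy Engine's own C++ code is on GitHub and is not reproduced here. As a hedged illustration of the general idea only, the Python sketch below gives 2D particles Morton (Z-order) keys by interleaving the bits of their quantized x and y coordinates; sorting by that key places spatially nearby particles next to each other in memory, which is a common way to build and traverse a Barnes-Hut quadtree efficiently. The function names, the 16-bit quantization and the θ = 0.5 default in the standard Barnes-Hut opening test are assumptions for illustration, not taken from the project.

```python
"""Sorting 2D particles along a Morton (Z-order) curve (illustrative sketch)."""
from __future__ import annotations


def spread_bits_16(n: int) -> int:
    """Insert a zero bit between each of the low 16 bits of n (0b1011 -> 0b1000101)."""
    n &= 0xFFFF
    n = (n | (n << 8)) & 0x00FF00FF
    n = (n | (n << 4)) & 0x0F0F0F0F
    n = (n | (n << 2)) & 0x33333333
    n = (n | (n << 1)) & 0x55555555
    return n


def morton_code(qx: int, qy: int) -> int:
    """Interleave x bits (even positions) and y bits (odd positions) into one key."""
    return spread_bits_16(qx) | (spread_bits_16(qy) << 1)


def morton_order(points: list[tuple[float, float]],
                 lo: tuple[float, float],
                 hi: tuple[float, float]) -> list[int]:
    """Return point indices sorted by Morton code inside the box lo=(x0, y0), hi=(x1, y1)."""
    (x0, y0), (x1, y1) = lo, hi
    scale = 0xFFFF  # quantize each axis to 16 bits

    def quantize(v: float, a: float, b: float) -> int:
        t = (v - a) / (b - a)
        return int(min(max(t, 0.0), 1.0) * scale)  # clamp points outside the box

    return sorted(
        range(len(points)),
        key=lambda i: morton_code(quantize(points[i][0], x0, x1),
                                  quantize(points[i][1], y0, y1)),
    )


def accept_as_point_mass(node_size: float, distance: float, theta: float = 0.5) -> bool:
    """Barnes-Hut opening test: treat a node as one mass when size / distance < theta."""
    return node_size < theta * distance


if __name__ == "__main__":
    pts = [(0.9, 0.9), (0.1, 0.1), (0.9, 0.1), (0.1, 0.9)]
    print(morton_order(pts, (0.0, 0.0), (1.0, 1.0)))  # [1, 2, 3, 0]
```

The four points come out in the characteristic "Z" pattern: bottom-left, bottom-right, top-left, top-right. A smaller θ opens more tree nodes, trading speed for accuracy.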

FEATURED POSTS


Google Gemini Robotics

For safety considerations, Google mentions a “layered, holistic approach” that maintains traditional robot safety measures like collision avoidance and force limitations. The company describes developing a “Robot Constitution” framework inspired by Isaac Asimov’s Three Laws of Robotics and releasing a dataset unsurprisingly called “ASIMOV” to help researchers evaluate safety implications of robotic actions.

This new ASIMOV dataset represents Google’s attempt to create standardized ways to assess robot safety beyond physical harm prevention. The dataset appears designed to help researchers test how well AI models understand the potential consequences of actions a robot might take in various scenarios. According to Google’s announcement, the dataset will “help researchers to rigorously measure the safety implications of robotic actions in real-world scenarios.”


Laowa 25mm f/2.8 2.5-5X Ultra Macro vs 100mm f/2.8 2x lens

https://gilwizen.com/laowa-25mm-ultra-macro-lens-review/

https://www.cameralabs.com/laowa-25mm-f2-8-2-5-5x-ultra-macro-review/

- Pros:

– Lightweight, small size for a high-magnification macro lens

– Highest magnification lens available for non-Canon users

– Excellent sharpness and image quality

– Consistent working distance

– Narrow lens barrel makes it easy to find and track subject

– Affordable

- Cons:

– Manual, no auto aperture control

– No filter thread (but still customizable with caution)

– Dark viewfinder when closing aperture makes focusing difficult in poor light conditions

– Narrow magnification range (2.5-5x) compared to the competition

Combining a Laowa 25mm 2.5x lens with a Kenko 12mm extension tube

To estimate the combined magnification of a Laowa 25mm 2.5x lens on a 12mm Kenko extension tube, start from the magnification of the lens itself and add the contribution of the tube.

First, consider the magnification of the lens itself, which is 2.5x at its minimum setting and 5x at its maximum.

Then, to find the total magnification with the extension tube attached, use the thin-lens relation magnification = image distance / focal length − 1. An extension tube lengthens the image distance by its own length, so it adds (Extension Tube Length / Focal Length of the Lens) to the magnification, regardless of the starting magnification:

Total Magnification = Magnification of the Lens + (Extension Tube Length / Focal Length of the Lens)

In this case, the extension tube length is 12mm and the focal length of the lens is 25mm, so the tube adds 12 / 25 = 0.48x:

Total Magnification with 2.5x = 2.5 + (12 / 25) = 2.5 + 0.48 = 2.98x (about 3x)

Total Magnification with 5x = 5 + (12 / 25) = 5 + 0.48 = 5.48x (about 5.5x)

Because 25mm is the nominal focal length of a multi-element lens, treat these figures as approximations.
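Under the thin-lens model, the magnification gained from an extension tube is extension / focal length and does not scale with the starting magnification. The short Python sketch below checks this by computing the image distance v = f(1 + m), adding the tube length to it and recomputing m = v / f − 1. It assumes an ideal thin lens with a fixed 25 mm focal length, which the Laowa (a multi-element lens whose effective focal length may differ from the nominal value at high magnification) is not, so treat the results as rough estimates. The function names are illustrative.

```python
"""Thin-lens estimate of magnification with an extension tube (sketch)."""


def image_distance(focal_mm: float, magnification: float) -> float:
    """Thin lens: image distance v = f * (1 + m), measured from the rear principal plane."""
    return focal_mm * (1 + magnification)


def magnification_with_extension(base_mag: float, extension_mm: float, focal_mm: float) -> float:
    """Add the tube length to v and recompute m = v / f - 1 (a gain of extension / f)."""
    v = image_distance(focal_mm, base_mag) + extension_mm
    return v / focal_mm - 1


if __name__ == "__main__":
    for m in (2.5, 5.0):
        total = magnification_with_extension(m, extension_mm=12, focal_mm=25)
        print(f"{m}x + 12 mm tube on a 25 mm lens -> about {total:.2f}x")  # 2.98x, 5.48x
```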


Top 3D Printing Website Resources

The Holy Grail – https://github.com/ad-si/awesome-3d-printing

- Thingiverse – https://www.thingiverse.com/

- Makerworld – https://makerworld.com/

- Printables – https://www.printables.com/

- Cults – https://cults3d.com/

- CG Trader – https://www.cgtrader.com/3d-print-models

- Sketchfab – https://sketchfab.com/store/3d-models/stl

- 3D Export – https://3dexport.com/

- MyMiniFactory – https://www.myminifactory.com/

- Thangs – https://thangs.com/

- Yeggi – https://www.yeggi.com/

- FAB365 – https://fab365.net/

- Gambody – https://www.gambody.com/

- All3DP News – https://all3dp.com/

- TCT Magazine – https://www.tctmagazine.com/topics/3D-printing-news/

- 3DPrint.com – https://3dprint.com/

- NASA 3D Models – https://nasa3d.arc.nasa.gov/models/printable


AI Data Laundering: How Academic and Nonprofit Researchers Shield Tech Companies from Accountability

“Simon Willison created a Datasette browser to explore WebVid-10M, one of the two datasets used to train the video generation model, and quickly learned that all 10.7 million video clips were scraped from Shutterstock, watermarks and all.”

“In addition to the Shutterstock clips, Meta also used 10 million video clips from this 100M video dataset from Microsoft Research Asia. It’s not mentioned on their GitHub, but if you dig into the paper, you learn that every clip came from over 3 million YouTube videos.”

“It’s become standard practice for technology companies working with AI to commercially use datasets and models collected and trained by non-commercial research entities like universities or non-profits.”

“Like with the artists, photographers, and other creators found in the 2.3 billion images that trained Stable Diffusion, I can’t help but wonder how the creators of those 3 million YouTube videos feel about Meta using their work to train their new model.”


Brett Jones / Phil Reyneri (Lightform) / Philipp7pc: The study of Projection Mapping through Projectors

Video Projection Tool Software

https://hcgilje.wordpress.com/vpt/

https://www.projectorpoint.co.uk/news/how-bright-should-my-projector-be/

http://www.adwindowscreens.com/the_calculator/

HeavyM

https://heavym.net/en/

MadMapper

https://madmapper.com/