
LATEST POSTS

-

The philosophy of Charles Schulz, the creator of the ‘Peanuts’ comic strip

- Name the five wealthiest people in the world.

- Name the last five Heisman Trophy winners.

- Name the last five winners of the Miss America pageant.

- Name ten people who have won the Nobel or Pulitzer Prize.

- Name the last half dozen Academy Award winners for best actor and actress.

- Name the last decade’s worth of World Series winners.

How did you do?

The point is, none of us remember the headliners of yesterday.

These are no second-rate achievers. They are the best in their fields.

But the applause dies.

Awards tarnish …

Achievements are forgotten.

Accolades and certificates are buried with their owners.

Here’s another quiz. See how you do on this one:

- List a few teachers who aided your journey through school.

- Name three friends who have helped you through a difficult time.

- Name five people who have taught you something worthwhile.

- Think of a few people who have made you feel appreciated and special.

- Think of five people you enjoy spending time with.

Easier?

-

The God of War Texture Optimization Algorithm: Mip Flooding

“…delve into an algorithm developed by Sean Feeley, a Senior Staff Environment Tech Artist who is part of the creative minds at Santa Monica Studio. This algorithm, originally designed to address edge inaccuracy on foliage, has the potential to revolutionize the way we approach texture optimization in the gaming industry.”
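The quote names the technique but does not describe its steps. In the usual description, mip flooding first builds a mip chain in which each level averages only the opaque (covered) texels. It then composites the levels back from coarsest to finest, so every transparent texel inherits the color of its nearest opaque neighborhood. The Python sketch below illustrates that idea and rests on several assumptions: a single color channel, a binary coverage mask standing in for alpha, a square power-of-two texture, and a plain 2×2 box filter. It is not Santa Monica Studio's implementation, and the function names are hypothetical.

```python
def downsample_masked(color, mask):
    """Halve resolution, averaging only texels whose coverage mask is set."""
    h, w = len(color) // 2, len(color[0]) // 2
    out_color = [[0.0] * w for _ in range(h)]
    out_mask = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in (0, 1):
                for dx in (0, 1):
                    if mask[2 * y + dy][2 * x + dx]:
                        total += color[2 * y + dy][2 * x + dx]
                        count += 1
            if count:  # a coarse texel is covered if any child texel is covered
                out_color[y][x] = total / count
                out_mask[y][x] = 1
    return out_color, out_mask


def mip_flood(color, mask):
    """Fill uncovered texels with colors flooded up from coarser masked mips."""
    size = len(color)
    assert size == len(color[0]) and size & (size - 1) == 0, "square power-of-two texture assumed"
    # Build the masked mip chain down to 1x1.
    levels = [(color, mask)]
    while len(levels[-1][0]) > 1:
        levels.append(downsample_masked(*levels[-1]))
    # Composite from coarsest to finest: keep covered texels, otherwise
    # take the already-flooded parent texel from the next coarser level.
    filled = levels[-1][0]
    for lvl_color, lvl_mask in reversed(levels[:-1]):
        n = len(lvl_color)
        filled = [[lvl_color[y][x] if lvl_mask[y][x] else filled[y // 2][x // 2]
                   for x in range(n)] for y in range(n)]
    return filled
```

Only the color of uncovered texels changes. Alpha is left as it was, so the fill is invisible at full resolution, but lower mips no longer average arbitrary background values into foliage edges. Smooth fill regions also tend to compress better than noisy backgrounds.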

-

TurboSquid moves toward supporting AI, against its own policies

https://www.turbosquid.com/ai-3d-generator

The AI is being trained using a mix of Shutterstock 2D imagery and 3D models drawn from the TurboSquid marketplace. However, it’s only being trained on models that artists have approved for this use.

People cannot generate a model and then immediately sell it. However, a generated 3D model can be used as a starting point for further customization, and the result could then be sold on the TurboSquid marketplace. Note that models created using our generative 3D tool, and their derivatives, can only be sold on the TurboSquid marketplace.

TurboSquid does not accept AI-generated content from our artists

As AI-powered tools become more accessible, it is important for us to address the impact AI has on our artist community as it relates to content made licensable on TurboSquid. TurboSquid, in line with its parent company Shutterstock, is taking an ethically responsible approach to AI on its platforms. We want to ensure that artists are properly compensated for their contributions to AI projects while supporting customers with the protections and coverage issued through the TurboSquid license.

In order to ensure that customers are protected, that intellectual property is not misused, and that artists are compensated for their work, TurboSquid will not accept AI-generated content for upload and sale on our marketplace. Per our Publisher Agreement, artists must have proven IP ownership of all content that is submitted. AI-generated content is produced using machine learning models that are trained on many other creative assets. As a result, we cannot accept content generated by AI, because its authorship cannot be attributed to an individual person and we would be unable to ensure that all artists who were involved in the generation of that content are compensated.

-

How to View Apple’s Spatial Videos

https://blog.frame.io/2024/02/01/how-to-capture-and-view-vision-pro-spatial-video/

Apple’s spatial video format is a special container for 3D, or “spatial,” video. You can capture spatial video either by using the Vision Pro as a head-mounted camera or with an iPhone 15 Pro or 15 Pro Max. The headset offers better capture because its cameras are better optimized for 3D, which gives higher resolution and improved depth effects.

The iPhone wasn’t designed specifically as a 3D camera, but it can use its primary and ultrawide cameras simultaneously to capture spatial video, provided you hold it horizontally (landscape orientation). Computational photography compensates for the differences between the two lenses, and the output is two separate 1080p, 30fps views, one for each eye.

These spatial videos are stored in the MV-HEVC (Multiview High Efficiency Video Coding) format. MV-HEVC extends H.265 (HEVC) compression and brings the footage down to approximately 130MB per minute, including spatial audio. Conventional stereoscopic formats combine the two views into one flattened frame, either side-by-side or top/bottom. MV-HEVC instead keeps the two views as separate layers within the file and encodes the second view relative to the first.
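The “approximately 130MB per minute” figure is easier to compare with other codecs once it is converted to an average bitrate. The small sketch below does that conversion. It assumes decimal units (1 MB = 8 megabits, 1 GB = 1000 MB), and the function names are hypothetical.

```python
def mb_per_minute_to_mbps(mb_per_minute: float) -> float:
    """Average bitrate in megabits per second (decimal units: 1 MB = 8 Mb)."""
    return mb_per_minute * 8 / 60


def gb_per_hour(mb_per_minute: float) -> float:
    """Storage needed for one hour of footage, in decimal gigabytes."""
    return mb_per_minute * 60 / 1000


print(f"{mb_per_minute_to_mbps(130):.1f} Mbit/s, {gb_per_hour(130):.1f} GB per hour")
```

At 130MB per minute this works out to roughly 17.3 Mbit/s, or about 7.8 GB per hour of spatial video, audio included.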

Spatialify is an iOS app designed to view and convert various 3D formats. It also runs well on macOS, provided your Mac has an Apple Silicon CPU, and it supports MV-HEVC. It costs just $4.99, a genuine bargain considering what it does.

FEATURED POSTS

-

Photography basics: Production Rendering Resolution Charts

https://www.urtech.ca/2019/04/solved-complete-list-of-screen-resolution-names-sizes-and-aspect-ratios/

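| Name | Resolution | Aspect ratio (pixel dimensions) |
|---|---|---|
| CGA | 320 x 200 | 16:10 |
| QVGA | 320 x 240 | 4:3 |
| VGA (SD, Standard Definition) | 640 x 480 | 4:3 |
| NTSC | 720 x 480 | 3:2 |
| WVGA | 854 x 480 | ≈16:9 |
| WVGA | 800 x 480 | 5:3 |
| PAL | 768 x 576 | 4:3 |
| SVGA | 800 x 600 | 4:3 |
| XGA | 1024 x 768 | 4:3 |
| not named | 1152 x 768 | 3:2 |
| HD 720 (720P, High Definition) | 1280 x 720 | 16:9 |
| WXGA | 1280 x 800 | 16:10 |
| WXGA | 1280 x 768 | 5:3 |
| SXGA | 1280 x 1024 | 5:4 |
| not named (768P, HD, High Definition) | 1366 x 768 | ≈16:9 |
| not named | 1440 x 960 | 3:2 |
| SXGA+ | 1400 x 1050 | 4:3 |
| WSXGA | 1680 x 1050 | 16:10 |
| UXGA (2MP) | 1600 x 1200 | 4:3 |
| HD1080 (1080P, Full HD) | 1920 x 1080 | 16:9 |
| WUXGA | 1920 x 1200 | 16:10 |
| 2K | 2048 x (any) | varies |
| QWXGA | 2048 x 1152 | 16:9 |
| QXGA (3MP) | 2048 x 1536 | 4:3 |
| WQXGA | 2560 x 1600 | 16:10 |
| QHD (Quad HD) | 2560 x 1440 | 16:9 |
| QSXGA (5MP) | 2560 x 2048 | 5:4 |
| 4K UHD (4K, Ultra HD, Ultra-High Definition) | 3840 x 2160 | 16:9 |
| QUXGA+ | 3840 x 2400 | 16:10 |
| IMAX 3D | 4096 x 3072 | 4:3 |
| 8K UHD (8K, 8K Ultra HD, UHDTV) | 7680 x 4320 | 16:9 |
| 10K (10240×4320, 10K HD) | 10240 x (any) | varies |
| 16K (Quad UHD, 16K UHD, 8640P) | 15360 x 8640 | 16:9 |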
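The aspect ratio of any entry in the chart can be checked directly from its pixel dimensions. The sketch below does two things. It reduces width:height by their greatest common divisor, and it picks the closest of the six named ratios the chart uses. The dictionary of standard ratios and the function names are assumptions made for illustration.

```python
from math import gcd

# The six named ratios used by the chart (assumed list).
STANDARD_RATIOS = {"4:3": 4 / 3, "16:9": 16 / 9, "16:10": 16 / 10,
                   "3:2": 3 / 2, "5:3": 5 / 3, "5:4": 5 / 4}


def reduced_ratio(width: int, height: int) -> tuple[int, int]:
    """Exact ratio in lowest terms, e.g. 1920x1080 -> (16, 9)."""
    d = gcd(width, height)
    return width // d, height // d


def nearest_standard(width: int, height: int) -> str:
    """Name of the standard ratio closest to width / height."""
    r = width / height
    return min(STANDARD_RATIOS, key=lambda name: abs(STANDARD_RATIOS[name] - r))


print(reduced_ratio(1280, 800), nearest_standard(1280, 800))
print(reduced_ratio(1366, 768), nearest_standard(1366, 768))
```

Two details are worth noticing. 16:10 reduces to 8:5 in lowest terms. And 1366 x 768 reduces to 683:384, so that panel is only approximately 16:9.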

-

Gamma correction

http://www.normankoren.com/makingfineprints1A.html#Gammabox

https://en.wikipedia.org/wiki/Gamma_correction

http://www.photoscientia.co.uk/Gamma.htm

https://www.w3.org/Graphics/Color/sRGB.html

http://www.eizoglobal.com/library/basics/lcd_display_gamma/index.html

https://forum.reallusion.com/PrintTopic308094.aspx

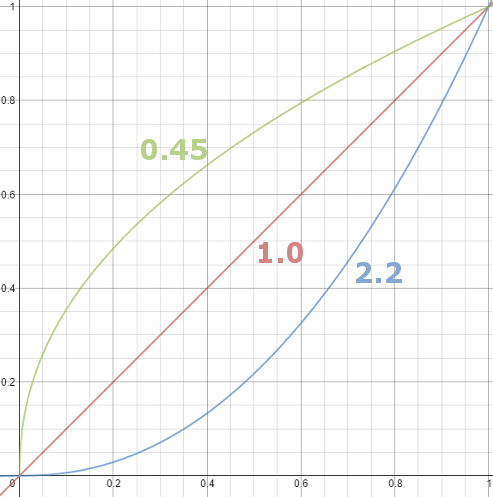

Basically, gamma is the relationship between the numerical value of a pixel and the brightness with which that pixel appears on screen. More generally, a gamma value simply defines a relationship between an input and an output value, usually a power law of the form output = input^γ.
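Here is a concrete version of that relationship. The sketch below compares a pure power-law encode (gamma 2.2) with the piecewise sRGB transfer curve, using the constants standardized in IEC 61966-2-1. Older drafts, including the linked W3C page, use slightly different breakpoints. Values are assumed normalized to [0, 1], and the function names are hypothetical.

```python
def power_gamma_encode(linear: float, gamma: float = 2.2) -> float:
    """Encode linear light with a pure power law (exponent 1/gamma)."""
    return linear ** (1 / gamma)


def srgb_encode(linear: float) -> float:
    """Linear light -> sRGB value: linear toe, then a 1/2.4 power segment."""
    if linear <= 0.0031308:
        return 12.92 * linear
    return 1.055 * linear ** (1 / 2.4) - 0.055


def srgb_decode(encoded: float) -> float:
    """sRGB value -> linear light (inverse of srgb_encode)."""
    if encoded <= 0.04045:
        return encoded / 12.92
    return ((encoded + 0.055) / 1.055) ** 2.4


# 18% gray in linear light lands just below the middle of the encoded range.
print(round(power_gamma_encode(0.18), 3), round(srgb_encode(0.18), 3))
```

The two curves give nearly the same result for mid-gray (about 0.459 vs 0.461). This is why sRGB is often described as "roughly gamma 2.2".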

There are three main types:

– Image gamma: the gamma encoded in the image itself.

– Display gamma: the gamma applied by the display hardware and/or at viewing time.

– System (or viewing) gamma: the net effect of all gammas when you look at the final image. In theory, this should flatten back to a gamma of 1.0.
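For pure power-law stages, the system gamma is simply the product of the individual exponents, because (v^a)^b = v^(a·b). The sketch below assumes an image encoded with exponent 1/2.2 and a display that applies exponent 2.2 or 2.4. Real pipelines such as sRGB are only approximately power laws. The function names are hypothetical.

```python
import math


def system_gamma(*stage_gammas: float) -> float:
    """Net exponent of a chain of pure power-law stages."""
    return math.prod(stage_gammas)


def view(value: float, image_gamma: float, display_gamma: float) -> float:
    """Light reaching the viewer: encode with image gamma, then display gamma."""
    encoded = value ** image_gamma       # what is stored in the file
    return encoded ** display_gamma     # what the display emits


print(system_gamma(1 / 2.2, 2.2))   # matched stages: net gamma of 1.0
print(system_gamma(1 / 2.2, 2.4))   # mismatched display: about 1.09, slightly contrastier
```

A matched pair returns the original linear value, which is the "flatten back to 1.0" case. A display gamma above the encoding gamma leaves a net exponent greater than 1, so midtones end up darker.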


-

Narcis Calin’s Galaxy Engine – free, open-source simulation software

In 2025 I decided to start learning how to code, so I installed Visual Studio and started looking into C++. After days of watching tutorials and guides on the basics of C++ and programming, I decided to make something physics-related. I started with a dot that fell to the ground. Then I wanted to simulate gravitational attraction, so I made two circles attract each other. I thought it was really cool to see something I had made with code actually work, so I kept building on top of that small, basic program.

And here we are, after roughly 8 months of learning programming. This is Galaxy Engine, a simulation program I have been building ever since I started my learning journey. It can currently simulate gravity, dark matter, galaxies, the Big Bang, temperature, fluid dynamics, breakable solids, planetary interactions, etc. The program can run many tens of thousands of particles in real time on the CPU thanks to the Barnes-Hut algorithm, combined with Morton curves.

It also includes its own physically based (PBR) 2D path tracer with BVH optimizations. The path tracer can simulate diffuse lighting, specular reflections, refraction, internal reflection, Fresnel effects, emission, dispersion, roughness, IOR, nested IOR and more! I tried to bring the path tracer closer to traditional 3D render engines like V-Ray.

I honestly never imagined I would get this far with programming, and it has been an amazing learning experience so far. I think that combining this knowledge with my 3D knowledge can unlock countless new possibilities. In case you are curious about Galaxy Engine, I made it completely free and open source so that anyone can build and compile it locally! You can find the source code on GitHub:

https://github.com/NarcisCalin/Galaxy-Engine
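The post credits Barnes-Hut "combined with Morton curves" for the real-time particle counts. It does not say how the two are combined. A common pattern is to sort particles by their Morton (Z-order) code, so that particles close in space also sit close in memory, which makes building and traversing a Barnes-Hut quadtree cheaper. The Python sketch below shows only the Morton-code part, assuming 2D positions in a known bounding box and 16 bits per axis. It is not Galaxy Engine's code, and the function names are hypothetical.

```python
def part1by1(n: int) -> int:
    """Spread the low 16 bits of n so a zero bit sits between each original bit."""
    n &= 0x0000FFFF
    n = (n | (n << 8)) & 0x00FF00FF
    n = (n | (n << 4)) & 0x0F0F0F0F
    n = (n | (n << 2)) & 0x33333333
    n = (n | (n << 1)) & 0x55555555
    return n


def morton2d(ix: int, iy: int) -> int:
    """Interleave bits: x bits in even positions, y bits in odd positions."""
    return part1by1(ix) | (part1by1(iy) << 1)


def quantize(v: float, lo: float, hi: float) -> int:
    """Map a coordinate in [lo, hi] to a 16-bit integer grid cell."""
    t = (v - lo) / (hi - lo)
    return min(max(int(t * 65535), 0), 65535)


def sort_by_morton(points, lo=0.0, hi=1.0):
    """Order (x, y) points along the Z-order curve over the box [lo, hi]^2."""
    return sorted(points, key=lambda p: morton2d(quantize(p[0], lo, hi),
                                                 quantize(p[1], lo, hi)))


print(sort_by_morton([(0.9, 0.9), (0.1, 0.1), (0.6, 0.1)]))
```

Each Morton code is a path through a quadtree: successive pairs of bits, read from the top, select the child quadrant at each depth. This is why particles sorted by code fall into contiguous runs for every tree node.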