LATEST POSTS

-

LumaLabs.ai – Introducing Modify Video

https://lumalabs.ai/blog/news/introducing-modify-video

Reimagine any video. Shoot it in post with director-grade control over style, character, and setting. Restyle expressive actions and performances, swap entire worlds, or redesign the frame to your vision.

Shoot once. Shape infinitely.

-

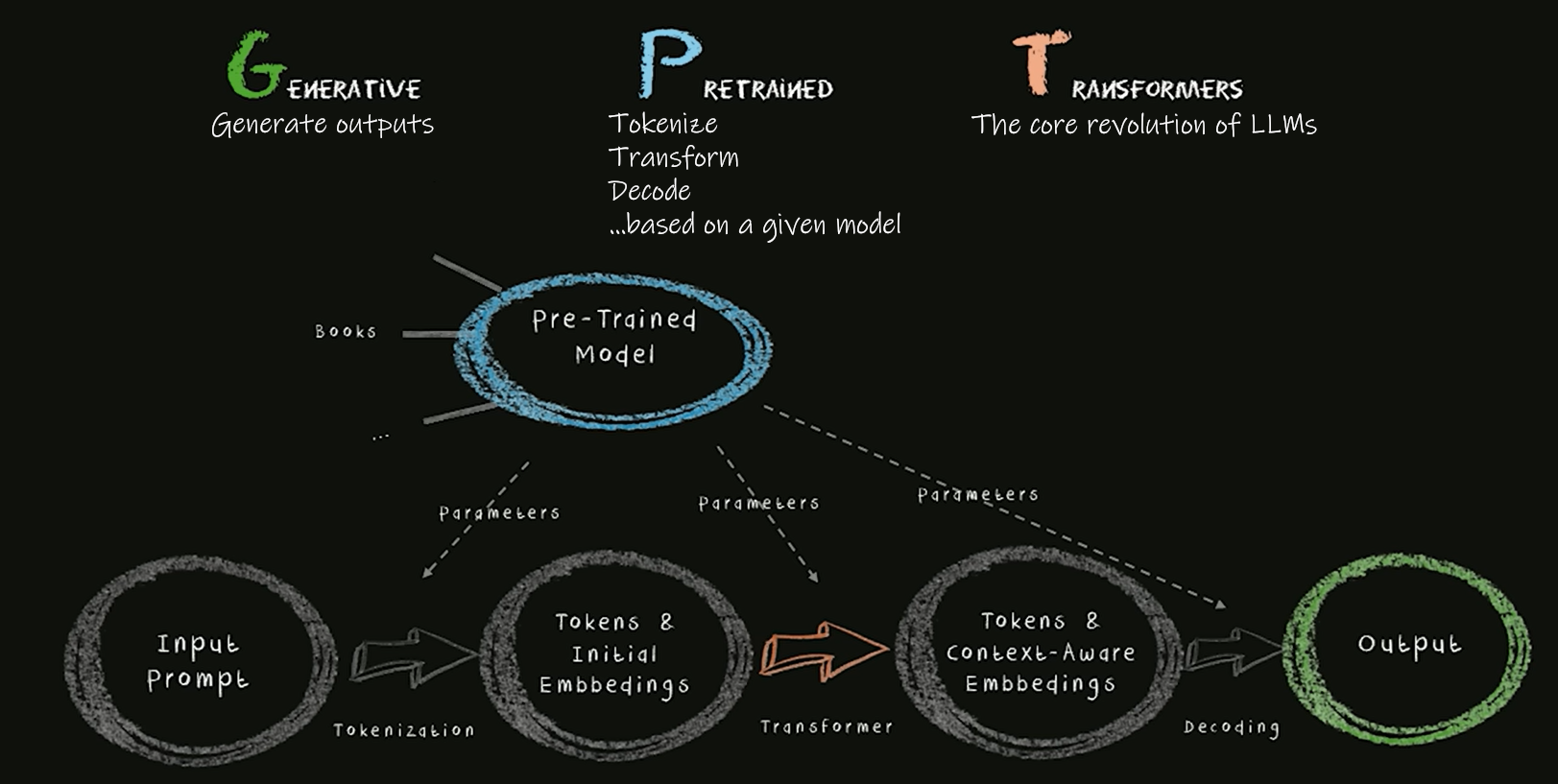

Transformer Explainer – Interactive Learning of Text-Generative Models

https://github.com/poloclub/transformer-explainer

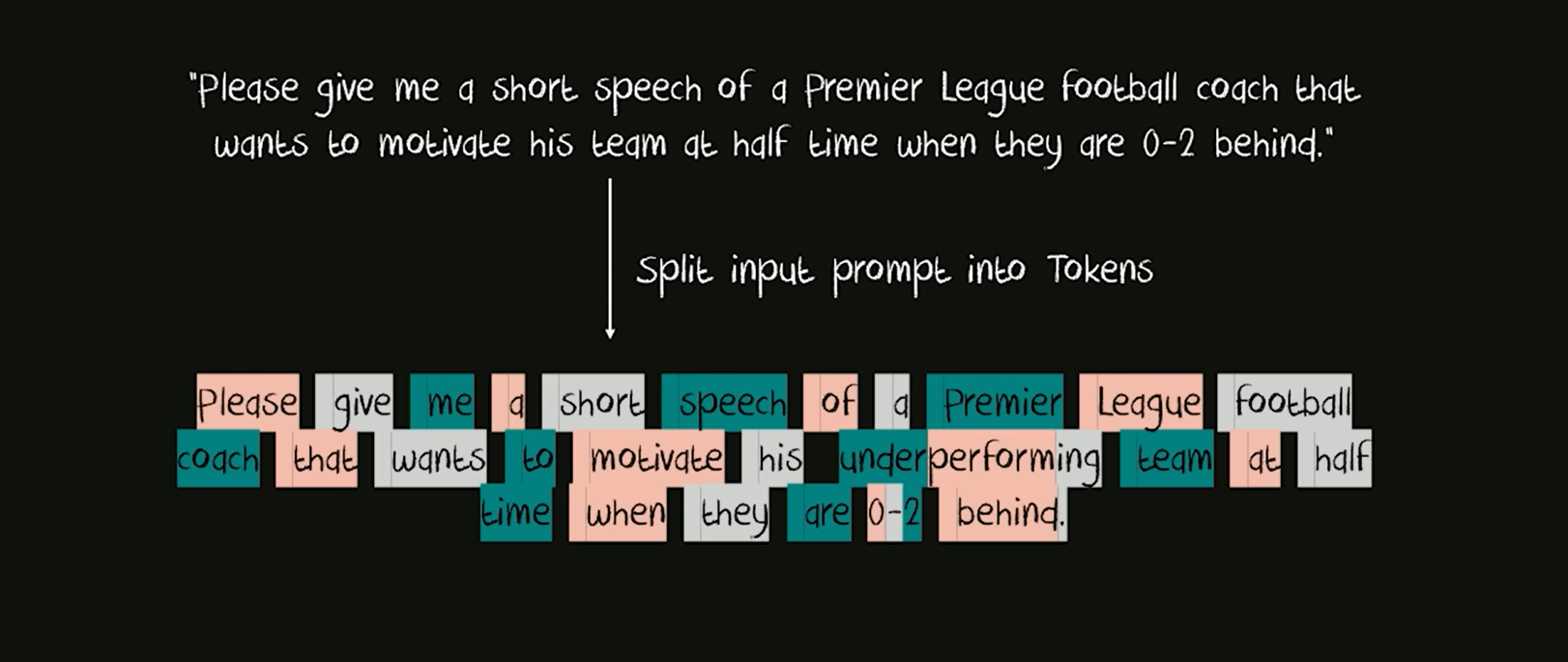

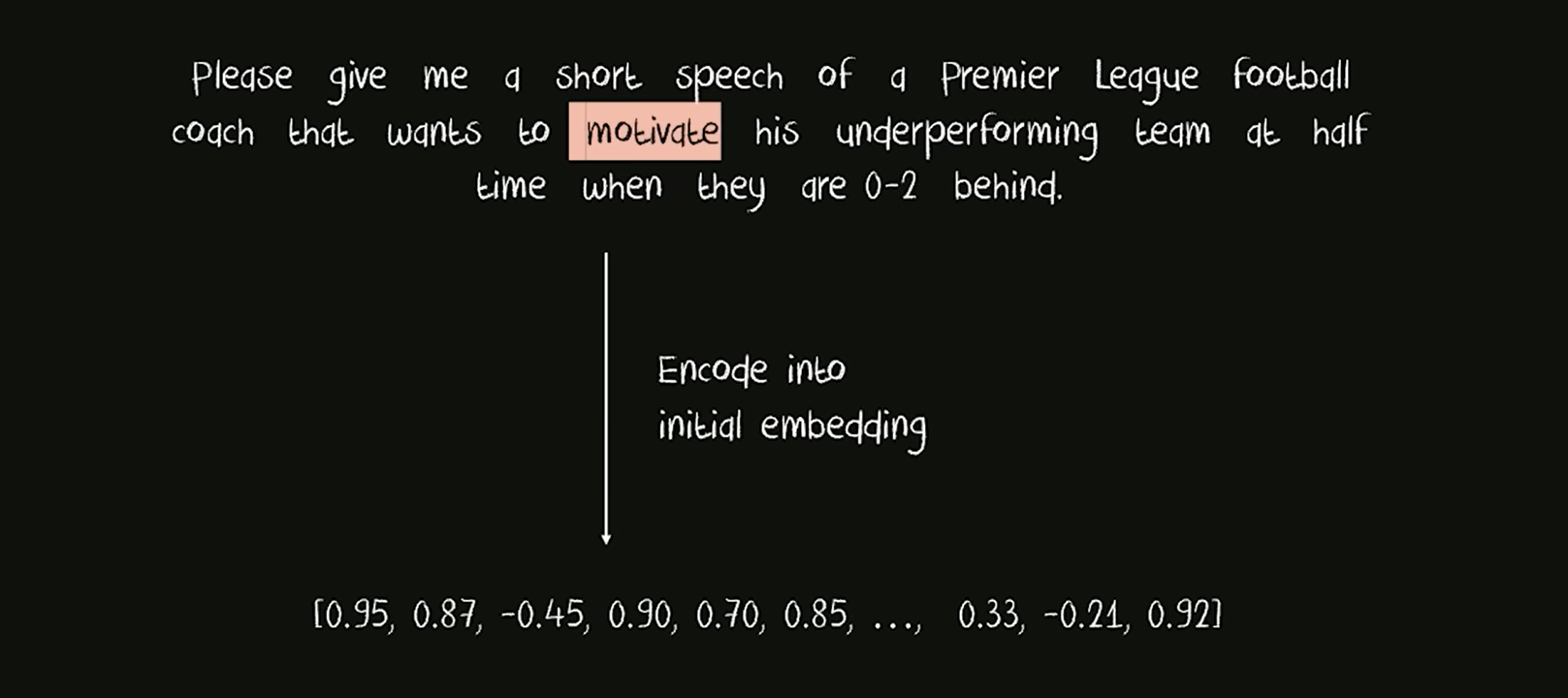

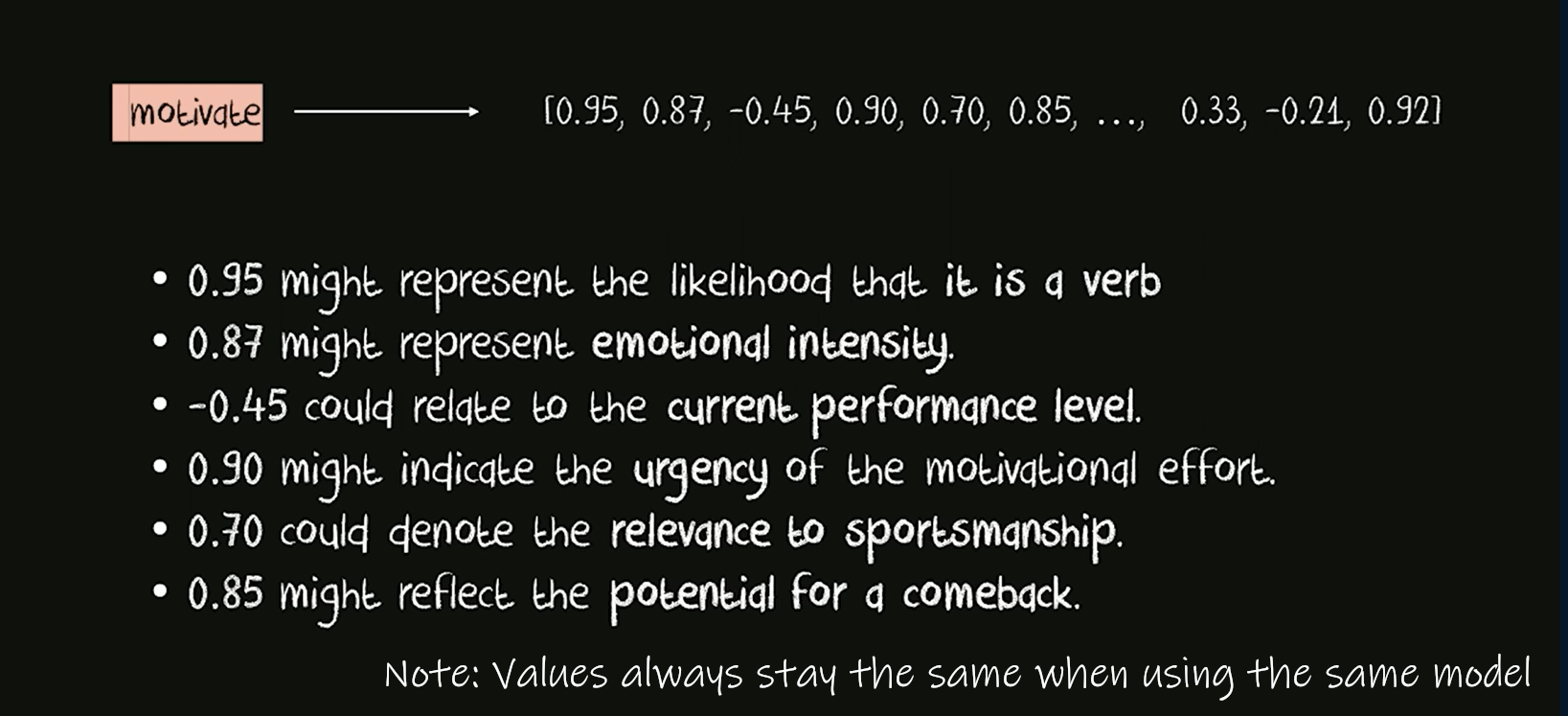

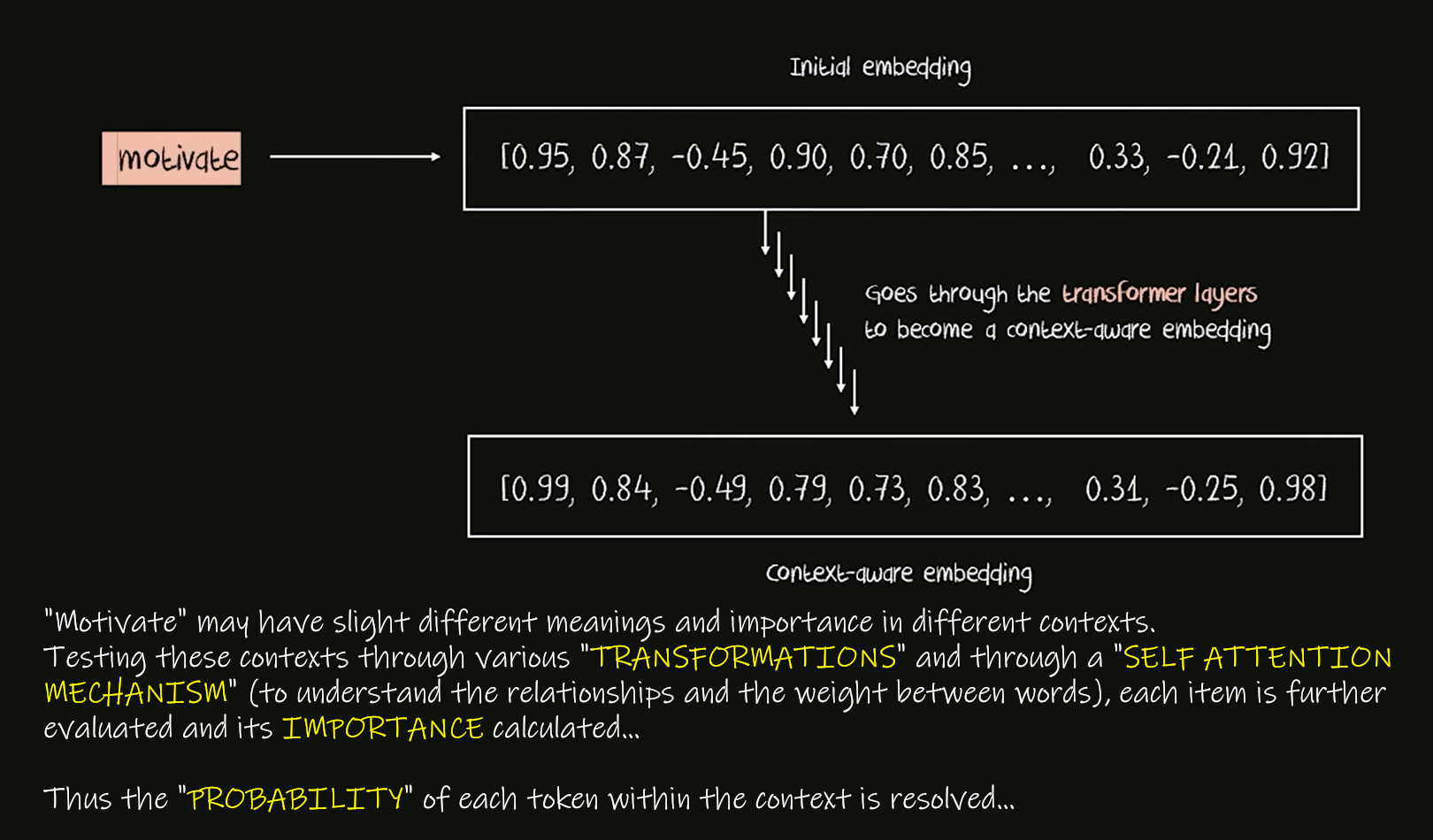

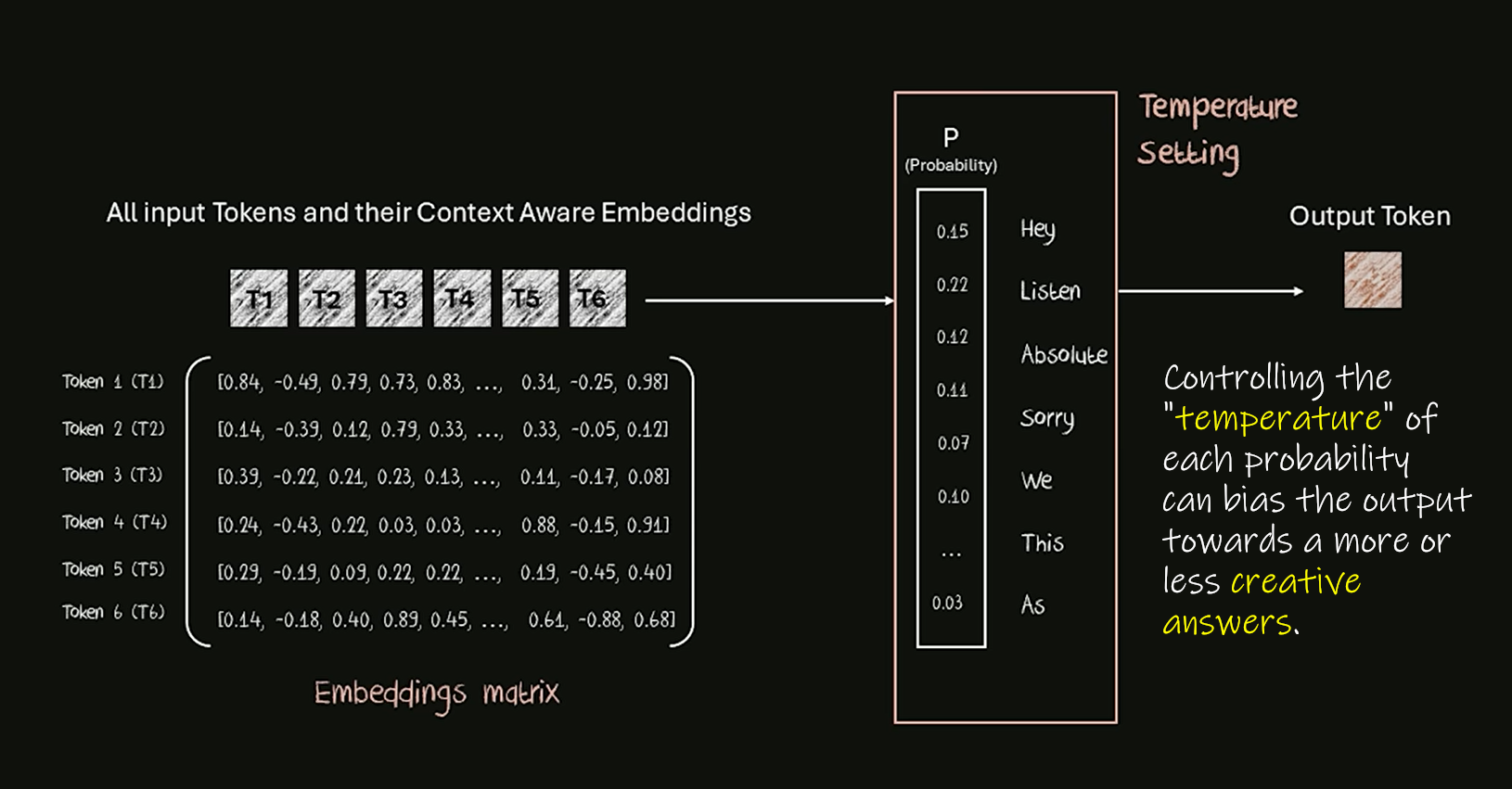

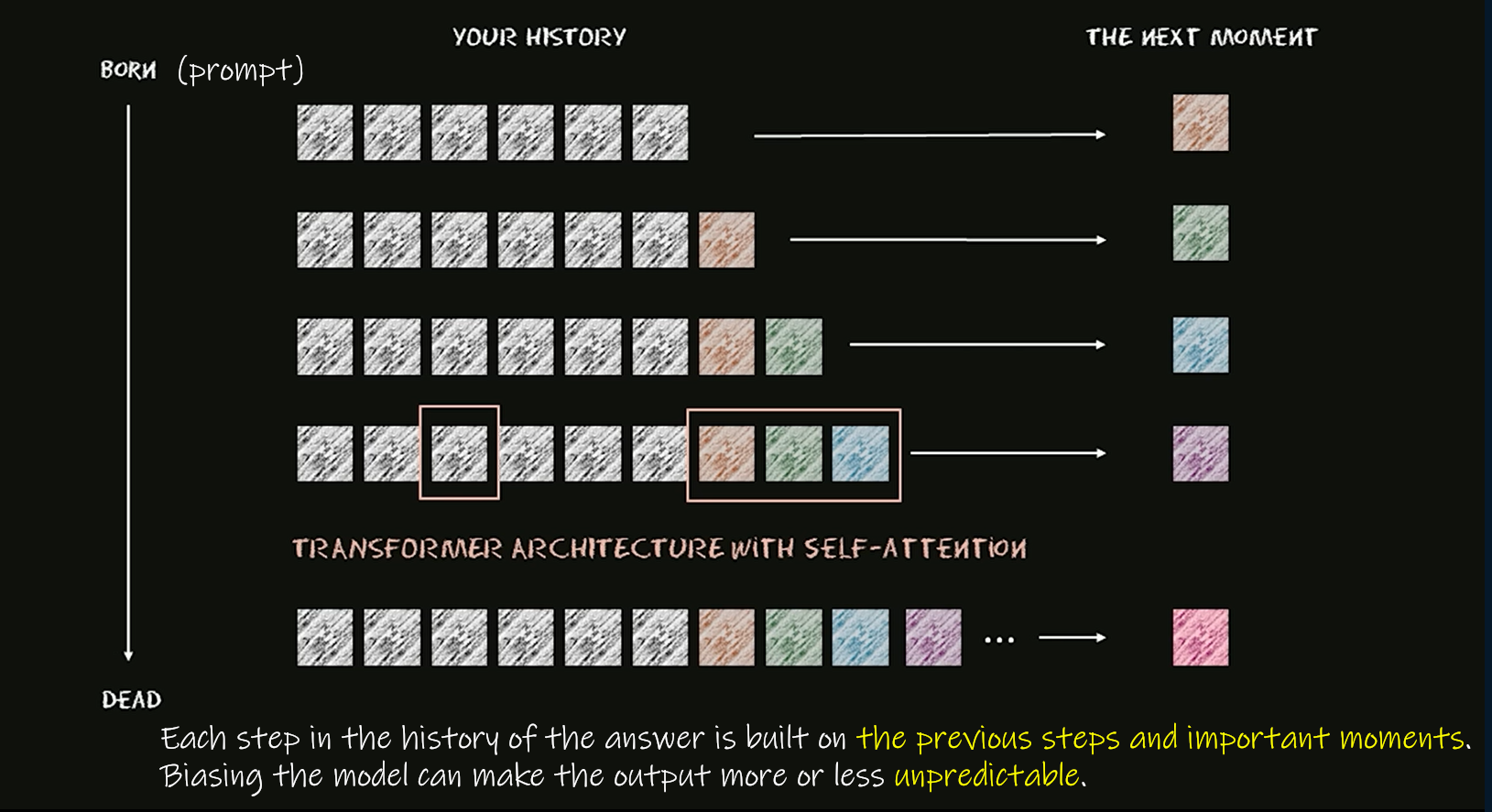

Transformer Explainer is an interactive visualization tool designed to help anyone learn how Transformer-based models like GPT work. It runs a live GPT-2 model right in your browser, allowing you to experiment with your own text and observe in real time how internal components and operations of the Transformer work together to predict the next tokens. Try Transformer Explainer at http://poloclub.github.io/transformer-explainer
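The last step of that prediction, turning the model's raw scores into next-token probabilities, can be sketched in a few lines. This is a minimal illustration rather than the tool's own code: the token scores are made up, and the `temperature` parameter is an assumption added here to show how the distribution can be sharpened or flattened.

```python
import math

def next_token_probs(logits, temperature=1.0):
    """Turn raw scores (logits) into a probability distribution over tokens."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits.values()]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return {tok: e / total for tok, e in zip(logits, exps)}

# Hypothetical logits for the token that follows "The cat sat on the"
toy_logits = {" mat": 4.0, " sofa": 3.0, " moon": 0.5}

probs = next_token_probs(toy_logits, temperature=0.8)
best = max(probs, key=probs.get)  # greedy choice: the most likely token
print(best, round(probs[best], 3))
```

A lower temperature concentrates probability on the top token, while a higher one spreads it across the alternatives.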

-

Henry Daubrez – How to generate VR/360 videos directly with Google Veo

https://www.linkedin.com/posts/upskydown_vr-googleveo-veo3-activity-7334269406396461059-d8Da

If you prompt for a 360° video in Veo (literally write “360°”), it can generate a monoscopic 360 video. The next step is to inject the right metadata into the file so it plays as an actual 360 video.
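The post does not say which metadata format or tool it uses. One common choice is Google's Spherical Video V1 metadata, and the sketch below builds that XML payload for a monoscopic equirectangular clip. The function name and the `stitching_software` value are hypothetical, and the sketch only produces the XML: embedding it in the MP4 container still requires an injector tool such as Google's Spatial Media Metadata Injector.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape

RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
GSPHERICAL_NS = "http://ns.google.com/videos/1.0/spherical/"

def spherical_v1_xml(stitching_software="unknown"):
    """Build a Spherical Video V1 payload for a monoscopic 360 video.

    Assumption: the clip is a full equirectangular frame, so no StereoMode
    or crop fields are needed.
    """
    return (
        '<?xml version="1.0"?>'
        f'<rdf:SphericalVideo xmlns:rdf="{RDF_NS}" '
        f'xmlns:GSpherical="{GSPHERICAL_NS}">'
        "<GSpherical:Spherical>true</GSpherical:Spherical>"
        "<GSpherical:Stitched>true</GSpherical:Stitched>"
        f"<GSpherical:StitchingSoftware>{escape(stitching_software)}"
        "</GSpherical:StitchingSoftware>"
        "<GSpherical:ProjectionType>equirectangular</GSpherical:ProjectionType>"
        "</rdf:SphericalVideo>"
    )

payload = spherical_v1_xml("hypothetical-veo-export")
root = ET.fromstring(payload)  # parse it back to confirm it is well-formed
projection = root.find(f"{{{GSPHERICAL_NS}}}ProjectionType").text
print(projection)
```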

Once the file is saved with the right metadata, it is recognized as an actual 360/VR video, so you can play it in VLC and drag your mouse to look around.

-

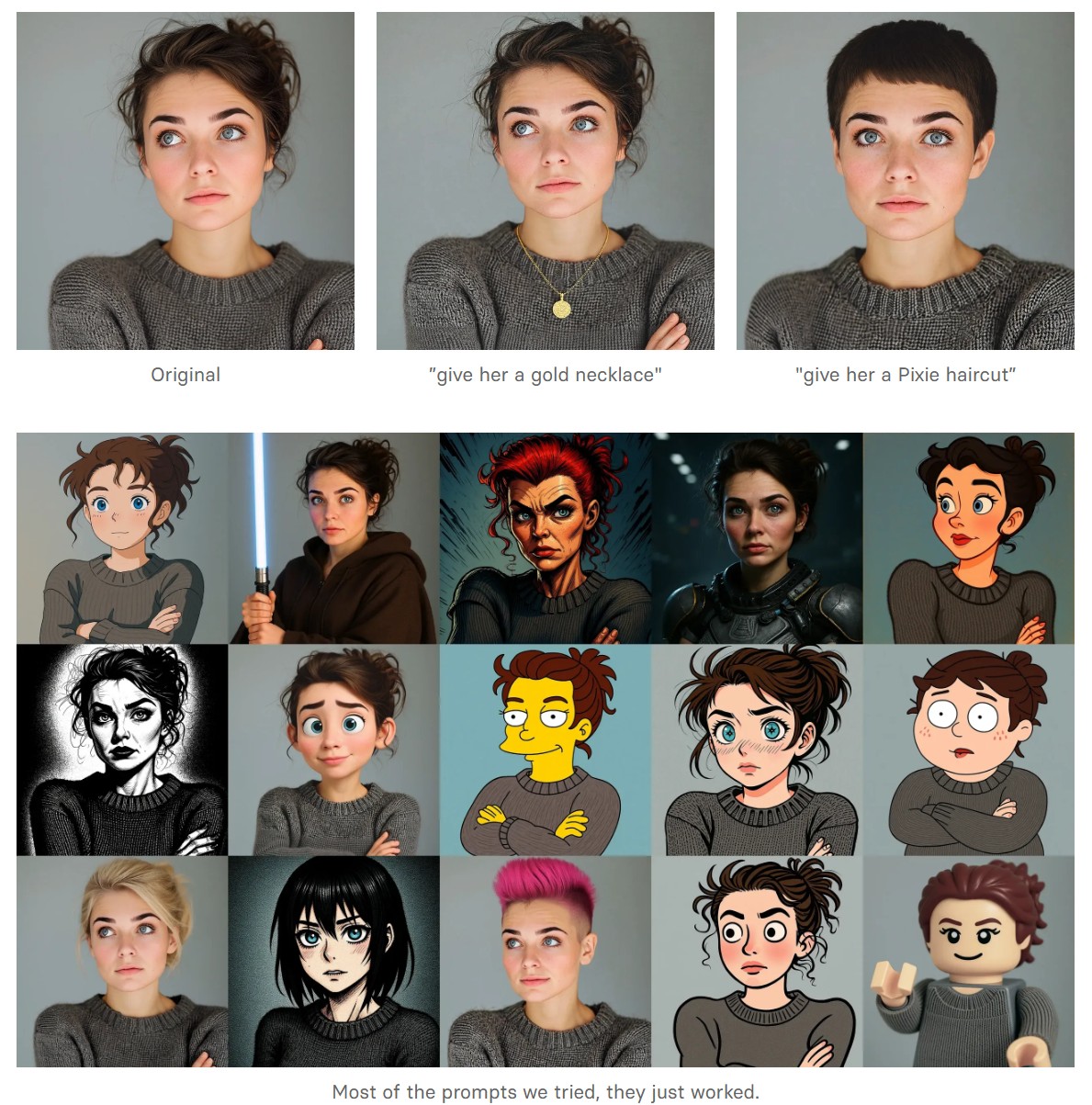

Black Forest Labs released FLUX.1 Kontext

https://replicate.com/blog/flux-kontext

https://replicate.com/black-forest-labs/flux-kontext-pro

There are three models. Two are available now, and a third, open-weight version is coming soon:

- FLUX.1 Kontext [pro]: State-of-the-art performance for image editing. High-quality outputs, great prompt following, and consistent results.

- FLUX.1 Kontext [max]: A premium model that brings maximum performance, improved prompt adherence, and high-quality typography generation without compromise on speed.

- Coming soon: FLUX.1 Kontext [dev]: An open-weight, guidance-distilled version of Kontext.
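Calling the hosted [pro] model from Python might look roughly like the sketch below. It uses the `replicate` client's `replicate.run` call and the model slug from the link above. The input field names (`prompt`, `input_image`) and the example URL are assumptions, so check the model page for the actual schema before relying on them.

```python
def build_kontext_input(prompt, image_url):
    """Assemble the request payload (field names are assumed, not confirmed)."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    return {"prompt": prompt, "input_image": image_url}

def edit_image(prompt, image_url, model="black-forest-labs/flux-kontext-pro"):
    """Send an edit request to Replicate.

    Needs `pip install replicate` and a REPLICATE_API_TOKEN in the
    environment, so the import is kept local to this function.
    """
    import replicate
    return replicate.run(model, input=build_kontext_input(prompt, image_url))

# Hypothetical request; no network call is made until edit_image() is invoked.
payload = build_kontext_input(
    "Turn this photo into a watercolor painting",
    "https://example.com/portrait.png",
)
print(payload)
```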

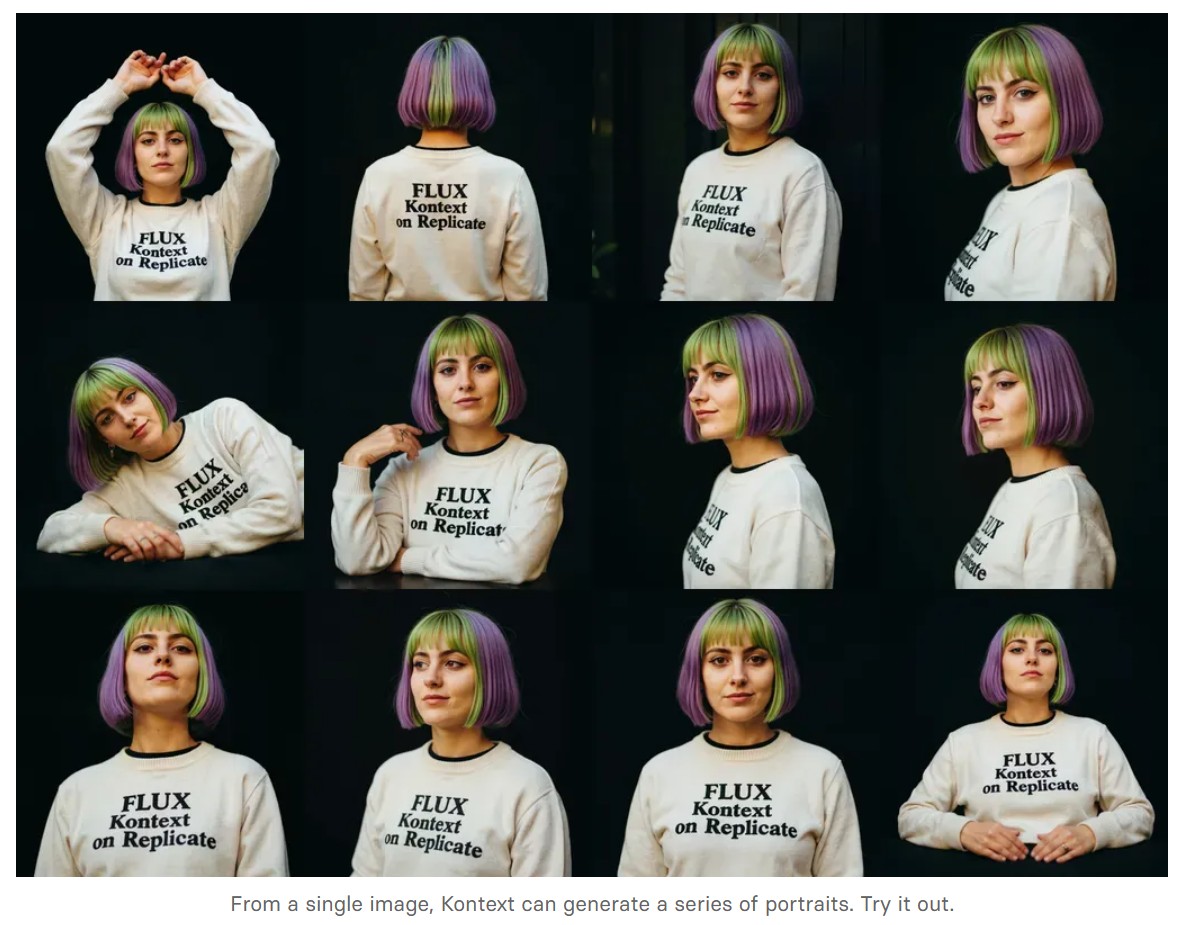

We’re so excited about what Kontext can do that we’ve created a collection of models on Replicate to give you ideas:

- Multi-image kontext: Combine two images into one.

- Portrait series: Generate a series of portraits from a single image.

- Change haircut: Change a person’s hairstyle and color.

- Iconic locations: Put yourself in front of famous landmarks.

- Professional headshot: Generate a professional headshot from any image.

-

AI Models – A walkthrough by Andreas Horn

The 8 most important model types and what they’re actually built to do: ⬇️

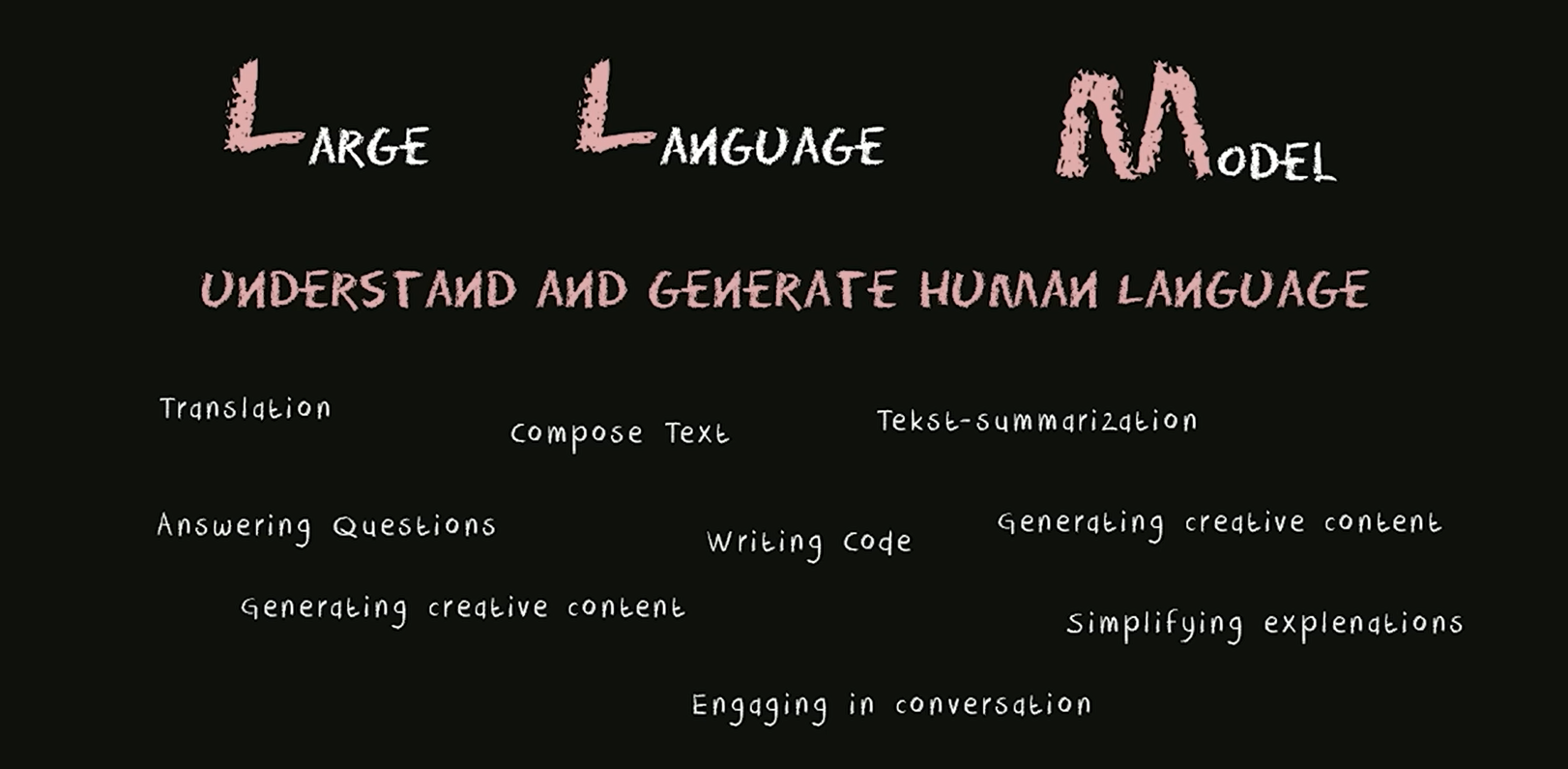

1. LLM – Large Language Model

→ Your ChatGPT-style model.

Handles text, predicts the next token, and powers most of today's GenAI hype.

🛠 Use case: content, code, convos.

2. LCM – Latent Consistency Model

→ Lightweight, diffusion-style models.

Distilled to generate in a few sampling steps, so they are fast and efficient: well suited to real-time or edge deployment.

🛠 Use case: image generation, optimized inference.

3. LAM – Language Action Model

→ Where LLM meets planning.

Adds memory, task breakdown, and intent recognition.

🛠 Use case: AI agents, tool use, step-by-step execution.

4. MoE – Mixture of Experts

→ One model, many minds.

A router sends each input to the right “expert” slice of the model, so only part of the network runs per input: dynamic, scalable, efficient.

🛠 Use case: high-performance model serving at low compute cost.

5. VLM – Vision Language Model

→ Multimodal beast.

Combines image + text understanding via shared embeddings.

🛠 Examples: Gemini, GPT-4o. Use case: search, robotics, assistive tech.

6. SLM – Small Language Model

→ Tiny but mighty.

Designed for edge use, fast inference, low latency, efficient memory.

🛠 Use case: on-device AI, chatbots, privacy-first GenAI.

7. MLM – Masked Language Model

→ The OG foundation model.

Predicts masked tokens using bidirectional context.

🛠 Use case: search, classification, embeddings, pretraining.

8. SAM – Segment Anything Model

→ Vision model for pixel-level understanding.

Highlights, segments, and understands *everything* in an image.

🛠 Use case: medical imaging, AR, robotics, visual agents.
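The routing idea behind Mixture of Experts (item 4 above) can be illustrated with a toy top-k gate. This is a sketch under simplifying assumptions: the "experts" are plain functions on a single number and the gate scores are hard-coded, whereas a real MoE layer learns both and operates on vectors.

```python
import math

def softmax(scores):
    """Convert raw gate scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k=2):
    """Pick the k highest-scoring experts and renormalise their weights."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}

def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    weights = route_top_k(gate_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())

# Hypothetical experts and gate scores, for illustration only
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x ** 2, lambda x: -x]
gate_scores = [0.1, 2.0, 1.5, -1.0]

weights = route_top_k(gate_scores, k=2)
y = moe_forward(3.0, experts, gate_scores, k=2)
print(sorted(weights), y)
```

Only two of the four experts are evaluated for this input, which is where the compute saving comes from.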

-

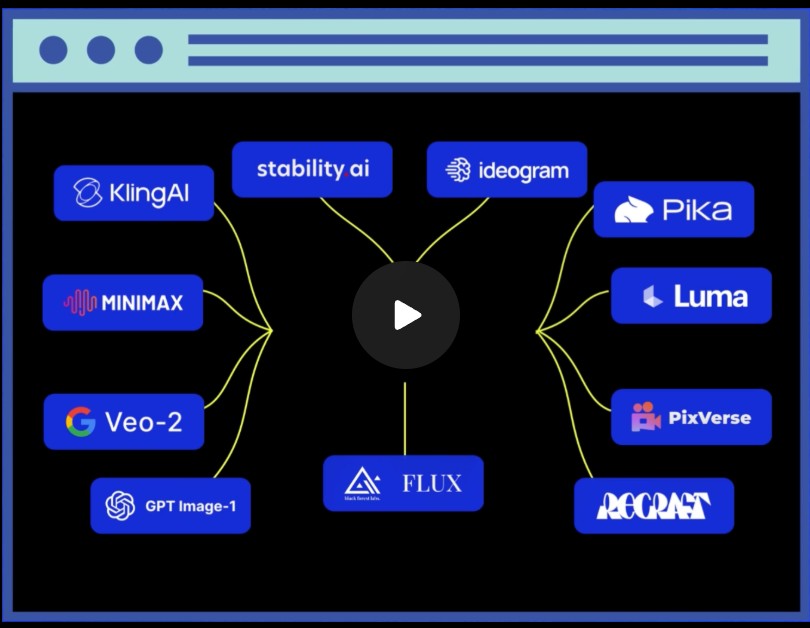

Introducing ComfyUI Native API Nodes

https://blog.comfy.org/p/comfyui-native-api-nodes

Models Supported

- Black Forest Labs Flux 1.1 [pro] Ultra, Flux.1 [pro]

- Kling 2.0, 1.6, 1.5 & Various Effects

- Luma Photon, Ray2, Ray1.6

- MiniMax Text-to-Video, Image-to-Video

- PixVerse V4 & Effects

- Recraft V3, V2 & Various Tools

- Stability AI Stable Image Ultra, Stable Diffusion 3.5 Large

- Google Veo 2

- Ideogram V3, V2, V1

- OpenAI GPT-4o image

- Pika 2.2

FEATURED POSTS

-

ComfyDock – The Easiest (Free) Way to Safely Run ComfyUI Sessions in a Boxed Container

https://www.reddit.com/r/comfyui/comments/1j2x4qv/comfydock_the_easiest_free_way_to_run_comfyui_in/

ComfyDock is a tool that allows you to easily manage your ComfyUI environments via Docker.

Common Challenges with ComfyUI

- Custom Node Installation Issues: Installing new custom nodes can inadvertently change settings across the whole installation, potentially breaking the environment.

- Workflow Compatibility: Workflows are often tested with specific custom nodes and ComfyUI versions. Running these workflows on different setups can lead to errors and frustration.

- Security Risks: Installing custom nodes directly on your host machine increases the risk of malicious code execution.

How ComfyDock Helps

- Environment Duplication: Easily duplicate your current environment before installing custom nodes. If something breaks, revert to the original environment effortlessly.

- Deployment and Sharing: Workflow developers can commit their environments to a Docker image, which can be shared with others and run on cloud GPUs to ensure compatibility.

- Enhanced Security: Containers help to isolate the environment, reducing the risk of malicious code impacting your host machine.
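The duplicate-before-you-install idea can be approximated with plain Docker commands, sketched below. This is not ComfyDock's own interface, which the post does not detail. The container and image names are hypothetical, and the port mapping assumes ComfyUI's default port 8188.

```python
import subprocess

def snapshot_command(container, image_tag):
    """Command that saves a container's current state as a new image."""
    return ["docker", "commit", container, image_tag]

def run_command(image_tag, name, host_port=8188):
    """Command that starts a fresh container from a saved image."""
    return [
        "docker", "run", "-d",
        "--gpus", "all",             # expose host GPUs to the container
        "--name", name,
        "-p", f"{host_port}:8188",   # assumes ComfyUI listens on 8188
        image_tag,
    ]

def execute(command, dry_run=True):
    """Print the command, and run it only when dry_run is False."""
    print(" ".join(command))
    if not dry_run:
        subprocess.run(command, check=True)

# Hypothetical names: snapshot before installing custom nodes, then restore.
backup = snapshot_command("comfy-main", "comfy-main:before-nodes")
restore = run_command("comfy-main:before-nodes", "comfy-restored")
execute(backup)
execute(restore)
```

If a custom node breaks the working container, starting a new one from the saved image brings back the earlier state.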