LATEST POSTS

-

K-Lens One – A Light Field Lens that captures RGB + Depth

www.newsshooter.com/2021/10/31/klens-one-a-light-field-lens-that-captures-rgb-depth/

A mirror system (Image Multiplier) in the K|Lens splits the light rays into 9 separate images that are mapped on the camera sensor. All 9 of these images have slightly different perspectives. The best way to picture it is if you imagine using 9 separate cameras in a narrow array at the same time.
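To make the "9 cameras in a narrow array" picture concrete, here is a minimal sketch of cutting one sensor frame into nine sub-images. It assumes the views sit in an evenly spaced 3x3 grid with no borders or distortion. That layout is an assumption for illustration, and real footage would need calibrated crop regions. The function name `split_into_views` is hypothetical and not part of any K|Lens software.

```python
def split_into_views(frame, rows=3, cols=3):
    """Cut a 2D frame (a list of pixel rows) into rows*cols equal tiles.

    Assumption: the sub-images form a regular grid. Leftover pixels at the
    right/bottom edges are dropped when the size is not evenly divisible.
    """
    height, width = len(frame), len(frame[0])
    tile_h, tile_w = height // rows, width // cols
    views = []
    for r in range(rows):
        for c in range(cols):
            # Slice the rows of this tile, then the columns inside each row.
            tile = [row[c * tile_w:(c + 1) * tile_w]
                    for row in frame[r * tile_h:(r + 1) * tile_h]]
            views.append(tile)
    return views


# Toy 6x9 "sensor" where each pixel stores its own (y, x) coordinate.
frame = [[(y, x) for x in range(9)] for y in range(6)]
views = split_into_views(frame)
print(len(views))      # number of sub-images
print(views[4][0][0])  # top-left pixel of the centre view
```

Each tile would then be treated as one viewpoint, and the small offsets between the tiles are what a depth estimate would be computed from.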

-

Floating point precision and errors in 3D production

https://blog.demofox.org/2017/11/21/floating-point-precision

https://www.h-schmidt.net/FloatConverter/IEEE754.html

The challenge with precision limits in production is tightly connected to the context of the assets, camera and procedural requirements the render scene needs to support.

For example, a far-away camera chasing a plane may not reveal issues that the same scene would show in a far-away camera’s close-up of a displaced water drop on the plane’s windshield.

This is a rough example, but it helps put things in perspective. It is always best to test for specific patterns or targets within a given setup.
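One way to test a given setup is to check how coarse single-precision values become at the distances the scene uses. The sketch below assumes positions are stored as IEEE 754 32-bit floats, and it prints the gap between a coordinate and the next representable value. The helper name `float32_step` and the sample distances are illustrative choices, not taken from any renderer.

```python
import struct


def float32_step(x: float) -> float:
    """Gap between x (rounded to float32) and the next larger float32.

    Valid for positive, finite values only.
    """
    # Reinterpret the float32 bit pattern as an unsigned 32-bit integer.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    current = struct.unpack("<f", struct.pack("<I", bits))[0]
    # For positive finite floats, bit pattern + 1 is the next value up.
    following = struct.unpack("<f", struct.pack("<I", bits + 1))[0]
    return following - current


for distance in (1.0, 1_000.0, 100_000.0, 10_000_000.0):
    step = float32_step(distance)
    print(f"{distance:>14,.0f} units from origin: smallest step {step:.3g}")
```

At 100,000 units from the origin the smallest step is 2^-7, about 0.0078 units. That is invisible on a wide shot of the plane but coarse relative to a water drop filling the frame, which is why the same scene can pass one test and fail the other.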

FEATURED POSTS

-

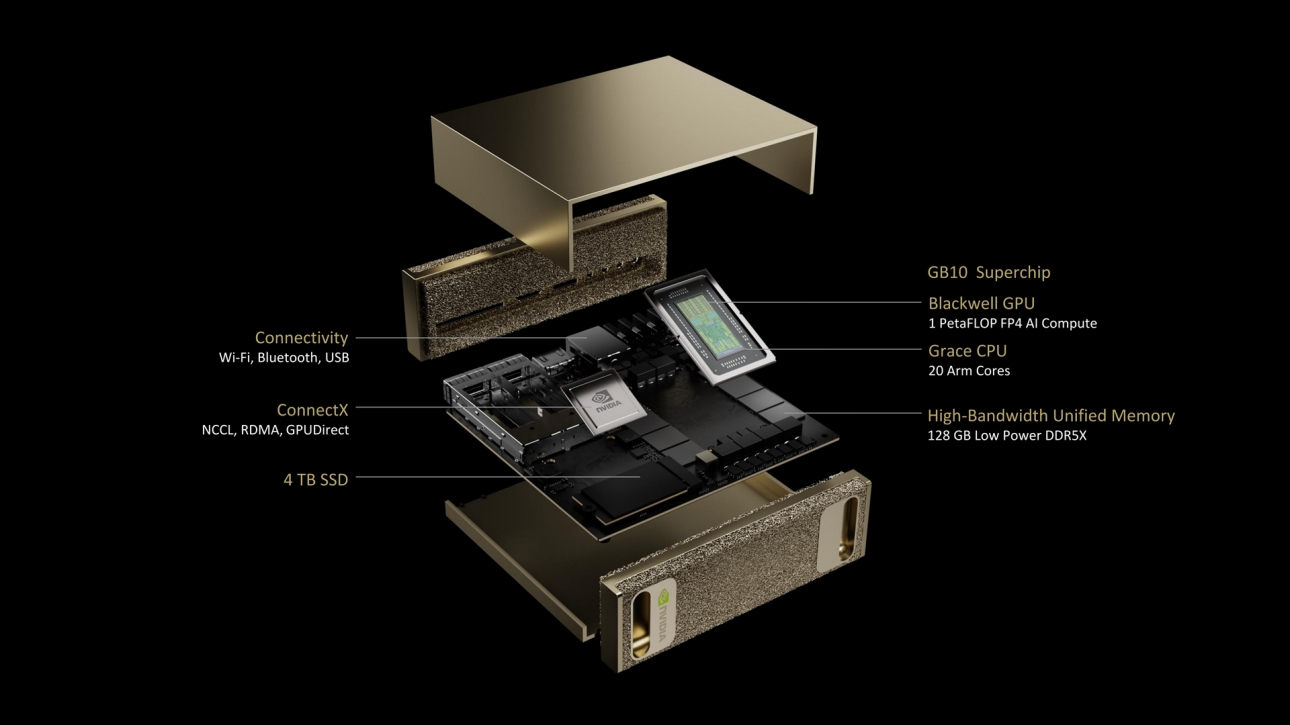

Nvidia unveils $3,000 desktop AI computer for home LLM researchers

https://arstechnica.com/ai/2025/01/nvidias-first-desktop-pc-can-run-local-ai-models-for-3000

https://www.nvidia.com/en-us/project-digits

Some smaller open-weights AI language models (such as Llama 3.1 70B, with 70 billion parameters) and various AI image-synthesis models like Flux.1 dev (12 billion parameters) could probably run comfortably on Project DIGITS, but larger open models like Llama 3.1 405B, with 405 billion parameters, may not. Given the recent explosion of smaller AI models, a creative developer could likely run quite a few interesting models on the unit.

DIGITS’ 128GB of unified RAM is notable because a high-power consumer GPU like the RTX 4090 has only 24GB of VRAM. Memory serves as a hard limit on AI model parameter size, and more memory makes room for running larger local AI models.
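The memory limit can be sanity-checked with rough arithmetic: weight memory is approximately parameter count times bytes per parameter. The sketch below is a back-of-the-envelope estimate only. The precision levels (16-bit, 8-bit, 4-bit) are assumptions for illustration, 1 GB is taken as 10^9 bytes, and the estimate ignores activations, context cache, and system overhead, so real requirements will be higher.

```python
def weight_memory_gb(params_billions: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * bytes_per_param


def fits(params_billions: float, bytes_per_param: float, budget_gb: float) -> bool:
    """True if the weights alone fit in the given memory budget."""
    return weight_memory_gb(params_billions, bytes_per_param) <= budget_gb


# Parameter counts and memory sizes as quoted in the excerpt above.
models = {"Flux.1 dev": 12, "Llama 3.1 70B": 70, "Llama 3.1 405B": 405}
budgets = {"Project DIGITS": 128, "RTX 4090": 24}
# Assumed storage precisions (bytes per parameter).
precisions = {"16-bit": 2.0, "8-bit": 1.0, "4-bit": 0.5}

for name, size in models.items():
    for label, nbytes in precisions.items():
        need = weight_memory_gb(size, nbytes)
        verdict = ", ".join(
            f"{device}: {'fits' if fits(size, nbytes, cap) else 'too large'}"
            for device, cap in budgets.items()
        )
        print(f"{name:15s} {label:6s} ~{need:6.1f} GB -> {verdict}")
```

Under these assumptions a 70-billion-parameter model needs about 140 GB at 16-bit but about 70 GB at 8-bit, while a 405-billion-parameter model needs roughly 200 GB even at 4-bit, which exceeds 128GB.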

-

Open Source Nvidia Omniverse

blogs.nvidia.com/blog/2019/03/18/omniverse-collaboration-platform/

developer.nvidia.com/nvidia-omniverse

An open, interactive 3D design collaboration platform for multi-tool workflows, intended to simplify studio workflows for real-time graphics.

It supports Pixar’s Universal Scene Description technology for exchanging information about modeling, shading, animation, lighting, visual effects and rendering across multiple applications.

It also supports NVIDIA’s Material Definition Language, which allows artists to exchange information about surface materials across multiple tools.

With Omniverse, artists can see live updates made by other artists working in different applications. They can also see changes reflected in multiple tools at the same time.

For example, an artist using Maya with a portal to Omniverse can collaborate with another artist using UE4, and both will see live updates of each other’s changes in their own application.