LATEST POSTS

-

Sony tests AI-powered PlayStation characters

https://www.independent.co.uk/tech/ai-playstation-characters-sony-ps5-chatgpt-b2712813.html

A demo video, first reported by The Verge, showed an AI version of the character Aloy from the PlayStation game Horizon Forbidden West conversing through voice prompts during gameplay on the PS5 console.

The character’s facial expressions are also powered by Sony’s advanced AI software Mockingbird, while the speech artificially replicates the voice of the actor Ashly Burch.

-

BEAR – BE-A-Rigger – Maya Rigging Tool

https://github.com/Grackable/bear_core

BEAR claims to be the most intuitive and easy-to-use rigging tool available, offering production-proven features that streamline the rigging workflow for maximum efficiency and consistency.

-

Jellyfish Pictures suspends operations

https://www.broadcastnow.co.uk/post-and-vfx/jellyfish-pictures-suspends-operations/5202847.article

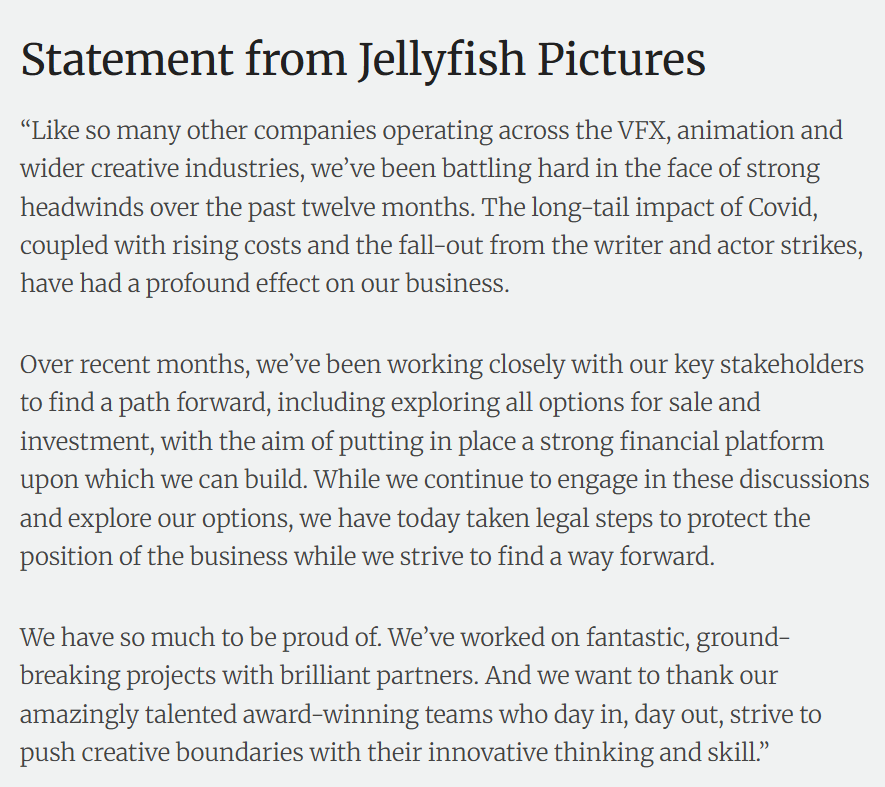

According to a report in the Indian news outlet Animation Xpress, Jellyfish Pictures is facing financial struggles and has temporarily suspended its global operations.

-

AI and the Law – Judge allows authors' AI copyright lawsuit against Meta to move forward

The lawsuit has already provided a few glimpses into how Meta approaches copyright, with court filings from the plaintiffs claiming that Mark Zuckerberg gave the Llama team permission to train the models using copyrighted works and that other Meta team members discussed the use of legally questionable content for AI training.

FEATURED POSTS

-

Microsoft DAViD – Data-efficient and Accurate Vision Models from Synthetic Data

Our human-centric dense prediction model delivers high-quality, detailed (depth) results while achieving remarkable efficiency, running orders of magnitude faster than competing methods, with inference times as low as 21 milliseconds per frame (for the large multi-task model on an NVIDIA A100). It reliably captures a wide range of human characteristics under diverse lighting conditions, preserving fine-grained details such as hair strands and subtle facial features. This demonstrates the model’s robustness and accuracy in complex, real-world scenarios.

https://microsoft.github.io/DAViD

The state of the art in human-centric computer vision achieves high accuracy and robustness across a diverse range of tasks. The most effective models in this domain have billions of parameters, thus requiring extremely large datasets, expensive training regimes, and compute-intensive inference. In this paper, we demonstrate that it is possible to train models on much smaller but high-fidelity synthetic datasets, with no loss in accuracy and higher efficiency. Using synthetic training data provides us with excellent levels of detail and perfect labels, while providing strong guarantees for data provenance, usage rights, and user consent. Procedural data synthesis also provides us with explicit control on data diversity, that we can use to address unfairness in the models we train. Extensive quantitative assessment on real input images demonstrates accuracy of our models on three dense prediction tasks: depth estimation, surface normal estimation, and soft foreground segmentation. Our models require only a fraction of the cost of training and inference when compared with foundational models of similar accuracy.
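To put the quoted 21 milliseconds per frame in more familiar terms, here is a minimal sketch that converts a per-frame latency into an approximate frame rate. The helper name `frames_per_second` is hypothetical, and the conversion assumes frames are processed one at a time with no batching or pipelining, which the excerpt does not specify.

```python
def frames_per_second(latency_ms: float) -> float:
    """Convert a per-frame latency in milliseconds to frames per second.

    Assumes strictly sequential, one-frame-at-a-time inference (an assumption,
    not something stated in the DAViD excerpt).
    """
    if latency_ms <= 0:
        raise ValueError("latency_ms must be positive")
    return 1000.0 / latency_ms


# 21 ms per frame is the figure quoted for the large multi-task model on an A100.
fps = frames_per_second(21.0)
print(f"{fps:.1f} frames per second")
```

Under that assumption, 21 ms per frame works out to roughly 47.6 frames per second.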

-

THOMAS MANSENCAL – The Apparent Simplicity of RGB Rendering

https://thomasmansencal.substack.com/p/the-apparent-simplicity-of-rgb-rendering

The primary goal of physically-based rendering (PBR) is to create a simulation that accurately reproduces the imaging process of electro-magnetic spectrum radiation incident to an observer. This simulation should be indistinguishable from reality for a similar observer.

Because a camera is not sensitive to incident light in the same way as a human observer, the images it captures are transformed to be colorimetric. A project might require infrared imaging simulation, a portion of the electro-magnetic spectrum that is invisible to us. Radically different observers might image the same scene but the act of observing does not change the intrinsic properties of the objects being imaged. Consequently, the physical modelling of the virtual scene should be independent of the observer.
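The separation between scene and observer can be illustrated with a toy spectral sketch. Everything here is an assumption made for illustration: the five wavelength samples, the radiance values, and both sensitivity curves are invented, and the weighted sum is only a coarse stand-in for integrating over wavelength. It is not taken from the article.

```python
# Toy spectral samples at five wavelengths (nm). All numbers are made up.
WAVELENGTHS_NM = [450, 550, 650, 750, 850]

# Spectral radiance leaving the scene: a property of the scene, not of any observer.
SCENE_RADIANCE = [0.2, 0.6, 0.4, 0.3, 0.1]

# Hypothetical per-wavelength sensitivities for two very different observers.
VISIBLE_OBSERVER = [0.3, 1.0, 0.4, 0.0, 0.0]   # responds only in the visible range
INFRARED_OBSERVER = [0.0, 0.0, 0.1, 0.8, 1.0]  # responds mostly in the near infrared


def observe(radiance, sensitivity):
    """Weighted sum of radiance by sensitivity (discrete stand-in for an integral)."""
    return sum(r * s for r, s in zip(radiance, sensitivity))


# The same scene data feeds both observers; only the sensitivities differ.
visible_response = observe(SCENE_RADIANCE, VISIBLE_OBSERVER)
infrared_response = observe(SCENE_RADIANCE, INFRARED_OBSERVER)
print(visible_response, infrared_response)
```

The two observers produce different responses from identical scene data, which is the sense in which the scene description can stay observer-independent while the observer model is swapped out.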

-

Christopher Butler – Understanding the Eye-Mind Connection – Vision is a mental process

https://www.chrbutler.com/understanding-the-eye-mind-connection

The intricate relationship between the eyes and the brain, often termed the eye-mind connection, reveals that vision is predominantly a cognitive process. This understanding has profound implications for fields such as design, where capturing and maintaining attention is paramount. This essay delves into the nuances of visual perception, the brain’s role in interpreting visual data, and how this knowledge can be applied to effective design strategies.

This cognitive aspect of vision is evident in phenomena such as optical illusions, where the brain interprets visual information in a way that contradicts physical reality. These illusions underscore that what we “see” is not merely a direct recording of the external world but a constructed experience shaped by cognitive processes.

Understanding the cognitive nature of vision is crucial for effective design. Designers must consider how the brain processes visual information to create compelling and engaging visuals. This involves several key principles:

- Attention and Engagement

- Visual Hierarchy

- Cognitive Load Management

- Context and Meaning