LATEST POSTS

-

Tencent Hunyuan AI Video – A Systematic Framework For Large Video Generation Model

https://aivideo.hunyuan.tencent.com

https://github.com/Tencent/HunyuanVideo

Unlike models such as Sora, Pika2, and Veo2, HunyuanVideo’s neural network weights are uncensored and openly distributed. The model can therefore be run locally under the right circumstances (for example, on a consumer GPU with 24 GB of VRAM), and it can be fine-tuned or used with LoRAs to teach it new concepts.

-

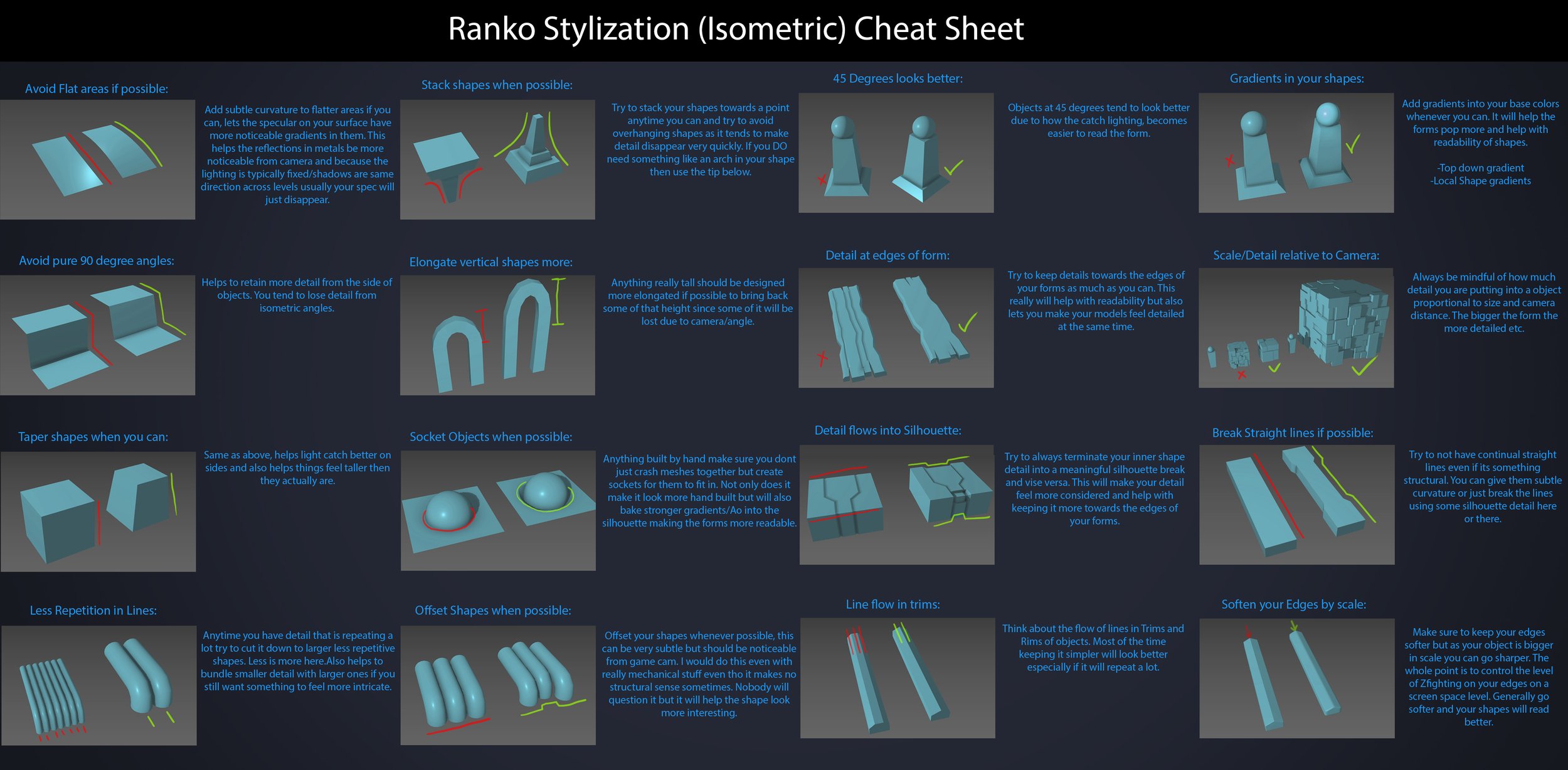

Ranko Prozo – Modelling design tips

For every project I work on, I create a stylization cheat sheet. Every project is unique, but some principles carry over no matter what. This is a sheet I use a lot when I work on isometric stylized projects to help keep my assets consistent and interesting. None of these concepts are my own, just lots of tips I have learned over the years. I have also added this to a page on my website and will continue to update it with more tips and tricks; I just need time to compile it all :)

-

Wyz Borrero – AI-generated “casting”

Guillermo del Toro and Ben Affleck, among others, have voiced concerns about the capabilities of generative AI in the creative industries. They believe that while AI can produce text, images, sound, and video that are technically proficient, it lacks the authentic emotional depth and creative intuition inherent in human artistry—qualities that define works like those of Shakespeare, Dalí, or Hitchcock.

Generative AI models are trained on vast datasets and excel at recognizing and replicating patterns. They can generate coherent narratives, mimic writing or artistic styles, and even compose poetry and music. However, they do not possess consciousness or genuine emotions. The “emotion” conveyed in AI-generated content is a reflection of learned patterns rather than true emotional experience.

Having extensively tested and used generative AI over the past four years, I observe that the rapid advancement of the field suggests many current limitations could be overcome in the future. As models become more sophisticated and training data expands, AI systems are increasingly capable of generating content that is coherent, contextually relevant, stylistically diverse, and can even evoke emotional responses.

The following video is an AI-generated “casting” using a text-to-video model specifically prompted to test emotion, expressions, and microexpressions. This is only the beginning.

FEATURED POSTS

-

Zibra.AI – Real-Time Volumetric Effects in Virtual Production. Now free for Indies!

A New Era for Volumetrics

For a long time, volumetric visual effects were viable only in high-end offline VFX workflows. Large data footprints and poor real-time rendering performance limited their use: most teams simply avoided volumetrics altogether. It’s similar to the early days of online video: limited computational power and low network bandwidth made video content hard to share or stream. Today, of course, we can’t imagine the internet without it, and we believe volumetrics are on a similar path.

With advanced data compression and real-time, GPU-driven decompression, anyone can now bring CGI-class visual effects into Unreal Engine.

From now on, it’s completely free for individual creators!

What does it mean for you?

(more…)

-

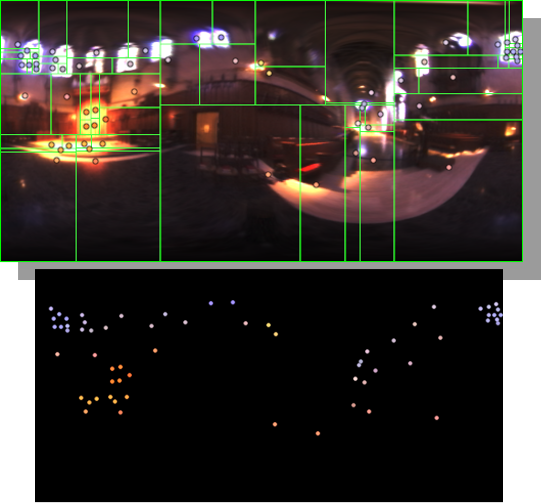

HDRI Median Cut plugin

www.hdrlabs.com/picturenaut/plugins.html

Note: The Median Cut algorithm is typically used for color quantization, which reduces the number of colors in an image while preserving its visual quality. It doesn’t directly provide a way to identify the brightest areas in an image. If you’re interested in identifying the brightest areas, you might want to look into other methods such as thresholding, histogram analysis, or edge detection, for example through OpenCV.
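As a rough illustration of the thresholding idea mentioned above, here is a minimal sketch in plain Python with no external libraries. It is not the plugin's method and not OpenCV code. The function names (`luminance`, `brightest_mask`, `brightest_pixel`), the nested-list image layout, and the use of Rec. 709 luminance weights on linear RGB values are all assumptions made for this example.

```python
def luminance(pixel):
    """Relative luminance of a linear RGB pixel (Rec. 709 weights, an assumption)."""
    r, g, b = pixel
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def brightest_mask(image, fraction=0.05):
    """Threshold the image so that roughly the brightest `fraction` of pixels are marked.

    `image` is a list of rows, each row a list of (r, g, b) float tuples.
    Returns (mask, threshold), where mask[y][x] is True for marked pixels.
    """
    lum = [[luminance(p) for p in row] for row in image]
    # Sort all luminance values from brightest to darkest.
    flat = sorted((v for row in lum for v in row), reverse=True)
    keep = max(1, int(len(flat) * fraction))
    # The threshold is the luminance of the last pixel we want to keep.
    threshold = flat[keep - 1]
    mask = [[v >= threshold for v in row] for row in lum]
    return mask, threshold


def brightest_pixel(image):
    """Return (luminance, x, y) of the single brightest pixel."""
    best = None
    for y, row in enumerate(image):
        for x, p in enumerate(row):
            v = luminance(p)
            if best is None or v > best[0]:
                best = (v, x, y)
    return best


if __name__ == "__main__":
    # Tiny hypothetical HDR image: one very bright pixel among dim ones.
    hdr = [
        [(0.1, 0.1, 0.1), (0.2, 0.2, 0.2)],
        [(50.0, 40.0, 30.0), (0.3, 0.3, 0.3)],
    ]
    mask, threshold = brightest_mask(hdr, fraction=0.25)
    print(mask, threshold)
    print(brightest_pixel(hdr))
```

For a real HDRI you would load the pixel data with an image library and would probably blur the image first, so that a single hot pixel does not dominate the result.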

Here is an OpenCV example:

(more…)