LATEST POSTS

-

OpenAI – Sora 2 is here, more physically accurate, realistic, and more controllable than prior systems

https://openai.com/index/sora-2/

It also features synchronized dialogue and sound effects. Create with it in the new Sora app.

-

DokuWiki and TiddlyWiki – Free Self-Hosted Wikis

https://www.dokuwiki.org/dokuwiki

DokuWiki (PHP, flat-file)

- Maturity & stability: Actively developed since 2004, very stable.

- Storage: All content stored in plain text files (no DB at all).

- Portability: Just copy the folder; backups are trivial.

- Features: User management, access control lists, plugins, templates.

- Syntax: Uses its own lightweight markup, which is easy to learn.

- Use case: Great for documentation, personal wikis, code snippets, knowledge bases.

In philosophy, this is probably the closest modern equivalent to a self-hosted Wikie.
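Because DokuWiki keeps everything in plain files, a backup really can be as simple as archiving the install folder. Below is a minimal Python sketch, assuming the whole wiki sits in one directory (a hypothetical `/var/www/dokuwiki`) and that the backup target lives outside it. `backup_dokuwiki` is an illustrative helper, not part of DokuWiki.

```python
import shutil
import time
from pathlib import Path


def backup_dokuwiki(install_dir: str, dest_dir: str) -> Path:
    """Zip an entire DokuWiki folder into a timestamped archive.

    Hypothetical helper: since DokuWiki uses no database, archiving the
    install directory captures the wiki's pages and settings together.
    dest_dir should be outside install_dir so the archive never includes itself.
    """
    src = Path(install_dir).resolve()
    out = Path(dest_dir).resolve()
    out.mkdir(parents=True, exist_ok=True)

    # e.g. "dokuwiki-20250101-120000"; make_archive appends ".zip" itself
    stamp = time.strftime("%Y%m%d-%H%M%S")
    base_name = out / f"{src.name}-{stamp}"

    # root_dir/base_dir keep archive paths relative, like "dokuwiki/data/pages/..."
    archive = shutil.make_archive(
        str(base_name), "zip", root_dir=str(src.parent), base_dir=src.name
    )
    return Path(archive)
```

For example, `backup_dokuwiki("/var/www/dokuwiki", "/srv/backups")` would write a file named like `dokuwiki-<timestamp>.zip`. Restoring is just unzipping it back into place.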

TiddlyWiki (Single-file wiki in HTML/JS)

- Maturity: First released in 2004, still maintained.

- Storage: Everything in a single self-contained HTML file (JavaScript inside).

- Portability: Just copy one file, runs in any browser.

- Hosting: Can be self-hosted on any web server, or even run locally.

- Features: Plugin ecosystem, themes, good for personal notes and code snippets.

- Use case: Perfect for highly portable personal wikis, though less suited for multi-user environments.

This is the most portable option — literally one file to back up.

-

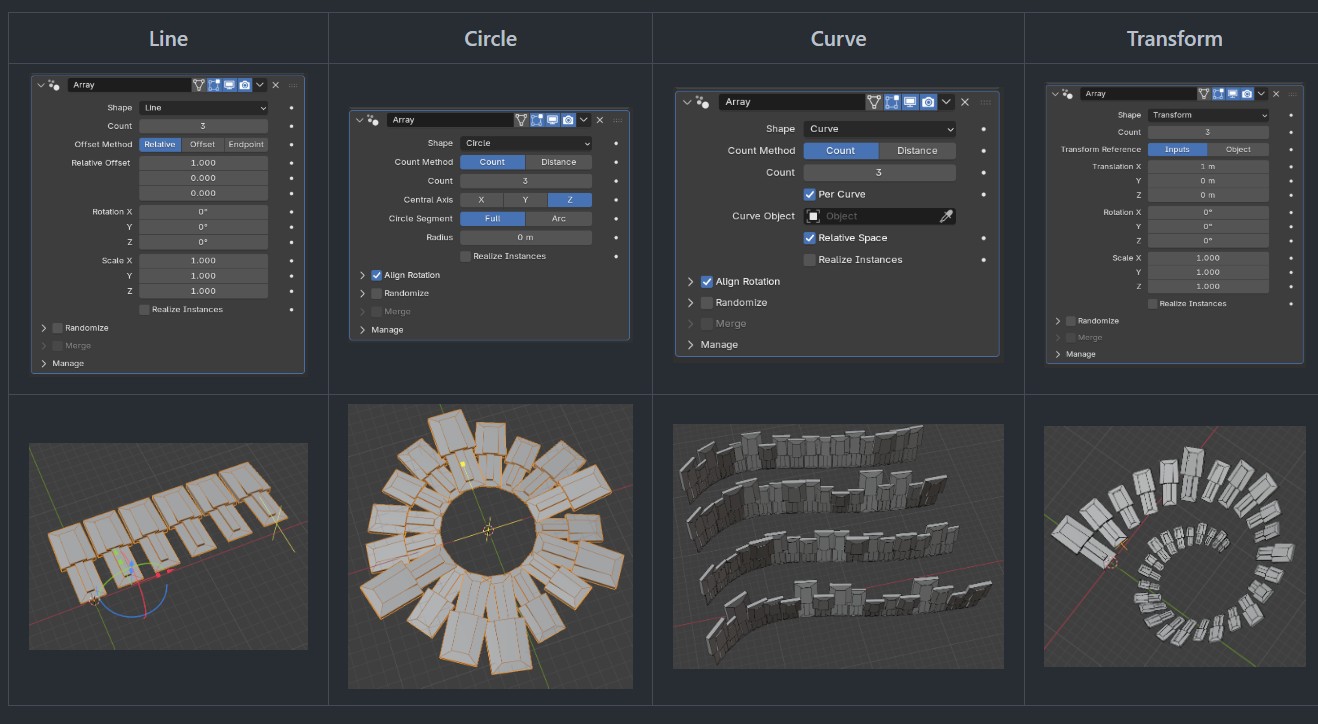

Geometry Nodes – New essential assets for Blender 5.0

https://projects.blender.org/blender/blender/pulls/145645

High-level tools that make the power of Geometry Nodes accessible to any user familiar with modifiers.

Unlike the built-in Geometry Nodes, these assets focus on combining many options and features into one convenient package. Each one can be extended by editing its nodes or integrated into a larger node setup, but it is designed to be used without any node editing.

-

Ian Curtis – AI world models generated these 3D environments from a single image in minutes, and I turned them into a custom spaceship game level in an afternoon

Here’s how I created it:

Design: I started by generating cohesive concept images in Midjourney, featuring sleek white interiors with yellow accents, to define the overall vibe.

Generate: Using World Labs, I transformed those images into fully explorable and persistent 3D environments in minutes.

Assemble: I cropped out doorways inside the Gaussian splats, then aligned and stitched multiple rooms together using PlayCanvas SuperSplat, creating a connected spaceship layout.

Experience: Just a few hours later, I was walking through a custom interactive game level that started as a simple idea earlier that day.

-

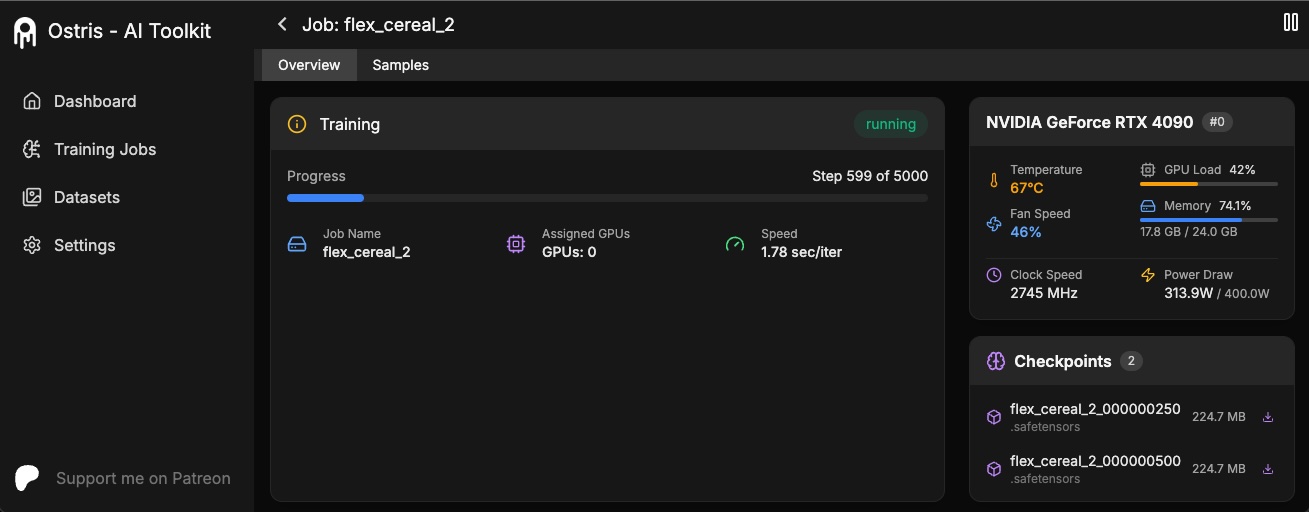

AI Toolkit by Ostris – An all-in-one training suite for diffusion models

https://github.com/ostris/ai-toolkit/tree/main

The AI Toolkit UI is a web interface for the AI Toolkit. It lets you start, stop, and monitor jobs, and train models with a few clicks. You can also set a token for the UI to prevent unauthorized access, so it is mostly safe to run on an exposed server.

-

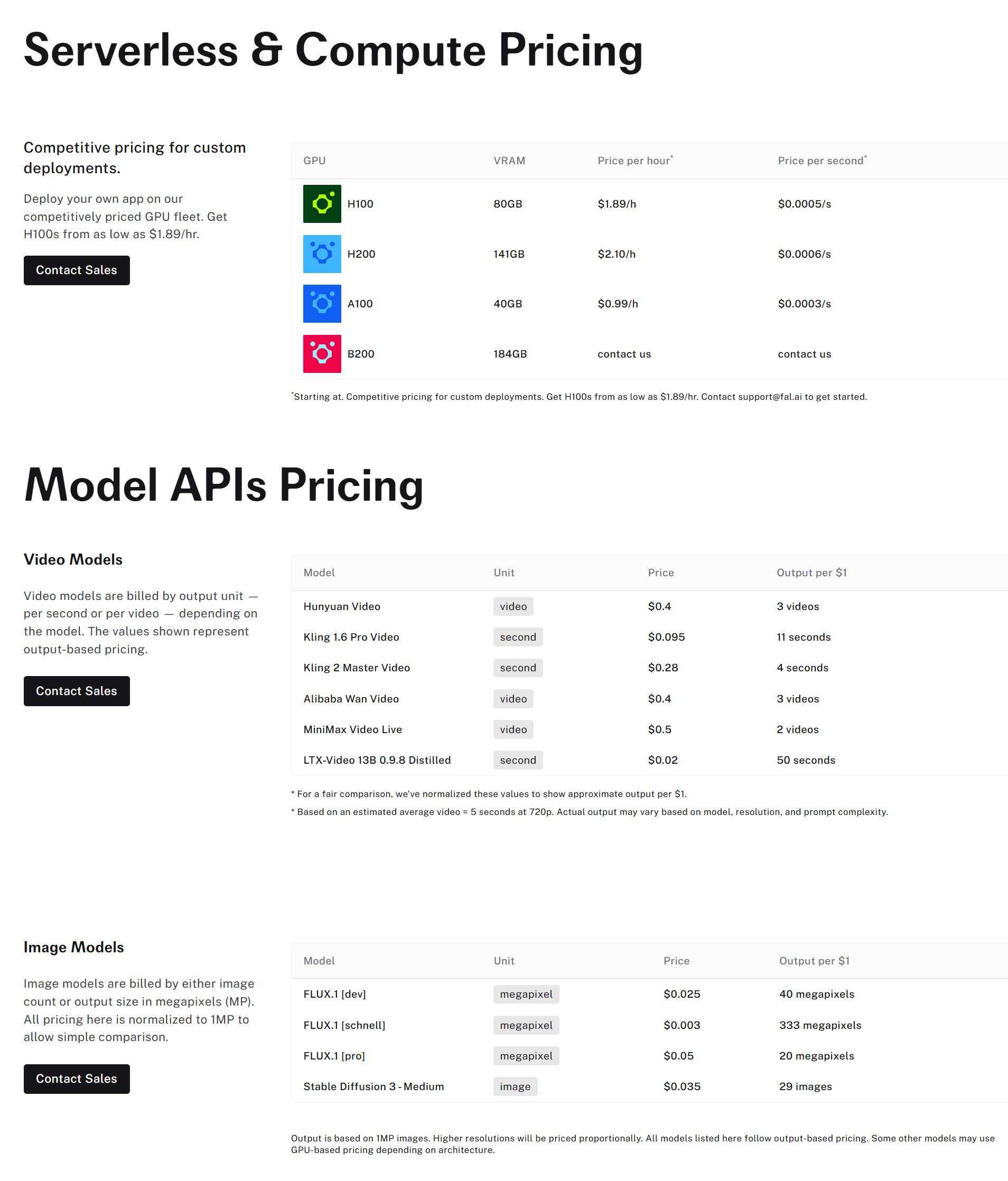

Fal.AI – Generative media platform for developers

Core Capabilities & Differentiators

| Capability | Description / Value |
|---|---|
| Model Gallery | 600+ production-ready models for image, video, audio, and 3D. |
| Serverless / On-demand Compute | You don’t have to set up GPU clusters yourself. It offers serverless GPUs with no cold starts or autoscaler setup. |
| Custom / Private Deployments | Support for bringing your own model weights, private endpoints, and secure model serving. |
| High Throughput & Speed | fal claims its inference engine for diffusion models is “up to 10× faster” and built for scale (100M+ daily inference calls) with “99.99% uptime.” |
| Enterprise / Compliance | SOC 2 compliance, single sign-on, analytics, priority support, and tooling aimed at enterprise deployment and procurement. |
| Flexible Pricing | Options include per-output (serverless) or hourly GPU pricing (for more custom compute). |

Use Cases & Positioning

- Useful for rapid prototyping or productionizing generative media features (e.g. image generation, video, voice).

- Appeals to teams that don’t want to manage MLOps/infra — it abstracts a lot of the “plumbing.”

- Targets both startups and enterprises — they emphasize scale, reliability, and security.

- They also showcase that fal is used by recognized companies in AI, design, and media (testimonials on the site).
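Whether per-output or hourly GPU pricing comes out cheaper mostly depends on sustained volume. Here is a back-of-the-envelope Python sketch. The prices used below are made up for illustration and are not fal’s actual rates, and the model assumes a single hourly GPU can keep up with the workload and never sits idle. Both function names are hypothetical.

```python
def breakeven_outputs_per_hour(price_per_output: float, gpu_price_per_hour: float) -> float:
    """Outputs per hour at which an hourly GPU costs the same as per-output billing."""
    if price_per_output <= 0:
        raise ValueError("price_per_output must be positive")
    return gpu_price_per_hour / price_per_output


def cheaper_option(outputs_per_hour: float, price_per_output: float,
                   gpu_price_per_hour: float) -> str:
    """Return which billing model is cheaper for a steady workload.

    Simplifying assumption: one hourly GPU handles the whole load with no idle time.
    """
    per_output_cost = outputs_per_hour * price_per_output
    if per_output_cost < gpu_price_per_hour:
        return "per-output"
    if per_output_cost > gpu_price_per_hour:
        return "hourly"
    return "either"
```

With a hypothetical $0.05 per output and $2.00 per GPU-hour, the break-even point is 2.00 / 0.05 = 40 outputs per hour. Below that, per-output billing wins. Above it, the hourly GPU does.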

FEATURED POSTS

-

Survivorship Bias: The error that results from systematically focusing on successes while ignoring failures. How a young statistician saved planes during WW2.

A young statistician saved bomber crews’ lives.

His insight (and how it can change yours):

During World War II, the U.S. military wanted to add armor to reinforce specific areas of its planes.

Analysts examined returning bombers, plotted the bullet holes and damage on them (as in the image below), and concluded that adding armor to the tail, body, and wings would improve their odds of survival.

But a young statistician named Abraham Wald noted that this would be a tragic mistake. By only plotting data on the planes that returned, they were systematically omitting the data on a critical, informative subset: The planes that were damaged and unable to return.
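Wald’s point can be reproduced with a toy simulation. This is not Wald’s own analysis. The section names, the assumption that hits land uniformly, and the per-hit loss probabilities are all invented for illustration. Because hits to the hypothetical “engine” section are assumed to down planes far more often, they end up underrepresented on the planes that make it home. Counting holes only on returners therefore makes the most dangerous area look like the safest.

```python
import random
from collections import Counter

SECTIONS = ["engine", "fuselage", "wings", "tail"]
# Invented probability that a single hit to each section brings the plane down.
LOSS_PROB = {"engine": 0.6, "fuselage": 0.1, "wings": 0.1, "tail": 0.1}


def simulate(n_planes: int, hits_per_plane: int, seed: int = 0):
    """Return hit counts for all planes and for returning planes only."""
    rng = random.Random(seed)
    all_hits = Counter()       # every hit, including planes that never came back
    returned_hits = Counter()  # the only data analysts could actually inspect
    for _ in range(n_planes):
        hits = [rng.choice(SECTIONS) for _ in range(hits_per_plane)]
        all_hits.update(hits)
        # The plane returns only if none of its hits downs it.
        survived = all(rng.random() >= LOSS_PROB[h] for h in hits)
        if survived:
            returned_hits.update(hits)
    return all_hits, returned_hits


def share(counter: Counter, section: str) -> float:
    """Fraction of the counted hits that landed on one section."""
    total = sum(counter.values())
    return counter[section] / total if total else 0.0


if __name__ == "__main__":
    all_hits, returned_hits = simulate(n_planes=10_000, hits_per_plane=3)
    for s in SECTIONS:
        print(f"{s:9s} all planes: {share(all_hits, s):.2f}  "
              f"returned only: {share(returned_hits, s):.2f}")
```

With these made-up numbers, each section takes roughly a quarter of all hits. Among returning planes, though, the engine’s share drops to about 13%, because an engine hit is survived with probability 0.4 versus 0.9 elsewhere: 0.25 × 0.4 / (0.25 × 0.4 + 3 × 0.25 × 0.9) ≈ 0.13.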